Yeah. A card which will launch at, say, $600 being 20% faster than the card available now for $1200 is a clear disaster for everyone. Wait.

Plus where else you gonna go? AMD?

Intel Xe of course!

OUCH!!! While my PSU is more than adequate lol, the estimated cost per month hurt my feelings!! $555 per year for this thing!

Exactly, people overestimate the power usage and underestimate the cost. I also run 24/7, and I am rethinking that; it's just not friendly for the environment.

It's also not realistic to draw that much power 24/7. My machine idles at <100W most of the time. It jumps to 400W while gaming maybe. Even at the ridiculous price of US$0.20/kWh that's only $175/year idling.

Yeah, absolutely, it's not running full blast all the time. IMO we should all still try to be more sustainable.
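For anyone who wants to check the idle math, here is a rough sketch. It assumes the machine is on 24/7 at a constant average draw, and it uses the 100W idle, 400W gaming and $0.20/kWh figures quoted in this thread. The function names and the hours-per-day split are made up for illustration, not measured.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, assuming the machine is never switched off


def yearly_cost(avg_watts: float, price_per_kwh: float) -> float:
    """Cost of drawing avg_watts continuously for a year."""
    kwh_per_year = avg_watts / 1000 * HOURS_PER_YEAR
    return kwh_per_year * price_per_kwh


def blended_watts(gaming_hours_per_day: float,
                  gaming_watts: float,
                  idle_watts: float) -> float:
    """Average draw for a day split between gaming and idling (hypothetical split)."""
    idle_hours = 24 - gaming_hours_per_day
    return (gaming_hours_per_day * gaming_watts + idle_hours * idle_watts) / 24


if __name__ == "__main__":
    price = 0.20  # US$ per kWh, the figure used in the post above
    print(f"Idle 24/7:        ${yearly_cost(100, price):.2f}/year")
    print(f"4h gaming a day:  ${yearly_cost(blended_watts(4, 400, 100), price):.2f}/year")
    print(f"Full load 24/7:   ${yearly_cost(400, price):.2f}/year")
```

The idle case works out to 876 kWh a year, which is where the roughly $175 comes from. Your own rate and usage pattern will move the number a lot.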

The really important part is the pricing. If the pricing tiers are kept the same, then a 50%-odd performance increase over the 2080 for the 3080 is great (plus, it would give us 1080Ti owners a viable upgrade). If they decide to increase the prices again, then it might not be so good. Not that I think they're going to pull a Turing and shift the prices to the extent of the 3080 costing the same as a 2080Ti, but I wouldn't be surprised in the slightest if there was a $100-$200 hike.

I fully expect Nvidia to keep the same prices from the 20 series.

Does the rumored 300+ wattage/tdp mean these cards are gonna run stupid hot? My 180w GTX 1080 already increases my room temperature a few degrees when under heavy load so I'm really worried the 3000 series is gonna make my room unbearable :/

Glad I opted for a 750w Platinum PSU on my build. It felt like overkill at the time, but who knows.

Should probably wait for the partner cards with extra cooling to come out then. That's what I'll probably do.

The amount of heat generated will be the same no matter what kind of cooler is used. A better cooler just moves more heat from the card into the ambient environment.

That's not how that works. The card will still be using the same amount of power. A better cooler will only make the card run cooler.

Same here but a 750W Gold. I went with it specifically for this reason: if wattage requirements go up I don't want to have to think about it.

Another 750W Gold here, only because it was available. For 13 years I've had 600W-650W for my computers.

I bought my Seasonic 750W platinum just because it was the first PSU that I found that was available at MSRP and was highly rated. There were some 500W options and non-modular options but I didn't want to deal with the wires. The PSU shortage right now is very real. Also it came with a 10-year warranty so I figured that the extra cost would be made up if I use it in at least one more build in the future.

Even in that situation it's not drawing 250W when playing a game.

Absolutely correct, I was just correcting the statement that it's not happening *at all*. If you have an HEDT CPU like a Threadripper gen 3 part, then yeah, 250W will be the norm... during multithreaded productivity loads. Gaming power draw on basically all of these CPUs is like 50-90W; it's not even worth caring about in this context.

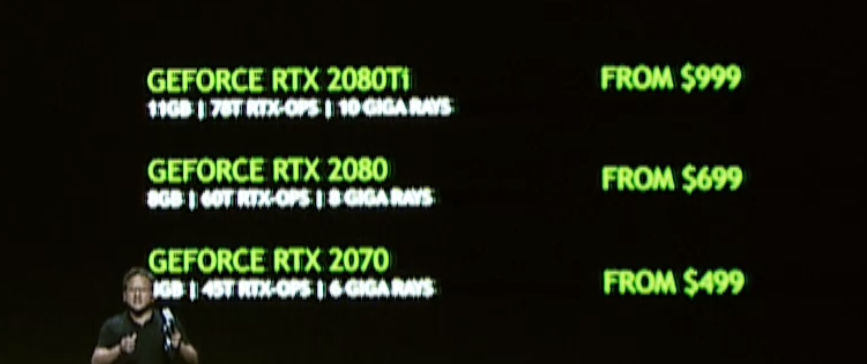

If $699 that's not bad. Pretty much the same core count as the 2080 Ti. So basically getting a much improved 2080 Ti with better RT and faster memory (although losing 1GB), for quite a bit less money.

LOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOOL in your dreams.

We'll see if they hit that price point. Hope the 3070 can hit $500 as well.

???

Mid range cards at launch have not been around 500 for a few gens now sadly :(

No retailer had them for that price.

2070 was 599, I can't remember the super price though

No way they do a refresh on an entirely new node, that's nonsense.

Would be funny a couple of years ago but with Navi 21 this may well be an option finally.

Some people's expectations are delusional. Even the 1080 was less than 30% faster than the 980 Ti on a node shrink. If the 3080 is slightly above 20% over the 2080 Ti without a price increase from last generation, it seems fine.

Yep, exactly. The real question is going to be the pricing. I'd prefer they lower the pricing across the board, but if not they at least need to not raise the price. With Turing they had an excuse since they had monster size chips due to not having a node shrink.

I hate that these insane graphics card prices have been normalized. I don't think the price of the 2080 Ti was fine to begin with.

That's fair. I was really more focused on the performance gains between generations. As long as it's close to the gains of Pascal, I'd mark that down as a success. It would be nice for pricing to come down, especially with Nvidia expressing some disappointment with initial RTX sales.

Which is also why people expecting the doubling of 2080 price or something are weird.

The problem for me is not the PSU failing, or being on the edge, which would be unusual cases anyway.

It would be the heat and thus noise generated from a high power card, as is rumoured, paired with a very hot and power hungry CPU (9900K or 10900K). I am past dealing with a noisy system and I'm past dealing with my room heating up after a gaming session. I thought this was all in the past!

There is a certain joy from gaming on a cool and quiet system.

AMD it's always "wait for x..." where x has no real competition against Nvidia's top end.

It is not always like that. Before the 900 series, AMD was a real competitor in this market. The 5700 XT showed they can compete again.

Also about zero chance of any "MCM" or "chiplet" GPUs reaching consumers any time soon from any IHV. Hopper is likely a 100% data center / AI / HPC oriented design. No clear info on if it's even a separate architecture or just Ampere chip(let)s in a multichip configuration.

I'd give about 1% of the first happening with Navi 2x vs Ampere and the second is basically guaranteed.

I definitely agree that doubling the price would be suicide. But beyond that, I have zero expectations on pricing. You can't tell me Jensen Huang, and his niece, Lisa Su, haven't had conversations about this. The GPU market is a niche and hardcore market. Price wars don't lead to growing the pie. It may result in market share moving a couple percentage points here or there, but ultimately it destroys the margins for both without bringing in many new customers. I expect Nvidia will charge the most it thinks its customers are willing to pay, and AMD will undercut that pricing slightly.

Oh, we definitely shouldn't expect either of the two companies to gift their new GPUs for peanuts. But considering that NV's top end will launch with competition arriving a couple of months after that, expecting them to increase the prices is equally unrealistic. This upcoming gen will be a big fight between the two, which means that anything above $1000 is likely out, if only because either would be forced to introduce a much cheaper card with essentially the same performance (think 1080Ti vs GTX Titan and such). With NV opting for a supposedly cheaper Samsung 8nm process, they seem to be fully aware of what's coming and are trying to protect their margins this way, which means that the prices won't go up and have a good chance of actually coming down from where they were with Turing and RDNA1.

Navi 21 is top end though and it should launch this year so there's that.

I guess it's going to take a few additional years before GPU MCM or GPU chiplets become useful for games and easy for developers to code for.

Why're you all flexing your 750W PSUs? Was some news revealed that would lead you to believe you'd need gigantic PSUs? I got a 650W and I was always told it's oversized for single GPU rigs.

Depending on which card you go with in the Ampere lineup, your PSU might need to supply your GPU alone with between 300-350W, more if you overclock it.
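To put rough numbers on that, here is a back-of-the-envelope sketch. It uses the rumoured 300-350W GPU figure and the 50-90W gaming / 250W HEDT CPU figures mentioned earlier in the thread. The 75W allowance for the rest of the system is purely an assumption, the function name is made up, and short transient spikes are ignored, so treat it as a sanity check rather than a sizing guide.

```python
def psu_load_fraction(psu_watts: float,
                      gpu_watts: float,
                      cpu_watts: float,
                      other_watts: float = 75.0) -> float:
    """Fraction of the PSU's rated output used by a sustained load.

    other_watts is an assumed allowance for motherboard, RAM, drives and fans.
    """
    return (gpu_watts + cpu_watts + other_watts) / psu_watts


if __name__ == "__main__":
    # (label, GPU watts, CPU watts) using figures quoted in the thread
    scenarios = [
        ("gaming, mainstream CPU", 350, 90),
        ("all-core load, HEDT CPU", 350, 250),
    ]
    for psu in (650, 750):
        for label, gpu, cpu in scenarios:
            load = psu_load_fraction(psu, gpu, cpu)
            print(f"{psu}W PSU, {label}: {load:.0%} of rated output")
```

Under these assumptions a 650W unit still covers a 350W card for gaming, and it only runs out of room if the GPU and a 250W CPU are both pegged at the same time.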

It's... going to be more than some years I think. Chiplet design for GPUs will prompt a total rebuild of how games are being programmed and the likely dumping of rasterization h/w in favor of something more natively parallel. Won't happen at the beginning of a new console gen which is for all intents and purposes very old school in its GPU approach. By the end of this gen though, or at the start of the next one, when we'll be testing 3nm processes which are very likely to be the end of scaling on silicon - well, maybe. But that's 2025+ at best I think.

There will likely be some HPC / DL testing for chiplet designs though, we've already seen the research on that from several IHVs and it's very probable that we'll see some products designated for pure GPU compute markets much sooner than anything like this will come to gaming.

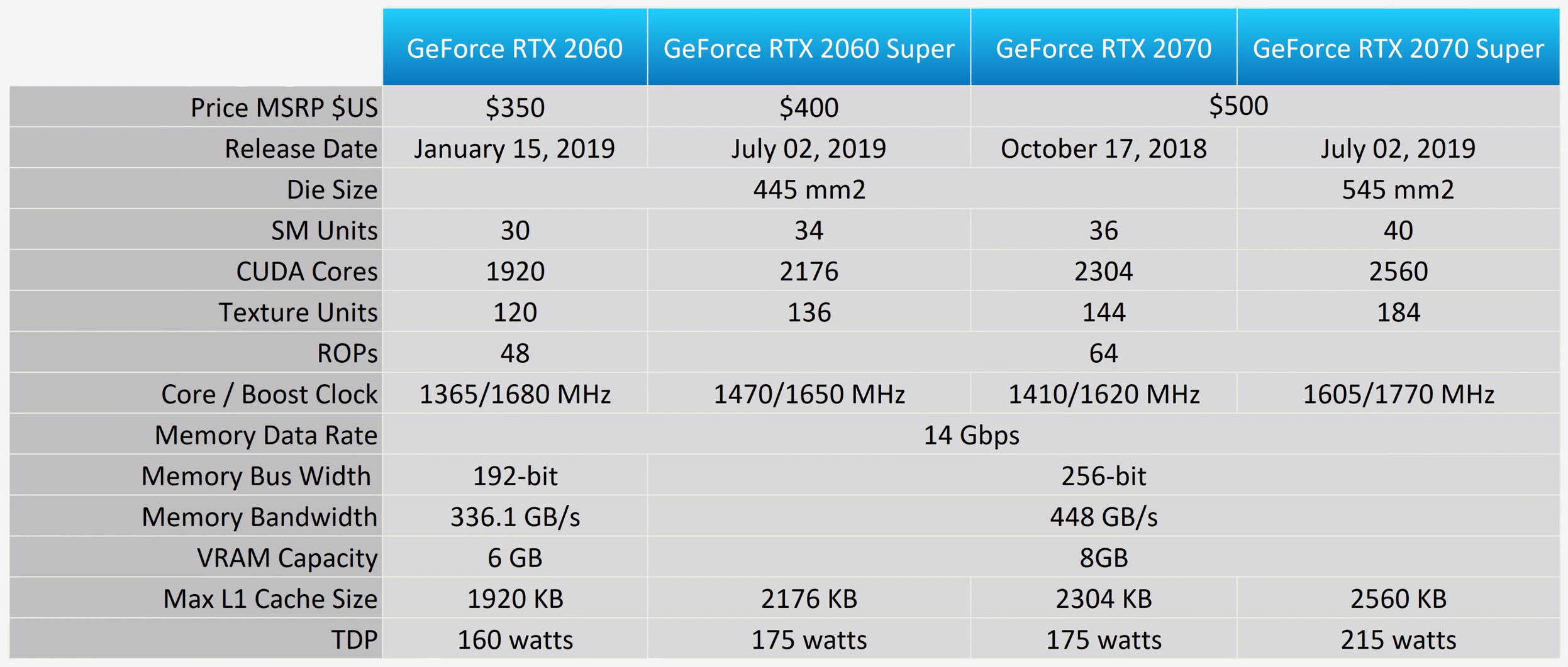

ugh, am I gonna be good next gen with a 2070 super or did i blow my money too early?

Depends on the resolution you game at. I have a 2070 Super as well and, at 1440p, certain titles from 2016 and 2018 are kind of tough. Barely getting 60fps in Assassin's Creed Odyssey and going through areas in Deus Ex: Mankind Divided where the fps drops to high 40s/low 50s is kind of wild for a $560 2018/9 GPU imo.

You should be fine but I would have recommended waiting to see what NVIDIA and AMD have to offer in a few months' time, if only to see how much better ray-tracing performance is on either company's cards.

I mean I got it like a year ago (and then the first one died and I had to get a replacement, naturally) so I do think I did OK with the info I had at the time, but I guess I'm getting another one in like a year and a half, which blows.