
Rumored specs for NVIDIA's upcoming "Ampere" RTX 3000 series graphics cards (RTX 3060, RTX 3070, RTX 3080 and RTX 3090)

- Thread starter Deleted member 3812


- Rumor


- Status

- Not open for further replies.

Threadmarks


Recent threadmarks

- RTX 3000 series power consumption, PCB shape rumor
- Picture showing two RTX 3080s has leaked
- UPDATE: NVIDIA event announced for September 1st
- RTX 3090 Specs Rumor: "20% increase in cores over the GeForce RTX 2080 Ti", up to 24 GB memory with faster GDDR6X pin speeds, 384-bit bus, nearly 1 TB/s bandwidth
- RTX 30xx series power cable: "It is recommended to use a power supply rated 850W or higher with this cable"
- 3090 Phoenix GS product images leak
- REMINDER: Tomorrow, September 1st is the NVIDIA event that is expected to announce the RTX 3000 series graphics cards

Turing is the architecture that pioneered mesh shaders, sampler feedback, DXR acceleration and variable rate shading, all the DX12 Ultimate features that are important for next-gen gaming, as well as boosting machine learning performance considerably. It's far from a bad, overpriced architecture if you can look beyond the raw performance in current-gen titles, and it might last longer than previous architectures because of that advanced feature set.

The value of Turing will be clear once more games use machine learning and DX12 Ultimate features.

I'd argue the only architecture that is overpriced and bad right now is RDNA1, because it lacks the feature set and machine learning acceleration that are so important for next-generation games. Thankfully, it's soon to be replaced by RDNA2.

Yes, and Turing evidently pulled further ahead in DX12-heavy games like RDR2 and Wolfenstein: Youngblood.

DLSS 2.0 and beyond will also be hugely important, allowing lower-end Turing cards to run intensive games decently at "high" resolutions in the future. Some of the results they shared with a 2060 were mind-blowing. It was still a bit expensive for what it was at launch, but its value has improved.

The point obviously is that two years is a very long time to upgrade.

Then what was the point of you even bothering to comment about two years being a very long time for an upgrade?

It doesn't mean that everyone is upgrading every two years nor that there's a need to do it every two years.

So the actual question is what is your point exactly?

It's great to have, but it's barely something you can bank on.

DLSS 2.0 and beyond will also be hugely important, allowing lower-end Turing cards to run intensive games decently at "high" resolutions in the future. Some of the results they shared with a 2060 were mind-blowing. It was still a bit expensive for what it was at launch, but its value has improved.

The number of games that support it is infinitesimally small compared to the number of releases at large.

They have said the goal for DLSS is to not require whitelisting and to be easy to implement (not the case with 2.0, sadly), and given the big improvements in DLSS from 1.0 to the current version, I can see that becoming a reality for games at some point in the future.

It's great to have, but it's barely something you can bank on.

The number of games that support it is infinitesimally small compared to the number of releases at large.

The 2.0 tech was only introduced in March, I think, so games releasing soon after didn't have time to implement it.

It's great to have, but it's barely something you can bank on.

The number of games that support it is infinitesimally small compared to the number of releases at large.

We should give it some time before judging.

@9:15

He says that, e.g., the 3080 and 3080 Ti (or whatever it's called) use the same chip but with a different memory interface and VRAM.

That means the A and non-A chips are replaced by simply naming them differently: the A chip would be the 3080 Ti and the non-A chip the 3080. The biggest card would then be the 3090.

Naming the biggest model the 3090 and selling a 3080 Ti that isn't the biggest anymore at a lower price would make sense if they want to sell it.

The 2080 Ti is synonymous with the strongest gaming GPU (Titan excluded). With Ampere they can make another xx80 Ti, and many would associate it with the 2080 Ti.

If I understand it correctly, then that means a 3080 Ti is a 3080 Super, in a sense?

So that's how they are going to make a 3080 cost $500, ahhhhhhhhh

It's like the 3070/80/80 Ti are being rebranded as the 80/80 Ti/90.

So now a 3070 is a 3080, which lets Nvidia start at around $699.

Lowering prices indeed, lol.

I wouldn't call it a rebrand. I mean, there will most likely still be 70/60/50 cards in addition to that. Maybe you could consider it a shift/rebrand when comparing the 80 Ti with the 90, but the rest seems to stay the same as in previous generations.

It's like the 3070/80/80 Ti are being rebranded as the 80/80 Ti/90.

So now a 3070 is a 3080, which lets Nvidia start at around $699.

The point obviously is that two years is a very long time to upgrade.

It doesn't mean that everyone is upgrading every two years nor that there's a need to do it every two years.

So the actual question is what is your point exactly?

You seem to be talking in circles now. You were saying "everyone" would be fine with Nothing's comment about upgrading every two years. What exactly were you even talking about then, that everyone would be happy upgrading every two years, or that everyone would be fine with someone else upgrading every two years? If someone doesn't need to upgrade, then yet again two years is entirely relative. I also don't understand why you seem to want to hardcore defend the 20 series as not being the misstep that so many others know it was.

Did you buy two 2080 Tis or something? The 20 series was nowhere near the level of improvement previous Nvidia generations delivered without the massive inflation of cost, which was obviously due to it being the first iteration of their ray tracing tech. For "everyone being fine" with that, and with the 2080 Ti being fine for next gen, there's sure a lot of complaining from those who actually bought one.


He wanted something brand new to last him for several years so he wouldn't have to think about it again. It was his first time building a PC. I tried to talk him into getting a new card for around $200 as a stopgap solution, because the rasterization improvement with RTX 2000 simply wasn't there. But while he was strongly considering a regular 2070 for $500+, it seemed to make more sense to him to go all-in and step up to the 2080 instead.

Did you happen to look into the used market for a 1080 Ti before buying that? I know it was slim pickings after 2018, though. The 2080 SC is obviously a little better at rasterization and had the added benefit of ray tracing (and now the new image sharpening and integer scaling features that are only on the RTX cards), but $840 is still crazy for what you're getting. In November 2018 I got a really good used EVGA 1080 Ti for $500, and it's been great since. I chose to go that route since I already had a 1080 in one of my PCs and the normal 2080 was an abysmally bad upgrade for the price. The 2080 Ti to me was just a stop-gap until the real next-gen cards actually hit, which appears to be Ampere.

Even though the 2080 Ti was way overpriced, I won't hesitate to pick up the Ampere version for up to $1,200 and it should last at least five years in my main gaming PC, and a few years more when that becomes my secondary.

Yeah, I did the exact same thing as you, actually. I surveyed the market for quite a while, and in October 2018 (lol) purchased a lightly used EVGA GTX 1080 FTW3 for only $285 (after an eBay coupon). It turned out to be an incredible investment, and the card is still running well today at 1440p. I couldn't justify the cost of an RTX card, and in the meantime all I've had to do is put off playing Control and Metro: Exodus. I'm looking toward pre-ordering an EVGA RTX 3070 XC Ultra or equivalent for $499 in August, if everything works out as planned. But if Nvidia wants to gouge us again, that would be their mistake. People will just keep waiting, keep buying used, buy AMD, or buy consoles. But I don't think that's going to happen.

It's shaping up to be a great time to buy a new card this fall.

I bought a 2080, which cost the same as a 1080 Ti while being both faster and more future-proof. So you can preach whatever you want, really; the simple fact is that Turing never was a bad investment, and it certainly isn't now. Which is the point where this argument started.

The 20 series was nowhere near the level of improvement previous Nvidia generations delivered without the massive inflation of cost

I still only see Nvidia-promoted games implementing this, and there are not a lot of Nvidia-promoted games.

They have said the goal for DLSS is to not require whitelisting and to be easy to implement (not the case with 2.0, sadly), and given the big improvements in DLSS from 1.0 to the current version, I can see that becoming a reality for games at some point in the future.

I don't think they will. This time Nvidia has to compete with AMD's big Navi plus the new consoles. Nvidia had a stranglehold on the market previously but this cycle they need to be much more competitive. People have choices now. They also aren't going to freely concede a good portion of the market share to AMD.

I bought a 2080, which cost the same as a 1080 Ti while being both faster and more future-proof. So you can preach whatever you want, really; the simple fact is that Turing never was a bad investment, and it certainly isn't now. Which is the point where this argument started.

More future proof at what, the hope that more games implement DLSS 2.0? The regular rasterization of the 2080 wasn't even equal to a 1080 Ti at launch in many games, and it took almost a year for it to actually have an insignificant lead in the majority of games. Do you think future ray traced games are somehow going to become more optimized and that the 2080 won't struggle to even have it above a medium setting and 1080p? I guess if you're fine staying at a locked 30fps, or don't mind constant drops into the mid 40s and 1080p it's great.

Turing was absolutely a bad investment, even more so if the current benchmarks aren't even of the flagship card. Even a 2080 Ti couldn't do a locked 60fps on a mix of Very High at 4K with more demanding games, and you also had to play at 1080p to get decent ray tracing performance. Like what the fuck? It's a card where you have to choose between a higher resolution and graphical options, or ray tracing, but can't have both without DLSS 2.0 being implemented.

If all someone cared about was very high frame rates, regardless of the resolution, then maybe that 25% advantage of the 2080 Ti over the 1080 Ti (or 2080/2080S) would be considered a good value for some, but that's not future proofing, because you're buying it for the current frame rate advantage it could give. You'll still be stuck with a card that makes you choose ray tracing over resolution and graphics though, and that's the flagship.

Ampere looks like it's going to be closer to the jump Turing should have been for the cost. Actually more, but still closer than what Turing offered.

I don't think they will. This time Nvidia has to compete with AMD's big Navi plus the new consoles. Nvidia had a stranglehold on the market previously but this cycle they need to be much more competitive. People have choices now. They also aren't going to freely concede a good portion of the market share to AMD.

Not only that, Turing also sold pretty badly compared to Pascal.

We've reached the maximum of what folks are willing to pay for a GPU, and with another price increase even more would nope out.

As a 1080 Ti owner, I just don't want to have to spend over $1k for a relatively small upgrade. I've bought the Ti variant 3 gens in a row, but the 2080 Ti was just too much for me after the previous 3 were like $700 and not $1200 (without even factoring in the small performance gain).

Same, which is why I waited for the 3080 Ti/3090. With the 24% upgrade we saw from the 1080 Ti to the 2080 Ti and the 30-50% upgrade we will see from the 2080 Ti to the 3090... I'm super excited.

As a 1080 Ti owner, I just don't want to have to spend over $1k for a relatively small upgrade. I've bought the Ti variant 3 gens in a row, but the 2080 Ti was just too much for me after the previous 3 were like $700 and not $1200 (without even factoring in the small performance gain).

Especially considering the global economy. Now is not the time to push prices higher.

Not only that, Turing also sold pretty badly compared to Pascal.

We've reached the maximum of what folks are willing to pay for a GPU, and with another price increase even more would nope out.

You may even be able to hang onto it through the 3000 series, depending on what games you play and at what resolutions.

As a 1080 Ti owner, I just don't want to have to spend over $1k for a relatively small upgrade. I've bought the Ti variant 3 gens in a row, but the 2080 Ti was just too much for me after the previous 3 were like $700 and not $1200 (without even factoring in the small performance gain).

It's already bothering me that I've had it for nearly 4 years; no way I can hang onto it past the end of this year.

You may even be able to hang onto it through the 3000 series, depending on what games you play and at what resolutions.

Yeah, it's going to be a lonnng two months until they are announced in August, lol.

Graphics card marketing is so labyrinthine.

Really just want them to announce already, geeeez.

"Turing certainly isn't a bad investment now." ROFL. Yeah, never mind that the PS5/XBSX are putting GPU + CPU combos in their machines that will come close to matching the performance of a 2080 Super with ray tracing capabilities, PLUS modern Zen 2 processing (cut down), at a cost of around $200-250.

I bought a 2080, which cost the same as a 1080 Ti while being both faster and more future-proof. So you can preach whatever you want, really; the simple fact is that Turing never was a bad investment, and it certainly isn't now. Which is the point where this argument started.

At everything, when compared to all other options.

I'm still waiting on you providing an example of a better investment than Turing during the last two years.

Cool, yeah, you do that. *thumbsup*

I'm still waiting on you providing an example of a better investment than Turing during the last two years.

This revisionist history on first-gen Turing has to stop. Now.

More future proof at what, the hope that more games implement DLSS 2.0? The regular rasterization of the 2080 wasn't even equal to a 1080 Ti at launch in many games, and it took almost a year for it to actually have an insignificant lead in the majority of games.

TechPowerUp 2080 FE review: 20+ games tested.

Please go through each game's graphs, and count how many in which the 1080 Ti has the advantage, at any resolution. I'll wait for you to report back on your findings, as I already did so and counted.

Please, no pivots.

DLSS 2.0 Confirmed for Cyberpunk 2077 (we all expected it, but nice to see it confirmed)

Also nice to see they confirmed the type of RT effects. It will use Ray-Traced Diffuse Illumination, Ray-Traced Reflections, Ray-Traced Ambient Occlusion and Ray-Traced Shadows.


Honestly, I think the delay of CP2077 favoured NVIDIA a lot. On the marketing side, they know it's gonna be the benchmark game, so to speak, and while I think I read that the game was complete and they were fixing/optimizing/etc., a November release gives NVIDIA more time and more leeway to say "Look, this is how it works with OUR new line of GPUs :)".

I am very excited to see AMD rocking Nvidia's boat, and I would definitely switch to an AMD GPU if it weren't for rendering issues. In any case, it's gonna be exciting to see how Nvidia uses such games for marketing, considering that in the end consoles are also a big piece of the pie, and that piece belongs to AMD.

But god, I just wanna get a 3070 for 600€ or less and be done with this...

I am very excited to see AMD rocking Nvidia's boat, and I would definitely switch to an AMD GPU if it weren't for rendering issues. In any case, it's gonna be exciting to see how Nvidia uses such games for marketing, considering that in the end consoles are also a big piece of the pie, and that piece belongs to AMD.

But god, I just wanna get a 3070 for 600€ or less and be done with this...

This revisionist history on first-gen Turing has to stop. Now.

TechPowerUp 2080 FE review: 20+ games tested.

Please go through each game's graphs, and count how many in which the 1080 Ti has the advantage, at any resolution. I'll wait for you to report back on your findings, as I already did so and counted.

Please, no pivots.

Yeah, the 1080 Ti was never faster than (or even as fast as) the 2080 on average, and the gap is widening in many new games.

But there are still many who believe that to this day.

It doesn't help when even tech channels like GamersNexus say dumb stuff like "we get it Nvidia, you can make a 1080 Ti" in their 2080 Super review, even though the Super is 15% faster...

I get that it wasn't the most exciting product but come on...

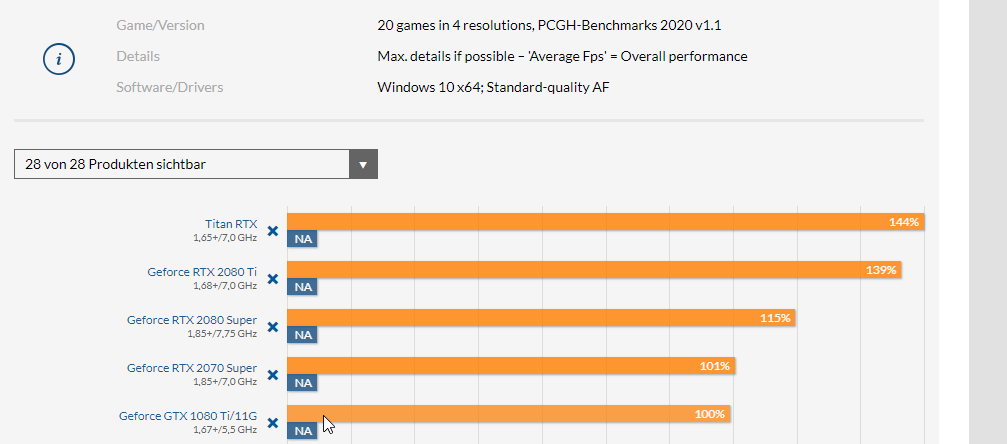

Graphics card ranking 2024: 31 GPUs benchmarked

Graphics card ranking 2024 with Nvidia, AMD and Intel graphics cards: a benchmark overview of all the important graphics chips from Nvidia, AMD and Intel.

Please stop making stuff up. It's really annoying to argue with someone who just makes stuff up to strengthen their argument. It doesn't help that it takes 5 seconds of Google to prove you wrong.

More future proof at what, the hope that more games implement DLSS 2.0? The regular rasterization of the 2080 wasn't even equal to a 1080 Ti at launch in many games, and it took almost a year for it to actually have an insignificant lead in the majority of games. Do you think future ray traced games are somehow going to become more optimized and that the 2080 won't struggle to even have it above a medium setting and 1080p? I guess if you're fine staying at a locked 30fps, or don't mind constant drops into the mid 40s and 1080p it's great.

Turing was absolutely a bad investment, even more so if the current benchmarks aren't even of the flagship card. Even a 2080 Ti couldn't do a locked 60fps on a mix of Very High at 4K with more demanding games, and you also had to play at 1080p to get decent ray tracing performance. Like what the fuck? It's a card where you have to choose between a higher resolution and graphical options, or ray tracing, but can't have both without DLSS 2.0 being implemented.

If all someone cared about was very high frame rates, regardless of the resolution, then maybe that 25% advantage of the 2080 Ti over the 1080 Ti (or 2080/2080S) would be considered a good value for some, but that's not future proofing, because you're buying it for the current frame rate advantage it could give. You'll still be stuck with a card that makes you choose ray tracing over resolution and graphics though, and that's the flagship.

Ampere looks like it's going to be closer to the jump Turing should have been for the cost. Actually more, but still closer than what Turing offered.

Welp, I sold it for $980. Not bad, since I paid $1070 for it 16 months ago. Combined with what I've saved these last few months, I'll be going all in on whatever the Ti equivalent happens to be. Might even go for a fancy model this time.

The possible memory size and minor performance edge in most current games could be offset or go completely the other way, as Ampere cards like the 3070 are rumored to have significantly improved ray tracing capability in games that use it, and more games probably will as the next generation gets rolling. This is a terrible time to buy a 2080 Ti, or any Turing card really.

IMO, buying a 2080 Ti for more than the price of a 3070 is probably going to be about as good a decision as buying a 780 Ti when the 970 was about to come out, or after it did. It's going to be interesting to see how aggressive clearance sales get.

If you can get that much, I would absolutely go for it; it would go a long way toward a 3080 Ti, or probably cover the cost of a 3080 with money left over.

This revisionist history on first-gen Turing has to stop. Now.

TechPowerUp 2080 FE review: 20+ games tested.

Please go through each game's graphs, and count how many in which the 1080 Ti has the advantage, at any resolution. I'll wait for you to report back on your findings, as I already did so and counted.

Please, no pivots.

Absolutely not pivoting, and I'm not just pulling shit out of thin air. The main reason I decided to not get a new 2080 over a used 1080 Ti was precisely because the 2080 wasn't even matching the 1080 Ti in some games in the early benchmarks I was previewing, and when it was beating the 1080 Ti, it was always by under 5fps. What I was seeing was likely a highly overclocked 1080 Ti (without knowing it was overclocked) vs a stock 2080 FE, which isn't a fair comparison and might have intentionally been misleading to make the 2080 look worse than it actually was.

Graphs like this are what I was looking at, although not this one in particular:

NVIDIA RTX 2080 Founders Edition Review & Benchmarks: Overclocking, FPS, Thermals, Noise | GamersNexus

It’s more “RTX OFF” than “RTX ON,” at the moment. The sum of games that include RTX-ready features on launch is 0. The number of tech demos is growing by the hour – the final hours – but tech demos don’t count. It’s impressive to see what nVidia is doing in its “Asteroids” mesh shading and...

So I was wrong. /crow

That's the level of discussion I've come to expect from people like you.

You chose a used 1080 Ti because it was dirt cheap at the end of the GPU mining boom back in 2018. There were no other reasons, don't kid yourself. Newsflash: there is no mining boom now, and you won't get a dirt cheap 2080 Ti this time.

The main reason I decided to not get a new 2080 over a used 1080 Ti was precisely because the 2080 wasn't even matching the 1080 Ti

You chose a used 1080 Ti because it was dirt cheap at the end of the GPU mining boom back in 2018. There were no other reasons, don't kid yourself. Newsflash: there is no mining boom now, and you won't get a dirt cheap 2080 Ti this time.

That was absolutely a factor, and ultimately the deciding one in the end because of the performance. Had the performance been better on the 2080 than what it was though, I would have gladly paid the initial premium for it, but I wasn't going to stretch to $1,200 for what the 2080 Ti offered, since I expected a card that could do 4K without going below 60fps. I also don't care about getting a cheap 2080 Ti, and wouldn't even buy one now unless it was at some unrealistically low figure. As I previously said in this thread, I am willing to give up to $1,200 for the equivalent Ampere version though this time.

It's already bothering me that I've had it for nearly 4 years, no way I can hang onto it past the end of this year.

Man, I've had my 970 for longer than that, imagine how I feel!

The discussion is already on this page. Maybe open up your eyes and try to read and understand the things that people post.

That's the level of discussion I've come to expect from people like you.

I've upgraded twice since I had my 970s (980 Ti to 1080 Ti); I can't imagine how nice it'll feel for you to see such a huge jump!

Man, I've had my 970 for longer than that, imagine how I feel!

I've upgraded twice since I had my 970s (980 Ti to 1080 Ti); I can't imagine how nice it'll feel for you to see such a huge jump!

I'm still waiting to replace my old R9 280X; that's a big jump, lol.

This is the first time in 7 years that I can get something better than a 660, a 960 or a 1060, oof.

Man, I've had my 970 for longer than that, imagine how I feel!

Same. I just received my new components (i7 10700, 32GB RAM and a 1TB NVMe SSD), but I'm waiting for the next GeForce to replace my 970. I was motivated to upgrade because I want to run DCS World on my Pimax 5K VR headset.

I really hope the next GeForce cards won't have ridiculous prices. I miss having a high-end GPU for 350€. I'm prepared to spend about 700€ tops, but I'm afraid they will start at 800 or some shit.

Same. I just received my new components (i7 10700, 32GB RAM and a 1TB NVMe SSD), but I'm waiting for the next GeForce to replace my 970. I was motivated to upgrade because I want to run DCS World on my Pimax 5K VR headset.

I really hope the next GeForce cards won't have ridiculous prices. I miss having a high-end GPU for 350€. I'm prepared to spend about 700€ tops, but I'm afraid they will start at 800 or some shit.

What's the performance uplift like? I've been wondering what a cutting-edge rig with an older, weaker graphics card would actually be like.

What's the performance uplift like? I've been wondering what a cutting-edge rig with an older, weaker graphics card would actually be like.

It's better, just not total-solution better. I went from a 4670K to an R3600, and overall the framerate is clearly better. Certainly Windows and everything else is great. But the 970 sometimes gets into full-chop situations where the framerate can tank, and it really struggles at 1440p. And I've mentioned this before, but some games (AC: Odyssey at 1440p, for example) will go past the 3.5GB soft limit on the 970, which further tanks performance...

It's better, just not total-solution better. I went from a 4670K to an R3600, and overall the framerate is clearly better. Certainly Windows and everything else is great. But the 970 sometimes gets into full-chop situations where the framerate can tank, and it really struggles at 1440p. And I've mentioned this before, but some games (AC: Odyssey at 1440p, for example) will go past the 3.5GB soft limit on the 970, which further tanks performance...

Interesting. My current rig (from 2013 outside of the graphics card) is a 970 with an i5-3570K, 8GB of DDR3 and a SanDisk SATA SSD. Last Black Friday, when there was an eBay Plus sale, I considered getting most of what I needed for a new rig (3900X, 32GB of 3600MHz DDR4, an NVMe drive, etc.) and keeping the 970 until I could get a 3080 on sale. I decided the result wouldn't be worth it and I'd be better off waiting a year and going Zen3 instead.

Speaking of Zen3, I was wondering - do you think the larger core counts are going to trickle down to the cheaper CPUs this time? Like, say, a 12-core 4800X when the 3800X was only 8-core?

I went from a 4670K to an 8600K (both with a 1070) and I could really see a jump in framerate, mostly in 1% lows. But yeah, it depends on what you are playing. Competitive games like OW? CPU-bound. Eye-candy games like SotTR? GPU-bound (mostly). In your case, I think a cheap and easy way to get some extra performance without any hassle is to buy another 8 gigs of RAM; more and more games need a lot of RAM.

Interesting. My current rig (from 2013 outside of the graphics card) is a 970 with an i5-3570K, 8GB of DDR3 and a SanDisk SATA SSD. Last Black Friday, when there was an eBay Plus sale, I considered getting most of what I needed for a new rig (3900X, 32GB of 3600MHz DDR4, an NVMe drive, etc.) and keeping the 970 until I could get a 3080 on sale. I decided the result wouldn't be worth it and I'd be better off waiting a year and going Zen3 instead.

Speaking of Zen3, I was wondering - do you think the larger core counts are going to trickle down to the cheaper CPUs this time? Like, say, a 12-core 4800X when the 3800X was only 8-core?

As for Zen3, I don't think there will be any change in the core count. Zen3 is made on N7P, an optimized version of the N7 process currently used for Zen2, so probably higher clocks all around. But the greatest change is in the architecture. I suspect they further improved the Infinity Fabric and latency is lower now.

Zen4 is probably going to be on either N7+, which, while still 7nm, uses a completely different process, or N5, so maybe we'll see more cores then.

DLSS or any other technology that has to be explicitly implemented by the developer will never see widespread adoption unless it's supported by consoles.

My current PC is exactly the same as yours, and I'm also waiting for Ryzen 4000 and Nvidia's 3000. I'm so ready.

Interesting. My current rig (from 2013 outside of the graphics card) is a 970 with an i5-3570K, 8GB of DDR3 and a SanDisk SATA SSD. Last Black Friday, when there was an eBay Plus sale, I considered getting most of what I needed for a new rig (3900X, 32GB of 3600MHz DDR4, an NVMe drive, etc.) and keeping the 970 until I could get a 3080 on sale. I decided the result wouldn't be worth it and I'd be better off waiting a year and going Zen3 instead.

Speaking of Zen3, I was wondering - do you think the larger core counts are going to trickle down to the cheaper CPUs this time? Like, say, a 12-core 4800X when the 3800X was only 8-core?

My current PC is exactly the same as yours and I'm also waiting for Ryzen 4000 and Nvidia's 3000. I'm so ready.

Is the Ryzen 4000 series still coming this year? I heard rumors that it was being pushed back to 2021...
