The Medium - PC system requirements released

- Thread starter Deleted member 11276

- Start date

On the processor side, how's my i7-6700k holding up? I've lost track of CPU advances since I got one.

That's what I'm wondering. I got an i7 6600k, so no idea how that will hold up.

As far as Star Citizen, is the SSD part of the minimum or recommended requirements? Or both?

For this game, it's listed under the minimum requirements, just in parentheses:

steamcommunity.com

Steam :: The Medium :: Official system requirements

Dear players! Take a look at the official system requirements for the PC version of The Medium.

Given the high volume of "SSD?" comments, storage is probably the first line item the console crew jumped on.

It's this generation's coding to the metal.

At least this time there are some tangible specs to cite.

I'd assume that the 1060 and a similarly performing AMD card are going to be the minimum requirement for 1080p going forward in next gen games.

However, at some point in the future, a 2060 could be the standard when next gen is fully utilized, as well as SSDs, maybe even NVMe SSDs. It could be that games are going to be built with Raytracing, NVMe SSDs and the DX12 Ultimate feature set in mind.

That will take a long while though, because it would significantly decrease the PC user base.

I said this before, but if a 1060 is really the minimum, then neither PC nor consoles will be able to do native 4K at 30fps, so I'm really doubtful of the 1060 actually being the minimum here. The 1060 really isn't that much slower than a 2080. I often have a feeling people overestimate the difference between cards. Either that, or people underestimate just how much GPU power native 4K needs.

You mention the 2060 as a minimum, but the difference between a 2080 and a 2060 is rather small. The 2060 is only 30% slower, which is pretty much the difference between 1800p and 2160p.
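As a rough sanity check of the 1800p vs. 2160p comparison, the pixel counts can be worked out directly. This is only a sketch: it assumes 16:9 resolutions (3200x1800 and 3840x2160) and that GPU load scales roughly with pixel count, which is a simplification. The function names are made up for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels rendered per frame at a given resolution."""
    return width * height


def fewer_pixels_pct(low: tuple[int, int], high: tuple[int, int]) -> float:
    """How many percent fewer pixels `low` has compared to `high`."""
    return (1 - pixel_count(*low) / pixel_count(*high)) * 100


# Assumed 16:9 resolutions for "1800p" and "2160p".
res_1800p = (3200, 1800)
res_2160p = (3840, 2160)

print(f"{fewer_pixels_pct(res_1800p, res_2160p):.1f}% fewer pixels at 1800p")
```

Under those assumptions, 1800p comes out at roughly 30% fewer pixels than 2160p, which lines up with the "30% slower" figure in the post.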

On the processor side, how's my i7-6700k holding up? I've lost track of cpu advances since I got one.

I've got the same one. It was pretty great at the time, hopefully it's still good enough for the near future.

Wait. How does that prove it's not demanding? Am I missing something?

It doesn't look good, even by current gen standards.

Heh, my current build (and first gaming PC) that I built in 2016 is the bare minimum spec. I am building a new PC in a few months, though, so I'm not worried.

A 6 core CPU for recommended. I wonder how often this will be the case. I didn't expect console games to use much CPU grunt...

And yet it only runs 30fps on XSX.

What about it specifically doesn't look good? What does current gen standards even mean? Everything is relative, and this isn't a big budget blockbuster AAA release.

If you look closely, the minimum CPU requirement is actually the most interesting part.

How so?

Because they are considerably more demanding as a minimum than most games these days.

Assassin's Creed: Odyssey (minimum)

Star Wars Jedi - Fallen Order Minimum System Requirements

- OS: Windows 7 SP1, Windows 8.1, Windows 10 (64-bit versions only)

- CPU: AMD FX 6300 @ 3.8 GHz, Ryzen 3 1200, Intel Core i5-2400 @ 3.1 GHz.

- GPU: AMD Radeon R9 285 or Nvidia GeForce GTX 660 (2GB VRAM with Shader Model 5.0)

- RAM: 8GB.

- Resolution: 720p.

- Targeted frame-rate: 30fps.

- Video Preset: Low.

Control (minimum)

- OS: Windows 7 64-bit.

- CPU: Intel Core i3-3220 2-Core 3.3 GHz or AMD FX-6100 6-Core 3.3GHz.

- RAM: 8 GB System Memory.

- GPU RAM: 1 GB Video Memory.

- GPU: GeForce GTX 650 or Radeon HD 7750.

- HDD: 55 GB Available Hard Drive Space.

- DX: DirectX 11.

- CPU: Intel Core i5-4690 / AMD FX 4350

- GPU: Nvidia GeForce GTX 780 / AMD Radeon R9 280X

- RAM: 8GB

- OS: Windows 7, 64bit

- DirectX: DX11

Devil May Cry 5 PC system requirements (minimum)

- OS: Windows 7 (64-bit required)

- Processor: Intel Core i5-4660, AMD FX-6300, or better.

- Memory: 8GB RAM.

- Graphics: Nvidia GeForce GTX 760, AMD Radeon R7 260x with 2GB RAM, or better.

- DirectX: Version 11.

- Storage: 35GB available space.

- Additional notes: Controllers recommended

I would love to see Control run on the minimum specs.

Doesn't look that bad to me

Yeah, it still looks great and with a proper CPU, it's even possible to play it at 60 FPS.

With the FX 4300 in the minimum requirements, you should be able to get a stable 30 FPS.

Given the game keeps both environments loaded at once, I can understand why an SSD is recommended but not needed. RAM is going to be more important than loading speeds, in that regard, for what is and isn't feasible.

When I thought it was switching back and forth, I presumed SSD speeds played a key part, streaming in all the new assets and textures to replace the ones loaded, but that doesn't seem to be how the game works. Honestly, I think the game design looks really intriguing with the shifting and the split screen areas etc.

Not the minimum, but I played it on an i5 3450 with an RX 570. It wasn't that bad honestly. Had to turn down settings of course.

It's actually pretty high. I was thinking the minimum would be a little lower and the recommended would be a Ryzen 2600 or 2700X.

How do you come to that conclusion after you have seen the requirements here? The 2080 seems to be roughly on par with an XSX, Raytracing performance included, going by these requirements and various other indicators. Your 2070 should be fine at 1440p with Raytracing in next gen games. And DLSS could give you a huge boost compared to the consoles.

I don't think you can make any claims about raytracing on consoles right now. We have very little idea what raytracing performance is going to look like there. The fact is, this is going to be a first gen AMD solution. Hopefully it's good, but raytracing is an expensive technique and I remain skeptical we're going to see it used extensively unless there are significant other compromises made (in areas like resolution and performance).

I'm pretty curious/skeptical about this too. To suddenly see the CPU requirement explode on next gen games doesn't make a lot of sense to me when it doesn't outwardly look like they're doing something that would be super CPU intensive (Especially for 30fps).

Honestly, I wouldn't be surprised if they figured they may as well put the latest CPUs on the Recommended, because, why not? Maybe I'll be wrong and suddenly games are going to be tremendously more CPU demanding right out of the gate, but that seems pretty unlikely.

Yep, AMD has said shit, and I doubt they will be on par with Nvidia; they are seemingly years behind on GPUs.

Yeah, nothing wrong with tweaking it to run well enough. Hell, I've got a 5700 XT and I still turn off shadows as a reflex.

I don't doubt that the chips will be good and that they are a huge jump over the previous gen, but with new tech that AMD simply hasn't used before, I think it would be shocking if they had super efficient RTX solutions on their first shot.

I still run an ancient i7 870 from almost 10 years ago. Wonder if the game will even boot :)

My man! I'm rocking my i7-2600 like a champ!

I hope we can run Medium.

The minimum CPU requirement is high to people? Have we forgotten how old the i5-6600 is?

This should've been expected. Welcome to a world where our systems aren't sleepwalking through PC ports any more.

I think it would only if it is optimized poorly out of the gate. Let's hope it's not something like that though.

My Take

1 - Odd seeing a GTX 1060 as the minimum and a 2080 for 4K. There is not a 4x gap between the two. I think the game should run just fine on less powerful GPUs like a 1050 Ti, given they have 4GB of VRAM.

2 - It will be interesting to know how demanding next gen AAA games will be on the CPU front. Will devs use those 8-core/16-thread CPUs on the consoles to push high FPS gaming, or develop games so demanding that they max out the CPUs while targeting 30 FPS? With the second scenario, we can say goodbye to 60 FPS gaming. HW progress is stagnant for the most part.

3 - How much VRAM will these games use? I don't see a game at 1080p maxing out the 8GB VRAM of an RX 580 on low textures. This may age as badly as that old Bill Gates quote.
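For point 2, the trade-off between 30 and 60 FPS comes down to how much time the CPU and GPU get per frame. A minimal sketch of that arithmetic follows; the helper name is hypothetical and nothing here is specific to any game or console.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


for target in (30, 60):
    print(f"{target} FPS -> {frame_budget_ms(target):.1f} ms per frame")
```

So a game that already fills its whole CPU budget at 30 FPS would need roughly twice the per-frame CPU throughput to reach 60 FPS.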

Probably won't launch the game. Might be able to play at 720p "low" if it launches.

🤷♂️

I'd just wait for the RTX 3000 series or buy a Series X.

MINIMUM:

- Requires a 64-bit processor and operating system

- OS: Windows 10 (64bit version only)

- Processor: Intel® Core™ i5-6600 / AMD Ryzen™ 5 2500X

- Memory: 8 GB RAM

- Graphics: @1080p NVIDIA GeForce® GTX 1060 6GB / AMD Radeon™ R9 390X (or equivalent with 4 GB VRAM)

- DirectX: Version 11

- Storage: 30 GB available space

- Sound Card: DirectX compatible, headphones recommended

Is this the first game outside of Alyx to require a 1060+ for min specs?

Was there ever any confirmation from the devs about why they couldn't make the game before XSX?

Like many others, I assumed that comment was about the lack of SSDs previously, but this doesn't seem to be the case at all (I would have thought a 32GB RAM requirement would be the norm for next gen also).

The CPU requirement though is pretty high.

The Medium doesn't need the SSD because both worlds are rendered at the same time in splitscreen, so the entire scene is loaded into VRAM anyway.

It's not doing what Ratchet is doing with its 2 second loading portals.

Still, by far the best visual showcase at the MS conference. I love the splitscreen idea too.

LOL I was thinking the same thing, and surprised somebody hadn't made it yet.

Wouldn't you need at least 30 gigs of system RAM then, plus whatever is needed to run the game? I think SSDs will become standard well before 64GB of RAM does.

SSDs do seem quite common already, but RAM prices seem to be going down. Can get 32GB for ~£130 now, and potentially 64GB for ~£230+. And DDR5 is going to be 32GB minimum for dual sticks.

Actually, I think that DF said the XSX performs a bit under the 2080 (Ti? Super?)

Not that I am aware of. That would be a laughable claim.

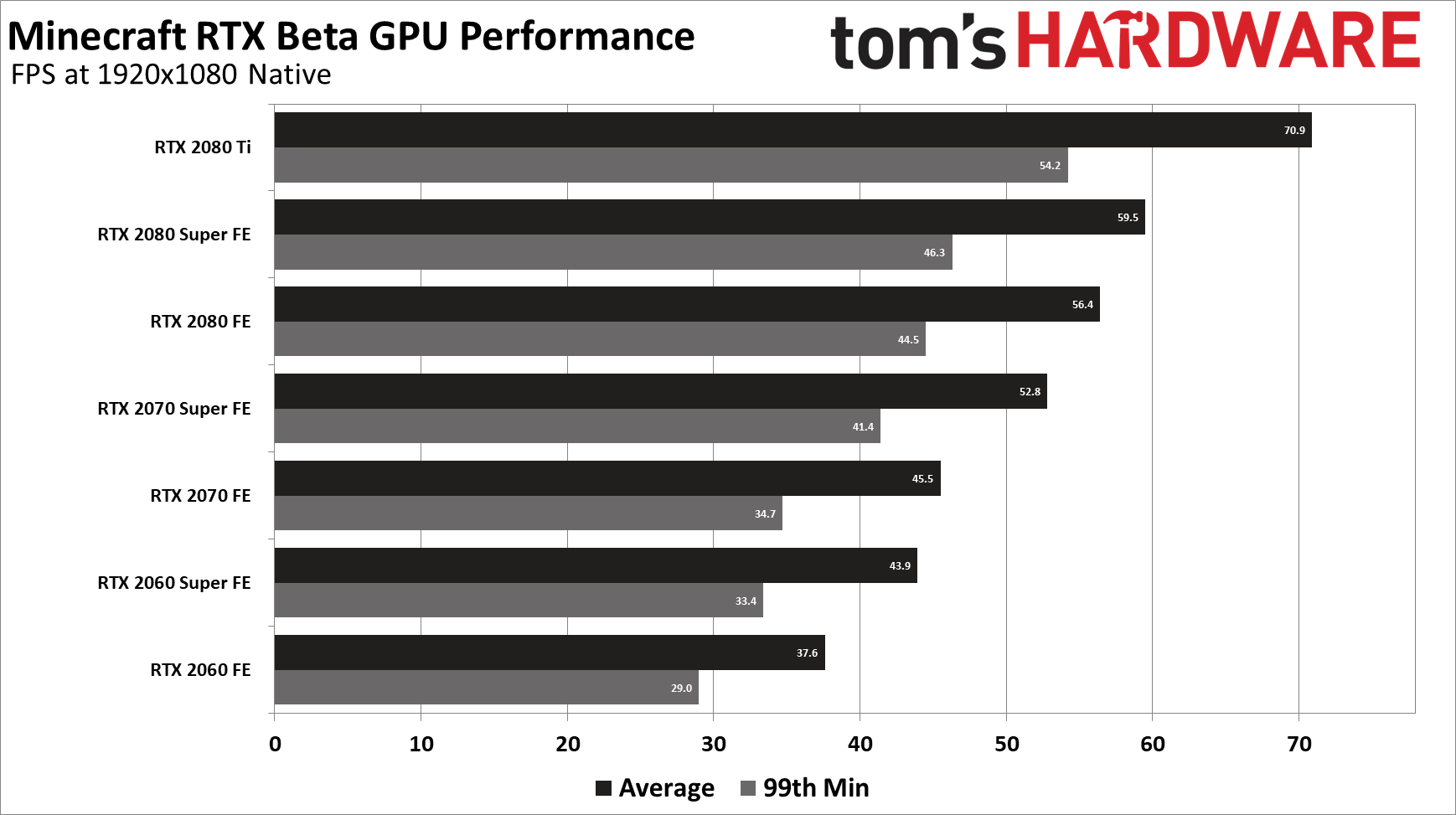

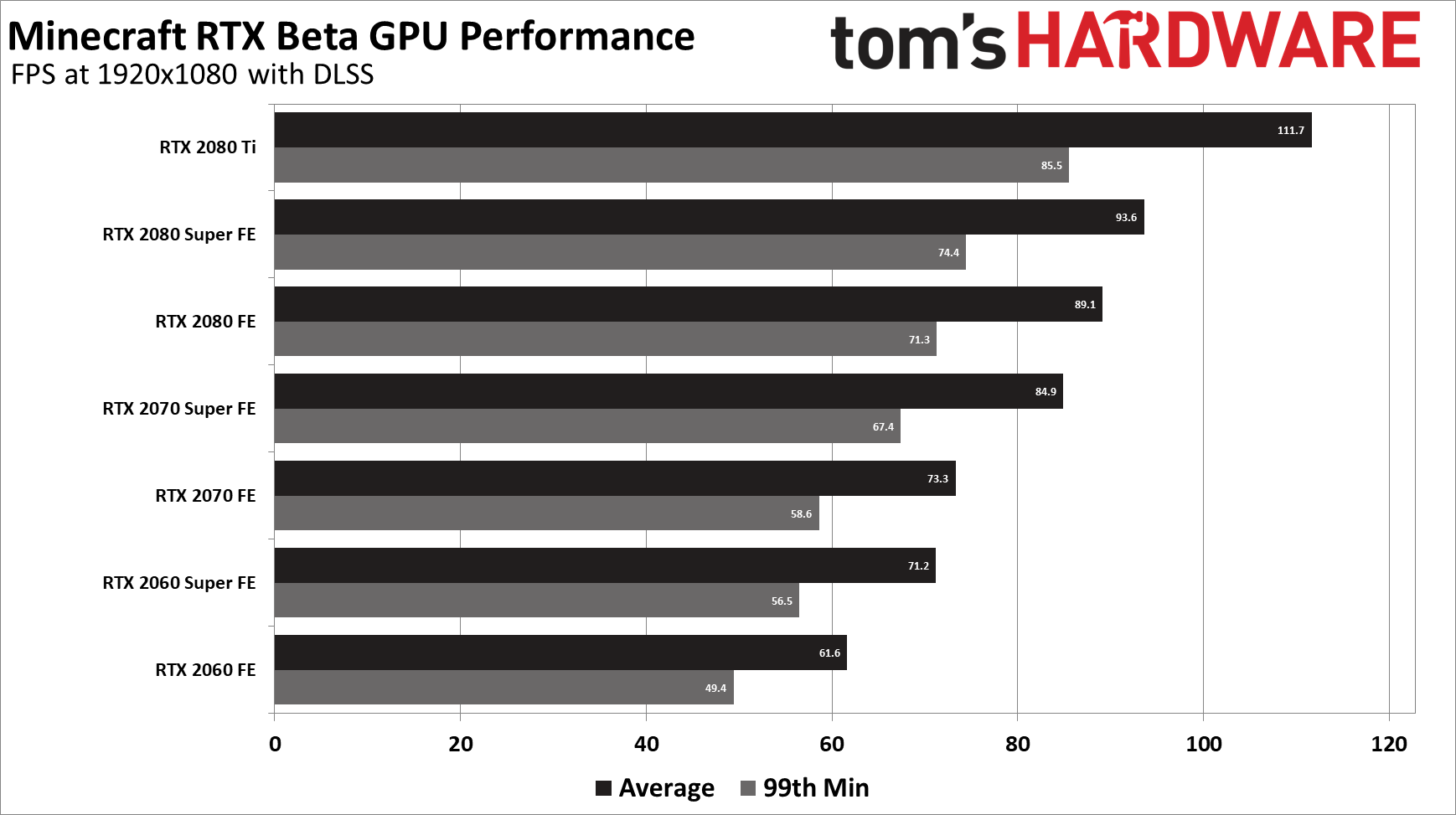

The XSX runs Minecraft RTX in reconstructed 1080p at 30 fps, with dips depending on how complex the scene is. If you switch to the classic renderer it runs at 4K60. An RTX 2060 FE - the smallest RT card Nvidia offers! - runs Minecraft RTX at 61fps average with DLSS 2.0 reconstructed 1080p.

That is a huge difference in RT performance - but that is expected of AMD's first RT hardware. AMD is at least 3 years behind Nvidia in RT-hardware R&D.

The theoretical raster performance of the XSX (which means almost nothing, hello Teraflops) is roughly on par with a Desktop 2070 (non Super) or a Desktop 5700 XT.

That is not to bash the XSX. This level of performance is still way more impressive than the PS4 was at launch in comparison. While the PS4 matched a ~$250 GPU of the previous year in performance, the XSX will match a current $400 GPU (5700 XT) at launch. Combine this much more potent GPU with a tenfold more capable CPU and next gen starts off WAY better than current gen.

Wasn't the XSX Minecraft demo running at 1080p 60 fps with dips? It remains to be seen how the 2060 will really compare, but I think it will end up closer than people think when raytracing and DLSS are present.

The demo ran at ~30 fps unlocked. If you see nothing but sky it shoots up into the high 40s low 50s. If the scene gets very complex (especially water or under water) it can drop below 30.

Where did you get that information? DF just said it was running between 30-60 FPS AFAIK.

I think we should compare it without DLSS, if we are going by raw compute power.

This is for the Imagination Island map. If we just look at the performance, between 30 and 60 FPS fits mostly the 2070 Super here.

However, I think there are other factors. The Imagination Island map is extremely complex, much more complex than what DF saw at the event, I'd assume, and we know world complexity has a big effect on framerate.

But yes, accounting for DLSS, a 2060 does seem to have superior performance to a XSX:

The Nvidia maps were created by professional Minecraft players going nuts, while the XSX demo looked like a default map, so the performance cannot be easily compared. However, in my opinion, it looks like RTX is in the lead here.

With a goal of 4k/60 I'm thinking a 3070 will be good enough for next gen, especially with DLSS around. I'm a bit more concerned with my 3600 though. Games targeting 30 fps on console might be an issue.

Only a problem if it's 1080p/30 for me.

I'm guessing I can "easily" do 1080p/60 if consoles are targeting 4K/30fps

But if a game runs at 1080p/30 on next gen consoles...gg
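To put a rough number on the 1080p/60 vs. 4K/30 guess, one can compare pixels drawn per second. This is a sketch that assumes GPU cost scales with pixel throughput and ignores the CPU side entirely (doubling the frame rate also doubles the CPU work per second); the function name is hypothetical.

```python
def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Pixels the GPU has to produce each second at a resolution and frame rate."""
    return width * height * fps


console_4k30 = pixels_per_second(3840, 2160, 30)
pc_1080p60 = pixels_per_second(1920, 1080, 60)

ratio = pc_1080p60 / console_4k30
print(f"1080p/60 pushes {ratio:.0%} of the pixels per second of 4K/30")
```

By that measure 1080p/60 needs about half the pixel throughput of 4K/30, while 1080p/30 on console would leave no such headroom.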