According to CB, the 3070 is struggling to keep its FPS steady at 4K with the highest texture settings

Isn't the 3070 aimed more at 1080/1440 though?

In my personal experience with the RTX 3070 FE, it's a really good card at 2560x1440, but a jump to just 3440x1440 makes it a bit questionable when it comes to future-proofing. It all depends on your in-game settings and your monitor/refresh rate, but I think for anything above 2560x1440 you'll want an RTX 3080, especially for upcoming games (and some current ones as well).

So the 6800s are a pretty nice alternative to the 3070/80 if we don't care about RT. I hope I will see both available in 2 weeks when I enter the RX/RTX wars. And good to know the 6800 is dimensioned well enough for ITX cases.

2 weeks? Good luck, you'll need it.

Thanks for this chart, this makes it really easy to see how the cards all stack up from each review. It doesn't tell the whole story but it's a good summary.

Soundly outperformed in 4K raster loads, annihilated in RT and DLSS, why would anyone buy these to save $50?

Yeah, the prices are ridiculous. I also tried to get my hands on a 6800 or 6800XT at Mindfactory and Alternate, but not at this cost. AMD didn't sell any on their own site, and we already have people on eBay and eBay Kleinanzeigen selling cards that are not even in their hands.

Now the scalpers get directly supplied by the manufacturers.

The only two German retailers that got them wanted 820 euros for the 6800 XT and 740 for the 6800.

Fuck this...

People hypothesized that since consoles basically have the same hardware, games are going to take advantage of AMD stuff in the future.

In Canada the 6800xt is much cheaper than a 3080. All of the 3080s are above 1000$ CAD on Newegg and the reference 6800xt was 860$ CAD. According to the rumors posted earlier in the thread, AMD is sending all of their supply to AIBs for their custom cards instead, so there might be a lot of stock next week. So if you cannot get a 3080 you might be tempted by one of these. The 6800xt also has more VRAM than the 3080 (16GB vs 10GB) and so does the 6800 vs the 3070 (twice as much). And the 6800 pretty much mops the floor with the 3070. If you don't care about RT, they are still a decent alternative to the 3000 series if you can find them. AMD is also working on a DLSS alternative, just like they did with G-Sync.

This is a pretty decent try by AMD imo. They came really close this time. They must be doing things right because NVIDIA and Intel want to do a Smart Access Memory for their GPU too. NVIDIA is also coming up with emergency cards with more VRAM like the 3060Ti 12 GB and 3080Ti 20 GB so this is for the best too. Competition is good.

I still cannot get a 3080 (I want the Strix), but if supplies are good for the 6800xt I will definitely try my luck.

But then in Canada, the 3080 FE is 899$, a 40$ difference. When the 6800XT AIB cards release in 1 week, the prices will go up too.

If you did not get a reference 6800XT this morning, then we're back to square one: whether it's the 3080 FE or the AMD 6800XT, we're talking about unicorns that don't exist in Canada.

nice. very helpful. hugely helpful, even. great perspective.

Thanks, great chart. From this it seems like 6800 on average has about 8.1% more performance than a 3070 at +16% in MSRP and +13.6% in power draw. I expect the price gap of $80 between the two to decrease significantly as these cards become easier to get.
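For anyone who wants to play with those percentages, here is a rough sketch of the value math. It only uses the three figures quoted above (+8.1% performance, +16% MSRP, +13.6% power draw); the function name `relative_value` and the constant names are made up for illustration, and the chart averages themselves are taken at face value.

```python
def relative_value(perf_gain: float, cost_gain: float) -> float:
    """Performance-per-cost of the 6800 relative to the 3070 (1.0 = equal).

    Both arguments are fractional increases over the 3070, e.g. 0.081 for +8.1%.
    """
    return (1 + perf_gain) / (1 + cost_gain)


# Figures quoted in the post above (chart averages, not my own measurements).
PERF_GAIN = 0.081   # 6800 is ~8.1% faster than the 3070
MSRP_GAIN = 0.16    # 6800 MSRP is ~16% higher
POWER_GAIN = 0.136  # 6800 draws ~13.6% more power

perf_per_dollar = relative_value(PERF_GAIN, MSRP_GAIN)
perf_per_watt = relative_value(PERF_GAIN, POWER_GAIN)

print(f"perf per dollar vs 3070: {perf_per_dollar:.3f}")
print(f"perf per watt vs 3070:   {perf_per_watt:.3f}")
```

With those inputs the 6800 comes out at roughly 0.93x the 3070's performance per MSRP dollar and about 0.95x its performance per watt, so at list price it is slightly worse value on these averages. Street prices will obviously move that ratio a lot.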

60 people in line and only two 6800xt... This is a paper launch.

I guess this is all for the best since I haven't finished updating my rig yet anyway.

It is normal for cpu prices to go up this time of year, yeah?

It didn't happen last gen.

It did, AMD benefited when games went heavy on compute, like RDR2. Nvidia has taken away any advantages AMD has now.

Yes and no.

I'm not sure I follow what he means.

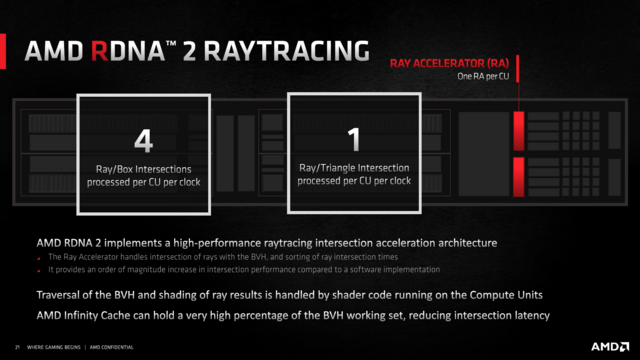

"... so my guess is that it's more representative of what we can expect from the DXR implementations that developers are likely to be adopting from here on out, with the exceptions of titles where Nvidia specifically pays developers to include their own implementations like what we see with watchdog.."

I'm sorry, but the guy is clueless (like most YouTubers). Nvidia does not have "their own implementations": Quake II RTX uses Vulkan RT, the rest use DXR. Why do you think AMD cards can even be benchmarked otherwise? Console to PC games will use DXR. The same DXR calls that have been used since Turing will be used for Ampere and for RDNA2; it's hardware agnostic. It's presumptuous to even try to pass that off as information during a review, holy shit. Did Nvidia kill everyone's dogs? Or are they just that ignorant?

And the "more representative" example is a game with only RT shadows, the bare minimum. Are we hoping now that all console ports to PC will do the bare minimum so that AMD cards "drop less" in performance? When has that ever happened? They go all in on PC and then scale down for consoles, most devs anyway.

It seems AMD will hold a virtual event tomorrow (20th November) in my country, which is quite rare. I don't think Nvidia held any event when Ampere was released here. Announcing and releasing the cards close to the MSRP would be dope though.

Yeah lol

Now, I'm not saying anyone should base their decisions off future potential, that's dumb, but it doesn't sound too farfetched that at least RT performance could age a bit better. While it could well be a fluke, the 6800XT performed better than the 3080 in 5/7 of the new current gen games, compared to 3/17 older games, with a reduced gap in RT performance (with RDNA performing better than Ampere in Watch Dogs...which is arguably just a piece of shit though on PC haha).

This can just as easily be explained by the fact that those newer games are actually doing less w.r.t. raytracing than the older ones. The difference in fps being Minecraft > Control > Watch Dogs perfectly aligns with the amount of raytracing those games have implemented.

It sure did, quite a bit.

Yes and no.

I genuinely don't listen to YouTubers (lol @ "pays devs for their own implementation"...this is why no one takes you seriously), but CB speculates the same.

Very very fair point.

Pay for what? There is no "RTX implementation" like there was with PhysX enabled games, it's a generic interface. Nvidia literally worked with Microsoft on this, as part of DXR.

To use code written by Nvidia engineers.

The new CoD also performs significantly worse on RDNA2 with RT on, though I don't know anything about its RT implementation. Then again, Dirt 5 performs a lot better on RDNA.

They pay for advertising, branding, and cross-promotion, but it's no different than when Sony or Microsoft gets the marketing rights to an upcoming multi-plat game. Unless a game uses OptiX (which is not meant for games btw), no game with RT will run exclusively on NV.

6800 is the star there, significantly faster than the 3070, and the 6800 gets almost 10% more performance from overclocking the reference design. Also lower power draw on top despite having 8GB more VRAM.
