This is incredibly impressive.

Damn, that's freaking awesome, if true. The uplift in RT performance is HUUUUGE!

For reference (overclocked 2080Ti ~18 tflops):

I'm excited for the 3090.

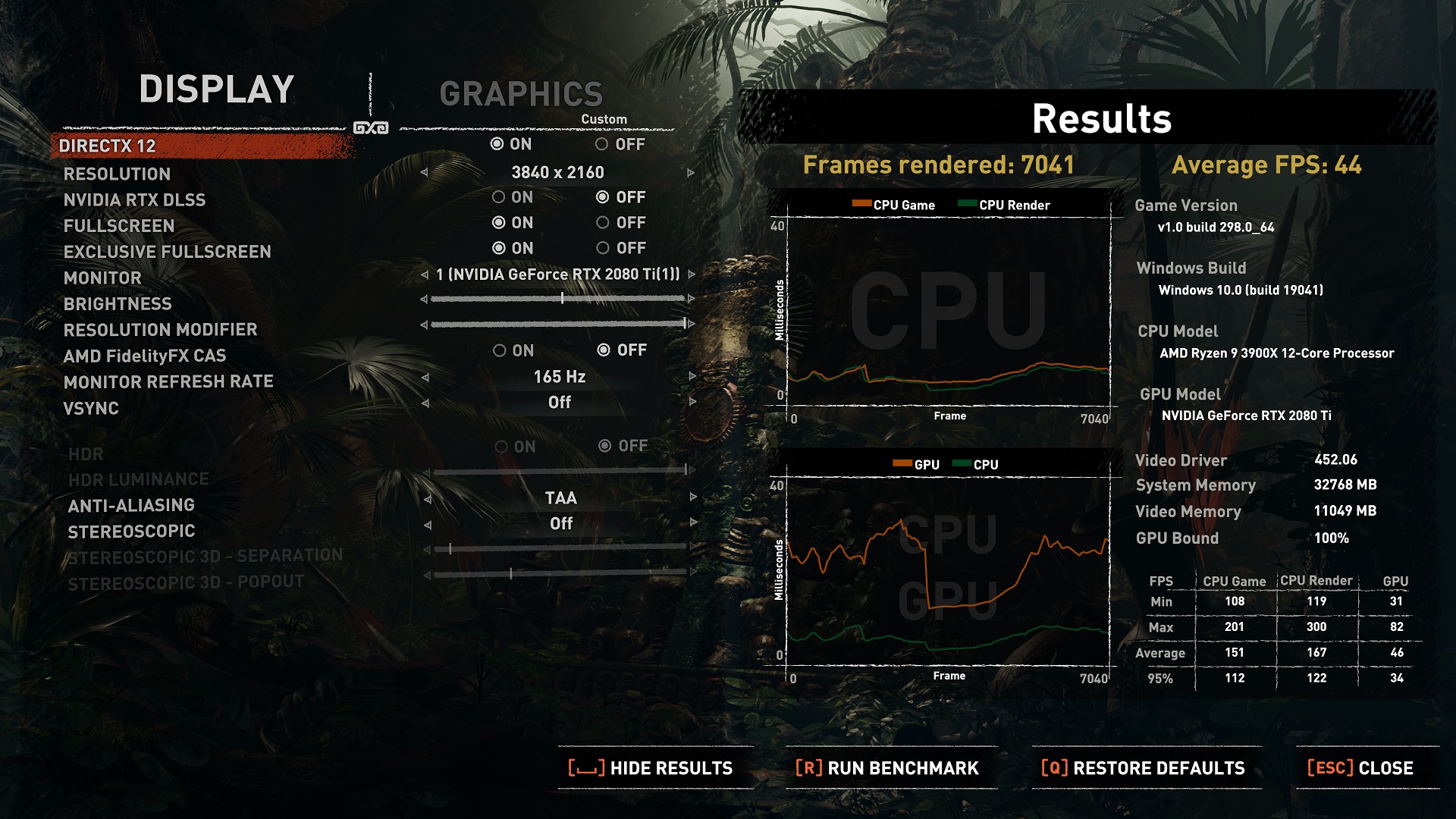

44 fps average in the benchmark (4k, native)

~30 fps in Control (4k, native)

I scored 9 899 in Port Royal

AMD Ryzen 9 3900X, NVIDIA GeForce RTX 2080 Ti x 1, 32768 MB, 64-bit Windows 10 (www.3dmark.com)

Those Fire Strike performance scores seem wrong. A 2080 Ti does 30k easily. Maybe they're not listing the graphics scores.

I'm completely out of the loop.

Is my math off?

Here it said:

Nvidia RTX 3070 ($499), 3080 ($699), 3090 ($1,499) Announced (Please See Threadmarks) News

RTX 3090 $1,499 (up to 50% faster than TITAN RTX), RTX 3080 $699 (2x faster than RTX 2080), RTX 3070 $499+ (faster than RTX 2080 Ti). You can watch the event here: https://www.youtube.com/watch?v=QKx-eMAVK70 Full specs: https://www.nvidia.com/en-gb/geforce/graphics-cards/30-series/... (www.resetera.com)

And what is DLSS, and why does turning it ON give more framerate?

The numbers in the OP are comparing it to a 2080 Ti and 2080 Super, which are more powerful than a stock 2080. DLSS is an upscaling algorithm that uses some game engine info and machine learning to make games look similar to native resolution, or a bit less so, for more FPS.

DLSS, or Deep Learning Super Sampling, is a technique developed by Nvidia in which a specially trained AI model, accelerated by specialized hardware called Tensor Cores (present in 20xx and 30xx series GeForce graphics cards), can seamlessly increase the resolution of a rendered image at great speed. The practical upshot is either higher effective resolution and image quality for the same performance, or higher performance for the same resolution (sometimes even with increases in image quality).

It requires some effort on the part of the developer to implement in their games, and requires support in the form of a Game Ready driver to configure, so it isn't widely adopted quite yet. But the obvious benefits in performance and image quality across the board, combined with relative ease of implementation (essentially replacing Temporal Antialiasing), will surely mean near-ubiquitous adoption in major titles going forward.

No one, not even Nvidia, claimed it was 80% better than a 2080 Ti.

They said up to 2x 2080s.

Big Navi is rumored to be 50% better than 2080TI.

Perhaps there is hope for the Red team?

Depends on pricing and ray tracing performance. But I think we're already seeing positive impact from RDNA2's impending entry into the market in the form of better-than-expected pricing for the 3070/3080.

DLSS 1.x was almost a meme because videos kept coming out showing that setting the internal resolution to 70-80% was arguably as good or better. Plus, DLSS 1.x was kind of smeary when it came to certain details like faces. It wasn't horrific and you still got a decent performance boost, but it wasn't anything to write home about. It looked a little weird but still felt like a fair trade for the performance gains to be had.

DLSS 2.0 is a completely different animal. In no way does it feel like a fair trade at all. It's very close to the "this shouldn't be possible" feeling.

DLSS started off rough. It's not version 2.0 like it is on Control/Death Stranding etc.

Thanks, y'all. Makes perfect sense.

I'm not sure what you mean.

I can go into the Nvidia control panel and set a custom resolution of 1440p and output that to the TV, but that doesn't scale well and introduces a blur, particularly on UI elements; I think from an IQ standpoint it looks worse than just choosing 1080p and letting the TV scale up to 4K. Generally, if I choose 1440p from within a game, I get a washed-out, 30fps-locked game.

What TV do you have? I've run games at 1440p on my TV and it didn't look blurry at all.

Looks like 4k/160FPS to me.

Few questions:

1) is DLSS a card setting or game setting? Like can it only be used in certain games or on literally anything the GPU is driving frames for?

2) how are the thermals compared to 20XX series?

3) will this fit in a Node 202 with 15mm case fans lol? I get the feeling no...

1) is DLSS a card setting or game setting? Like can it only be used in certain games or on literally anything the GPU is driving frames for?

Individual games have to add support for it, and you turn it on in the game's own settings. At this point it's not some driver-level thing you can apply to all games, and it's not certain it ever will be—it needs some level of support/integration in the game engine, similar to TAA.

Was expecting 2x 2080. Doesn't seem like it's reaching that judging from those 2080S comparisons?

And yes, it was kind of obvious Ampere flops are significantly less efficient than Turing ones.

They are as "efficient" as Turing ones. Ints in Ampere can't run in parallel to all flops, though, so you get varying degrees of gains depending on the mix of instructions in a workload.

If you look at the benches in OP you'll notice that the gains are higher when there's more shading happening - which shows that Ampere is limited by something else there besides its flops.

As a pure example, running a 2080Ti on a dual core CPU will result in zero gains over 1080Ti running on the same system. Doesn't mean anything for 2080Ti's "flops efficiency".
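To put rough numbers on the instruction-mix point, here is a toy model in Python. It is only a sketch under stated assumptions that do not come from the posts above: each Turing SM partition is modeled as 16 FP32 lanes plus 16 separate INT32 lanes running concurrently, and each Ampere partition as 16 FP32-only lanes plus 16 lanes that can do either FP32 or INT32 in a given clock. The function names are made up for illustration, and the model ignores clocks, memory bandwidth, and everything else that limits real games.

```python
LANES = 16  # assumed lanes per datapath in one SM partition


def cycles_turing(fp_ops, int_ops):
    # Assumption: FP32 and INT32 have separate datapaths that run in parallel,
    # so the longer of the two queues decides the time.
    return max(fp_ops, int_ops) / LANES


def cycles_ampere(fp_ops, int_ops):
    # Assumption: one FP32-only path plus one shared FP32-or-INT32 path.
    # INT work can only use the shared path; FP work fills whatever is left.
    return max(int_ops / LANES, (fp_ops + int_ops) / (2 * LANES))


def ampere_speedup(int_fraction):
    """Per-clock, per-partition speedup of the Ampere model over the Turing model."""
    fp_ops = 1.0 - int_fraction
    int_ops = int_fraction
    return cycles_turing(fp_ops, int_ops) / cycles_ampere(fp_ops, int_ops)


if __name__ == "__main__":
    for frac in (0.0, 0.25, 0.5):
        print(f"int share {frac:.0%}: x{ampere_speedup(frac):.2f}")
```

In this model a pure-FP32 workload gets the headline 2x per clock, a 25% integer share gets 1.5x, and a 50/50 mix gets no gain at all, which is the "varying degrees of gains" effect in miniature.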

Just bought myself an H210i with a 2080 Super. After doing the measurements, the 3080 Founders Edition does not fit. Does anyone reckon they will release a smaller version of the 3080? Otherwise I'll have to consider the 3070.

I have an H210, and I thought the posted numbers for the 3080 do make it fit? Unless you have a radiator at the front?

Nvidia should really jump in and get more devs and games to support DLSS, especially something as big and popular as Modern Warfare/Warzone. There is no reason why that game doesn't support DLSS, especially when it was one of their sponsored games with RTX support. There are still a lot of games lacking the DLSS support that was promised before the launch of the 2000 series. Darksiders 3 and ME Andromeda are two that never got the DLSS support that was promised.

What TV do you have? I've run games at 1440p on my TV and it didn't look blurry at all.

It's a Samsung 4K TV from about 3 1/2 years ago. I think people will differ on what they do and do not find acceptable, but between 1440p not having a clean scaling ratio to 4K, and the TV not employing its generally quite good internal scaler when sending a custom resolution, I do not find it acceptable.
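For what it's worth, the "clean scaling ratio" point is just arithmetic. A quick Python sketch (the helper name is made up for illustration, and it assumes a 3840x2160 panel) comparing the per-axis scale factor from 1080p and 1440p:

```python
from fractions import Fraction

PANEL = (3840, 2160)  # assumed 4K UHD panel
RESOLUTIONS = {"1080p": (1920, 1080), "1440p": (2560, 1440)}


def scale_factor(source, target=PANEL):
    """Per-axis upscale factor as an exact fraction (same aspect ratio assumed)."""
    sx = Fraction(target[0], source[0])
    sy = Fraction(target[1], source[1])
    if sx != sy:
        raise ValueError("source and target have different aspect ratios")
    return sx


if __name__ == "__main__":
    for name, res in RESOLUTIONS.items():
        factor = scale_factor(res)
        kind = "integer" if factor.denominator == 1 else "non-integer"
        print(f"{name} -> 4K: x{float(factor)} per axis ({kind})")
```

1080p comes out at exactly 2x per axis, so every source pixel can map to a 2x2 block. 1440p comes out at 1.5x, so the scaler has to interpolate, which is one plausible source of the blur on UI elements.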

It's a 4K TV. It's a matter of the TV not supporting 1440p/60fps as one of its resolutions, and as such, when you force the resolution in game, it will display 1440p but default the fps to 30. You can set a custom resolution of 1440p/60fps in the control panel, but the TV does not recognize it natively, and therefore doesn't scale it as cleanly as it does a 1080p signal. It's a pretty common problem for 4K TVs if you google it. Perhaps newer model 4K TVs have added 1440p/60fps as a recognized setting.

Can you not set your graphics card to always put out the native resolution of your TV and do the upscaling internally by the GPU and not by the monitor/TV?

Pretty sure it's in the Nvidia control panel, but I've never tried it.

Impressive numbers, mostly 1440p here so I can't wait for those sweet frame increases.

Will do 4K/60FPS on my OLED if the game has proper HDR.

Can you not set your graphics card to always put out the native resolution of your TV and do the upscaling internally by the GPU and not by the monitor/TV?

Pretty sure it's in the Nvidia control panel, but I've never tried it.

If there's a method to doing it ("Send 4k signal to TV" > "Only actually render at 1440p"), I'd be interested in trying it, but I'm not aware of how to do it. It kind of sounds like what I assume people do when they downsample, but in reverse?

An incredible 4K, 60+ FPS card and an unstoppable 1440p, 144 FPS card. Can't wait to buy one in a few years.

This seems considerably less impressive than the performance implied by the Digital Foundry selection (which showed a 70-80% uplift vs the 2080, whereas this seems closer to a 60% uplift over the 2080 after normalising the 2080S and 3080 against the vanilla 2080).

I could be missing something, but that's my first impression. We need to see a broader suite of results. DLSS remains impressive, but is hardly the norm for 2020 and it won't be for 2021 either.

More benches now!
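The normalisation described above is just chaining ratios. A minimal Python sketch, with placeholder numbers that are NOT measured results (the 1.07 and 1.50 ratios are purely hypothetical; plug in figures from whichever benchmark you trust):

```python
def uplift_vs_baseline(new_vs_mid, mid_vs_base):
    """Uplift of the new card over the baseline card, as a fraction.

    new_vs_mid:  performance ratio new card / middle card (e.g. 3080 / 2080S)
    mid_vs_base: performance ratio middle card / baseline (e.g. 2080S / 2080)
    """
    return new_vs_mid * mid_vs_base - 1.0


if __name__ == "__main__":
    # Hypothetical inputs for illustration only.
    super_vs_2080 = 1.07      # assumed: 2080S is 7% faster than a 2080
    rtx3080_vs_super = 1.50   # assumed: 3080 is 50% faster than a 2080S
    uplift = uplift_vs_baseline(rtx3080_vs_super, super_vs_2080)
    print(f"3080 vs 2080: +{uplift:.1%}")
```

With those made-up inputs the chained uplift lands at about 60%. The same helper works for the 1080 Ti comparison if you swap in a 2080 vs 1080 Ti ratio.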

An incredible 4K, 60+ FPS card and an unstoppable 1440p, 144 FPS card. Can't wait to buy one in a few years.

This is why I'm excited. I've been running a 1440p 144Hz monitor on a 1070 FE. G-Sync is the only thing saving me right now.

Playing at 1440p/144hz the 3080 will crush anything I throw at it.

As someone who still only has a 1080p monitor and is getting a 3080 next week (Gods willing):

I'll be playing maxed out at 144fps for 5+ years, won't I? hahaha

For now, yeah, but as games become exclusively made for next-gen it's hard to tell. Luckily we can edit settings for large performance gains and little graphical difference most of the time.

I want to see those 1440p benchmarks the most!

Just guessing, but from what I've seen it's usually 1080p -> 4K and 720p -> 1440p.
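If that guess is right (half the output resolution on each axis, which is an assumption here and not something confirmed in this thread), the pixel math shows why turning DLSS on raises framerate: the GPU shades far fewer pixels per frame and the upscaler fills in the rest. A quick Python sketch, with made-up names:

```python
# (internal render resolution, output resolution) pairs from the guess above
MODES = {
    "4K output": ((1920, 1080), (3840, 2160)),
    "1440p output": ((1280, 720), (2560, 1440)),
}


def shaded_fraction(internal, output):
    """Fraction of the output pixel count that is actually rendered."""
    return (internal[0] * internal[1]) / (output[0] * output[1])


if __name__ == "__main__":
    for name, (internal, output) in MODES.items():
        frac = shaded_fraction(internal, output)
        print(f"{name}: renders {internal[0]}x{internal[1]}, "
              f"{frac:.0%} of the output pixels")
```

Both cases come out at a quarter of the output pixels. That doesn't translate to 4x the framerate, since the upscaling pass itself costs time and not all of the work in a frame scales with resolution.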

The 2080 is a little more powerful than the 1080 Ti. I just add 5% to the difference between the 2080 and 3080 in these benchmarks.

I'm going to cry if there isn't enough on day 1.

Get your tissues ready...

Stock up on tissues.