If the dynamic resolution were capped at 2160p instead of 1440p, then yes, very likely. You would also need some more CPU performance, but I think we are talking about Gen2 mode (native mode) anyway, so the CPU provides full performance too.

Anyone on my BC boost question?

"

Guys, a question about this hypothetical PS5 boost mode regression test.

So if a game ran with dynamic resolution around 1440p on the Pro with a clock of 900 MHz, would a PS5 boost clock of 2 GHz make it native 4K?

"


Next-gen PS5 and next Xbox speculation |OT10| - We aim to transition those from OT9 at a pace never seen before. [SEE THREADMARKS FOR CHANCE AT GLORY]

- Thread starter Mecha Meister

- Start date


- Status

- Not open for further replies.

Threadmarks


Recent threadmarks

- Liabe Brave - NAVI GPU Block Sizes + Next Gen APU Predictions
- Xbox Series X Prototype sighting
- Xbox Series X Prototype allegedly registered to account
- Dictator (Digital Foundry) - Ray Tracing and Storage Speeds
- Albert Panello comments on SOC design (2)
- Albert Panello comments on SOC design (1)
- Albert Panello comments on SOC design (3)
- RULES FOR CHANCE AT GLORY CONTEST

I think almost everyone can agree that it ultimately won't hurt Sony one way or another, whatever method they choose to communicate and whenever they do it, and of course it is their right to do whatever they want with any of this. But their apparent lack of concern for their hardcore fans in how they go about things is a little odd to me, including a simple blog post on the actual PlayStation 25th anniversary instead of something fun. If they are still doing something in February, which I think is a legitimate possibility, then it seems strange not to put out the reveal date tease on 1/31, when so many were expecting it on the anniversary of when they did it for the PS4. Of course, maybe they really won't do anything at all in February, which would start to make me wonder whether they are having some unknown issues that they want to sort out prior to a reveal, as that seems more reasonable than some type of next-level strategy against Xbox or something.

You would still use them for shading even with path tracing.

Impossible to tell, as we don't know yet what power we're getting.

Was mentioned by DF several times. Here for example: https://youtu.be/y_nYcbQv2xA?t=1449

Full path tracing is out of the question for next gen consoles. They won't be anywhere near fast enough to handle it with AAA or even AA graphics.

That being said, I'm fairly sure that SIMD units are still being used in path tracing as well, not for shading but for general computations.

Same as you use texture units for texturing.

One needs information about surfaces the rays hit and light them accordingly. (BRDF and so on.)

Thanks man. So engine dependent, basically.

If the dynamic resolution was not capped at 1440p and instead at 2160p, yes, by a high chance. Though you also need some more CPU performance, but I think we are talking Gen2 mode anyway, so the CPU provides full performance too.

For Pro owners: do we know of any dynamic resolution games on PS4 Pro? Is RDR 2 dynamic CB 4K? What about Final Fantasy, etc.?

🙌

Good to be back, I missed y'all.

I will now be PM'ing all my jokes to III-V before submitting them to this thread. He will verify that they're okay to post.

Welcome back bro, haha. Be careful with your jokes; not all mods are familiar with them, unfortunately 😜😆

Haha sure, we need you now. We are getting close to the reveals (in our hearts of course, not in reality 😂)

But what you found is - I think - supporting what I was trying to explain. The cooling solution will help but not solve the challenge completely. Also the last point is about proving by further research.

I believe that paper was published 5 years ago, so there is likely more research out there, especially since AMD has used die stacking models in their marketing. I think the emphasis in their research that this can work even in a low-cost fanless solution shows their confidence in being able to cool die stack solutions.

Anyone on my BC boost question?

"

Guys, a question about this hypothetical PS5 boost mode regression test.

So if a game ran with dynamic resolution around 1440p on the Pro with a clock of 900 MHz, would a PS5 boost clock of 2 GHz make it native 4K? Without any patch, I mean.

"

If the dynamic solution allowed it to get as high as 4K - i.e. used it as a baseline resolution that got scaled down along either or both axes -, there's no reason why it wouldn't (same for uncapped frame rates).
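As a rough back-of-the-envelope check of the question above, here is a minimal sketch. It assumes (this is an assumption, not something confirmed in the thread) that the affordable pixel count scales linearly with GPU clock, that everything else about the GPU stays the same, and that the game is purely GPU-bound. The 900 MHz and 2 GHz figures are the ones from the question; the function name `scaled_resolution` is made up for illustration.

```python
import math

def scaled_resolution(base_w, base_h, base_clock_mhz, boost_clock_mhz,
                      cap_w=3840, cap_h=2160):
    """Estimate the resolution a dynamic scaler could reach after a clock bump.

    Assumption: pixel throughput scales linearly with GPU clock, and the
    game's scaler is allowed to go up to (cap_w, cap_h).
    """
    ratio = boost_clock_mhz / base_clock_mhz      # throughput multiplier
    target_pixels = base_w * base_h * ratio       # pixels we can now afford
    cap_pixels = cap_w * cap_h
    # Scale both axes of the cap uniformly, never exceeding the cap itself.
    axis_scale = min(1.0, math.sqrt(target_pixels / cap_pixels))
    return round(cap_w * axis_scale), round(cap_h * axis_scale), ratio

w, h, ratio = scaled_resolution(2560, 1440, 900, 2000)
needed = (3840 * 2160) / (2560 * 1440)
print(f"clock ratio: {ratio:.2f}x, pixel ratio needed for 4K: {needed:.2f}x")
print(f"estimated resolution: {w}x{h}")
```

Under those assumptions the clock ratio (about 2.22x) lands just short of the 2.25x pixel ratio between 1440p and 2160p, so the scaler would sit very close to 4K, provided its cap allows it. With a 1440p cap, the extra headroom changes nothing.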

But can't we look at the Github leak and Phil's photo of the XSX chip to assume they must have close to a 12 TF console?

Assumptions.

You have to consider that people are chasing their tails trying to make sense of information they have access to but no context for. For example, we don't know the multiplier for an Xbox Series X, so everything is skewed around an assumed 12 TF baseline. It also informs the technology inside and its comparative power to a PS5.

On the other side of the fence we have people working parallel to the industry stating PS5 is slightly ahead but that they are so close in power there's practically nothing in it. This is best case scenario.

At this point who do you trust, people with a fascination or people in the know?

Just remember this is a speculation thread for a reason.
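For reference, headline TF figures like the 12 TF baseline mentioned above are usually derived from CU count and clock speed. A minimal sketch, assuming the common AMD convention of 64 shaders per CU and 2 FLOPs per shader per clock (one fused multiply-add). The PS4 Pro numbers are its published specs; the 56 CU / 1.675 GHz pair is purely hypothetical and chosen only because it lands near 12 TF.

```python
def fp32_tflops(compute_units, clock_ghz, shaders_per_cu=64, flops_per_clock=2):
    """Peak FP32 TFLOPS = CUs * shaders per CU * FLOPs per shader per clock * GHz / 1000."""
    return compute_units * shaders_per_cu * flops_per_clock * clock_ghz / 1000.0

# PS4 Pro: 36 CUs at 911 MHz.
print(f"PS4 Pro: {fp32_tflops(36, 0.911):.2f} TF")

# Hypothetical configuration, not a leaked or confirmed spec.
print(f"56 CUs @ 1.675 GHz (hypothetical): {fp32_tflops(56, 1.675):.2f} TF")
```

Many different CU and clock combinations reach the same TF figure, which is part of why a single number says little without context.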

Do you have any games as an example off the top of your head?

If the dynamic solution allowed it to get as high as 4K - i.e. used it as a baseline resolution that got scaled down along either or both axes -, there's no reason why it wouldn't (same for uncapped frame rates).

They all look pretty much the same BECAUSE there isn't an ounce of detail in ANY of these shots because they are all highly compressed jpegs. If you actually looked at the third rope of flags like you suggested that I should, then all you can see is nasty macro-blocking.

Um, no. DLSS adds details due to high resolution training feed.

Native resolution (1440p)

native resolution + max sharpening

DLSS quality, sharpening at default (like first pic)

For example, look at the third flag rope: in native resolution it's barely visible, while in the DLSS image you can clearly see the thin line where the flags are attached. Why do you think this looks objectively worse? Maybe you should open your mind to new techniques.

Also, look at the huge performance boost. 105 FPS to 143 FPS!

If you can post uncompressed images then we can see which looks better, and also worth advising the native resolution of the DLSS shot before reconstructed to 1440p?

Personally I think DLSS looks trash IRL, especially in motion when artefacts become apparent. And yes, that's not just me basing it on my experience with games like Metro Exodus, but also on the "DLSS flagship", Control. I actually prefer to reduce the effects in Control so I can hit 2160p (on my 2080 Ti) rather than using DLSS. TBH the only reconstruction technique I don't mind is temporal injection, because it has the cleanest IQ and least artefacts of any of them when reconstructing to 4K.

Again, do not insinuate from yourself to others. My last statement on that.

AFAIK, DLSS currently doesn't use tensor cores on Nvidia GPUs. It's a compute shader that runs on shader cores the same as it would on an AMD GPU.

I would have been happy to discuss it if the discussion was established in good faith. It clearly wasn't.

And as has already been demonstrated so far with the discussion picked up by others here, not many here really have the level of domain expertise to correctly assess the viability.

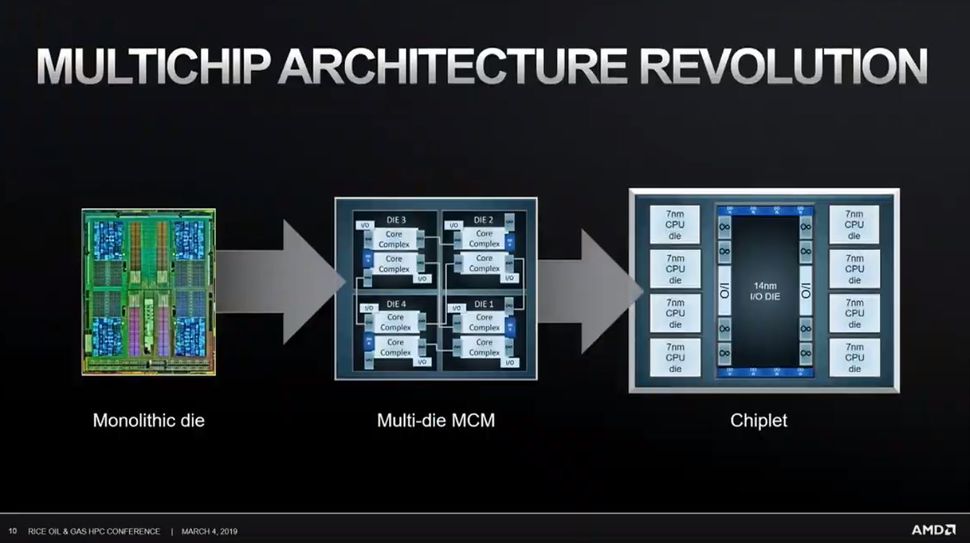

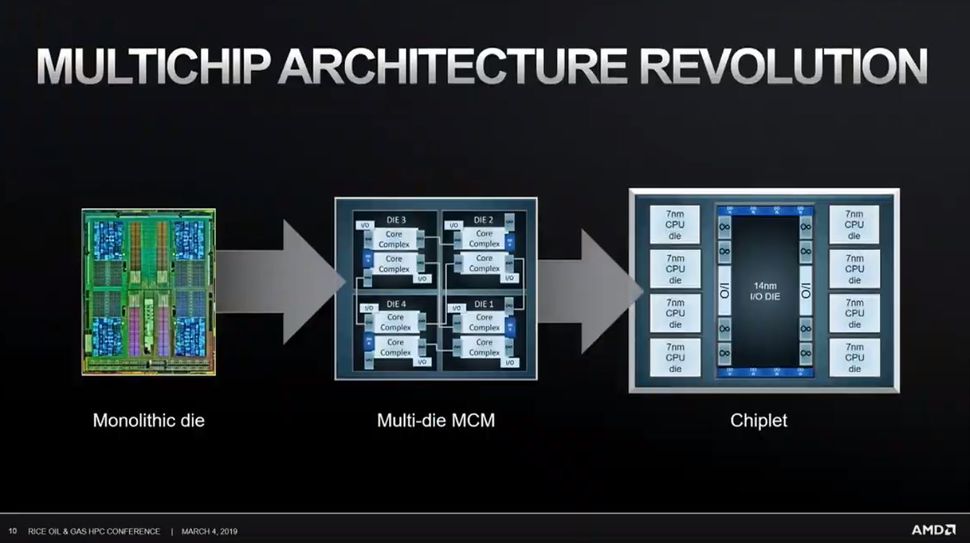

I'm pretty sure an MCM approach isn't unlikely due to "complexity and heat". The Wii U used an MCM, for heaven's sake.

Regarding the Nintendo MCM:

You surely have seen the distance between the chiplets and that the memory was not HBM, it was on the logic board.

The discussion currently ongoing was about memory being on the MCM. Which brings it nearer to the CPU and GPU hotspots.

However the Nintendo design could be a valid way of having a GPU only Oberon. Though there comes another challenge/issue we are already aware of from the Ryzen CPUs and that is the speed of the Infinity Fabric. Did AMD not say itself for high performance GPUs that need low latency (games) IF is not capable enough? Best example is Renoir: surprise surprise, a monolithic design.


Plus God of War: Ascension, GT6 and Beyond: Two Souls later as well.

PS4 sales are at all time lows and Sony announced the PS4 despite launching The Last of Us that summer.

2013 was indeed fully packed with PS3 first party games...Puppeteer and GT6 sent to die but with BC that won't be the problem this time.


Not aware of any solutions that already use the patent. And I am talking about data centers, not consumer hardware. AMD itself selected liquid cooling for their supercomputer and not the patented solution, IIRC. Maybe they are still not at target efficiency. There ends my knowledge.

I believe that paper was published 5 years ago, so there is likely more research out there, especially since AMD has used die stacking models in their marketing. I think the emphasis in their research that this can work even in a low-cost fanless solution shows their confidence in being able to cool die stack solutions.

AFAIK, DLSS currently doesn't use tensor cores on Nvidia GPUs. It's a compute shader that runs on shader cores the same as it would on an AMD GPU.

There was some talk about some of the DLSS implementations not even using DL models in any meaningful way. From the fairly extensive contact I have had with generative neural networks, I'm quite skeptical about it being successfully used in real time - models that surpass algorithmic approaches almost invariably are way too big and slow to achieve anything.

Not really, I don't think any of the games out there doing DR get anywhere near native 4K at any point.

Not aware of any solutions that already use the patent. And I talk about data center, not consumer hardware. AMD itself, for their super computer, selected liquid cooling and not the patented solution, IIRC. Maybe they are still not at target efficiency. There ends my knowledge.

AMD has been talking about it for years nonstop. They already stack HBM on the same package as their GPUs.

Again, do not insinuate from yourself to others. My last statement on that.

Regarding the Nintendo MCM:

You surely have seen the distance between the chiplets and that the memory was not HBM, it was on the logic board.

The discussion currently ongoing was about memory being on the MCM. Which brings it nearer to the CPU and GPU hotspots.

However the Nintendo design could be a valid way of having a GPU only Oberon. Though there comes another challenge/issue we are already aware of from the Ryzen CPUs and that is the speed of the Infinity Fabric. Did AMD not say itself for high performance GPUs that need low latency (games) IF is not capable enough? Best example is Renoir: surprise surprise, a monolithic design.

My understanding is that Infinity Fabric is only relevant between sockets and MCMs like Threadripper. Within the same interposer you would use an active interposer to control the on-die communications, so Infinity Fabric wouldn't come into play at all for the GPU/CPU.

Here's a good example of AMD's marketing

I can't think of a game where I wouldn't want at least a toggle switch between prioritize visuals vs. prioritize 60FPS.

If the dynamic solution allowed it to get as high as 4K - i.e. used it as a baseline resolution that got scaled down along either or both axes -, there's no reason why it wouldn't (same for uncapped frame rates).

That is true, but the example you posted was not that. The interposer below all chiplets was missing; there were only interposers for each chiplet.

My understanding is that Infinity Fabric is only relevant between sockets and MCMs like Threadripper. Within the same interposer you would use an active interposer to control the on-die communications, so Infinity Fabric wouldn't come into play at all for the GPU/CPU.

Here's a good example of AMD's marketing

Also:

AMD talking about a long-term plan is different from actually having an example in production. Of course we have to look at all this from a console standpoint, right? For me it is highly unlikely that we will see such a sophisticated design in a console. But that is just me, the typical Colbert, The Party Pooper :)


You compared the wrong screenshots. Based on your comparison, it looks like you compared the DLSS shot against itself.

They all look pretty much the same BECAUSE there isn't an ounce of detail in ANY of these shots because they are all highly compressed jpegs. If you actually looked at the third rope of flags like you suggested that I should, then all you can see is nasty macro-blocking.

If you can post uncompressed images then we can see which looks better, and also worth advising the native resolution of the DLSS shot before reconstructed to 1440p?

Personally I think DLSS looks trash IRL, especially in motion when artefacts become apparent. And yes, that's not just me basing it on my experience with games like Metro Exodus, but also on the "DLSS flagship", Control. I actually prefer to reduce the effects in Control so I can hit 2160p (on my 2080 Ti) rather than using DLSS. TBH the only reconstruction technique I don't mind is temporal injection, because it has the cleanest IQ and least artefacts of any of them when reconstructing to 4K.

The first picture is native, the second one is native and sharpening from Nvidia control panel and the third one is the DLSS shot. Here's the rope with the correct comparison:

native resolution on the left, dlss on the right

Even with the compression, it can be easily seen that the rope is a detail that has been added by DLSS which is only barely visible at native resolution so I'm not going to bother recreating that screenshot on my PC (the pic is not from me)

BTW, Control DLSS and Wolfenstein DLSS are in two different leagues. Wolfenstein is definitely the DLSS flagship now; I suggest you invest 5 bucks and try it out for yourself, and you will also see that there are no artifacts when moving. I personally was not a fan of Control's DLSS either, and AFAIK it isn't even using the tensor cores and just runs on the shader cores. But Wolfenstein DLSS definitely does, as there is no performance impact from reconstruction, unlike in Control, and it looks a lot better as well.


Just be glad Sony delayed the PSM announcement just for you

🙌

Good to be back, I missed y'all.

I will now be PM'ing all my jokes to III-V before submitting them to this thread. He will verify that they're okay to post.

What game is it? I'll give it a try myself and see if I can recreate the screenshot.

You compared the wrong screenshots. The first one is native, the second one is native and sharpening from Nvidia control panel and the third one is the DLSS shot. Here's the rope with the correct comparison:

native resolution on the left, dlss on the right

Even with the compression, it can be easily seen that the rope is a detail that has been added by DLSS which is only barely visible at native resolution so I'm not going to bother recreating that screenshot on my PC (the pic is not from me)

BTW, Control DLSS and Wolfenstein DLSS are in two different leagues. Wolfenstein is definitely the DLSS flagship now; I suggest you invest 5 bucks and try it out for yourself, and you will also see that there are no artifacts when moving. I personally was not a fan of Control's DLSS either, and AFAIK it isn't even using the tensor cores and just runs on the shader cores. But Wolfenstein DLSS definitely does, as there is no performance impact from reconstruction, unlike in Control, and it looks a lot better as well.

I've already got Youngblood which already ran at max settings (pre RTX) with 60fps at native 4k so never tried running at a lower resolution + DLSS at the time. Will give it a try later to see how it holds up as haven't tried since the RTX patch. Disappointing game though :(

That is true, but the example you posted was not that. The interposer below all chiplets was missing; there were only interposers for each chiplet.

Also:

AMD talking about a long-term plan is different from actually having an example in production. Of course we have to look at all this from a console standpoint, right? For me it is highly unlikely that we will see such a sophisticated design in a console. But that is just me, the typical Colbert, The Party Pooper :)

I had to go back and look at the original diagram, and I hadn't noticed the individual interposers. But didn't the rumors say Sony's design was more sophisticated anyway? It sounds like something Sony would want in a design for the space and power savings.

Ah, that's great. Yes, it's indeed Youngblood, so you just have to update your game and drivers.

What game is it? I'll give it a try myself and see if I can recreate the screenshot.

I've already got Youngblood which already ran at max settings (pre RTX) with 60fps at native 4k so never tried running at a lower resolution + DLSS at the time. Will give it a try later to see how it holds up as haven't tried since the RTX patch. Disappointing game though :(

You don't have to switch your resolution to a lower one. Just stay on your native resolution and then choose one of the DLSS presets (there's Quality, Balanced and Performance). I would suggest starting with Quality.

Don't forget to check that the resolution scaling is off and at 1.00, sometimes it resets itself for a strange reason.

Ah, that's great. Yes, it's indeed Youngblood, so you just have to update your game and drivers.

You don't have to switch your resolution to a lower one. Just stay on your native resolution and then choose one of the DLSS presets (there's Quality, Balanced and Performance). I would suggest starting with Quality.

Don't forget to check that the resolution scaling is off and at 1.00, sometimes it resets itself for a strange reason.

Out of interest do we know what resolution the game is dropping to when DLSS is enabled based on the three different options?


Yes: 66% of native resolution for DLSS Quality, 50% for Performance, and Balanced is somewhere in between.

DF did a pretty good analysis on it too: https://www.eurogamer.net/articles/digitalfoundry-2020-wolfenstein-youngblood-rtx-upgrade-analysis
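To make those percentages concrete, here is a minimal sketch of the internal render resolution for each preset. It assumes the percentage applies to each axis (an assumption; the post does not say whether it means per axis or total pixels), and the function name is made up for illustration. Balanced is left out because no figure was given for it.

```python
def dlss_internal_resolution(out_w, out_h, axis_scale):
    """Internal render size if `axis_scale` is applied to each axis (assumption)."""
    return round(out_w * axis_scale), round(out_h * axis_scale)

# Figures quoted in the post above; Balanced omitted since no number was given.
PRESETS = {"quality": 0.66, "performance": 0.50}

for name, scale in PRESETS.items():
    for out_w, out_h in [(2560, 1440), (3840, 2160)]:
        w, h = dlss_internal_resolution(out_w, out_h, scale)
        share = (w * h) / (out_w * out_h)
        print(f"{name:12s} {out_w}x{out_h} -> {w}x{h} ({share:.0%} of the pixels)")
```

If the scale is per axis, the pixel count drops with the square of the scale, which would explain a large part of the frame rate gain.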


I would opt for sophisticated in implementing capabilities and features rather than sophisticated in manufacturing/producing in that case :)

I had to go back and look at the original diagram, and I hadn't noticed the individual interposers. But didn't the rumors say Sony's design was more sophisticated anyway? It sounds like something Sony would want in a design for the space and power savings.

Edit:

The point is that what we are currently discussing, while exciting, doesn't add any feature or capability, nor do I see it as a requirement for enabling features that are important for running games. I would hope Sony and MS were focused on those capabilities and features instead of a leading-edge stacked design.


That is true, but the example you posted was not that. The interposer below all chiplets was missing; there were only interposers for each chiplet.

Also:

AMD talking about a long-term plan is different from actually having an example in production. Of course we have to look at all this from a console standpoint, right? For me it is highly unlikely that we will see such a sophisticated design in a console. But that is just me, the typical Colbert, The Party Pooper :)

OK Colbert, I'm tired of you (great Late Night Show, BTW). Why is the rumored "Lockhart" only 4 TF (RDNA) and 12 GB of GDDR6? Huh, huh, huh? That is because there is no Series "S"! Lockhart turns your existing Xbox One, One S, and One X into a next-gen machine. Can you Party POOP on that, MR. COLBERT?

Interesting analysis from DF, sounds like DLSS has come on hugely.

Yeah, it is Youngblood.

Yes: 66% of native resolution for DLSS Quality, 50% for Performance, and Balanced is somewhere in between.

DF did a pretty good analysis on it too: https://www.eurogamer.net/articles/digitalfoundry-2020-wolfenstein-youngblood-rtx-upgrade-analysis

I wonder if next gen consoles will have their own type of DLSS implementation? Wouldn't they need their own type of tensor cores in the GPU etc? Will be interesting to see.

I would opt for sophisticated in capabilities and features rather than sophisticated in manufacturing/producing in that case:)

Chiplets should be cheaper to manufacture in theory, as your yields should be much higher than with a monolithic chip. Further, if you are future-proofing your console for a possible PS5 Pro, then rather than designing a new chip you tack on more chiplets.

Maybe I'm misreading it, but it just seems like there are more bonuses to going the chiplet route rather than the traditional monolithic die.

That would be cool. Theoretically, the deep learning model from Wolfenstein's DLSS could also run inference on the regular GPU cores, but it would have a big performance impact. That would kind of defeat the purpose of using it, which is why the tensor cores come in very handy.

Interesting analysis from DF, sounds like DLSS has come on hugely.

I wonder if next gen consoles will have their own type of DLSS implementation? Wouldn't they need their own type of tensor cores in the GPU etc? Will be interesting to see.

I don't know for sure, but I think Control's DLSS was not based on AI. If that's true, something similar could be used in next gen consoles.

But personally I think most games, on Xbox Series X at least, will run in native 4K and more demanding ones will use VRS.

Yes: 66% of native resolution for DLSS Quality, 50% for Performance, and Balanced is somewhere in between.

DF did a pretty good analysis on it too: https://www.eurogamer.net/articles/digitalfoundry-2020-wolfenstein-youngblood-rtx-upgrade-analysis

Can we expect more games to be as good as Wolfenstein DLSS? It makes me want to buy an Nvidia card if I had to choose one now.

Lorul2 is already tired of me 😂

Chiplets should be cheaper to manufacture in theory, as your yields should be much higher than with a monolithic chip. Further, if you are future-proofing your console for a possible PS5 Pro, then rather than designing a new chip you tack on more chiplets.

Maybe I'm misreading it, but it just seems like there are more bonuses to going the chiplet route rather than the traditional monolithic die.

I will do him a favor and formulate my last comment on this:

I think I pointed out where I see the challenges:

3D or 2.5D stacking -> cost & complexity of manufacturing

MCM chiplets -> potentially bottlenecked by IF

Memory on die/MCM -> unresolved cooling for memory specifically

All regarding a console of course.

Lorul2

About party pooping on Lockhart.

your wish is my duty: 💩


No! These things are being bought in BULK!

I don't think y'all understand how that works, as you keep bringing up the CONSUMER price for an individual piece of hardware!

The more you buy (in this case millions), the cheaper it costs, period!

Yeah, new games will be based on the new model. Technically, the Wolfenstein DLSS model is from Deliver Us The Moon, which was the first game to use the new model.

Can we expect more games to be as good as Wolfenstein DLSS? It makes me want to buy an Nvidia card if I had to choose one now.

Bodes very well for Doom and Cyberpunk!

There's a trade-off, because it takes chip area to facilitate communication between the chiplets. On each chiplet you need to set aside area for inter-die communication, so it doesn't come for free. There's a crossover point where chiplets would become cheaper.

Chiplets should be cheaper to manufacture in theory, as your yields should be much higher than with a monolithic chip. Further, if you are future-proofing your console for a possible PS5 Pro, then rather than designing a new chip you tack on more chiplets.

Maybe I'm misreading it, but it just seems like there are more bonuses to going the chiplet route rather than the traditional monolithic die.
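The crossover point mentioned above can be illustrated with a toy cost model. This is only a sketch under stated assumptions: a simple Poisson yield model (yield = exp(-defect density * area)), cost proportional to silicon area, and made-up values for defect density, inter-die link area and packaging cost. None of these numbers come from AMD, Sony or Microsoft.

```python
import math

def cost_per_good_die(area_mm2, defects_per_mm2, cost_per_mm2=1.0):
    """Silicon cost of one working die under a simple Poisson yield model."""
    die_yield = math.exp(-defects_per_mm2 * area_mm2)
    return cost_per_mm2 * area_mm2 / die_yield

def monolithic_cost(total_area, d0):
    return cost_per_good_die(total_area, d0)

def chiplet_cost(total_area, d0, n_chiplets=2, link_area=10.0, packaging=5.0):
    """Split the logic into n chiplets; each pays `link_area` mm^2 for inter-die I/O."""
    per_chiplet = total_area / n_chiplets + link_area
    return n_chiplets * cost_per_good_die(per_chiplet, d0) + packaging

D0 = 0.001  # defects per mm^2 (hypothetical)
for area in (100, 200, 300, 400, 500):
    mono, chip = monolithic_cost(area, D0), chiplet_cost(area, D0)
    winner = "chiplets" if chip < mono else "monolithic"
    print(f"{area:4d} mm^2: monolithic {mono:7.1f}, chiplets {chip:7.1f} -> {winner}")
```

With these made-up inputs the monolithic die is cheaper at 100 and 200 mm^2 and the chiplet design wins from 300 mm^2 upward. Where the crossover really sits depends entirely on the actual defect density, link overhead and packaging cost.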

Just saw Insomniac Games put up an ad for a character artist last week. It said they needed someone for a project that would last about 12 months.

Sounds like launch window or early next year. Not really sure which one of their studios is working on Spider-Man.

As many games as the devs send to Nvidia and as many games as Nvidia can process at once. That's a pretty big bottleneck, but Microsoft has put out DirectML, which could possibly be used to the same effect on any DX-supported GPU.

Can we expect more games to be as good as Wolfenstein DLSS? It makes me want to buy an Nvidia card if I had to choose one now.

They work differently than that. They are one big team with members in two different locations. For projects, they just move members around as necessary. They don't have dedicated teams for each project.

Just saw Insomniac Games put up an ad for a character artist last week. It said they needed someone for a project that would last about 12 months.

Sounds like launch window or early next year. Not really sure which one of their studios is working on Spider-Man.

As for that project you mentioned, I would guess it is Ratchet and Clank. It probably started during Spidey development. I would guess 2017.

I would bet we get another R&C game before we get Spider-Man 2.

Just saw Insomniac Games put up an ad for a character artist last week. It said they needed someone for a project that would last about 12 months.

Sounds like launch window or early next year. Not really sure which one of their studios is working on Spider-Man.

That makes sense. I remember when Price and their team put out that anti-Muslim-ban video and he said they had members there via video call.

They work differently than that. They are one big team with members in two different locations. For projects, they just move members around as necessary. They don't have dedicated teams for each project.

As for that project you mentioned, I would guess it is Ratchet and Clank. It probably started during Spidey development. I would guess 2017.

Anyway, I can't imagine what a next gen Ratchet would look like.

Can we see Insomniac releasing 2 PS5 games in the span of a year? Ratchet and Clank in Holiday 2020 and Spider-Man 2 in Holiday 2021? I know that they are efficient, but that would be unprecedented for them, right?

No! These things are being bought in BULK!

I don't think y'all understand how that works, as you keep bringing up the CONSUMER price for an individual piece of hardware!

The more you buy (in this case millions), the cheaper it costs, period!

Many of us understand bulk pricing. I make purchases for thousands of devices at a time.

I may get a computer with a list price of $900, buy in bulk for thousands and have that drop to $700 a unit. People who keep talking about bulk pricing clearly don't understand it. Most technology margins aren't 90% profit off the hardware. There is a real tangible manufacturing cost and buying in bulk doesn't suddenly mean you can purchase a $1000 GPU for $100. As I have said many times, I believe the consumer equivalent of whatever GPU we get will be $200 for the low end and absolute max a $500 consumer equivalent. More likely in the $300-$400 range equivalent GPU. The chip purchased even in the millions is still going to cost somewhere around $100-$200 per unit in a console.

I'm already convinced.

We know the focus will be on ecosystem and maybe legacy for Sony.

If they let you play a selection of PS1 and PS2 games with PS+ (like Nintendo with Online), would it convince you to buy the console?
