I said wow

Sekiro: Shadows Die Twice. 1080p promotional screenshot. ESRGAN model. 4K downsampled for wallpaper. Cropped, full-scale comparison:

Bicubic resampling:

ESRGAN:

With some interpolation with the PSNR model, I'm sure it could soften some of the harsher edge enhancement on the arm bands, though it's not really apparent when downsampled.
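For reference, the mixing done by the ESRGAN repo's net_interp.py (the script that comes up later in the thread) blends the two checkpoints parameter by parameter as (1 - alpha) * PSNR + alpha * ESRGAN, so a lower alpha leans toward the smoother PSNR-trained model. Below is a minimal, torch-free sketch of that blend, assuming plain lists of floats in place of tensors; the function and parameter names are made up for illustration.

```python
def interpolate_state_dicts(net_psnr, net_esrgan, alpha):
    """Blend two models parameter by parameter.

    alpha = 0.0 returns the PSNR weights, alpha = 1.0 the ESRGAN weights.
    Plain lists of floats stand in for the tensors a real checkpoint holds.
    """
    if net_psnr.keys() != net_esrgan.keys():
        raise ValueError("both models must have the same parameter names")
    net_interp = {}
    for name, v_psnr in net_psnr.items():
        v_esrgan = net_esrgan[name]
        net_interp[name] = [(1 - alpha) * p + alpha * e
                            for p, e in zip(v_psnr, v_esrgan)]
    return net_interp


# Hypothetical one-parameter "models"
psnr = {"conv_first.weight": [0.0, 1.0]}
esrgan = {"conv_first.weight": [1.0, 3.0]}
print(interpolate_state_dicts(psnr, esrgan, 0.5))
```

Because the blend happens on the weights, every alpha produces a new standalone model file rather than a mix of two output images.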

AI Neural Networks being used to generate HQ textures for older games (You can do it yourself!)

- Thread starter vestan

Completely missed this thread, but these results look incredible. With some artifact removal I can finally see PS1 era FF games getting the love they deserve. Will follow with great interest.

Yeah, messed around with the interp and I've got it in a good spot now.

Here's what you asked for btw.

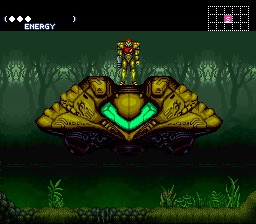

What the hell? What romhack is that???

Here's a Kyrandia screen from earlier in the thread, upscaled using the Manga109 model. WAY better than what we had before:

I tried doing this with dumped Melee textures from Dolphin, but the script gave me type conversion errors. Did you run into anything similar?

Transparency issues in the textures. But man, it's pretty easy to use this.

Can somebody try this with Westwood's Blade Runner videogame, please?

Original res (Source): One | Two | Three

ESRGAN Manga109: One | Two | Three

SMAA + ESRGAN Manga109: One | Two | Three

Tidies up the character outlines a bit, but smudges the background in some places.

Resized the upscaled shots from 2560x1920 to 1440x1080.

-----

Not sure if this has been posted, but there's an article about this thread on DSOG: https://www.dsogaming.com/screensho...ls-of-retro-games-the-results-are-incredible/

I just discovered this thread. Incredible stuff. How computationally intensive is it?

This could be a really great tool for decompression down the line.

Also... I play all my old games on a CRT precisely to see them at native resolution. But this seemingly replicates the look of old games at a higher res with a better-than-nearest-neighbor upscale. It really could be a boon for remasters too. Could someone try some SNK fighting game backgrounds?

wew

Not sure if this has been posted, but there's an article about this thread on DSOG: https://www.dsogaming.com/screensho...ls-of-retro-games-the-results-are-incredible/

reading the comments of that article is certainly something

Now that I've had more time, I've tested ESRGAN, SFTGAN and Waifu2x on a particular Legend of Mana sprite to see how each network performs. On each of them, I magnified the image 4x.

Original Image:

The results can be found here.

My favorite result (ESRGAN trained on Manga109 dataset):

My Observations:

- Before going into this, I expected Waifu2x (urgh, why the name?) to do best because it was trained on illustrations that I think are closest to the image I was trying to upscale.

- However, to my eye, I think the ESRGAN trained on the Manga109 dataset did the best. It had the fewest artifacts and I was very impressed with the result.

- The results from Waifu2x were disappointing - the image just looked as if someone rescaled the original image to 4x using nearest neighbor interpolation and ran a blur filter through it. I tried it on every pre-trained model available and tried combining it with de-noising but frankly everything came out looking very similar to each other.

- The default ESRGAN model did not work so well - lots of artifacts especially in the vine region in the center of the image.

- I ran into a bunch of random issues getting SFTGAN to work. I don't remember all the specifics, but there were times it had trouble detecting CUDA, crashed my PC, used a lot of resources, etc. I didn't really have time to figure out what was going on, so I'm not sure if it's on my end.

- I actually find the artifacts from SFTGAN quite aesthetically pleasing. It looks like oil painting textures.
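The description of the Waifu2x output above suggests a useful baseline to compare against: a 4x nearest-neighbour magnification followed by a blur. A minimal pure-Python sketch for grayscale images stored as lists of rows; the 3x3 box blur is an arbitrary choice for illustration and says nothing about what Waifu2x does internally.

```python
def nearest_neighbor_upscale(img, factor=4):
    """Repeat every pixel factor x factor times (img is a list of rows)."""
    out = []
    for row in img:
        wide = [px for px in row for _ in range(factor)]
        out.extend([list(wide) for _ in range(factor)])
    return out


def box_blur(img):
    """3x3 mean filter; neighbours outside the image are simply skipped."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(vals) / len(vals))
        out.append(row)
    return out


sprite = [[0, 255], [255, 0]]                   # hypothetical 2x2 grayscale sprite
upscaled = nearest_neighbor_upscale(sprite, 4)  # 8x8 blocky result
soft = box_blur(upscaled)                       # roughly the "NN + blur" look
```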

Incredible. Looks damn good.

Beautiful! I am aiming to work with neural networks after finishing my degree, and projects like these are precisely what inspires me. I believe gaming is going to see a new age of innovation with the advent of cloud computing and neural networks/deep-learning algorithms, exciting times!

Some might find it interesting to compare results to the high resolution Blade Runner renders from this article and gallery.

Comparison gif

I don't suppose anyone has completed a REmake mod... I'm about to start it and I'd love to give it a try.

I haven't completed it, but I have all the 2K BGs done with the Manga109 model. I think I'm going to personally train a model on this stuff specifically before I release anything substantial. I also want to try to get some other stuff done, e.g. normal maps, depth maps, etc. for Ishiiruka Dolphin, and I will look into making the mod work with the PC HD release.

Don't use the Manga model on BR. I think the regular 0.8 mix of the two original models would do better with that game.

I've been thinking about using NNs for texture scaling for a while now (since 2015, when I first read about some NN-based SRI research Flipboard was doing). This thread pretty much confirms my thinking that it would be revolutionary.

One of the cool things about this line of research is that these NNs can be used as a form of compression: pack low-res seed textures, a trained NN and a compressed residual and you can regenerate the original, super high resolution texture, while using a fraction of the storage. I've read several proposals along this line to get around data caps and storage media size restrictions.
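A toy 1-D illustration of the "seed + network + residual" idea, assuming integer samples and using linear interpolation as a stand-in for the trained network (a real scheme would use the network's prediction and entropy-code the residual). The round trip is exact as long as the decoder runs the same deterministic upscaler; the savings would come from the residual being mostly small values. All names here are hypothetical.

```python
def upscale(seed, length):
    """Stand-in for the trained network: integer linear interpolation."""
    out = []
    for i in range(length):
        j = i // 2
        if i % 2 == 0 or j + 1 >= len(seed):
            out.append(seed[j])          # sample kept in the seed
        else:
            out.append((seed[j] + seed[j + 1]) // 2)
    return out


def encode(signal):
    """Store a half-resolution seed plus the prediction error."""
    seed = signal[::2]
    residual = [s - u for s, u in zip(signal, upscale(seed, len(signal)))]
    return seed, residual, len(signal)


def decode(seed, residual, length):
    """Regenerate the full signal: prediction plus stored correction."""
    return [u + r for u, r in zip(upscale(seed, length), residual)]


x = [10, 12, 14, 15, 20, 20, 18]
seed, residual, n = encode(x)
```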

Did a test on the entire series of textures in 2 stages of Sonic Adventure (varying between Manga109 and the original 2 models).

A lot of them went miraculously well but some had inherent blurriness or compression artifacts that ESRGAN couldn't make much of a difference for, or ended up with even more artifacts. For any alpha'd textures, I either just gave the ESRGAN ones a quick shop or used waifu2x (UpPhoto).

Each example is before and after, respectively:

On another note, if anyone knows (probably vestan), what particular parts of net_interp.py would you need to modify to interpolate Manga109 with one of the original models?

One of the great things about this whole field exploding is that the tech is advancing absurdly fast. I just finished reading a paper on DNSR, a newer, different method that gets considerably more pleasing HR results. It does so by developing its own internal degradation model from natural LR images in the dataset instead of relying on artificially downsampled images. That's important because it gets around the issue of the network learning the latent structure left by the downsampling algorithm.

The results have fewer artifacts and less of the oversharpened look.
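For contrast, here is the conventional setup the post says DNSR moves away from: every LR training input is synthesised by downsampling an HR image with one fixed kernel. A minimal sketch, assuming grayscale images as lists of rows and a box filter for simplicity (bicubic is the more common choice in practice). Because every LR input carries the same kernel's footprint, that footprint is something the network can end up learning. This is not DNSR itself.

```python
def box_downsample(hr, factor=2):
    """Average each factor x factor block of a grayscale image (list of rows)."""
    h, w = len(hr) // factor, len(hr[0]) // factor
    return [[sum(hr[y * factor + dy][x * factor + dx]
                 for dy in range(factor) for dx in range(factor)) / factor ** 2
             for x in range(w)]
            for y in range(h)]


def make_training_pairs(hr_images, factor=2):
    """Conventional SR pairs: the LR input is derived from its own HR target."""
    return [(box_downsample(hr, factor), hr) for hr in hr_images]


pairs = make_training_pairs([[[1, 1, 3, 3], [1, 1, 3, 3]]])
```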

On another note, if anyone knows (probably vestan), what particular parts of net_interp.py would you need to modify to interpolate Manga109 with one of the original models?

Depending on which model you want to interpolate with, you just need to change the path on line 7 or 8.

For example, to interpolate RRDB_ESRGAN_x4 and Manga109, just change line 7 to read:

Code:

net_PSNR_path = './models/Manga109Attempt.pth'

If you want to do it the other way around, change line 8 to read:

Code:

net_ESRGAN_path = './models/Manga109Attempt.pth'

I've also opted to change line 9 so that my interpolated models don't overwrite the original ones. I've changed it so that they're named 'manga109_interp_xx':

Code:

net_interp_path = './models/manga109_interp_{:02d}.pth'.format(int(alpha*10))

I'm interested to see what Final Fantasy XII looks like through this. I am not a fan of the basic Photoshop upscaling they did for The Zodiac Age.

I wonder if Final Fantasy XV could also benefit from this. I recall some people saying some of its textures were appalling for an 8th-gen game.

Can anybody tell me what game this is?

it's a romhack called Super Metroid Ruin

http://metroidconstruction.com/hack.php?id=337

yeah what alexbull_uk said. just mess around with the net_interp.py a bit

megaman.

Damn. So the only implementation in a game will be texture mods.

If the engine had the trained data, it could be feasible to have it generate a texture cache as it goes, kind of like how some emulators generate shaders at runtime and then use the cached versions later, gradually reaching the point where it no longer has to generate them.
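The caching idea above can be sketched as a lookup keyed by the texture's content: the first request runs the (slow) upscaler, and later requests reuse the stored result. A minimal in-memory sketch; the upscaler passed in is a hypothetical stand-in for a call into a trained model, SHA-256 is an arbitrary choice of key, and persisting the cache to disk between sessions (as emulator shader caches do) is left out.

```python
import hashlib


class UpscaleCache:
    """Generate each upscaled texture once, then serve it from the cache."""

    def __init__(self, upscale_fn):
        self._upscale = upscale_fn   # hypothetical call into a trained model
        self._cache = {}
        self.misses = 0              # how many times the upscaler actually ran

    def get(self, texture_bytes):
        # Key on the texture's content, so identical textures share one entry.
        key = hashlib.sha256(texture_bytes).hexdigest()
        if key not in self._cache:
            self.misses += 1
            self._cache[key] = self._upscale(texture_bytes)
        return self._cache[key]


cache = UpscaleCache(lambda data: data * 2)  # dummy "upscaler" for illustration
cache.get(b"tex")
cache.get(b"tex")                            # second request is a cache hit
```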

Awesome! I'll definitely replay Gothic with the new textures!

this Gothic gif got posted on the Gameupscale subreddit. goddamn

Thanks! I didn't know what I was looking for originally, but taking it apart like this makes it much more understandable.

Going to try testing these tomorrow.

Awesome, thank you!

Not you.

Looking for some help from someone who got ESRGAN working.

I'm at the point of trying to perform the first test under the "Quick test" section on this page: https://github.com/xinntao/ESRGAN

When attempting the following command in the Python CLI:

Code:

git clone https://github.com/xinntao/ESRGAN

I get an "invalid syntax" error.

I also tried bypassing the git clone command above, downloading the ESRGAN package from GitHub, and putting it in my Python directory. In this configuration, I attempted the following command:

Code:

test.py models/RRDB_ESRGAN_x4.pth

I got the same syntax error, even with the RRDB_ESRGAN_x4.pth file saved in the same folder from which I launched the Python executable.

Any advice is greatly appreciated. I think there is a simple error going on here.

instructions

- download the ESRGAN repo

- put it in its own folder somewhere on your PC

- (assuming you've got pytorch, numpy and python installed) open CMD

- enter this command into your cmd window: set path=YOUR PYTHON DIRECTORY (for me it's C:\Python\Python36)

- in cmd, navigate to where your ESRGAN repo is downloaded using cd... (i just put it in the root c drive)

- type in: python net_interp.py 0.9

- make sure there's an image in the LR folder within the ESRGAN repo

- type: python test.py models/interp_09.pth into the cmd window

- the process should start and after a bit your final result should be in the "results" folder found in the ESRGAN folder
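The last few steps can be scripted. A minimal sketch, assuming net_interp.py and test.py take their arguments exactly as in the commands above and write models/interp_XX.pth as described; the helper names are made up. Calling the scripts through sys.executable reuses the interpreter running this file, which sidesteps the PATH step for this part, and cwd= takes care of running from the ESRGAN folder.

```python
import subprocess
import sys


def interp_model_path(alpha):
    """File name the interpolation step produces, e.g. 0.9 -> models/interp_09.pth."""
    return "models/interp_{:02d}.pth".format(int(alpha * 10))


def build_commands(alpha, python=sys.executable):
    """The interpolation and test steps from the list above, as argument lists."""
    return [
        [python, "net_interp.py", str(alpha)],
        [python, "test.py", interp_model_path(alpha)],
    ]


def run(esrgan_dir, alpha):
    """Run both steps from inside the ESRGAN folder; stop on the first failure."""
    for cmd in build_commands(alpha):
        subprocess.run(cmd, cwd=esrgan_dir, check=True)
```

Usage would look like run(r"C:\ESRGAN", 0.9) with an image already in the LR folder. Note that int(alpha * 10) truncates, so an alpha like 0.85 writes the same interp_08.pth as 0.8; sticking to steps of 0.1 avoids overwriting earlier results.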

What error do you get? Could you post a screenshot?

Just checking, but you are actually running it with Python, right? The full command in a normal cmd or PowerShell window should be

Code:

python test.py models/RRDB_ESRGAN_x4.pth

Also make sure that you're running from the correct directory. You can quickly open a command window in any directory by holding Shift and right-clicking inside the folder, and then choosing 'Open PowerShell window here'.

This worked. Thank you very much!

I'm curious about the function of the two interpolation commands. Does the first, python net_interp.py 0.9, set some "interpolation parameter," whereas the latter command, python test.py models/interp_09.pth, generates a super res image by interpolating between the results produced by BOTH the ESRGAN and PSNR models, using the established parameter? Is that right, or am I totally off?

I assume, then, that the commands

Code:

python test.py models/RRDB_ESRGAN_x4.pth

python test.py models/RRDB_PSNR_x4.pth

respectively produce super res images using only the corresponding model.

Vestan set me straight, but thanks for the fast reply!

completely right, although "super res" is all dependent on the original size of the image

yup yup. you can edit the net_interp.py file in a text editor if you want to switch the models with something else like the manga109 one posted earlier in the thread
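One detail worth spelling out about the question above: as far as I can tell from the repo, net_interp.py mixes the two models' weights into a single new network (interp_09.pth), and test.py then runs only that one network, rather than blending two finished images. Because the network is nonlinear, those two approaches don't give the same result. A toy sketch with a made-up two-layer "network" and made-up weights shows the difference.

```python
def toy_net(w1, w2, x):
    """Hypothetical two-layer network: y = w2 * relu(w1 * x)."""
    return w2 * max(0.0, w1 * x)


def blend(a, b, alpha):
    return (1 - alpha) * a + alpha * b


psnr_weights = (1.0, 2.0)     # made-up (w1, w2) for the PSNR model
esrgan_weights = (-1.0, 4.0)  # made-up (w1, w2) for the ESRGAN model
alpha, x = 0.5, 1.0

# Weight interpolation: blend the weights, then run one network.
mixed = tuple(blend(p, e, alpha) for p, e in zip(psnr_weights, esrgan_weights))
weight_interp = toy_net(*mixed, x)

# Output interpolation: run both networks, then blend their outputs.
output_interp = blend(toy_net(*psnr_weights, x), toy_net(*esrgan_weights, x), alpha)

print(weight_interp, output_interp)  # 0.0 1.0
```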

Awesome. I greatly, greatly appreciate the help.

all good :)

Question: could you take a video clip that might be slightly out of focus, export it as PNG frames at 24 frames per second, and run them through to sharpen it?

I'm not an expert and haven't tried, but I'd expect the effect of video compression would probably make this unfeasible, or at least not more useful than just using a sharpening filter in a traditional video editing package.

Can be done, but one problem you'll run into is that the reconstruction won't be stable from frame to frame.

You want a method that takes temporal information (previous and following frames) into account when doing the super-resolution, instead of single-image super-resolution (SISR). I've read about some that were used to resolve security footage with crazy results, even better than SISR, because you have more data to sample from.
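Multi-frame methods like the ones described above differ from SISR mainly in what the network gets as input: a short window of neighbouring frames instead of a single frame. A minimal sketch of building those windows, assuming the frames are already in a list and that edge frames are padded by repetition (one common choice, assumed here); the model that consumes each window is not shown.

```python
def frame_windows(frames, radius=1):
    """Yield each frame with its neighbours: frames t - radius .. t + radius.

    At the start and end of the clip the nearest valid frame is repeated,
    so every window has the same length (2 * radius + 1).
    """
    n = len(frames)
    for t in range(n):
        yield [frames[min(max(j, 0), n - 1)] for j in range(t - radius, t + radius + 1)]


clip = ["f0", "f1", "f2", "f3"]  # stand-ins for decoded PNG frames
for window in frame_windows(clip, radius=1):
    print(window)  # a multi-frame model would take the whole window as input
```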

Someone already did some tests using Virtualdub + some filters

That channel also has some Phantasmagoria examples, NSFW.

Right. Makes sense. Just a random thought I had, thanks!

A guy on Twitter did it for all the FFIX backgrounds (and says they plan to improve/fix them):

Link to: imgur gallery

Some of them have issues, but some are really impressive:

Link to: imgur gallery

Next thing you know they'll be able to detect where a light source is coming from and improve the lighting to modern levels in a retro game.

Some time after they'll be able to auto generate levels that are intelligently and intricately designed from being fed examples of levels in games.

Think of this applied to everything. First improved, then ability to create from scratch. Animations, levels, and then entire fucking games. Just like they're already able to do now with fake celebs and fake photos of any scenario after being trained on sets of those images. But it's not gonna stop there.