MQ-9 Reaper Flies With AI Pod That Sifts Through Huge Sums Of Data To Pick Out Targets

The system is designed to sift quickly through mountains of intelligence data so that only objects of interest are passed to operators over a data link.

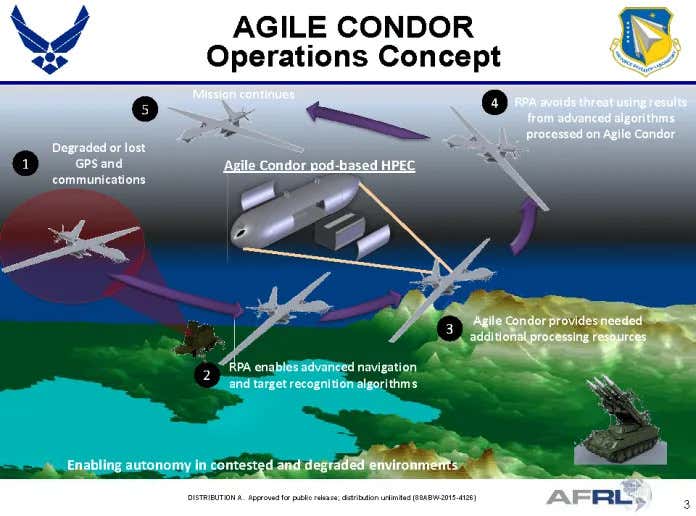

General Atomics says that it has successfully integrated and flight-tested Agile Condor, a podded, artificial intelligence-driven targeting computer, on its MQ-9 Reaper drone as part of a technology demonstration effort for the U.S. Air Force. The system is designed to automatically detect, categorize, and track potential items of interest. It could be an important stepping stone to giving various types of unmanned, as well as manned, aircraft the ability to autonomously identify potential targets and determine which ones might be higher-priority threats, among other capabilities.

"Computing at the edge has tremendous implications for future unmanned systems," GA-ASI President David R. Alexander said in a statement. "GA-ASI is committed to expanding artificial intelligence capabilities on unmanned systems and the Agile Condor capability is proof positive that we can accurately and effectively shorten the observe, orient, decide and act cycle to achieve information superiority. GA-ASI is excited to continue working with AFRL [Air Force Research Laboratory] to advance artificial intelligence technologies that will lead to increased autonomous mission capabilities."

At least at present, the general idea is still to have a human operator in the 'kill chain' making decisions about how to act on such information, including whether or not to initiate a lethal strike. The Air Force has been emphatic about ensuring that there will be an actual person in the loop at all times, no matter how autonomous a drone or other unmanned vehicle may be in the future.

The actual Agile Condor technology inside the pod is designed to be as lightweight as possible and to use modular, open-architecture design elements that make it easy to add new and improved functionality in the future. While it has been flight tested on the Reaper, it's not hard to see how the technology could be applicable to any other unmanned aircraft capable of carrying it, as well as to manned platforms, where the system could assist onboard human sensor operators and analysts. There are already programs in the works to develop AI "copilots" that can provide various kinds of assistance to the crews of manned fixed-wing aircraft and helicopters. The technology could very possibly have naval and ground-based applications as well.

All told, Agile Condor is just another example of a broader push across the U.S. military to leverage AI and machine learning to improve autonomous capabilities across the board. The Air Force in particular has a number of publicly acknowledged autonomous unmanned aircraft programs underway, the most notable of which is Skyborg, an effort to develop a suite of systems able to autonomously operate drones in the loyal wingman role supporting manned aircraft, as well as in fully independent swarms. This technology could even potentially go into a fully autonomous unmanned combat air vehicle (UCAV).

However the use of AI and machine learning grows and evolves within the U.S. military, it is certainly a major area of focus, and systems such as Agile Condor are paving the way for this new era in warfighting.
