So I went dumpster diving into the

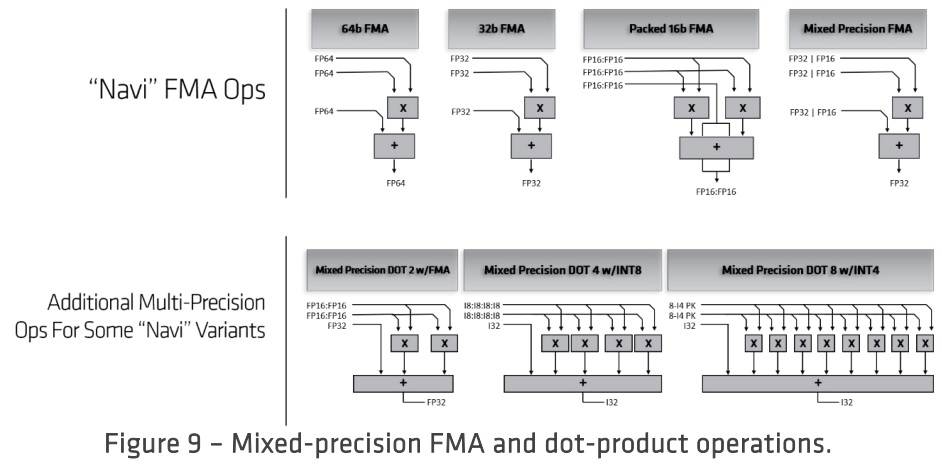

RDNA whitepaper to see mentions of packed integer for INT8, INT4.

There were some relevant quotes on pages 12, 13 and 14.

So if things are similar in RDNA2, there might not be much point in running INT8 just to save space in the ALUs. It's true that Microsoft mentions "we added special hardware support for this specific scenario", but that doesn't necessarily mean it's specific to their iGPU. It could simply mean they weighed in on the change. Keep in mind there's no point in running integer quantization at every stage of inference, but there is a point in running at least FP16.
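For anyone wondering what "packed integer" means in practice, here's a rough sketch of the general idea: four INT8 values sit in one 32-bit word, and a single dot-product operation multiplies the lanes pairwise and accumulates the result. The function names (`pack_int8x4`, `unpack_int8x4`, `dot4_i8`) are made up for illustration, and this is not meant to reproduce the exact semantics of any RDNA instruction.

```python
def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 in the low byte)."""
    assert len(values) == 4 and all(-128 <= v <= 127 for v in values)
    word = 0
    for lane, v in enumerate(values):
        word |= (v & 0xFF) << (8 * lane)
    return word


def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit lanes."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dot4_i8(a_word, b_word, acc=0):
    """Illustrative 4-lane INT8 dot product with accumulate (hypothetical helper)."""
    a_lanes = unpack_int8x4(a_word)
    b_lanes = unpack_int8x4(b_word)
    return acc + sum(x * y for x, y in zip(a_lanes, b_lanes))


a = pack_int8x4([1, -2, 3, -4])
b = pack_int8x4([5, 6, -7, 8])
result = dot4_i8(a, b)  # 1*5 + (-2)*6 + 3*(-7) + (-4)*8
print(result)
```

The point is that one 32-bit register slot carries four INT8 operands instead of one FP32 or two FP16 values, which is where the throughput gain from packed math comes from.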

If anyone is interested in which GDDR6 ICs are used in the Xbox Series X, I looked up Saturday's Digital Foundry teardown of the console and found that Microsoft uses Samsung ICs, at least on this sample.

- XSX uses 10 GDDR6 ICs: six 2GB modules and four 1GB modules, all rated for 14Gbps.

- The "top" 4 ICs are 2GB/16Gb K4ZAF325BM-HC14 modules from Samsung.

- The "left" and "right" ICs are split into two categories: the middle ones are 2GB, while the "bottom" and "top" ones are 1GB K4Z80325BC-HC14 modules from Samsung.

This should confirm the memory layout:

This means the 4 "top" modules closer to the two 4-core CPU CCXs are 2GB modules, and the ones closer to the GPU are a mix of 1GB and 2GB modules, but most of the bandwidth seems to be allocated to the iGPU.
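As a back-of-the-envelope check on that layout, here's a small sketch of the capacity and peak bandwidth math. The chip counts, sizes and 14Gbps rating come from the list above; the 32-bit interface per IC and the idea that the first 1GB of every chip is striped together are my assumptions, not something the teardown shows.

```python
# From the teardown notes: six 2 GB ICs and four 1 GB ICs, 14 Gbps per pin.
PIN_RATE_GBPS = 14
chip_sizes_gb = [2] * 6 + [1] * 4

# Assumption: each GDDR6 IC exposes a 32-bit data interface.
BITS_PER_IC = 32


def bandwidth_gbs(num_ics):
    """Peak bandwidth in GB/s when an address range is striped across num_ics chips."""
    return num_ics * BITS_PER_IC * PIN_RATE_GBPS / 8


total_gb = sum(chip_sizes_gb)
smallest = min(chip_sizes_gb)

# Assumption: the first `smallest` GB of every chip forms one region striped over all ICs.
fast_gb = len(chip_sizes_gb) * smallest
fast_bw = bandwidth_gbs(len(chip_sizes_gb))

# Whatever is left lives only on the larger chips, so it sees fewer ICs in parallel.
slow_ics = sum(1 for size in chip_sizes_gb if size > smallest)
slow_gb = total_gb - fast_gb
slow_bw = bandwidth_gbs(slow_ics)

print(f"total: {total_gb} GB on a {len(chip_sizes_gb) * BITS_PER_IC}-bit bus")
print(f"fast region: {fast_gb} GB at {fast_bw:.0f} GB/s")
print(f"slow region: {slow_gb} GB at {slow_bw:.0f} GB/s")
```

Under those assumptions you get 10GB at 560GB/s and 6GB at 336GB/s, which lines up with the split Microsoft has quoted for the console.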

If people are wondering what might make a nice addition to a Pro refresh in a few years' time, they might switch to faster GDDR6 memory and add new silicon revisions of Zen2 or RDNA2. I don't think Samsung provides a spec sheet for these, but Micron does provide one with further details on clocking.