Without DLSS, Should devs really be chasing Ray Tracing on consoles?

- Thread starter Sems4arsenal

- Start date

Seems premature to be concerned about exactly what trade-offs devs will make. And DLSS is ultimately just a particularly fancy upscaling method, so it's not like devs don't have other options there (which have already been employed this gen, even if inferior in results to DLSS).

The Tensor Cores also do denoising which is what lets a 2060 get away with using 5 gigarays/sec for 1080p60. 40 samples per pixel is going to be noisy af and the denoising makes it look a LOT better.
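As a rough check on the "40 per pixel" figure, the peak ray rate quoted above can be divided by the number of pixels drawn per second. This is only a back-of-the-envelope sketch: it treats 5 gigarays/sec as a sustained rate, which is an assumption, and the function name is made up for illustration.

```python
def rays_per_pixel(rays_per_second, width, height, fps):
    """Rays available for each pixel of each frame at a given peak ray rate."""
    return rays_per_second / (width * height * fps)

# Figures from the post: 5 gigarays/sec on a 2060, 1080p at 60 fps.
budget_1080p60 = rays_per_pixel(5e9, 1920, 1080, 60)
# Same card at 4K60: four times the pixels, so a quarter of the budget.
budget_4k60 = rays_per_pixel(5e9, 3840, 2160, 60)

print(round(budget_1080p60, 1), round(budget_4k60, 1))
```

So the 40 is really rays per pixel per frame, and at 4K60 the same card has about 10, which is why denoising and lower-resolution effects come up so often in this thread.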

Umm no? Unless you're suggesting the tensor cores have been sitting there unused until March of this year.

They ditched the per game AI training cause it seemed to be pointless. Which bodes well for AMD.

There's definitely a time vs performance trade-off here. You can let your designers take the time to bake all the GI lighting into an environment and painstakingly produce and align cube-maps to achieve great reflections a la TLoU2, or you can adopt real-time ray-tracing implementations and probably suffer a drop in performance. Each approach has its pros and cons, but leaning on ray-tracing definitely leads to shorter development time up front and possibly more time optimizing performance on the tail end of production.

This.

It's annoying that devs are wasting their GPU budget on RTX.

Do you have any examples?

There is no inherent performance cost to ray-tracing. You can use it in ways that won't hit performance any more than the techniques it replaces visually.

You might be interested in checking out Cryengine's software-based RT implementation. When RT-enabled objects are close to the viewer/camera, ray-tracing implementations of popular effects such as reflections are used; as the camera moves farther away, other less accurate techniques like SSR are used. Imagine it like LoD transitions except for lighting effects.

I wonder if raytracing will eventually become the new "pop-in" on console versions. Like you move 5ft away from something and it switches from raytracing to a cube map or something.
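The LoD-style switch described here could be sketched roughly like this. It is not Cryengine's actual code: the distances, the function names and the linear fade are all assumptions, with the fade band being one plausible way to avoid the hard "pop-in" switch.

```python
def rt_blend_weight(distance, rt_full=10.0, rt_none=15.0):
    """1.0 means fully ray-traced reflections, 0.0 means SSR only.

    rt_full and rt_none are hypothetical distances in metres: inside
    rt_full everything is ray traced, beyond rt_none nothing is, and
    the weight fades linearly in between.
    """
    if distance <= rt_full:
        return 1.0
    if distance >= rt_none:
        return 0.0
    return (rt_none - distance) / (rt_none - rt_full)


def blend_reflection(rt_color, ssr_color, distance):
    """Mix the two reflection results for one pixel by camera distance."""
    w = rt_blend_weight(distance)
    return tuple(w * r + (1.0 - w) * s for r, s in zip(rt_color, ssr_color))


# Halfway through the fade band the two techniques contribute equally.
print(blend_reflection((1.0, 1.0, 1.0), (0.0, 0.0, 0.0), 12.5))
```

A real engine would also skip tracing rays entirely once the weight reaches zero, which is where the performance saving comes from.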

The point is that you have dedicated hardware to offload the work to. Even if you have to render everything in series, there are plenty of pipelining solutions (e.g. work queues) that you can make use of that'll take advantage of the extra shader core budget.

The DLSS 2.0 process occurs in series with the rest of the rendering pipeline, not in parallel. The dedicated hardware doesn't allow you to simultaneously use other resources elsewhere. The GPU processes the scene, the tensor cores do the upscaling work, then the GPU does its post work.

MS' solution will have the same workflow, but will be a bit slower.

This post details quite a few of the things to take into consideration when balancing performance vs quality with regards to ray-tracing.

Like anything in real-time rendering, it's all about compromise to reach what appears to be a better visual output; modern-day ray-tracing is no exception.

Ray-tracing as we see it in games is true ray-tracing like what we see in path-traced renderers like V-Ray, it's calculating lights hitting surfaces according to how it happens in the real world based off of physical values, but even my RTX 2080 Ti doesn't have the power to do this at an unlimited amount of samples or bounce rate.

Typically ray-traced reflections for a real-time renderer like Unreal Engine 4 would only do a ray tracing calculation for a pixel if the material of the surface had a roughness of something like 0.7 or lower; rougher pixels would fall back to screen space reflections or prebaked reflection probes. Another way to improve speed would be to test whether the pixel's reflection is already present in the screen space reflection map, and use the SSR result instead of calculating an RT reflection for that pixel.

Now let's presume that we need to do an RT calculation for that pixel: how many reflection bounces should we do if the surface we hit in the reflection also has a roughness value lower than 0.7? The more bounces we need to do, the more compute power we need.
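The per-pixel decision described above might look something like the sketch below. It assumes the roughness cutoff works as a maximum (mirror-like surfaces trace rays, rough ones fall back), which is how a max-roughness style setting behaves; the 0.7 value, the enum and the function name are illustrative, not taken from any engine.

```python
from enum import Enum


class ReflectionSource(Enum):
    RAY_TRACED = "ray_traced"
    SCREEN_SPACE = "screen_space"
    PROBE = "probe"


def choose_reflection_source(roughness, ssr_hit, max_rt_roughness=0.7):
    """Pick the cheapest acceptable reflection technique for one pixel.

    ssr_hit: True if the reflected point is already visible on screen,
             so the screen space result can simply be reused.
    max_rt_roughness: hypothetical cutoff; surfaces rougher than this
             blur their reflections enough that a probe is good enough.
    """
    if ssr_hit:
        return ReflectionSource.SCREEN_SPACE
    if roughness <= max_rt_roughness:
        return ReflectionSource.RAY_TRACED
    return ReflectionSource.PROBE


print(choose_reflection_source(0.2, ssr_hit=False))
```

The same test can be repeated at every bounce, so a ray that lands on a rough surface stops there instead of spawning another reflection ray.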

Now it's also really computationally heavy to do this for every pixel on screen, so what if we only calculate a limited amount of RT pixels every frame and temporally reconstruct them over 3-5 frames to make it even faster at the cost of ghosting artifacts if the viewport changes significantly?
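A toy version of that temporal idea: trace only one pixel in every `cycle` each frame, rotate which ones, and keep the last result for the rest. The interleaving pattern and the names here are assumptions for illustration; real implementations also reproject the history when the camera moves.

```python
def pixels_to_trace(width, height, frame_index, cycle=4):
    """Pixels that get a fresh ray-traced sample this frame."""
    phase = frame_index % cycle
    return [(x, y) for y in range(height) for x in range(width)
            if (x + y) % cycle == phase]


def accumulate(width, height, frames, trace, cycle=4):
    """Run several frames, storing (value, frame it was traced in) per pixel."""
    history = {}
    for frame_index in range(frames):
        for x, y in pixels_to_trace(width, height, frame_index, cycle):
            history[(x, y)] = (trace(x, y, frame_index), frame_index)
    return history


# An 8x8 image with a dummy trace function: each frame traces a quarter
# of the pixels, and the image is only complete after four frames.
history = accumulate(8, 8, frames=4, trace=lambda x, y, f: 1.0)
print(len(pixels_to_trace(8, 8, 0)), len(history))
```

The stored frame index shows where the ghosting comes from: after four frames, some pixels hold a result that is already three frames old.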

However developers choose to implement RT features, it will always be about compromising until they reach the best performance to visual output ratio. Upscaling algorithms like DLSS are just another way of compromising, and RT can be implemented in many, many ways.

Personally, I would be more inclined to maximize the number of bounces to produce more accurate lighting. To try to keep performance cost low, you would want to perform this at a much lower resolution than native 4K and then make use of an image-upscaling solution like DLSS.

If the cost of bounces is too expensive, then you would probably want to look at image-denoising solutions to remedy the loss in accuracy of lighting data gathered from fewer bounces. The suggestion of using a temporal approach is pretty neat to think about, too.

Another approach is to try to minimize the cost of testing for ray-triangle intersections; maybe there are ways to further minimize the number of objects to take into account for on-the-fly construction of BVHs. Tree-traversal is incredibly fast, but there may be faster ways of pruning branches of the tree from the get-go.
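For anyone unfamiliar with how a BVH prunes work, here is a minimal sketch: a ray is tested against a node's bounding box first (the standard slab test), and the whole subtree is skipped if it misses. The `Node` layout and the triangle ids are made up for the example; hardware and engine BVHs are far more compact.

```python
import math
from dataclasses import dataclass, field


def ray_hits_aabb(origin, direction, box_min, box_max):
    """Slab test: does a ray starting at origin hit the axis-aligned box?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: must start between them.
            if o < lo or o > hi:
                return False
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False
    return True


@dataclass
class Node:
    box_min: tuple
    box_max: tuple
    children: list = field(default_factory=list)
    triangles: list = field(default_factory=list)  # leaf payload (ids only)


def candidate_triangles(node, origin, direction):
    """Collect triangles whose enclosing boxes the ray actually passes through."""
    if not ray_hits_aabb(origin, direction, node.box_min, node.box_max):
        return []  # the entire branch is pruned here
    found = list(node.triangles)
    for child in node.children:
        found.extend(candidate_triangles(child, origin, direction))
    return found


left = Node((-4, -1, -1), (-2, 1, 1), triangles=["a"])
right = Node((2, -1, -1), (4, 1, 1), triangles=["b"])
root = Node((-4, -1, -1), (4, 1, 1), children=[left, right])
print(candidate_triangles(root, (3, 0, -5), (0, 0, 1)))
```

Only the triangles returned here need the expensive ray-triangle test, so anything that shrinks or skips boxes earlier reduces that cost.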

Yes, even version 1.9 from Control. It wasn't until DLSS 2.0 that Nvidia started using the tensor cores. Which also means the DLSS algorithm can in theory work on all hardware. At least the old one, though it only does a half-assed job.

It's good that they are experimenting and trying to put ray tracing in games; that is the only way to find ways to optimize the tech and find the best methods to implement it visually. Companies care more about a game being marketable in pictures and in trailers than about a buzzword, so I think they will not make bad use of ray tracing.

You brush off the basic point that those techniques are not as good as DLSS 2 and a software solution will not be as good as one which involves dedicated hardware.

The ray tracing is discrete fixed-function hardware. The unit will put out 5 gigarays/sec on a 2060. If there are fewer rays than the frame time allows, there won't be a performance penalty.

RT shadows run on some very particular light sources and for some very specific geometry in SoTTR and CODMW result in something which would actually have been slower to render with shadow maps at similar quality.

Smaller-scale usage of RT for shadows is the most obvious option where RT may actually end up being faster for the resulting quality than rasterization approaches.

Then there are some research-in-progress GI approaches where RT is used to accumulate lighting calculations over several frames, meaning that you get your real-time RTGI with some temporal artifacting, but you free up the shading units from this work to do other stuff instead of doing GI for you.

I'm sure there will be a lot of use cases for RT where using RT will actually help with performance which devs will discover over the next years.

No, but there's a silicon space cost, hence a general GPU performance and wallet cost to it. I'd buy a cheaper 2080 equivalent without RT any day, or for the same cost get one with 2x the 3D performance and no RT.

Ratchet is native 4k 30 fps with ray traced reflections.

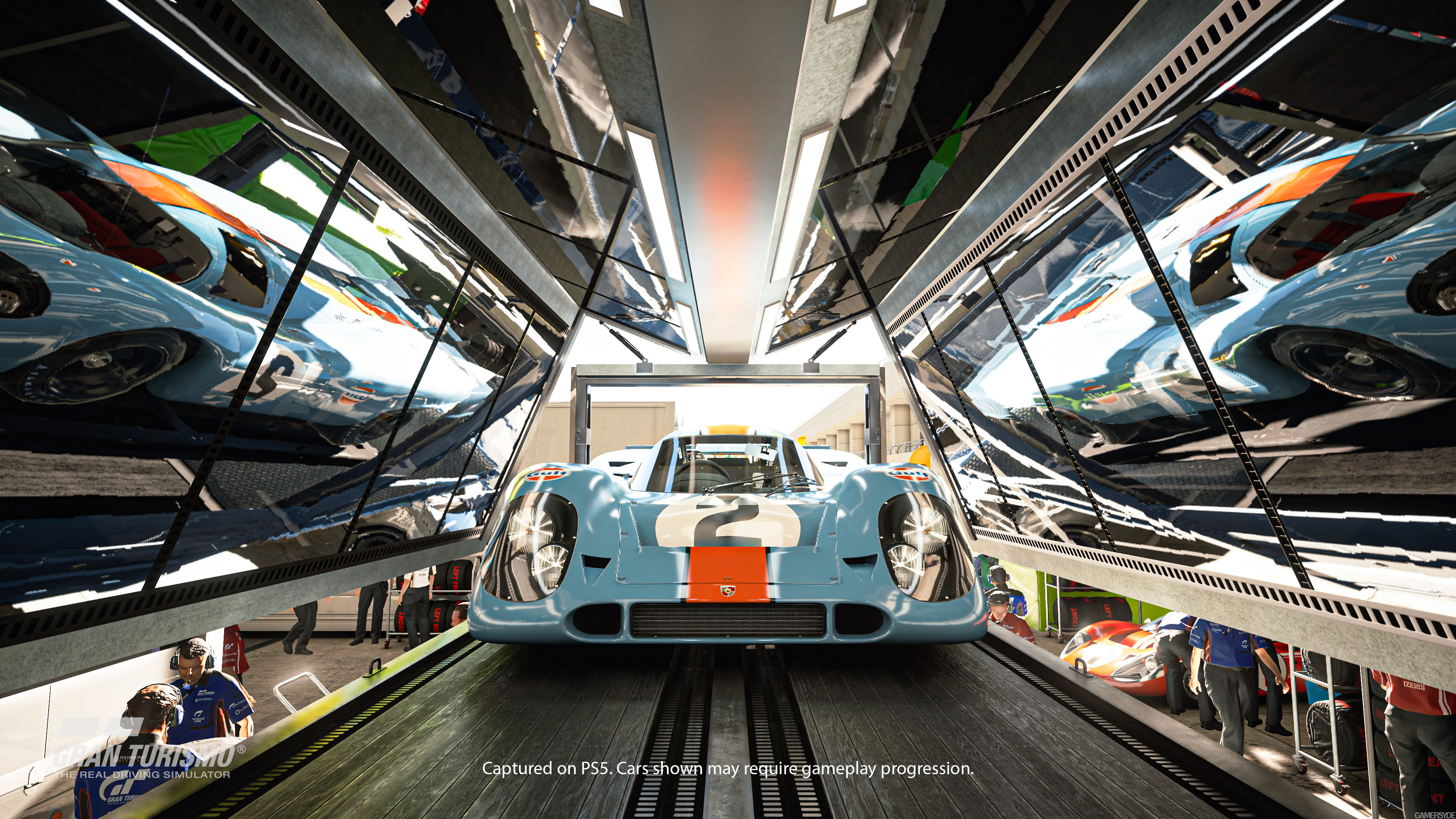

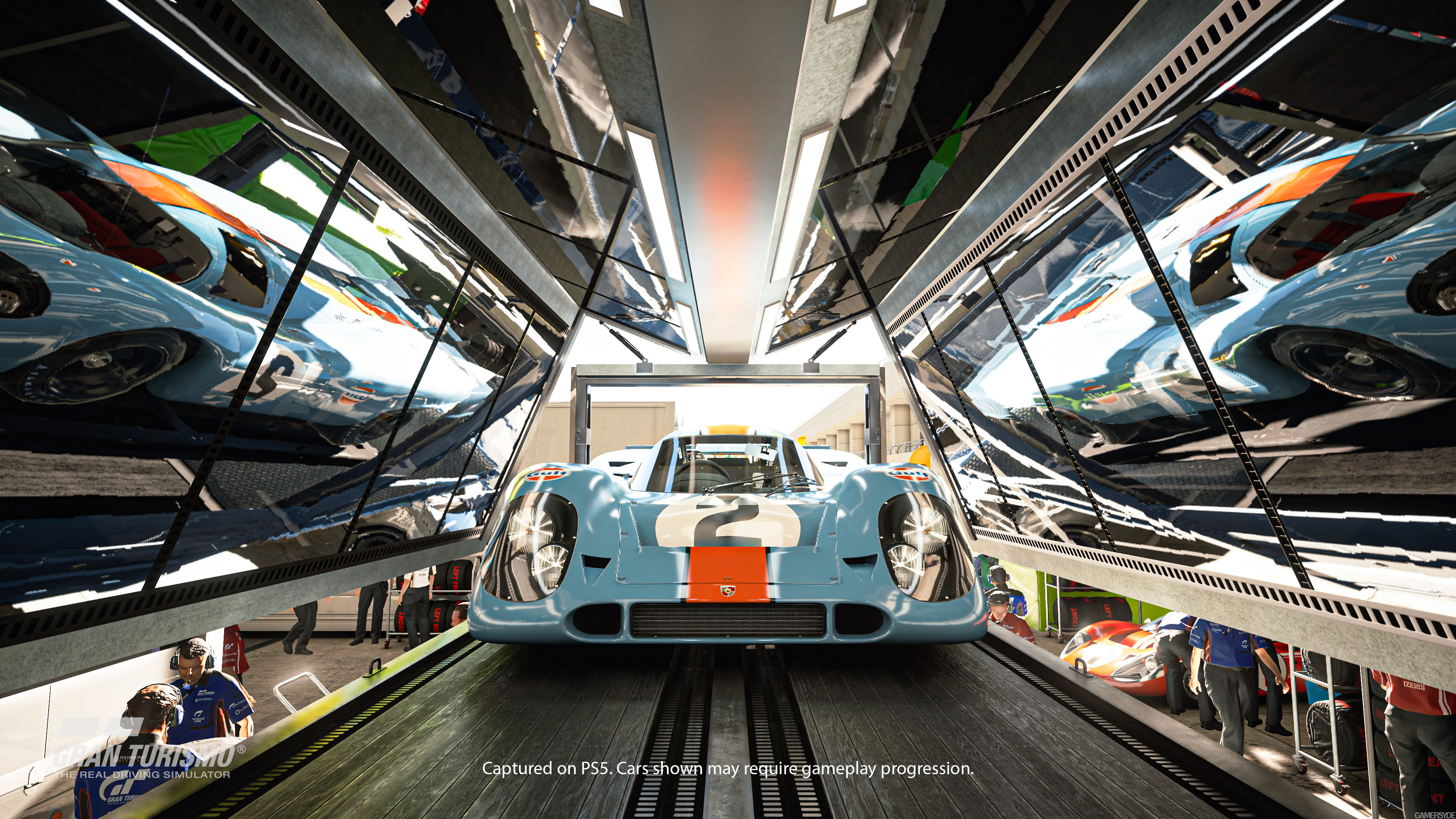

GT7 is native 4k 60 fps with ray traced reflections.

Demon's Souls is native 4k 30 fps with ray traced shadows.

Spider-Man is native 4k 30 fps with ray traced reflections.

Horizon is native 4k 30 fps with no ray traced features as far as we know.

I think as long as they are smart about ray traced features and build the game around them, it should be fine. It's a valid concern though.

Right but I thought MS mentioned for RDNA2 they have INT16/8 packed?

Doesn't the console RDNA2 have most likely half of that of a 2060? That is not good

Yeah, about half of the ML performance of a 2060. So DLSS on an XSX would cost around 5.5 to 6 milliseconds of compute time if it scaled to RDNA 2 the way it scales across Turing.
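To put those milliseconds in context, here is a small sketch of the arithmetic. It assumes the upscaling cost scales inversely with ML throughput, which is the post's premise rather than a measured fact; the 2.75 ms reference figure is simply back-calculated from the post's 5.5 ms at half the throughput, and the function names are made up.

```python
def scaled_cost_ms(reference_cost_ms, relative_throughput):
    """Cost on other hardware if time scales inversely with throughput."""
    return reference_cost_ms / relative_throughput


def budget_share(cost_ms, fps):
    """Fraction of one frame's time budget taken up by a fixed cost."""
    return cost_ms / (1000.0 / fps)


# Half the throughput doubles the cost (2.75 ms is implied, not measured).
xsx_cost = scaled_cost_ms(2.75, 0.5)

# Share of the frame at 60 fps (16.7 ms) and 30 fps (33.3 ms).
print(xsx_cost, round(budget_share(xsx_cost, 60), 2), round(budget_share(6.0, 30), 2))
```

Under those assumptions the upscale would eat about a third of a 60 fps frame, but under a fifth of a 30 fps frame, so whether it pays off depends on how much render time the lower internal resolution saves.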

Silicon size has a measly effect on retail pricing of products. Case in point: AMD's 5000 series, which are sold at the same prices as Turing while being 2X smaller and not having any RT h/w.

You would also likely get some +5% or so of performance by removing RT h/w from Turing while losing about 2-6X of performance in ray tracing. Seems like a really stupid exchange to me.

That's not how DLSS works. The GPU processes the scene at a reduced resolution, then idles while the tensor cores upscale, then the tensor cores idle while the GPU post-processes.

The benefit of the separate hardware isn't that you can use the shader cores for other tasks during the downtime. It's that you can fit more hardware for integer math than the CU alone would allow. That means faster upscaling, which leaves more frame time for rendering processes.

My apologies. I've spent a lot of time working on CPU design and figured work queues could easily be applied to GPUs. Usually, idling hardware is something to be avoided when there's work to be done so the goal is to maximize throughput at each stage in the processor pipeline. Why isn't this the case for GPUs?

You're right, the benefit of extra hardware isn't only utilizing freed up resources for other tasks. Someone else also pointed out that tensor cores are specialized for matrix multiplication -- something used heavily in machine learning models.

I think we'll be seeing relatively limited and/or low-quality RT implementations on console games in order to keep the performance penalty low, but even then I don't think the tradeoffs are worth it.

When the cpu can essentially stream data off the ssd in real time, at ultra high data rates, it's no longer necessary to buffer all of the graphics assets into memory for an entire level or area. It's only necessary to load assets for the narrow slice of what is being depicted on screen at that moment. Ray tracing is going to benefit in exactly the same way from next gen system architecture because the I/O bottleneck has been removed.

lol I'll take hot takes for 100. You could make the case that raytracing needs the whole level/area loaded into memory but I don't even think it's worth explaining why that might be to you.

When you're saving cpu cycles and memory by selectively loading assets through the use of fast storage and data plane among other things, you free the system up for other types of computation that would otherwise be used for other stuff. You don't need to offload ray tracing computation to some cloud AI system that introduces a whole bunch of latency if you can do it just fine locally.

DF already confirmed that the reflections in GT7 are 1080p despite the main game being native 4k. They might even be checkerboarded to 1080p. Console devs are used to having limited resources and tend to come up with pretty decent optimizations like this.

Yeah, that's what I meant when I said relatively limited (one, maybe two RT features per game) and low-quality (lower resolution).
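For a sense of how much the lower reflection resolution saves, here is the pixel-count arithmetic. It assumes "checkerboarded to 1080p" means only half of the 1080p pixels are actually traced each frame and the rest are reconstructed; that reading, and the function name, are assumptions.

```python
def pixel_count(width, height, checkerboard=False):
    """Pixels shaded per frame; checkerboarding shades every other pixel."""
    total = width * height
    return total // 2 if checkerboard else total


native_4k = pixel_count(3840, 2160)
reflections_1080p = pixel_count(1920, 1080)
reflections_cb = pixel_count(1920, 1080, checkerboard=True)

print(reflections_1080p / native_4k, reflections_cb / native_4k)
```

So 1080p reflections trace a quarter of the pixels of the native 4K image, and a checkerboarded version an eighth, before any other shortcuts are applied.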

Some more reflections in GT. In the official topic, there was discussion about whether it was ray traced or some kind of hybrid, but the driver showing up in the rearview mirror was definitely not in GT Sport, so it doesn't really matter if it's fully ray traced because they are able to do things they couldn't do before.

Switch the next gif to HD to see how awesome the reflections look on the helmet in 60 fps. I am fine with 1080p checkerboarded reflections like this tbh.

This isn't the case with tensor units specifically, as they are using the same local storage and register bandwidth as the main SIMDs, meaning that they can't operate in parallel, at least in Turing. The win is still there though, as tensors can be up to 20X faster than main SIMDs on matrix math tasks.

The hell did raytracing in a cloud ai system come from, do you need them for your data plane to fly through?

Is doing the AI work in parallel a potential option for Ampere to save even more performance?

I'm not going in expecting much more than what GT7 is doing. RT reflections rendered at a lower, possibly reconstructed, resolution along with plenty of less immediately striking effects. That's enough for me, the benefits for devs and players are gonna be huge even with the first round of console RT adoption.

Does AMD have a DLSS equivalent on PC now, or one in the works that might be available on the upcoming consoles? I'd take DLSS any day over raytracing if there are no mechanics tied to it. DLSS is probably the one thing that truly excites me.

Microsoft's DirectML is the next-generation game-changer that nobody's talking about

AI has the power to revolutionise gaming, and DirectML is how Microsoft plans to exploit it

Theoretically yes, but it's hard to say if such parallel execution of *something* on both SIMDs and tensors often appears in practice.

GA100, for example, tends to use tensors for the majority of the work it is supposed to be doing, so the fact that they can't run in parallel with main SIMDs means little for performance.

Gaming Ampere is unlikely to be different - the cost of providing enough storage and bandwidth for parallel operation of two streaming processors would likely be way higher than the performance gain you'd get in - what? one gaming application for tensor cores in form of DLSS?

But we'll see soon I guess.

What exactly do you think DLSS is lol

Not raytracing running in the cloud.

All that raytracing in death stranding looks marvelous btw.

/s

Maybe in the future, devs will optimize the tech to be viable, but take Watch Dogs Legion, for example: it mostly looked like a current gen game (albeit a very good looking current gen game), but the cars and windows reflect stuff better.

Watch Dogs Legion IS a current gen game, isn't it?