I'm going with an 85 inch X900H. I won't go with an OLED until the image retention issue is 100% solved

Damn, they must be paying you moderators good!

> Get a TV that supports 120Hz. Ori in 120Hz is a sight to behold ;)

I'm sure it looks gorgeous! Kind of pissed I bought an OLED right before 120Hz panels became a thing. Not sure I can justify an upgrade right now.

Can you believe this flex?

> I'm sure it looks gorgeous! Kind of pissed I bought an OLED right before 120Hz panels became a thing. Not sure I can justify an upgrade right now.

Which OLED do you have?

Ha! No. No no no. I'm some sort of weird masochist who spends his free time doing this. No, I usually buy a TV once every five years, so I go big for it. I'm replacing a 70 inch 2015 Vizio.

Then according to the internet you're in luck: you can play any game at 1080p 120Hz, which I think will be possible with Ori?

> Then according to the internet you're in luck: you can play any game at 1080p 120Hz, which I think will be possible with Ori?

Interesting! I wonder if the resolution dip will be worth it. Also, from a quick Google search, it would no longer be in HDR, so I'd have to weigh that up.

Pretty much. I love the tech and the colors, but I do a lot of work on my TV from my comfy couch, so static images all day. I ain't risking that

This thread is yikes. Everyone blindly yapping "OLED OLED" over and over again, as if this is the only TV in town and as if everyone has thousands to spend on this expensive tech.

OP, go with 65" Q70R. Not only will you have money left, but a bigger screen size will be far more impactful than a 55" OLED.

Also, OLED's black levels aren't that important for gaming, because on average games are far brighter than movies. I would argue that QLED's peak brightness is far more important for gaming than OLED's black levels, especially if you aren't gaming in a pitch black room setting.

> This thread is yikes. Everyone blindly yapping "OLED OLED" over and over again, as if this is the only TV in town and as if everyone has thousands to spend on this expensive tech.
>
> OP, go with 65" Q70R. Not only will you have money left, but a bigger screen size will be far more impactful than a 55" OLED.
>
> Also, OLED's black levels aren't that important for gaming, because on average games are far brighter than movies. I would argue that QLED's peak brightness is far more important for gaming than OLED's black levels, especially if you aren't gaming in a pitch black room setting.

Yeah. OLED is definitely better in many ways, but it's not like there aren't many really good alternatives available. If the main use is gaming, I don't see how some higher-end QLEDs like the Q80T/Q90T or Sony's XH90 (X900H) would be worse. The black levels aren't bad in any way, and those might even be a better choice for basically the majority of people.

> VRR doesn't help with microstutters or sudden hitches. What it can help with is games running at 30-45 or 45-60 fps. But this must be targeted by a game to work, and with most users not having VRR-capable displays I doubt that many will.

Developers do not have to do anything to make VRR work. It's a system-level feature that happens automatically.

> What's the consensus on Philips TVs these days? Was the other day at a mall and saw they make OLED TVs as well, not sure how good or bad they may be

Philips have been a leader in introducing several display technologies such as motion interpolation - they even had CRT televisions with their Digital Natural Motion feature.

> If you'd have asked me 6 months ago, I'd have said go for it. I've got a 55" 803 OLED in my living room, and it's been fantastic. Unfortunately it's developed a fault with displaying HDR content, and they're refusing to accept that it's an issue.
>
> EvilBoris - would you concur that this behaviour is not "Just how OLED is"? :P

That looks like posterization, which is not "just how OLED is" but likely just how that TV's tone mapping/HDR processing is, rather than a fault.

> This thread is yikes. Everyone blindly yapping "OLED OLED" over and over again, as if this is the only TV in town and as if everyone has thousands to spend on this expensive tech.
>
> OP, go with 65" Q70R. Not only will you have money left, but a bigger screen size will be far more impactful than a 55" OLED.

This is not an HDMI 2.1 display, so a bad recommendation for anyone buying a TV specifically for next-gen consoles.

> I'm edging towards the CX 48 inch but I'm hoping the Sony 48 inch is HDMI 2.1, but I don't think it is.

The Japanese site for the A9S says that it only supports 4K60 on the spec page - which would mean HDMI 2.0b.

Developers do not have to do anything to make VRR work. It's a system-level feature that happens automatically.

This is why G-Sync works with games that were released 20 years prior to it, with no modifications.

If a game is built for 30 FPS and it drops to 25 FPS when things get busy, the display will sync to that automatically.

Same thing if it's built for 60 FPS and were to drop to 50 FPS. In that case it's likely to remain smooth enough that most people won't even notice the drop, while you would have obvious stuttering on a non-VRR 60Hz display.
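To put rough numbers on the 60 FPS to 50 FPS case, here is a minimal sketch of how long each frame stays on screen with and without VRR. It is a toy model, not how any console or TV actually schedules frames: `displayed_durations` is a made-up helper, and it assumes rendering is never stalled waiting for the display (roughly the triple-buffered case) and that the frame rate stays inside the display's VRR range.

```python
import math

def displayed_durations(frame_times_ms, refresh_hz=60.0, vrr=False):
    """Return how long each frame stays on screen, in milliseconds.

    Toy model (an assumption, not a real display pipeline):
    - fixed refresh + V-Sync: a finished frame waits for the next refresh tick
    - VRR: the display refreshes as soon as the frame is ready
    """
    tick = 1000.0 / refresh_hz
    ready = 0.0
    shown_at = []
    for frame_time in frame_times_ms:
        ready += frame_time
        if vrr:
            shown_at.append(ready)
        else:
            # Round up to the next refresh boundary (epsilon guards float error).
            shown_at.append(math.ceil(ready / tick - 1e-9) * tick)
    # Time between consecutive presentations = how long each frame was visible.
    return [round(b - a, 1) for a, b in zip(shown_at, shown_at[1:])]

# A steady 50 FPS (20 ms per frame) on a 60Hz panel vs. a VRR panel.
print(displayed_durations([20.0] * 6))            # [16.7, 16.7, 16.7, 16.7, 33.3]
print(displayed_durations([20.0] * 6, vrr=True))  # [20.0, 20.0, 20.0, 20.0, 20.0]
```

In this model the fixed 60Hz display has to hold one frame for two refreshes every so often, which is the stutter described above, while the VRR display shows every frame for the same 20 ms.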

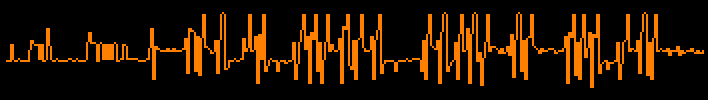

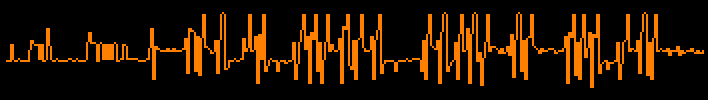

If the frame rate is dropping because of a CPU or I/O bottleneck, then it is likely to stutter due to a spiky frame-time graph like this:

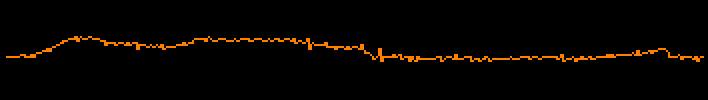

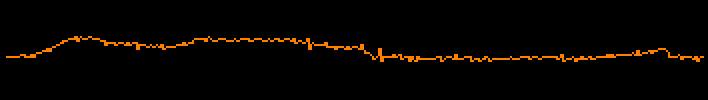

If the frame rate is dropping due to a GPU bottleneck - which is most common - frame drops are generally smooth, producing a frame-time graph like this:

It doesn't matter what the frame rate is, only that it's a smooth transition.

Where developers may have to intervene is if they also want to unlock the frame rate.

If a game is built for 30 FPS there are two main ways you can achieve that: via V-Sync, or via a frame rate limiter.

In the latter scenario, the developer would have to step in if they wanted to enable frame rates above 30 FPS when using VRR, but they don't have to do anything for VRR to work below 30 FPS.

- If it's locked to 30 FPS by V-Sync, it's possible that enabling VRR could automatically remove or double that limit at the system level.

- If it's locked to 30 FPS by a frame rate limiter, even disabling V-Sync entirely would not remove that limit - since it's a part of the game itself.

The developer might want to set an upper limit of 40 FPS, so that the game now fluctuates between 25–40 FPS rather than 25–30 FPS, since leaving it totally unlocked might run at 25–90 FPS and that difference is just too great.

Or the engine might have CPU-related stuttering above 50 FPS on that specific console, so they want to keep the limit below the point where that occurs.
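As a rough illustration of what a frame rate limiter with a raised cap does, here is a small sketch. Both helper names are invented for this example, and a real engine's limiter would be more involved; the 25/90/40 FPS figures are just the ones from the example above.

```python
def capped_range(uncapped_min_fps, uncapped_max_fps, cap_fps):
    """Frame-rate range the player would see once a limiter caps the top end."""
    return min(uncapped_min_fps, cap_fps), min(uncapped_max_fps, cap_fps)

def limiter_sleep_ms(render_ms, cap_fps):
    """How long a simple limiter would idle after a frame to respect the cap.

    If the frame already took longer than the budget, it does not wait at all,
    which is why a limiter never gets in the way of drops below the cap.
    """
    budget_ms = 1000.0 / cap_fps
    return max(0.0, budget_ms - render_ms)

print(capped_range(25, 90, 40))      # (25, 40)
print(limiter_sleep_ms(40.0, 40))    # 0.0 -> a 25 FPS frame is not delayed further
```

The point of the cap is only to narrow the swing: the low end is untouched, and VRR still handles whatever the game delivers below the limit.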

On top of that, even if a game were to be completely locked to 30 FPS and never drop a frame, you still have the latency benefit of V-Sync no longer being engaged when VRR is used.

A 30 FPS game running on a VRR display should have at least one frame lower latency than the same game running on a non-VRR display.
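For a sense of scale, "one frame" of latency is just the frame time at that frame rate. The snippet below only does that arithmetic; the "at least one frame" figure itself is the claim made above, not something this code demonstrates.

```python
def frame_time_ms(fps):
    """Duration of a single frame at a given frame rate, in milliseconds."""
    return 1000.0 / fps

for fps in (30, 60, 120):
    print(f"{fps} FPS -> one frame = {frame_time_ms(fps):.1f} ms")
# 30 FPS -> one frame = 33.3 ms
# 60 FPS -> one frame = 16.7 ms
# 120 FPS -> one frame = 8.3 ms
```

So for a 30 FPS game, one frame less latency would be on the order of 33 ms.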

Philips have been a leader in introducing several display technologies such as motion interpolation - they even had CRT televisions with their Digital Natural Motion feature.

But they also had a reputation for building unreliable displays for decades - though I believe they are now manufactured by a completely different company.

The main reason I see to choose a Philips OLED over others is the Ambilight feature, but none of their 2020 models include HDMI 2.1 support - so I would not recommend any of them to pair with a next-gen console.

If you really want the Ambilight feature, I would suggest waiting for 2021 displays.

That looks like posterization, which is not "just how OLED is" but likely just how that TV's tone mapping/HDR processing is, rather than a fault.

It's also possible for some color management systems to introduce this problem when adjusted, so there may be some picture settings that help minimize/remove the artifacts.

This is why smooth gradations are one of the things I value most in a display, and why I appreciate the near-black testing that HDTVtest does now (that's where posterization is most commonly seen). It's one of the reasons I'm thinking that I may hold out another year for Panasonic to add HDMI 2.1 support, as they have the best-performing OLED in that regard.

This is not an HDMI 2.1 display, so a bad recommendation for anyone buying a TV specifically for next-gen consoles.

It will only support 48–120Hz VRR at 1440p - and the PS5 may not even have a 1440p output option, which would limit you to 1080p.
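To show why the width of that 48–120Hz window matters, here is a hedged sketch. It assumes the source or display handles frame rates below the VRR floor by repeating each frame a whole number of times (commonly called low framerate compensation); that mechanism is my assumption for illustration and is not described in the post. `vrr_refresh_for` is a hypothetical helper.

```python
def vrr_refresh_for(fps, vrr_min_hz, vrr_max_hz):
    """Return (repeat_count, refresh_hz) that fits the VRR window, or None.

    Assumption: frames below the floor are shown by repeating each one a
    whole number of times so the panel refreshes inside its supported range.
    """
    if fps <= 0 or fps > vrr_max_hz:
        return None
    repeats = 1
    while fps * repeats < vrr_min_hz:
        repeats += 1
    refresh_hz = fps * repeats
    return (repeats, refresh_hz) if refresh_hz <= vrr_max_hz else None

print(vrr_refresh_for(40, 48, 120))  # (2, 80)  -> fits a 48-120Hz window
print(vrr_refresh_for(40, 48, 60))   # None     -> doubling overshoots a 48-60Hz window
print(vrr_refresh_for(25, 48, 120))  # (2, 50)
```

Under that assumption, a 48–60Hz window leaves a gap between 30 and 48 FPS that cannot be covered, while a 48–120Hz window has room to double any frame rate below the floor.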

The Japanese site for the A9S says that it only supports 4K60 on the spec page - which would mean HDMI 2.0b.

It's possible that it could gain HDMI 2.1 support via a later update, like the X900H/Z8H, but I wouldn't count on it. I think they're waiting until 2021 for their OLEDs.

The display is set to launch in Europe by the end of August, so hopefully we'll have details then.
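The link between "4K60 only" and HDMI 2.0b can be sanity-checked with some back-of-the-envelope bandwidth arithmetic. The numbers below are assumptions brought in for illustration, not taken from the spec page mentioned above: the standard 4400x2250 total raster for 4K timings, 8-bit RGB, 18 Gbit/s with 8b/10b coding for HDMI 2.0 and 48 Gbit/s with 16b/18b coding for HDMI 2.1.

```python
# Total raster (active pixels + blanking) assumed for standard 4K timings.
H_TOTAL, V_TOTAL = 4400, 2250
BITS_PER_PIXEL = 24  # 8 bits per channel, RGB / 4:4:4, no HDR bit depth

# Usable video data rate after line coding overhead (assumed figures).
HDMI_2_0_DATA_GBPS = 18.0 * 8 / 10    # 14.4
HDMI_2_1_DATA_GBPS = 48.0 * 16 / 18   # about 42.7

def video_data_rate_gbps(refresh_hz, bits_per_pixel=BITS_PER_PIXEL):
    """Uncompressed video data rate in Gbit/s for the assumed 4K raster."""
    return H_TOTAL * V_TOTAL * refresh_hz * bits_per_pixel / 1e9

for hz in (60, 120):
    rate = video_data_rate_gbps(hz)
    print(f"4K{hz}: {rate:.2f} Gbit/s, "
          f"fits HDMI 2.0: {rate <= HDMI_2_0_DATA_GBPS}, "
          f"fits HDMI 2.1: {rate <= HDMI_2_1_DATA_GBPS}")
```

Under these assumptions 4K60 just fits within HDMI 2.0-class bandwidth and 4K120 does not, which is why a spec page that tops out at 4K60 points to an HDMI 2.0b port.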

> This is not an HDMI 2.1 display, so a bad recommendation for anyone buying a TV specifically for next-gen consoles.
>
> It will only support 48–120Hz VRR at 1440p - and the PS5 may not even have a 1440p output option, which would limit you to 1080p.

HDMI 2.1 is an input standard, not a display standard. 4K60 will be more than enough for next-gen consoles. Titles that do 4K120 will probably be countable on two hands, and even those will probably be sub-4K. If one wants to spend twice as much money just so they can brag they have full HDMI 2.1 with 4K120 support, sure, they are free to do so. Also, you don't know that the PS5 won't support 1440p 120Hz.

> The CX appears to hold back a little on brightness too, so it's likely to be less prone to burn-in.

To be fair I find the C9 to be too bright, so this is definitely a positive feature for me.

So not a great deal.

> If you're investing in a TV in 2020 for games, get something with HDMI 2.1 so you can be sure that you'll have a good time with PS5 or XSX (or PCs with upcoming HDMI 2.1 GPUs if you're into that). You want that VRR and up to 120Hz for the next gen.
>
> Quality HDMI 2.1 sets include:
>
> - LG C9 (2019 OLED - In clearance and harder to find this late into 2020 but good potential clearance discount if you can find one)
>
> - LG CX (2020 OLED - A top tier gaming set for 2020; only hesitate if price-size ratio isn't doing it for you or you're too anxious about OLED burn-in possibilities to use OLED with games)
>
> - Sony X900H (2020 FALD - Less perfect blacks than OLED and some FALD light halos, but great colors and picture, and Android TV is among the best Smart TV interfaces. Great price for size.)
>
> - Vizio P-Series Quantum X ("2021" FALD - Reviews pending but this set should be launching in a few days. Highest peak brightness in the land for the most popping HDR in places, but like the Sony, you will get typical FALD halos on certain bright objects in dark backgrounds. Also great price for size like the Sony. Lousy Smart TV functions though, but a non-issue with an Apple TV or Shield TV.)

Would you recommend the Sony X950H over the X900H? Kinda looks like it would be worth it for an extra $200.

> HDMI 2.1 is an input standard, not a display standard. 4K60 will be more than enough for next-gen consoles. Titles that do 4K120 will probably be countable on two hands, and even those will probably be sub-4K. If one wants to spend twice as much money just so they can brag they have full HDMI 2.1 with 4K120 support, sure, they are free to do so.

As I said already: VRR requires 120Hz to work correctly, because most televisions have a minimum refresh rate of 48Hz.

That's why I said it may not have the option. Not that it won't.

> It only happens with blues/greys, and it's only started over the past few months... It's not like 'normal' posterisation/banding, and no amount of tweaking solves the problem. When you pause it in certain places, the edges of the problem areas actually flicker quite a lot as well, which suggests to me that it's a processing fault rather than banding. It looks a lot, lot worse in motion, to the point where certain scenes in movies become completely unwatchable...

The images looked like tone mapping / color management precision errors rather than a fault, but you're the one with the display, and you're saying that it's started to get worse recently - so I'll take your word for it.

> Would you recommend the Sony X950H over the X900H? Kinda looks like it would be worth it for an extra $200.

The X950H is an HDMI 2.0 display, not HDMI 2.1 like the X900H.

Would you recommend the Sony X950H over the X900H? Kinda looks like it would be worth it for an extra $200.

Sony X950H vs Sony X900H Side-by-Side TV Comparison

The Sony X950H is slightly better than the Sony X900H overall. The X950H has better viewing angles, reflection handling, and it delivers a better HDR experience, as it has a better HDR color gamut and it can get brighter. However, the X900H has a higher contrast ratio since it doesn't have the...www.rtings.com

The images looked like tone mapping / color management precision errors rather than a fault, but you're the one with the display, and you're saying that it's started to get worse recently - so I'll take your word for it.

> X900H is probably the bang-for-buck champ. And then you have the CX if you can afford the upgrade and have zero burn-in concerns.

I am going to upgrade for next-gen. Should I be concerned about burn-in? It seems a lot of the games I play have static HUDs. That said, new gen, new games. I play a couple of hours a day, but at the weekends, I sometimes have a six-hour session.

I just purchased a 48CX for my games room/office. Absolutely astonishing TV.

If you'd have asked me 6 months ago, I'd have said go for it. I've got a 55" 803 OLED in my living room, and it's been fantastic. Unfortunately it's developed a fault with displaying HDR content, and they're refusing to accept that it's an issue.

EvilBoris - would you concur that this behaviour is not "Just how OLED is"? :P

> To be fair I find the C9 to be too bright, so this is definitely a positive feature for me.

If you saw a 4000nit display you would disagree :P

I used to want 4000 nits HDR but now I am quite happy with sub-1000 nit OLEDs. This is the sweet spot imo

> If a game is built for 30 FPS and it drops to 25 FPS when things get busy, the display will sync to that automatically.
>
> Same thing if it's built for 60 FPS and were to drop to 50 FPS. In that case it's likely to remain smooth enough that most people won't even notice the drop, while you would have obvious stuttering on a non-VRR 60Hz display.

That's with double buffered vsync being always on. Most games disengage vsync when they can't hit the output frequency these days. VRR will remove the tearing in such cases but not much else.

> Just upgraded from a 65" X850D to the 65" X900H and I have never been so impressed. I hope it gets the needed software updates before the PS5 hits.

That's a nice upgrade. I had an 850D for a week and returned it lol, it was gaaaarbage.

Few televisions are made in the 50" size any more. 55" has typically replaced it.

> I'm looking forward to updating my KS8000 soon with the new consoles. I've been so stuck on getting an OLED but I usually game in daytime in my bright sunny living room (I have big bay windows that don't have curtains). Even with the 1000 nit backlit display on my KS8000 I have trouble seeing HDR content well on my TV during the day. Hopefully in the future I'll have space for a dedicated game space where I can have it more dimly lit, but I'm already worried that an OLED won't be nearly as bright as I'll need it to be. My dad has a QLED which is bright as hell, but I have a hard time letting go of getting an OLED

That's not really an issue of display brightness - HDR content is not intended to be viewed in a bright room like that.

> It's really hard to describe it... It's on every input, so not just limited to store apps or one device. It's so frustrating. I've run a 4k HDR test video through it at 35Mb/s via Plex, 4k HDR discs, PS4 Pro, everything... When loading screens in TLOU2 come up there's very pixellated halo effects that flicker around the flies/bugs that show up in the top right corner... If it was like it from day one I'd have noticed it, but it's definitely gotten worse. The scene where the guy runs across the battlefield in 1917 was flickering so much that even my father-in-law, who's partially sighted, noticed it!

As I said, that doesn't sound out of the ordinary for a display that has less precision in its image processing/color management systems, or poor tone mapping.

> I've replied on the tweet for you, that's not normal.
>
> It's like it's given up on any attempt to gamut map correctly and is just clipping colours

That's why I don't think it's necessarily faulty, but could be an issue of poor image processing - or a setting that is causing it to happen.

> If you saw a 4000nit display you would disagree :P
>
> Even a 1500nit display has a visible difference in colour volume

One of the biggest problems with LG's WOLED design is that the white subpixel affects color accuracy of real-world scenes (not test patterns) and dilutes the color saturation at higher brightness levels. If they could achieve 700-800 nits peak brightness without it, I think the differences would be far less noticeable.

> That's with double buffered vsync being always on. Most games disengage vsync when they can't hit the output frequency these days. VRR will remove the tearing in such cases but not much else.

Most games are not using adaptive v-sync. They're triple-buffered, both to prevent screen tearing and to stop drops below a 30/60 FPS target from cutting the frame rate to 20/30 FPS.