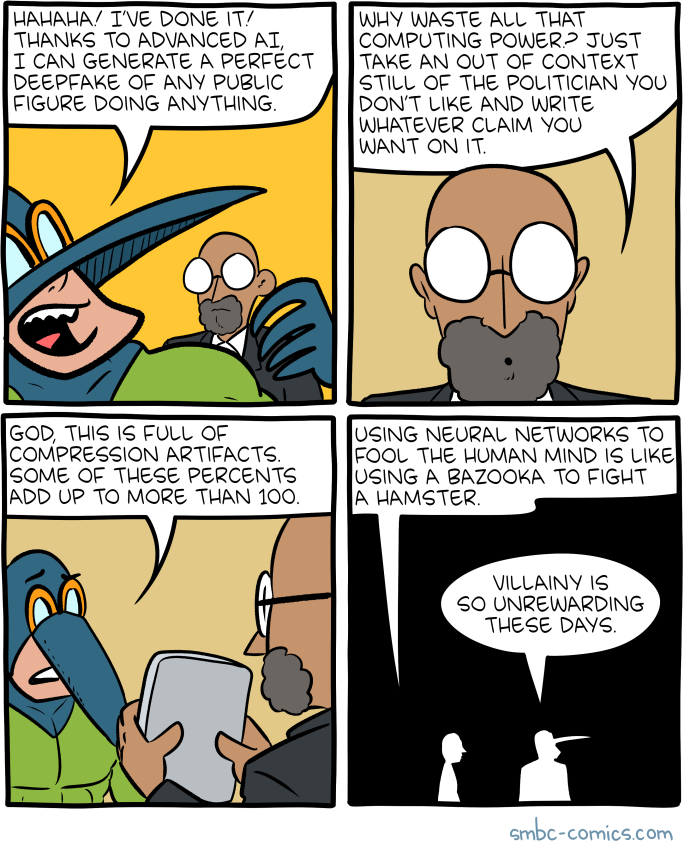

So, I was reading today's SMBC:

And it raises an interesting point for me. We're kind of already living in entirely different realities. Would better deepfakes even do anything other than make us toss out video evidence altogether? Shit, people are currently using an out-of-context clip of Joe Biden saying "we're putting together the most advanced voter fraud program" to push their point.

I can imagine things getting slightly worse, but I don't see it as being as dramatic as I once did. Maybe we're already fucked, and we simply won't be able to trust footage at all anymore. But it's not like we currently live in a reality governed by silly things like facts.
