VRAM in 2020-2024: Why 10GB is enough.

- Thread starter Darktalon

- Start date

Recent threadmarks:

- DLSS also reduces VRAM usage
- Tech Jesus confirms monitoring tools are wrong
- Discussion of Cache
- Gospel of Tech Jesus
- 10GB VRAM is never going to be its primary bottleneck.
- MSI Afterburner now can show per process VRAM
- VRAM Testing Results
- Please, use this tool.

Agreed. Outstanding work.

Thank you for your great work Darktalon!

Great informative posts

Thank you, truly thank you, this post brought a tear to my eye and was sorely needed and appreciated.

Thank you Darktalon for the og post, and all the followups.

It's a mighty interesting topic not getting the broad attention it needs to.

Shown VRAM usage, among all the other aspects of gfx, was always something that puzzled&fascinated me,

alone from the math itself, as gfx IS nothing but math, resulting in pixels lighting up,

and i saw it proven by real life experience many times.

A lot of even older games report 8G VRAM usage (which is actually allocation) on my 1070 8G (oops, what a coincidence *lol*),

even when i still was on 1080p, while offering not even remotely as visually pleasant and crisp textures or geometry as newer titles.

As an old boy, coming from late '80s consoles and my first 30386 with an amber 14" screen, and still being an avid gamer today,

i found my "forever sweet spot" in 27"/1440p/144Hz, in the form of a marvelous Asus ROG Gsync panel.

My personal perfect size&match in regard to pov, eyemovement, viewdistance and overall feel. Anything smaller or bigger - meh, not for me.

27"/4k/144hz maybe later down the line - when prices for high end models with those specs get more reasonable...

And it is in this resolution, where i can confirm your point:

Doom Eternal (a vram hog, working a bit different than other titles) is proven to eat under 8G VRAM in 1440p@Ultranightmare.

So far, so good, but: my 1070 simply chokes on this setting, and doesn't run smoothly&pleasantly with high enough framerates.

Reducing the texture size to ultra, or even lower - does sh** nothing to improve that.

Lowering all the other settings to Ultra, and leaving the textures on Ultra Nightmare - does, then it feels smooth like silk.

Put the other way around: even if the 1070 had 24G GDDR6X, or 96GB GDDR17XXX, it would not run it smooth on 1440p - because the gpu itself simply lacks the horsepower.

And exactly that's the point, sadly so many miss:

CAD/Render/pro work on workstations all aside (that work, and those cards are a different story, and simply need a lot of vram for other purposes, than running a game)...

You can't build or buy a GPU to be futureproof for 5 years.

No matter if it's my '96 Orchid Righteous 3D (3dfx Voodoo 1) 4MB (which i still have lying around somewhere), or a 2020 RTX3080 10GB.

A gfx card was, and always will be, an overall, nevertheless optimal compromise of architecture/design, compute/render power, vram, power requirements etc.

For a certain lifespan, and a certain range of applications (in this case games up to a certain point).

And in 5 years, you build the next next gen card - with and for technology then (and only then, not now) available.

It's the very same as simply fitting a car with bigger/wider rims&tyres (and no other adaptions), which makes it look nicer, but makes it drive like sh***.

Car engineers are no idiots: millions of test kilometers on roads and racetracks, driven by dozens of test&racedrivers (at least they do so here with our european premium cars), and a lot of knowledge, time and money go into the development of a car, to finally find an optimal spec, "a best compromise" of looks, comfort, grip, handling and price, also tailored to the specific model of the car (base/road/sport/track oriented version/model).

Hell no - they are all idiots - i put on these rims&tires, because a friend who knows somebody that told him, that Mr. Smith in his backyard garage knows it all better than all of them....

The gfx card match is:

Hell no - slap on 12, 16, 20, xy GB vram on a card that will never ever be able to take any advantage of it, because way too slow by the time that happens - but i don't care, it's more, so it's better, i want it, it will make my card futureproof, X or Y also said that, and those engineers from company XYZ have no clue....

A word of wisdom and encouragement at last Darktalon:

Thanks for your efforts, and keep going.

But, after 50 years of experience with those 2 legged mammals, it's safe to say:

Don't let people and their grief affect you. You can't convince anybody that doesn't want to (listen, think, try out themselves, etc.)

Let them be happy with it, and we've seen in 12 pages, what that is.

There are so many things to consider on this topic - and most mix it all up/don't get it:

How company X or Y approach steady performance improvements with different methods (and often different means in the lifetime of gfx gpu generation X compared to Y),

how game engines work and how well (or badly) optimised they are towards which underlying hardware,

how the interplay with ram, cpu and storage works (and like it will change/improve with different, already mentioned i/o improvements on hw and sw level),

how utterly poor unoptimized, big in file size, textures can look,

and how photo realistic highly optimised, smaller file size textures can look - where the key is brain&effort = time = money you have to invest,

biased tendencies or prejudice towards company X or Y in general,

general attitude vs (tech) press/articles/media/insider sources, ranging from religion-like fanaticism/belief to complete ignorance vs anything,

how consoles and their shared memory work,

how all those algorithms (in hardware + drivers + os) work,

and a hundred things more.

Life's too short to care for everybody&everything - time&effort better invested in actually enjoying life. Which of course, gaming is a part of.

That's why, and knowing i don't need 10GB VRAM on 1440p, but sure more gpu horsepower, i canceled my 3080 preorder today to get rid of fed-up feelings and constant stock/mail checking.

And get an actually available 3070 8G now instead, to thoroughly enjoy gsync furthermore, and the huge upgrade on 1440p for little money (compared to a 2080TI. Or those new winter tyres for my BMW *ouch* :p), while putting the 4yo 1070 in my wife's rig to give her machine a nice upgrade with that ;-)

I agree with you that 27" 1440p is a really remarkable sweet spot.

Maybe I can tempt you with a 34" 1440p Ultrawide, which has nearly the exact same height as the 27". :)

https://www.amazon.com/LG-34GP83A-B-Inch-Ultragear-Compatibility/dp/B08DWD38VX

For review purposes, this monitor is identical to the 34GN850-B.

https://www.rtings.com/monitor/reviews/lg/34gn850-b

You're welcome, credit where credit is due. And to assure you that life forms other than angry keyboard warriors writing in all capital letters still exist.

(says me, the noob, who just registered here recently, with a post count of: 1 *hehe*)

Ultrawide...hmmm....tried it on several friends' systems.

Cool on first look, great while doing desktop stuff, simulations and slower stuff, but somehow not the right thing for me.

Bc i tend to get dizzy with those in longer sessions (and with fast paced shooters).

Various models, but it's not the monitors - it's just me :)

Just so perfectly comfy with the current gsync panel for fun + a vertical 24"/1080p office IPS panel beside it for work/list stuff - perfect for my needs.

+ still need to wring a lot more life&time out of that Asus ROG panel, to self-justify that ~600€ purchase :D

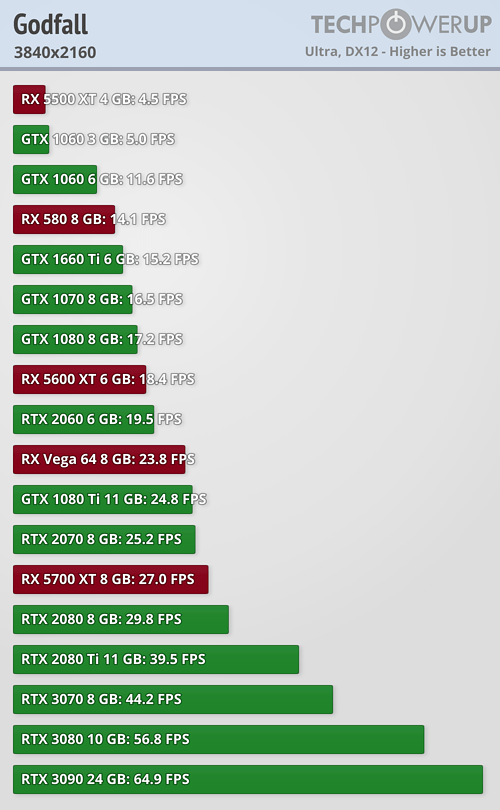

Just to confirm what Darktalon said, this benchmark video shows that Godfall uses around 6-6.5GB of VRAM at 4K Maximum settings.

uh, didn't the developers say it would use 12GB? That's so far off

Just like we thought. They fucking lied.

So yeah, literally "AMD sponsored game says only AMD new card can run it correctly".

And people wanted to pretend otherwise.

Your writing style is strangely enjoyable.

Welcome!

I mean, sucks for 1060 owners, but I don't think any of them expected to play this game at 4k max settings. :v

That's precisely the thing - there's almost never a combination of settings and card where you suffer issues solely because of VRAM. Any settings that need more memory than your card has will likely also drop your framerate under 60fps anyway.

In "defense" of AMD. And also nVidia, and basically all companies:

a) AMD trying to push, seeking coalitions and gaining more marketshare - legitimate, and in the end good for all.

AMD/nV push themselves/can't sit still (intel anyone? :p), we get more cool stuff sooner and not years later (intel anyone? :p), prices balance out, and there is no sole supremacy of one alone (which always goes tits up, mostly for those down in the foodchain - the average joe, no matter the continent or decade, no matter if a party, government or - gfx card company).

b) They - all - use marketing lingo, and easy catchphrases. What else shall they use?

If they talk like here, or like that cool dude Steve from GamersNexus - 80% of average consumers (who are not interested in anything more, than using/enjoying something) would stand there, staring with mouths open like Homer Simpson, unsure of what to do next.

If you even have to sell cars these days not with hard facts&specs, but with some emotional mini-movie where some blonde shoves the small actor kids in some SUV and dance around on the beach.

And even Rolls Royce, who don't need any commercial at all - because "you simply know", and the product does the talking for itself, does commercials.

Ya sorry, i'm too old for this sh** :D

So forgive them for the trickery - they all pump out oneliners that all are only true when put into the correct context, so basically not wrong, but just a version of the truth.

If you think about it for a moment.... no matter which economical, technological or society phenomenon....ppl simply love oneliners, and love, better: outright beg, to be deceived and lied to. To feel comfy/easy/superior/taken care of/whatever.

Ultimately, i don't think this "game uses up to 12gb vram" oneliner will scratch on nVidia's global marketshare (~60-80%) a lot.

And even if - see above, all win in the end....

Those who know better, do anyway - no matter what Mr. Leatherjacket, Ms. Su or anyone else barks out on some stage or interview..... ;-)

gozu, thanks for the roses. Forgive my errors/strange words/grammar, should it happen.

There's a lot of cold water between us, and my native tongue is Austrian (the gentle, more subtle version of German).

And yes - my colleagues in the office also love (or outright death-wish hate) my detailed replies/emails, that more often than not, drift off into J.R.R.Tolkien length or Borat2, subsequent movie-like areas... :p :D

You speak truth.

And that enormous marketshare means AAA PC games are much more likely to be optimized for nVidia's 10GB GDDR6X (less but faster VRAM) than AMD's 16GB of GDDR6.*

The GPU wars are going to get interesting now that:

1. Apple is using console-like unified SoC architecture with shared DRAM and a custom ARM CPU.

2. AMD is "cheating" by letting Zen3 and RDNA2 whisper to each other in new secret ways.

3. nVidia likely bought ARM to go towards that tighter CPU/GPU integration.

4. Intel "got serious" (for what? the 4th time now? lol) about their GPU game as well with the XE.

* Much will depend on the quality of the implementation of SSD to GPU DirectIO by AMD and nVidia. This is a brand new metric that GPUs are going to start competing on, just like DXR (Ray Tracing). Performance will depend on VRAM + DIO perf instead of just VRAM like it does now.

Fully agree. And understandable - you hang close to/with the market dominator in own interest.

For new i/o methods (and forgive me, as i'm no pro, just a well aged&informed consumer aka spoilt brat) my question/assumption is:

And Microsoft binds all those loose ends together with the DirectStorage API they already announced.

If they all lay their standard open to MS to be implemented (what i assume - you can reach the biggest audience using Win10).

Resulting (after some months of software hiccups and bug chasing) into kind of general support within the OS,

no matter if you use intel/ryzen,radeon/rtx, pcie 3 or 4 nvme etc.

And some new, unseen before in looks quality, AAA titles using that technique.

Where probably vram (and peripheral) speed matters even more than its size.

Right?

This is strange, the effect is pretty much the opposite for a couple of friends with motion sickness issues. In fact I converted them to ultrawide precisely because they were able to play more first person games without getting dizzy on my setup.

So long as the frame rate is high and FOV is properly wider it always helped them.

Nah, no motion sickness. Put me in a plane, sports/track car, rollercoaster or else, and i squeal like a happy pig.

Have good eyesight with above average fov for my age, and only 0,7 diopters in far, even less in short sight.

But too close to a screen/too big (and especially too wide) a screen - and it simply strains me too much after some time (due to constant eye/headmovement),

leading to more or less the same dizziness that those affected by motion sickness feel.

So yes, the opposite, which equals "normal" i guess? ;-)

Lol, yeah I guess so. Stillness sickness :)

But not so weird, My wife is like that. no motion sickness but she can't stand video games at all, especially being close to my ultra wide.

Hehehe :) However....

As i've been married for 19 years now - i can assure you that kind of female video game aversion...has other reasons than vision or monitor size.

Those looks from her (like an MMA brute doing his promo shooting) - when you already know the DHL/UPS delivery guys by their forename (with gaming not my only hobby...)?

Godlike. And me simply replying: "hey, i dont do drugs, alcohol or cheating - but I... I'm the shopping queen here in this house.".

No AAA title running @240FPS can touch that. :D

First we must put things into context. The jump between PS3 and PS4 was 256mb to 8GB. 32x increase in VRAM!

apologies if this may have been addressed but why are you comparing the VRAM in the PS3 to the total RAM in the PS4?

Even the 8 GB 3070 card would run this, haha. Funny. I do wonder if AMD will use "marketing" more than "technology" to try and recapture share this round. Guess we'll see when reviews/benchmarks appear.

Can confirm. Aside from some minor hitches transitioning between gameplay and cutscenes, My 3080 shreds at this game, running QHD Ultrawide.

As noted in the video here, it's a god damn gpu workout, with minimal load placed on the CPU.

*insert Pikachu shocked face*

Thank you for confirming this. Just ended up being bad amd marketing.

I love it too, don't ever stop. It's very pleasant to read.

New info came to light today.

SAM is apparently just Resizable BAR, which is part of the PCIe spec. If the motherboard, CPU, OS, and GPU all support it, it will work.

Nvidia is already testing a release that updates Ampere to enable Resizable BAR, and thus get the benefits of "SAM" (AMD's marketing term for it) on Zen 3 with X570/B550.

See here for more details

https://twitter.com/GamersNexus/status/1327006795253084161?s=20

In the very next paragraph, I explained that the PS4 has 5GB usable for games, which is still a 20x increase.

What are you expecting me to compare? Ps3 had a split memory system, while ps4 and ps5 have a unified system...

The point of the historical analysis is that the jump from gen 7 to 8, was very different than the jump from gen 8 to 9. Even if you argue that developers used ONLY 2 GB of the RAM for the gpu (and this isn't an accurate argument), that would be an 8x jump, compared to 2.7x.
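The ratios quoted in this exchange are easy to check. A minimal sketch, assuming only the figures given in the thread (256MB for the PS3, and 8GB total, 5GB usable, or a 2GB GPU-only share for the PS4); the function and variable names are illustrative:

```python
def memory_jump(old_mb: float, new_mb: float) -> float:
    """Return how many times larger new_mb is than old_mb."""
    return new_mb / old_mb


PS3_VRAM_MB = 256  # figure quoted in the thread

# PS4 scenarios discussed in the thread, in MB (1 GB = 1024 MB)
ps4_scenarios = {
    "total unified memory (8GB)": 8 * 1024,
    "usable by games (5GB)": 5 * 1024,
    "GPU-only share argued for (2GB)": 2 * 1024,
}

jumps = {
    label: memory_jump(PS3_VRAM_MB, size_mb)
    for label, size_mb in ps4_scenarios.items()
}

for label, factor in jumps.items():
    print(f"{label}: {factor:.0f}x")
```

Whichever PS4 figure you pick, the generational jump stays well above the 2.7x quoted for gen 8 to gen 9.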

Ohhhh...interesting!

not sure what you think i was trying to imply. i was just wondering why you tried to make what seemed like an apples to oranges comparison.

My apologies, I'm used to getting a lot of negative feedback on this particular topic.

it's all good, friendo.

Sorry but HAHAHAHAHAHAHA.

Marketing and all, but flubbing the numbers shouldn't double the VRAM usage. Like damn, you had to know you were going to be proven massively false by a huge margin.

I am again reminded of the Evil Within 2 release at that sketchy flat we all used to hang around in.

3080 redeemed :p

I'll be honest after that godfall vram thread came out I was starting to get worried, but this is good news. Hope I can hold onto this card for at least 2 years and game in peace.

Godfall Benchmark Test & Performance Analysis

Godfall is one of the launch titles for Sony's PlayStation 5 to show off the new platform's raytracing capabilities. We took a closer look at the PC port of the game and tested it with a wide selection of graphics cards from both AMD and NVIDIA, using Full HD, 1440p, and 4K.

Judging from benchmarks, the game doesn't even need 8GB for 4K at the moment.

WTF is with that massive jump for the 3090 though. Usually it's only like 10-15% better, not like, 30%

good question.

Saturation with VRAM will do that. It's mild here though... in some productivity tools you can look at 300% improvement.

edited: thought i was in the 3080 megathread ahaha, all had been said here already.

This whole renderer gives weird results and that's not at all surprising when someone is trying to prove a point artificially.

This makes me wonder if we'll even have 4k/60 cards next year (Other than at a $1500 price point). Hopefully this kind of performance isn't indicative of what we can expect from "next gen" games.

Link? I don't see anything mentioned in the 3080 megathread.

I'm curious about why the 3090 has such a large advantage when the only difference between it and the 3080 is more CUDA cores and VRAM.

You should have come much earlier with that, there are so many pages now it's unfindable... it was an outlier tool that simply didn't use the GPU when the project couldn't fit in it... so very extreme scenario, with a tool that works much better on cuda cores than on cpu.

I don't remember one bit what it was. Creo perhaps ? don't remember (i have no need to since i have a 3090). Or maybe an offline renderer not offering a mix mode.

At equal frequency and power consumption, with no vram exhaustion, the max attainable should be 20.6%. The reality is less than that because of internal bandwidth problems, and the slight boost clock disadvantage a stock 3090 has, even though the silicon lottery puts some randomness in there.

In games, when exhausted, sadly pcie is too slow for emergency needs that would be seamless, so i guess it does the most urgent rather than reorganizing properly (loading pause), so stutters when you move for a little bit until framerate is back to stable speed.
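The 20.6% ceiling mentioned above appears to follow from the CUDA core counts of the two cards. A quick check, assuming the published counts of 10496 for the RTX 3090 and 8704 for the RTX 3080 (these numbers are not stated in the thread) and assuming performance scales at best linearly with core count:

```python
# Assumption: published CUDA core counts, not figures taken from the thread.
RTX_3090_CUDA_CORES = 10496
RTX_3080_CUDA_CORES = 8704


def max_core_scaling_percent(bigger: int, smaller: int) -> float:
    """Upper bound on the speedup (in percent) if performance scaled
    perfectly with core count at equal clocks."""
    return (bigger / smaller - 1.0) * 100.0


advantage = max_core_scaling_percent(RTX_3090_CUDA_CORES, RTX_3080_CUDA_CORES)
print(f"Theoretical 3090 advantage: {advantage:.1f}%")
```

A measured gap well above this bound suggests something other than raw shader throughput is limiting the smaller card in that test.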

Godfall PC Performance Review and Optimisation Guide - OC3D

Godfall – An Introduction into the next generation Godfall is one of the first truly next-generation game to hit the PC marketplace, launching exclusively on next-generation platforms. On consoles, the game is not held back by PlayStation 4 and Xbox One, launching as a timed exclusive on Sony’s...

If these numbers are accurate (there's gonna be a lot of variability throughout different levels), then the 12gb VRAM claim is not wrong.

Raytracing update is gonna easily add 1.5-2gb of VRAM on top so you want 12 gb of VRAM to be comfortable.

That said, I doubt having 10 or 8 gb is gonna cause a massive drop in performance. Most likely it will just mean those cards underperform a bit compared to their theoretical maximum perf if they weren't VRAM constrained.

I wonder if the prices for these 20GB vram cards will ever be reasonable or if their low supply and focus on yearly refreshes means that the only way to get them for cheap is to buy used. At this point, while I'd like to upgrade my PC, the pricing is just too bonkers.

I don't know what their methodology was, but Godfall does not require more than 5GB of VRAM at 2560x1440 on Epic Settings. We are talking about the dedicated VRAM allocation, not the total VRAM allocation (so if they had programs running in the background, then that would explain their results). You can clearly see that the dedicated VRAM usage is between 4-5GB in these screenshots (which are also from an RTX2080Ti like the one they used, so something is definitely wrong with their results).

https://www.dsogaming.com/pc-performance-analyses/godfall-pc-performance-analysis/
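To illustrate the distinction between a game's dedicated VRAM and the total allocated across all processes, here is a small sketch. The process names and sizes are made up purely for illustration and are not measurements from any tool or review:

```python
from dataclasses import dataclass


@dataclass
class ProcessVram:
    name: str
    dedicated_mb: int  # VRAM allocated by this one process


def total_allocated_mb(processes):
    """What a whole-GPU counter would report: every process summed."""
    return sum(p.dedicated_mb for p in processes)


def dedicated_for(processes, name):
    """What a per-process counter would report for a single program."""
    return sum(p.dedicated_mb for p in processes if p.name == name)


# Hypothetical snapshot: a game plus background programs.
snapshot = [
    ProcessVram("game.exe", 4800),
    ProcessVram("browser.exe", 900),
    ProcessVram("desktop_compositor", 600),
]

total = total_allocated_mb(snapshot)
game_only = dedicated_for(snapshot, "game.exe")
print(f"total allocated: {total} MB, game only: {game_only} MB")
```

In this made-up case a whole-GPU reading would overstate the game's own footprint by 1500 MB, which is the kind of gap background programs could introduce.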

I doubt that it will add as much in this case. But yeah, it will probably go above 10GB in 4K, if only by some megabytes.

Remains to be seen what this will mean for performance though.

Also how did they get those numbers? Through MSI Afterburner? Not very accurate then for actual VRAM usage.

It seems likely given that in their benchmarks even 6GB cards, like the 1060 & 1660S, don't seem to perform worse than equivalent higher VRAM cards: 1060 matches a 580 even at 1440p & 1660S is more or less on par with a 1070 at every resolution.

The only potential sign of VRAM issues I could find in their performance benchmark is that the 2060 has worse 1% lows than equivalent higher VRAM cards at 4K, like the 1080. The 2060 has fewer ROPs than the 1080 though, so that's another potential explanation, but I have no idea how a ROP bottleneck would show itself in performance numbers.

as someone who doesn't understand PC very much, I appreciate you making this thread.

It cleared up the myth of the need for big VRAM amounts to play at 4k; and that will help when i'll be purchasing my next GPU in a year or 2.

Thank you.

More allocation numbers.

Godfall PC Performance Review and Optimisation Guide - OC3D (www.overclock3d.net)

Tech sites seriously need to get with the times, this is becoming actively annoying.
