Link to Tom's blog with the full story.

He's using a Spartan-3E FPGA from 2005 and basically renders the scene, without a frame buffer, as the pixels are sent to the screen.

He used ray tracing to get around the fact that he could not store the image in memory.

He links to his repo at the end:

Ah damn, a Spartan 3E.

Does it say exactly which model he uses? Also, is there any code to tinker with?

Is it like a shitty arduino or something?

Some degree of ray tracing on second-hand hardware that costs less than $5 on eBay.

I doubt it, though we have not seen what a full RT-based chip could do, and I doubt Nvidia or anyone else will spend the insane amount of money to make one any time soon.

Is this basically insinuating he has found a way to greatly mitigate the impact ray tracing has on performance?

Thank you for the essay responses, I read both of them; this seems like a tremendously impressive feat.

My experience with coding is limited but my brother is a CS major and he's more or less told me so.

The reality is that all computer graphics development is insanely hard. It's one of the most difficult sciences around.

This is one of the best posts I have read on Resetera. Thank you.

This is a lot to take in, so strap in:

Firstly, the way computer graphics have traditionally worked is that you have a smaller, miniature machine inside of your computer, now usually called a GPU, that interfaces with the output monitor/television. Your computer talks to this machine using memory, commanding the GPU to draw to the television. The way the GPU draws is that it sets aside a small area of memory called a frame buffer. Think of a frame buffer like a canvas that artists use. The computer sends all the numeric data that represents the polygons to the GPU, and the GPU crunches the math and figures out how to translate those raw numbers into pixels. As it figures out these pixels, it draws them onto the frame buffer, like an artist painting on a canvas. Once the entire image is drawn on the frame buffer, the GPU waits for the timing signal that marks the start of the television's next frame and then sends the finished image out.

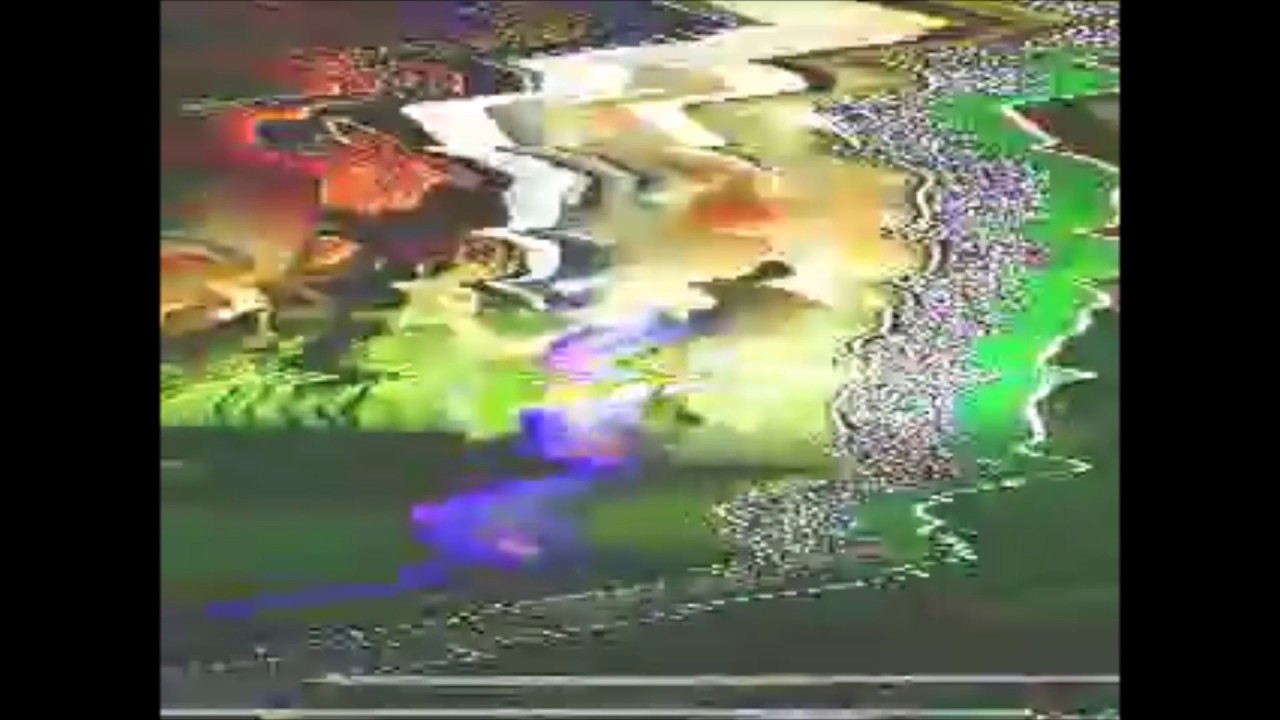

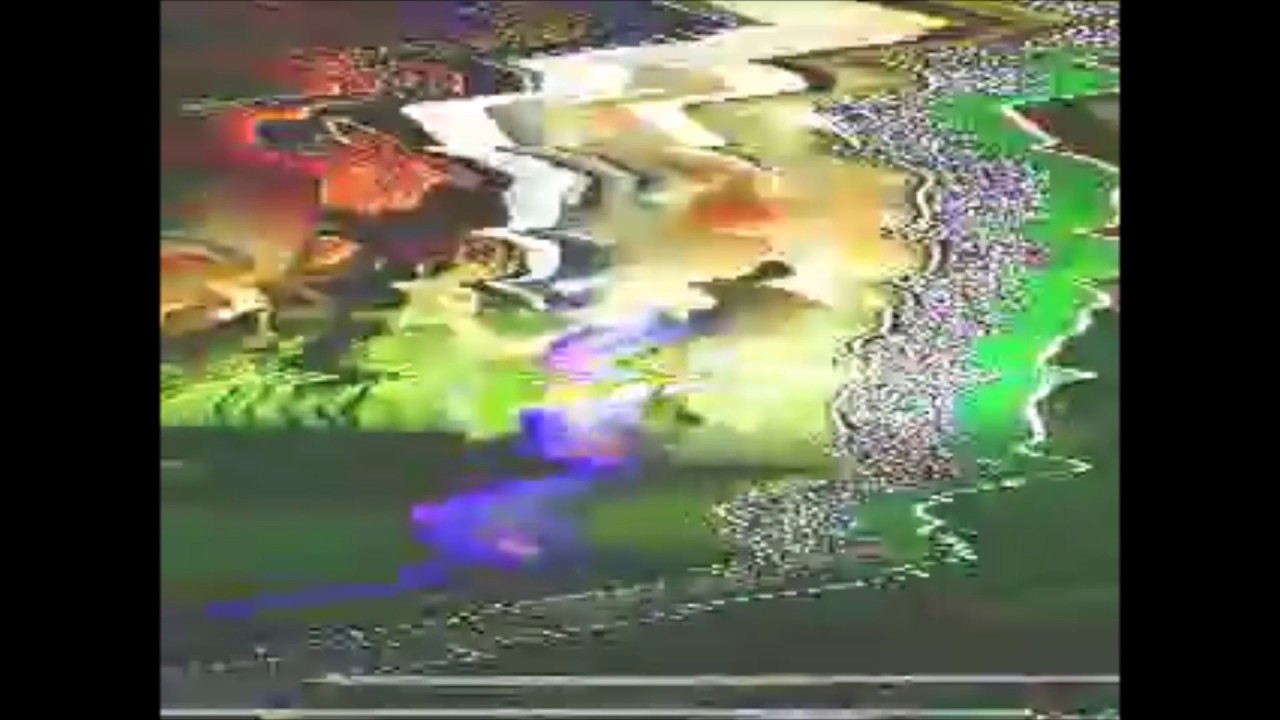

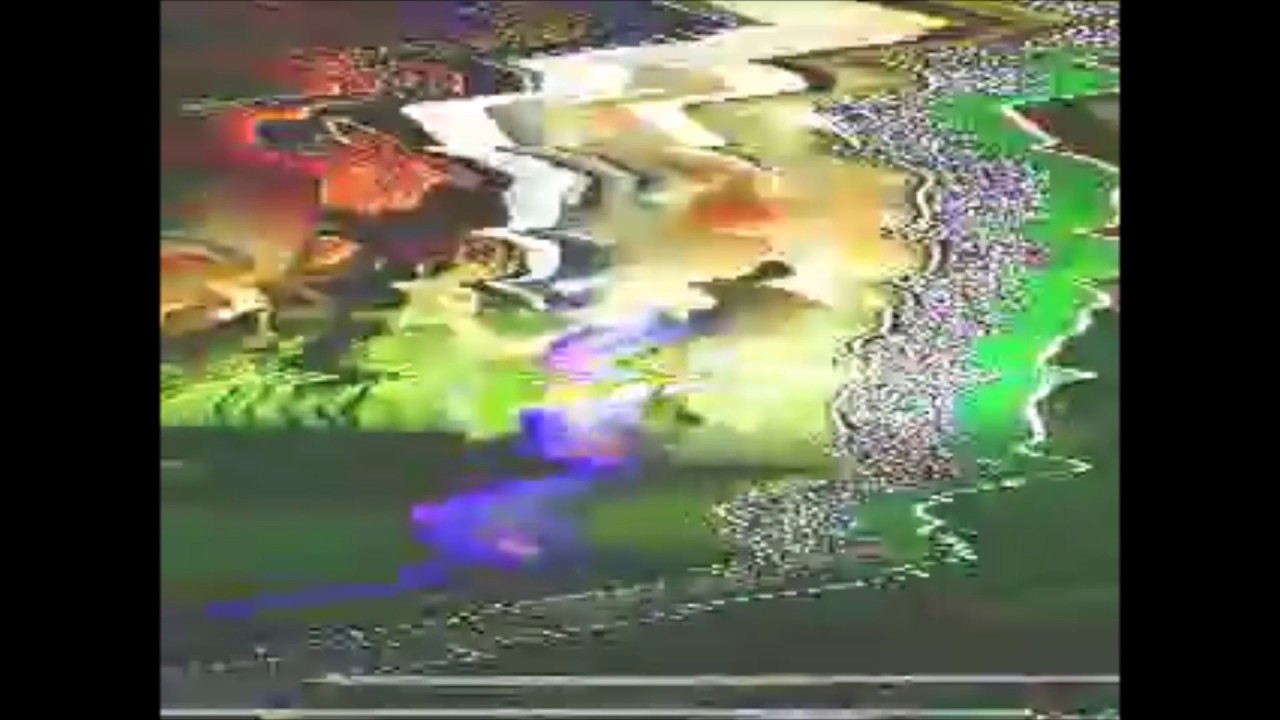

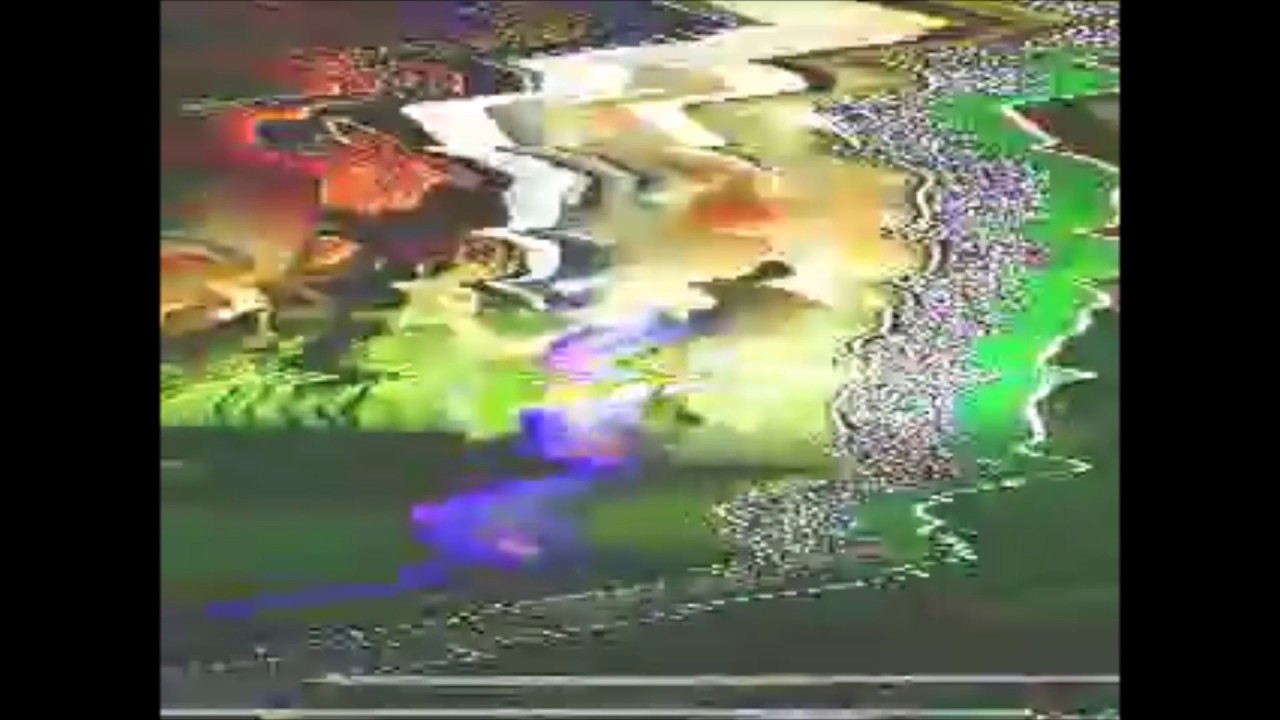

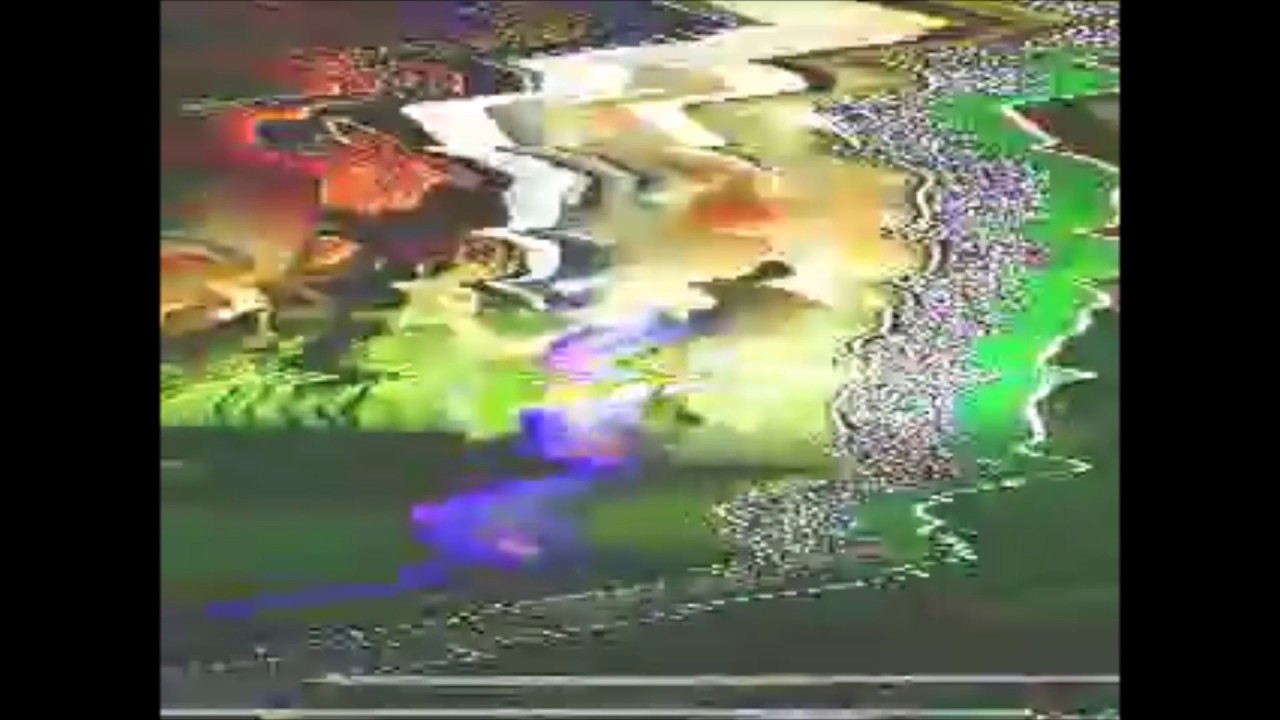

The reason televisions and GPUs talk in this manner, where the graphics chip waits on the timing of the television, is because of how televisions worked going all the way back to the early 1900s. Old CRT televisions (boob tubes) had an electron gun inside, which I'll call the raster gun: it shot a beam of electrons at the glowing coating on the inside of the screen, and magnetic fields bent that beam to aim it. This gun would literally paint glowing light onto the screen in a pattern, starting from the top left portion of the television screen and ending at the bottom right portion of the screen. This is a physical gun; the beam actually moves and draws line by line going down the screen. While the gun is drawing, data has to be sent from the GPU to the television with precise timing. The moment the television is ready to draw a given pixel, the GPU needs to be sending it. If the GPU is late in sending the pixel, the image will be destroyed and look like gibberish, kind of like this:

Thus, to ensure that the data that needs to be sent is always ready for the gun, you draw the entire scene to the frame buffer, which holds ready-to-send pixels for the television. The opposite of doing this is known as "racing the beam." What that means is that the CPU sends pixel information to the GPU and the GPU immediately passes it to the television as soon as it receives it. This is much trickier, as you need to figure out exactly how long your calculations are going to take. When you send data to the GPU, as I said, it crunches math, and processors actually take time to do math. It might seem instant to us, but that's because computers operate on time scales that are minuscule to us. If we could slow everything down, you'd see that when you "race the beam" you are doing precisely that. It's a race for your CPU to crunch all the math necessary before the raster beam is in position. You are under a constant deadline.
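To make the difference concrete, here is a minimal Python sketch of the two approaches. Everything in it is hypothetical: the tiny `WIDTH` x `HEIGHT` display and the `shade` function (a checkerboard standing in for the real per-pixel math) are made up for illustration, and real hardware would also have to hit the timing, which this ignores.

```python
WIDTH, HEIGHT = 8, 4  # tiny hypothetical display


def shade(x, y):
    """Stand-in for the real per-pixel math; here just a checkerboard."""
    return 255 if (x + y) % 2 == 0 else 0


def framebuffer_scanout():
    # Draw the whole picture first, then send it.
    # This needs WIDTH * HEIGHT values of memory.
    buffer = [[shade(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]
    for row in buffer:
        for pixel in row:
            yield pixel


def race_the_beam():
    # No buffer: compute each pixel at the moment the beam reaches it.
    for y in range(HEIGHT):
        for x in range(WIDTH):
            yield shade(x, y)


# Both approaches put the same pixels on the screen in the same order.
same_picture = list(framebuffer_scanout()) == list(race_the_beam())
```

The second version never stores more than one pixel, which is the whole point when you have no memory for a frame buffer. The price is that `shade` has to finish before the beam moves on.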

Modern televisions technically do not work like this anymore in function, but because old televisions formed the basis for all digital images even today, there are vestigial timing remnants of this entire system. Even on a progressive scan television (where the image isn't painted on by a raster gun, but rather displayed on the screen all at once), the signal still fakes the timing of a raster gun, because that's how we have decided displays work for the better part of a century.

That explains the "race the beam" part, but that's only part of what's going on here. The other half pertains to how GPUs turn 3D math into pixels. This is traditionally done through a process known as rasterization. Rasterization is a process where 3 points in space are calculated out and turned into a series of pixels, like so:

Conventionally, computer graphics will do this for every polygon in a scene individually. This is how we "paint" on the frame buffer: the rasterized polygons are stored as pixels in the frame buffer. Individual polygons are thus usually rendered without regard to the rest of the scene; they only concern themselves with their own data. Now, with modern graphics technologies, we can share data about the environment, like whether the polygon is close to a light source, so that the rasterizer can take that into consideration when determining the output pixels of the polygon. For example, if the polygon is red but it's next to a blue light, the rasterizer can take that into account and shade the polygon purple. Even so, this degree of interaction between parts of the world is limited and basic compared to how real light works.
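For the curious, here is a rough Python sketch of rasterizing one triangle into a frame buffer. It uses the common "edge function" test, which is just one way to do it and not necessarily what any particular GPU does; the function names and the 8x8 buffer are assumptions made up for the example.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: which side of the edge a->b the point p lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize(tri, width, height):
    """Return a frame buffer (list of rows) with 1 wherever the
    triangle covers the centre of a pixel, and 0 elsewhere."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    framebuffer = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel centre
            w0 = edge(x1, y1, x2, y2, px, py)
            w1 = edge(x2, y2, x0, y0, px, py)
            w2 = edge(x0, y0, x1, y1, px, py)
            # Inside if the point is on the same side of all three edges
            # (accept either winding order).
            all_pos = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_neg = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_pos or all_neg:
                framebuffer[y][x] = 1
    return framebuffer


fb = rasterize([(0, 0), (8, 0), (0, 8)], 8, 8)
for row in fb:
    print("".join("#" if v else "." for v in row))
```

Notice that the triangle only ever looks at its own three points. Nothing in this loop knows about any other object in the scene, which is the limitation described above.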

See, the way light IRL works is that light can be thought of as a physical element called a photon. Let's assume photons are tiny balls of light. When a light source emits light IRL, what happens is it shoots photons, uncountable numbers of balls of light, in every direction. These photons bounce around the room until they eventually end up in our eyes. Pure white light is actually a spectrum; it contains every "color" at first. What we perceive as colors is actually a byproduct of photons bouncing around. When a photon makes contact with a physical entity, like a wall covered in paint, the "color" of the paint changes the properties of the photon as it bounces off. That is to say, the paint actually absorbs some of the light's spectrum. Say light begins like this:

Our green paint in the above example actually absorbs every part of the light spectrum EXCEPT the green, like this:

So the photon that ricochets off now only contains that part of the light spectrum. If that photon enters our eye immediately after bouncing off the paint, we will perceive it as green.

What this means in practice is that, IRL, the end light that comes into our eyes is not just a function of a single wall or object in space, we actually see the cumulative traced path of light as it bounces around the room. The end color we see when it enters our eyes, is the result of every single surface the photon bounced off of. Every surface affects the spectral properties of the light.
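A very simplified way to put numbers on this, assuming we squash the whole spectrum down to just three channels (red, green, blue) and treat each surface as a set of per-channel reflectance fractions. The surface values below are made up for illustration.

```python
WHITE = (1.0, 1.0, 1.0)  # equal parts red, green, blue


def bounce(light, reflectance):
    """A surface keeps only the fraction of each channel it reflects."""
    return tuple(l * r for l, r in zip(light, reflectance))


def trace_path(light, surfaces):
    """Apply every surface along the path, in order."""
    for reflectance in surfaces:
        light = bounce(light, reflectance)
    return light


green_paint = (0.0, 1.0, 0.0)  # idealised: absorbs everything except green
pink_wall = (1.0, 0.5, 0.5)    # hypothetical surface
grey_floor = (0.5, 0.5, 0.5)   # hypothetical surface

after_green = trace_path(WHITE, [green_paint])
after_two_bounces = trace_path(WHITE, [pink_wall, grey_floor])
```

`after_green` comes out as pure green, and `after_two_bounces` is a dim pink, because both the wall and the floor took their cut of the light on the way to the eye.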

Our rasterizer doesn't work like this at all. But ray tracing does. Ray tracing is a computer imagery technique that uses linear algebra to work through the path of a photon. It's using math to calculate all the stuff I talked about above. Instead of light entering our eyes, it becomes a pixel on the screen, as though that pixel were the photoreceptor in our eye that was intercepting the bouncing photon. It's basically like really, really advanced physics for light and color.
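The core building block of that math is asking "does this ray hit this object, and how far away?" Here is a small Python sketch for the classic case of a ray against a sphere. This is the textbook quadratic, not anything taken from the FPGA project, and the function name and scene values are assumptions for the example.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """Distance along the ray to the nearest hit, or None on a miss.

    `direction` must be unit length. Simplification: a ray that starts
    inside the sphere is reported as a miss.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c  # discriminant of the ray/sphere quadratic
    if disc < 0:
        return None   # the ray passes beside the sphere
    t = -b - math.sqrt(disc)
    return t if t > 0 else None


eye = (0.0, 0.0, 0.0)
straight_ahead = hit_sphere(eye, (0.0, 0.0, 1.0), (0.0, 0.0, 5.0), 1.0)
looking_up = hit_sphere(eye, (0.0, 1.0, 0.0), (0.0, 0.0, 5.0), 1.0)
```

A ray tracer runs a test like this for every pixel against the objects in the scene, then repeats it for each bounce, which is where the cost comes from.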

Ray tracing is slow because, as I mentioned much earlier in this post, crunching math takes actual time. And the more math you do, and the more complex it is, the longer it takes. You might have heard how movies like Toy Story and Jurassic Park have "render farms" which take "days to render a second." This is why. Movies don't need to render frames quickly. They can take as much time as they want to draw a single frame, because that frame will get saved in a file, and then the next frame, and so forth, until all frames are rendered, when they can be played back like a flip book to achieve animation. So how long it takes to render the frame doesn't matter, since you're going to do all of them first before playing it back. Because they have effectively unlimited time, they can actually walk through all the math needed for ray tracing.

Computer games need to present images insanely fast. Like, preferably, 60 times a second or faster. That works out to about 16.6 ms (0.0166 seconds) per frame. That is so little time to prepare a ray traced image. So new ray tracing hardware, things like RTX from Nvidia, gets so much hype because it contains circuitry meant to speed the entire process up enough for it to be done in such a short amount of time.
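If you want to see the arithmetic, here is a tiny Python sketch. The 640x480 resolution is only an assumed example (the post doesn't state one), and the per-pixel figure ignores the blanking intervals in a real video signal, so treat it as a rough upper bound.

```python
def frame_budget_ms(fps):
    """Milliseconds available to produce one frame."""
    return 1000.0 / fps


def pixel_budget_ns(fps, width, height):
    """Nanoseconds available per pixel if every pixel of every frame
    gets an equal share of the time (blanking ignored)."""
    return 1e9 / (fps * width * height)


print(f"{frame_budget_ms(60):.2f} ms per frame at 60 fps")
print(f"{pixel_budget_ns(60, 640, 480):.1f} ns per pixel at 640x480")
```

So at 60 frames per second you get roughly 16.7 ms per frame, and if you are racing the beam at that assumed resolution, only a few dozen nanoseconds per pixel.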

Last bit of what this guy did -- he built his raytracer using an old piece of hardware, which is impressive.

I should note, this is also how many impressive demos on Shadertoy work:

Things like this are done using ray tracers written in GLSL/HLSL for video cards.

EDIT: For those curious, here's a basic shader that is meant as a cheat sheet for raymarching primitives: https://www.shadertoy.com/view/Xds3zN

You can view the math of the individual functions in that shader, as they're labeled for clarity.
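For anyone who doesn't read GLSL, here is the basic raymarching loop as a Python sketch. This is not the code from that shader; it is a generic "sphere tracing" loop with a single sphere distance function, and the names, step limit and thresholds are assumptions chosen for the example.

```python
import math


def sphere_sdf(p, radius=1.0):
    """Signed distance from point p to a sphere centred at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius


def raymarch(origin, direction, sdf, max_steps=64, eps=1e-4, max_dist=100.0):
    """Step along the ray by the distance to the nearest surface until
    we are close enough to call it a hit. Returns the distance travelled,
    or None if nothing was hit."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t       # close enough: treat as a hit
        t += dist          # safe to advance this far without overshooting
        if t > max_dist:
            return None    # flew off into empty space
    return None


hit = raymarch((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = raymarch((0.0, 0.0, -5.0), (0.0, 1.0, 0.0), sphere_sdf)
```

The primitives in the cheat sheet are all functions in the spirit of `sphere_sdf`: give them a point, get back a distance. Swap in a different distance function and the same loop draws a different shape.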

Amazing post, very informative and definitely among the best I've seen on here.

Can anyone point me to the most informative Amiga/Commodore Documentary. Or top two?

My friends and classrooms in the mid 80s-90s had Macs and PCs. Back then I thought the Commodore was just a regular PC, that was branded as a home console.

Commodore produced regular IBM compatible PCs in the 80s as well, aimed at business users with their (relatively) high resolution sharp green monochrome displays. But they were probably more well known among consumers for the C64 and the Amiga.

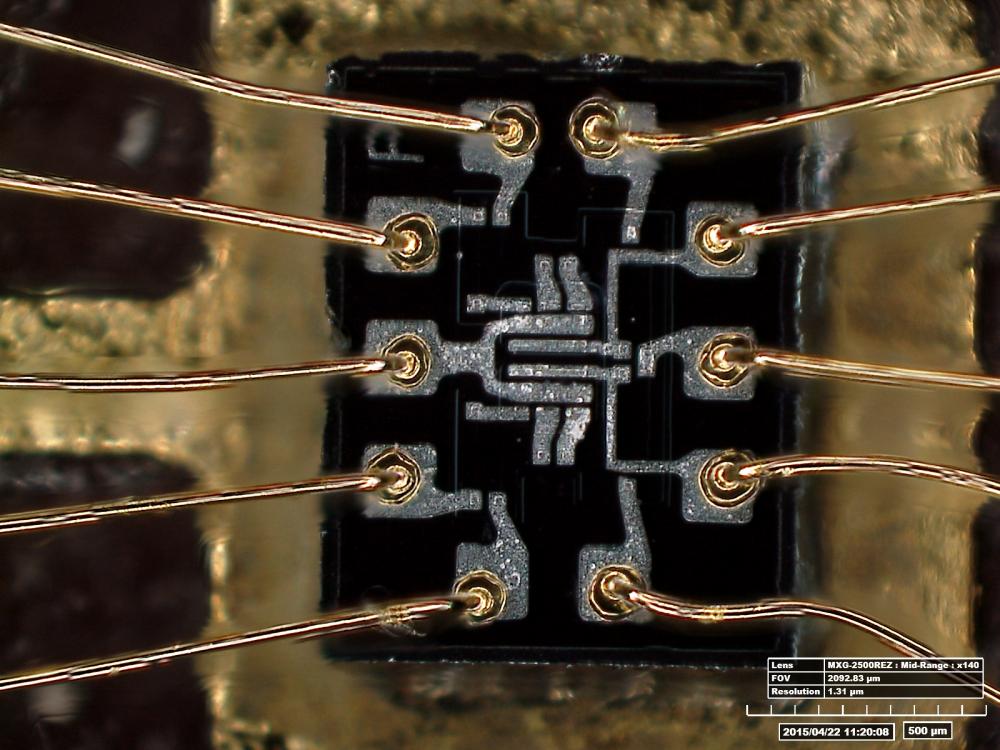

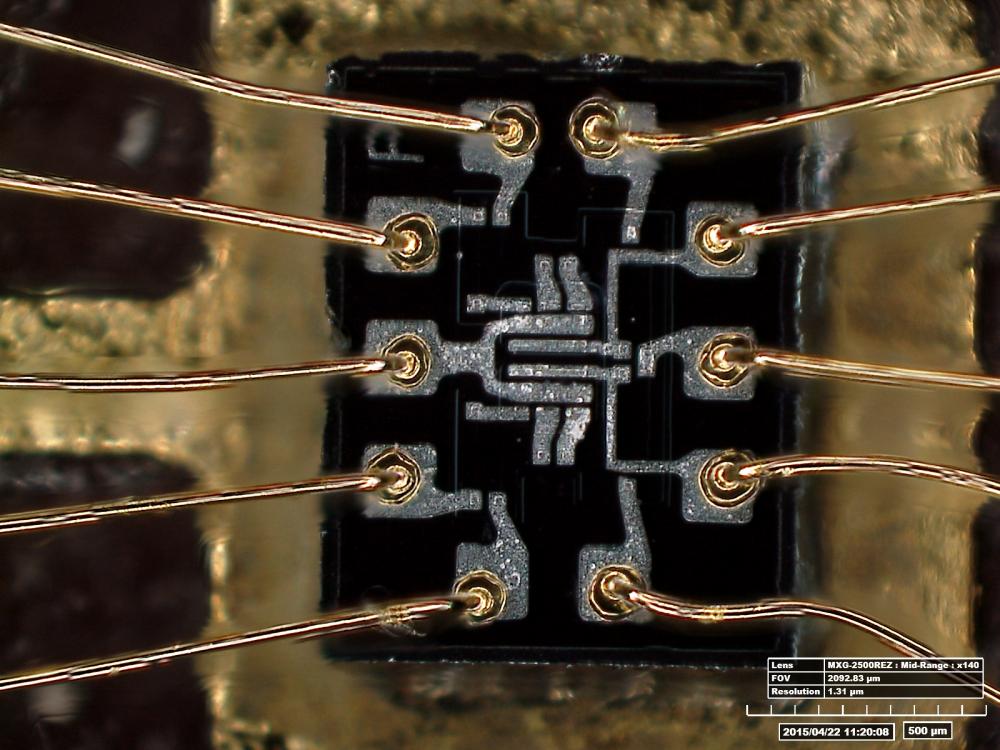

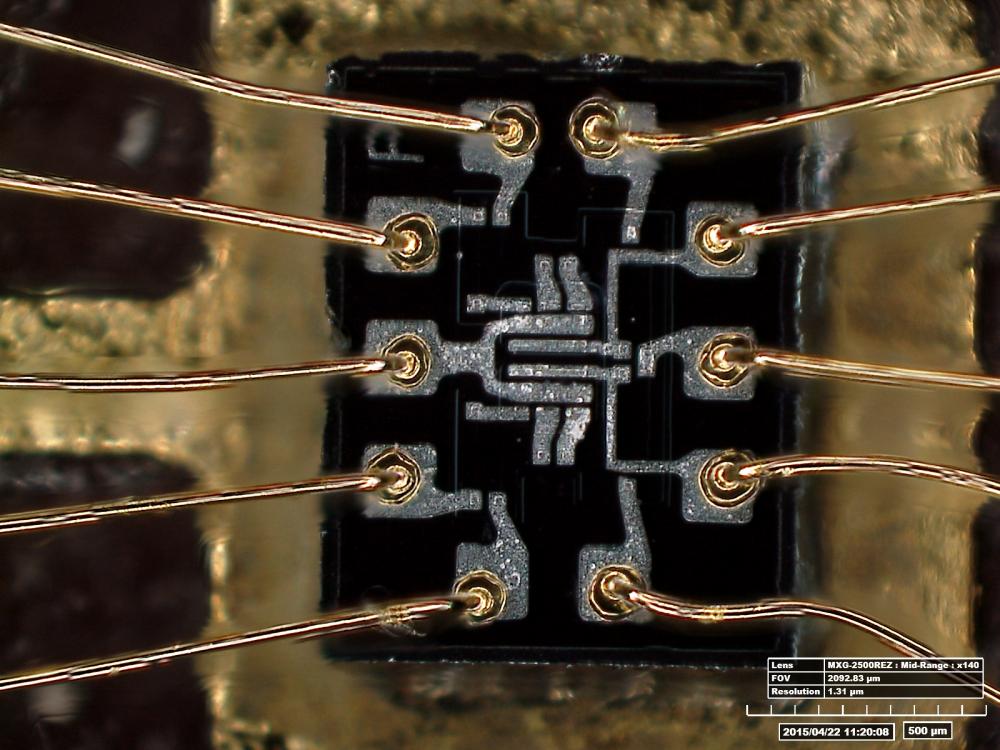

You are an ERA treasure. Thank you.

The best way to think of an FPGA is that it works kind of like a hardware chameleon. Computer chips are actually a bunch of very tiny circuits inside, with pins coming out of them to communicate to the outside world. From the outside, a computer chip looks like this:

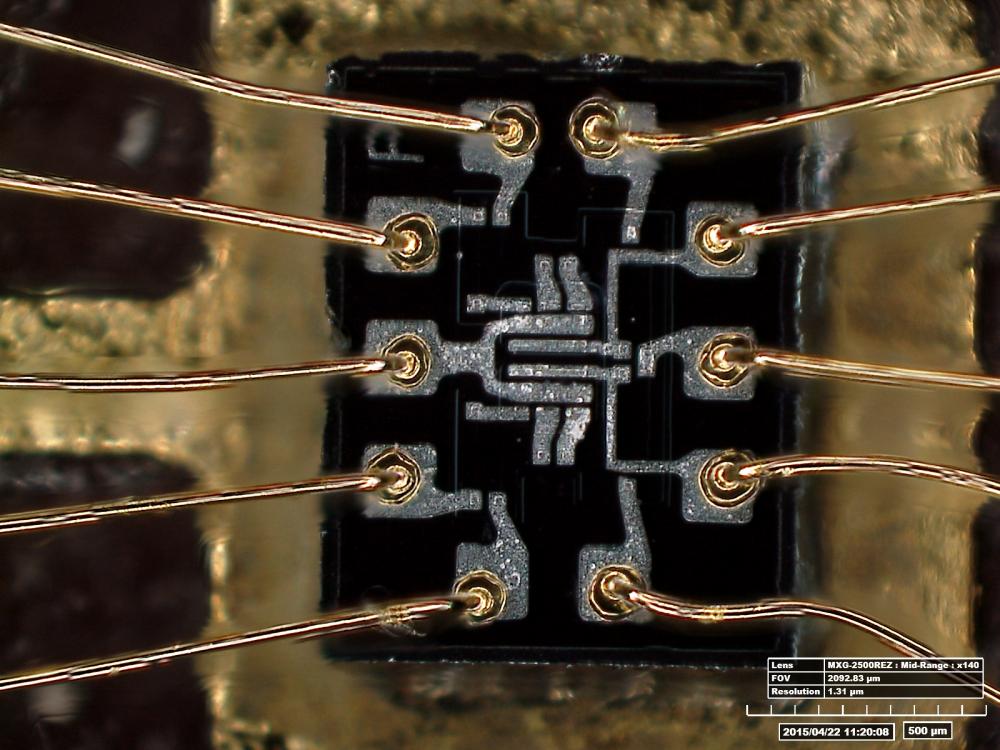

The black bit houses all the circuits, while the metal pins are the way you get data into and out of the circuit via electrical pulses. Inside the chip, it might look like this:

The metal bits are actual conduits to push electricity around. In EE, different kinds of circuits do different things. The "language" of designing a circuit is a bunch of smaller circuits called gates. Gates do individual things: one might look at the input of two pins and, depending on whether electricity is going through one, or both, or neither, turn its output on or off. By stringing together multiple gates, you can build circuits to accomplish specific tasks; a chip built for one such task is called an application-specific integrated circuit (ASIC). For example, the circuit for half-addition is made up of 1 XOR gate and 1 AND gate.
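The half adder mentioned above is small enough to write out. This Python sketch models each gate as a function on single bits (0 or 1); the function names are just for illustration.

```python
def xor_gate(a, b):
    """Output is 1 when exactly one input is 1."""
    return a ^ b


def and_gate(a, b):
    """Output is 1 only when both inputs are 1."""
    return a & b


def half_adder(a, b):
    """Add two single bits. Returns (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)


for a in (0, 1):
    for b in (0, 1):
        s, c = half_adder(a, b)
        print(f"{a} + {b} -> sum={s} carry={c}")
```

The XOR gate produces the sum bit and the AND gate produces the carry, so 1 + 1 gives sum 0 with carry 1, which is binary 10.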

When designing hardware, you build these gates onto the chip physically, using wire and metal and all that. You route the metal permanently on the chip, so the chip only does one task ever.

FPGAs are field-programmable gate arrays, which is a descriptive name. They're not a set chip. They are a bunch of gates in a row, but which gates they are can change. By loading a configuration into the FPGA, you can change what its gates do. So the first gate in one program might be an AND gate, and the next program might make that same first gate an XOR gate. In this regard, it's like the chip is actually changing shape. By studying the design of other chips, you can rearrange the FPGA's gates into a simulation of the other chip by replicating its logic.
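One simplified way to picture a reconfigurable gate, sketched in Python: in practice FPGAs build their logic out of small lookup tables (LUTs) rather than literally reshaping gates, so "programming" a gate means filling in its truth table. The 2-input size and the names below are assumptions for the example; real parts use larger tables plus configurable wiring between them.

```python
def make_lut(truth_table):
    """Build a 2-input 'gate' from 4 configuration bits.

    truth_table lists the output for inputs (0,0), (0,1), (1,0), (1,1).
    """
    def gate(a, b):
        return truth_table[(a << 1) | b]
    return gate


AND_CONFIG = (0, 0, 0, 1)
XOR_CONFIG = (0, 1, 1, 0)

# Same "hardware", two different configurations.
first_gate = make_lut(AND_CONFIG)
and_result = first_gate(1, 1)

first_gate = make_lut(XOR_CONFIG)
xor_result = first_gate(1, 1)
```

Load a different table and the same slot on the chip behaves like a different gate, which is the chameleon trick.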

So, again, it's like a chameleon. It's a chip that can turn into other chips, provided it has enough space. So it's NOT like a shitty arduino at all, pretty much the exact opposite. FPGAs are super useful for allowing people to make "new" versions of chips that haven't been produced in decades through simulation.

In this case, the guy designed some circuitry that facilitates faster raytracing.

So worth at least a $5 rental: https://frombedroomstobillions.com/amiga

Hands down the best Amiga documentary. The Amiga was the most ahead-of-its-time computer of all time. A quantum leap forward for computer graphics. Consumers and the games media industry kind of get the story of computer gaming evolution wrong when they break things up into "generations" by company. It makes much more sense when you start following generations by teams, looking at who actually worked on what and what their follow-ups were.

The Amiga was the brainchild of Jay Miner, one of the most unsung heroes in all of graphics history. Jay Miner was, essentially, the father of the modern graphics chip. He built the revolutionary TIA chip -- the television interface adapter. He was basically the magic behind the Atari 2600. He followed that up with the Atari 400/800, and his second revision of the TIA chip, the ANTIC. The Atari 400/800 were massive leaps over the 2600, but got buried when Atari released the nearly identical, but incompatible Atari 5200 (with a fucked up joystick) that destroyed the market.

What followed was an insane series of twists and turns that saw Commodore and Atari basically switching companies. The Amiga is basically the follow-up to the follow-up to the Atari 2600. The "third gen" of the tech. The 16-bit evolution of the 2600 design. The Amiga happened because a bunch of the industry's best, youngest minds got fed up with being treated like garbage, came together, and decided to make a dream machine.

Get this: Windows 95 is considered revolutionary because it ushered in the real era of multitasking operating systems. It wasn't until 1999 that Apple joined in the multitasking world.

The Amiga had it in 1985.

Thanks! From Bedrooms to Billions huh? I see they have other docs I might be interested in.

Edit- yeah before I posted asking for a documentary, I went to YouTube and came across an old Amiga tutorial....and was like all that back then??? O_o

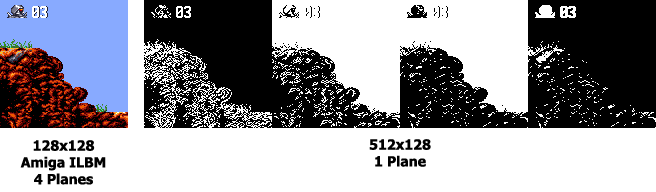

It goes way beyond multitasking. The Amiga has a full graphics co-processor inside, called Copper. It's an entirely separate processor that you write miniature programs for, called copperlists, which can adjust memory values independently of the Amiga CPU itself. Copperlists are timed to the television output directly, and thus provide a way to automatically adjust, per scanline, what is being drawn to the screen. That includes memory locations for things like the palette, sprite locations, background pixels, etc. That's how the Amiga can do crazy gradients and stuff like this:

This was revolutionary. It is essentially the way modern graphics cards work, but back in 1985. In many ways, copperlists are basically the forerunners to modern programmable shaders. Except, where modern shaders are per fragment, or per vertex, the copperlist is per scanline. You can treat them like per-scanline shaders. All the way back in 1985.
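To give a feel for the idea, here is a toy Python simulation of a copperlist changing the background color as the beam moves down the screen. This is emphatically not the real Copper instruction encoding (the real chip has its own WAIT and MOVE instructions and hardware register addresses); the tuple format, the `"background"` register name and the color values are all invented for the example.

```python
def run_copperlist(copperlist, num_lines):
    """Return the background colour shown on each scanline.

    copperlist: (wait_line, register, value) tuples, meaning "once the
    beam reaches wait_line, write value into register".
    """
    registers = {"background": 0x000}
    pending = sorted(copperlist)
    shown = []
    i = 0
    for line in range(num_lines):
        # Apply every write whose wait position the beam has reached.
        while i < len(pending) and pending[i][0] <= line:
            _, reg, value = pending[i]
            registers[reg] = value
            i += 1
        shown.append(registers["background"])
    return shown


# A three-step blue gradient, changing colour every two scanlines.
gradient = [
    (0, "background", 0x003),
    (2, "background", 0x007),
    (4, "background", 0x00B),
]
colours = run_copperlist(gradient, 6)
```

The main CPU never touches the color register while the frame is being drawn. The list runs on its own, in step with the beam, which is what makes it feel like a per-scanline shader.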

Damn, thank you for all this info.

I didn't realise that things like preemptive multitasking existed back then... And these scanline-level manipulations.

Just found this on wikipedia, regarding Copper: "It can be used to change video settings mid-frame. This allows the Amiga to change video configuration, including resolution, between scanlines". Wow, lol. Are these copper lists a form of display list in a way?

This is a lot to take in, so strap in:

Firstly, the way computer graphics have traditionally worked is that you have a smaller, miniature machine inside of your computer, now usually called a GPU, that interfaces with the output monitor/television. Your computer talks to this machine using memory, commanding the GPU to draw to the television. The way the GPU draws is that it sets aside a small area of memory called a frame buffer. Think of a frame buffer like a canvas that artists use. The computer sends all the numeric data that represents the polygons to the GPU, and the GPU crunches the math and figures out how to translate those raw numbers into pixels. As it figures out these pixels, it draws them onto the frame buffer, like an artist painting on a canvas. Once the entire image is drawn on the frame buffer, the GPU then waits for a signal from the television to send the image all at once.

The reason televisions and GPUs talk in this manner, where the graphics chip waits on the timing of the television, is because of how televisions worked going all the way back to the early 1900s. Old CRT televisions (boob tubes) had an electron gun inside (I'll call it the raster gun), and magnets would bend the beam it shot at the screen itself. This gun would literally paint glowing light onto the screen in a pattern, starting from the top left portion of the television screen, to the bottom right portion of the screen. This is a physical gun, the beam actually moves and is drawn line by line going down the screen. While the gun is drawing, data has to be sent from the GPU to the television in precise timing. The moment the television is ready to draw the appropriate pixel, the GPU needs to be sending it. If the GPU is late in sending the pixel, the image will be destroyed and look like gibberish, kind of like this:

Thus, to ensure that the data that needs to be sent is always ready for the gun, you draw the entire scene to the framebuffer, to hold ready-to-send pixels to the television. The opposite of doing this is known as "racing the beam." What that means is that the CPU sends pixel information to the GPU and the GPU immediately passes it to the television as soon as it receives it. This is much trickier, as you need to figure out exactly how long your calculations are going to take. When you send data to the GPU, as I said, it crunches math, and processors actually take time to do math. It might seem instant to us, but that's because computers operate in time minuscule to us. If we could slow everything down, you'd see that when you "race the beam" you are doing precisely that. It's a race for your CPU to crunch all the math necessary before the raster beam is in position. You are under a constant deadline.
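Here's a small Python sketch of the difference, under some loud assumptions: `shade` is a hypothetical stand-in for whatever math produces a pixel, the "screen" is 4x3, and real hardware timing is not modelled at all. The framebuffer version computes every pixel first and then hands them over; the beam-racing version computes each pixel at the moment the display asks for it and stores nothing.

```python
WIDTH, HEIGHT = 4, 3  # a tiny made-up screen


def shade(x, y):
    """Hypothetical stand-in for the math that decides one pixel's value."""
    return (x + y) % 2


def render_with_framebuffer():
    """Draw the whole picture into memory first, then send it out in scan order."""
    framebuffer = [[shade(x, y) for x in range(WIDTH)] for y in range(HEIGHT)]
    for y in range(HEIGHT):
        for x in range(WIDTH):
            yield framebuffer[y][x]


def race_the_beam():
    """Compute each pixel only when the beam reaches it; nothing is stored."""
    for y in range(HEIGHT):
        for x in range(WIDTH):
            yield shade(x, y)


buffered = list(render_with_framebuffer())
raced = list(race_the_beam())
print(buffered == raced)
```

Both produce the same picture. The catch that the toy hides is the deadline: in the second version `shade` has to finish before the beam moves on to the next pixel, every single time.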

Modern televisions technically do not work like this anymore in function, but because old televisions formed the basis for all digital images even today, there are vestigial timing remnants of this entire system. Even on a progressive scan television (where the image isn't painted on by a raster gun, but rather displayed on the screen all at once instantly), it still will fake the timing of a raster gun because that's just how we have decided for a century how digital displays work.

That explains the "race the beam" part, but that's only part of what's going on here. The other half pertains to how GPUs turn 3D math into pixels. This is traditionally done through a process known as rasterization. Rasterization is a process where 3 points in space are calculated out and turned into a series of pixels, like so:

Conventionally, computer graphics will do this for every polygon in a scene individually. This is how we "paint" on the framebuffer, the rasterized polygons are stored as pixels in the frame buffer. Individual polygons are thusly usually rendered without regard to the rest of the scene, they only concern their data. Now, with modern graphics technologies, we can share data about the environment, like if the polygon is close to a light source or something, so that the rasterizer can take that into consideration when determining the output pixel of the polygon. Like if this polygon is red, but it's next to a blue light, the rasterizer can take that into account and shade the polygon purple. This degree of interaction between parts of the world is thusly limited and basic compared to how real light works.
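For anyone who wants to see rasterization as code, here's a minimal Python sketch. It uses edge functions (a common way to test whether a pixel centre is inside a triangle), which is my choice for illustration and not necessarily what any particular GPU does. The triangle coordinates and the 8x8 grid are made up.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: which side of the edge A->B the point P lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the (x, y) pixels whose centres fall inside the triangle."""
    covered = []
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel centre
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # Accept either winding order: all three tests must agree in sign.
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                covered.append((x, y))
    return covered


triangle = ((1, 1), (7, 1), (1, 7))  # three 2D points, already projected
pixels = set(rasterize_triangle(*triangle, 8, 8))
for y in range(8):
    print("".join("#" if (x, y) in pixels else "." for x in range(8)))
```

Notice that the function only ever looks at its own three points. That is the "without regard to the rest of the scene" part: nothing in here knows about lights, walls, or other polygons.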

See, the way light IRL works is that light can be thought of as a physical element called a photon. Let's assume photons are tiny balls of light. When a light source emits light IRL, what happens is it shoots photons, uncountable numbers of balls of light, in every direction. These photons bounce around the room until they eventually end up in our eyes. Pure, white light is actually a spectrum, it contains every "color" at first. What we perceive as colors is actually a byproduct of photons bouncing around. When a photon makes contact with a physical entity, like a wall covered in paint, as it bounces off, the "color" of the paint changes the properties of the photon. That is to say, the paint actually absorbs some of the light's spectrum. Say light begins like this:

Our green paint in the above example actually absorbs every part of the light spectrum EXCEPT the green, like this:

So the photon that ricochets off now only contains that part of the light spectrum. If that photon enters our eye after bouncing off the paint immediately, we will perceive it as green.

What this means in practice is that, IRL, the end light that comes into our eyes is not just a function of a single wall or object in space, we actually see the cumulative traced path of light as it bounces around the room. The end color we see when it enters our eyes, is the result of every single surface the photon bounced off of. Every surface affects the spectral properties of the light.
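A quick way to play with this idea in Python, with the big simplification that the spectrum is squashed down to three bands (red, green, blue) and each surface just keeps a fraction of each band. The reflectance numbers below are invented for the example.

```python
# Fraction of (red, green, blue) light that each surface reflects.
# These values are made up purely for illustration.
WHITE_LIGHT = (1.0, 1.0, 1.0)
GREEN_PAINT = (0.1, 0.8, 0.1)
RED_BRICK = (0.7, 0.2, 0.1)


def bounce(light, reflectance):
    """One bounce: the surface absorbs whatever it does not reflect."""
    return tuple(band * kept for band, kept in zip(light, reflectance))


def trace_path(light, surfaces):
    """Follow the light across every surface it hits, in order."""
    for reflectance in surfaces:
        light = bounce(light, reflectance)
    return light


one_bounce = trace_path(WHITE_LIGHT, [GREEN_PAINT])
two_bounces = trace_path(WHITE_LIGHT, [GREEN_PAINT, RED_BRICK])
print("off the green wall:", one_bounce)
print("then off the brick:", two_bounces)
```

After one bounce the light is mostly green. After the second bounce the brick has eaten most of that green too, so what reaches the eye depends on every surface along the path, not just the last one.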

Our rasterizer doesn't work like this at all. But ray tracing does. Ray tracing is a computer imagery technique that uses linear algebra to work through the path of a photon. It's using math to calculate all the stuff I talked about above. Instead of light entering our eyes, it instead becomes a pixel on the screen, as though that pixel was the rod in our eye that was intercepting the bouncing photon. It's basically like really, really advanced physics for light and color.
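The core building block of a ray tracer is "does this ray hit this object, and where?" Here's a bare-bones Python sketch for a single sphere, assuming a camera at the origin looking down the negative z axis and a made-up scene (one sphere of radius 1, three units away). It only finds the first hit; there are no bounces, lights or colors here.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along the ray to the nearest hit in front of it, or None.

    Solves |origin + t * direction - center|^2 = radius^2 for t.
    Simplification: a ray starting inside the sphere is reported as a miss.
    """
    oc = tuple(o - c for o, c in zip(origin, center))
    a = sum(d * d for d in direction)
    b = 2.0 * sum(o * d for o, d in zip(oc, direction))
    c = sum(o * o for o in oc) - radius * radius
    discriminant = b * b - 4.0 * a * c
    if discriminant < 0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(discriminant)) / (2.0 * a)
    return t if t > 0 else None


def render(width=16, height=8):
    """Shoot one ray per pixel and mark the pixels that see the sphere."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            u = (i + 0.5) / width * 2 - 1   # -1 (left) .. +1 (right)
            v = 1 - (j + 0.5) / height * 2  # +1 (top)  .. -1 (bottom)
            hit = ray_sphere_hit((0, 0, 0), (u, v, -1.0), (0, 0, -3), 1.0)
            row += "#" if hit is not None else "."
        rows.append(row)
    return rows


rows = render()
print("\n".join(rows))
```

Note that each pixel is computed completely independently, straight from its screen position. That property is what makes it possible to produce pixels in scan order without ever storing the whole picture.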

Ray tracing is slow because, as I mentioned much earlier in this post, crunching math takes actual time. And the more math you do, the more complex it is, the longer it takes. You might have heard how movies like Toy Story and Jurassic Park and all those have "render farms" which take "days to render a second." This is why. Movies don't need to render frames quickly. They can take as much time as they want to draw a single frame, because that frame will get saved in a file, and then the next frame, and so forth, until all frames are rendered, when they can be played back like a flip book to achieve animation. So how long it takes to render the frame doesn't matter, since you're going to do all of them first before playing it back. Because they have effectively infinite time, they can actually walk through all the math needed for ray tracing.

Computer games need to present images insanely fast. Like, preferably, 60 times a second or faster. That works out to about 16.6 ms (0.0166 seconds) per frame. That is so little time to prepare a raytraced image. So new ray tracing hardware, things like the RTX from Nvidia, are such hype because they contain circuitry and elements meant to speed the entire process up enough for it to be done in such a short amount of time.
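If you want to check the arithmetic yourself, here's a tiny Python calculation. The 1920x1080 resolution is my assumption for the per-pixel figure (it isn't from the post), and it ignores blanking intervals, so treat the per-pixel number as a rough upper bound.

```python
TARGET_FPS = 60
WIDTH, HEIGHT = 1920, 1080  # assumed resolution, only for the per-pixel figure


def frame_budget_ms(fps):
    """Milliseconds available to produce one frame."""
    return 1000.0 / fps


def pixel_budget_ns(fps, width, height):
    """Nanoseconds available per pixel if every pixel gets an equal share."""
    return 1e9 / (fps * width * height)


frame_ms = frame_budget_ms(TARGET_FPS)
pixel_ns = pixel_budget_ns(TARGET_FPS, WIDTH, HEIGHT)
print(f"{frame_ms:.2f} ms per frame")
print(f"{pixel_ns:.2f} ns per pixel")
```

So one frame gets a bit under 17 milliseconds, and at that resolution each pixel gets only a handful of nanoseconds, which is why dedicated circuitry matters so much.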

Last bit of what this guy did -- he built his raytracer using an old piece of hardware, which is impressive.

I should note, this is also how many impressive demos on Shadertoy work:

Things like this are done using ray tracers written in GLSL/HLSL for video cards.

EDIT: For those curious, here's a basic shader that is meant as a cheat sheet for raymarching primitives: https://www.shadertoy.com/view/Xds3zN

You can view the math of the individual functions in that shader, as they're labeled for clarity.
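For a feel of what raymarching does without reading GLSL, here's a rough Python sketch of sphere tracing against a single signed distance function. This is my own minimal version, not code from the linked shader; the step limit, distance limit and epsilon are arbitrary choices, and the ray direction is assumed to be unit length.

```python
import math


def sphere_sdf(point, radius=1.0):
    """Signed distance from a point to a sphere centred at the origin."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z) - radius


def raymarch(origin, direction, sdf, max_steps=64, max_dist=20.0, eps=1e-4):
    """Step along the ray by the distance the SDF says is safe.

    Returns the distance travelled when the surface is reached, or None.
    `direction` is assumed to be normalised.
    """
    travelled = 0.0
    for _ in range(max_steps):
        point = tuple(o + travelled * d for o, d in zip(origin, direction))
        distance = sdf(point)
        if distance < eps:
            return travelled  # close enough to call it a hit
        travelled += distance
        if travelled > max_dist:
            break  # wandered off into empty space
    return None


hit = raymarch((0.0, 0.0, 3.0), (0.0, 0.0, -1.0), sphere_sdf)
miss = raymarch((0.0, 0.0, 3.0), (0.0, 1.0, 0.0), sphere_sdf)
print("hit distance:", hit)
print("miss:", miss)
```

The primitives in that cheat sheet are basically a collection of different `sdf` functions; swap one in and the same marching loop draws a box or a torus instead.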

The best way to think of an FPGA is that it works kind of like a hardware chameleon. Computer chips are actually a bunch of very tiny circuits inside, with pins coming out of them to communicate to the outside world. From the outside, a computer chip looks like this:

The black bit houses all the circuits, while the metal pins are the way you get data into and out of the circuit via electrical pulses. Inside the chip, it might look like this:

The metal bits are actual conduits to push electricity around. In EE, different kinds of circuits do different things. The "language" of designing a circuit is a bunch of smaller circuits called gates. Gates do individual things, like they might look at the input of two pins, and depending on if electricity is going through one, or both, or neither, it'll route the output electricity to different pins. By stringing together multiple gates, you can build circuits to accomplish specific tasks, called application specific integrated circuits. For example, the circuit for half-addition is made up of 1 XOR gate and 1 AND gate in an array.
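The half-adder example is small enough to write out in Python. This is just a software model of the two gates, with bits represented as the integers 0 and 1; the function names are mine.

```python
def xor_gate(a, b):
    """Output is 1 when exactly one input is 1."""
    return a ^ b


def and_gate(a, b):
    """Output is 1 only when both inputs are 1."""
    return a & b


def half_adder(a, b):
    """Add two single bits: XOR gives the sum bit, AND gives the carry bit."""
    return xor_gate(a, b), and_gate(a, b)


for a in (0, 1):
    for b in (0, 1):
        total, carry = half_adder(a, b)
        print(f"{a} + {b} -> sum={total} carry={carry}")
```

Chain enough of these together (plus a few more gates to handle the incoming carry) and you get the adders that sit inside every CPU.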

When designing hardware, you build these gates onto the chip physically, using wire and metal and all that. You route the metal permanently on the chip, so the chip only does one task ever.

FPGAs are field-programmable gate arrays, which is a descriptive name. They're not a set chip. They are a bunch of gates in a row, but which gates they are can change. By providing software to the FPGA, it can physically morph its gates. So the first gate in one program might be an AND gate, and the next program might make that same first gate an XOR gate the next time. In this regard, it's like the chip is actually changing shape. By studying the design of other chips, you can rearrange the FPGA gates into a simulation of the other chip by replicating its logic.
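One way to picture a gate that "can change" is as a tiny lookup table: the configuration you load is just the truth table, and loading a different table turns the same cell into a different gate. Here's a toy Python model of that idea. The class and the 2-input size are made up for illustration; real FPGA logic cells are bigger and come with routing, flip-flops and so on.

```python
class LookupTable2:
    """A toy 2-input logic cell whose behaviour is whatever table is loaded."""

    def __init__(self):
        self.table = [0, 0, 0, 0]  # outputs for inputs 00, 01, 10, 11

    def configure(self, truth_table):
        """Load a new 4-entry truth table (the toy 'bitstream')."""
        if len(truth_table) != 4:
            raise ValueError("a 2-input table needs exactly 4 entries")
        self.table = list(truth_table)

    def __call__(self, a, b):
        return self.table[(a << 1) | b]


AND_CONFIG = [0, 0, 0, 1]
XOR_CONFIG = [0, 1, 1, 0]

cell = LookupTable2()

cell.configure(AND_CONFIG)
as_and = [cell(a, b) for a in (0, 1) for b in (0, 1)]

cell.configure(XOR_CONFIG)  # same cell, new personality
as_xor = [cell(a, b) for a in (0, 1) for b in (0, 1)]

print("as AND:", as_and)
print("as XOR:", as_xor)
```

Same object, two different gates, and nothing was rewired by hand. Scale that up to many thousands of cells and you have the chameleon.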

So, again, it's like a chameleon. It's a chip that can turn into other chips, provided it has enough space. So it's NOT like a shitty Arduino at all, pretty much the exact opposite. FPGAs are super useful for allowing people to make "new" versions of chips that haven't been produced in decades through simulation.

In this case, the guy designed some circuitry that facilitates faster raytracing.

This.

Wow! You've done what I thought impossible in actually making a wall of text post on ERA that's actually worth reading. You helped me finally understand FPGAs better.

Do you have any examples of scenes in demos that show this off?

This trick is known as chunkycopper, and is the basis for a lot of really impressive Amiga demos. It is all possible because the Amiga has an amazingly useful and flexible graphics co-processor.

If I can make an additional point that blew me away when I read it (if people find the above a bit daunting), it's that the "programs" used to write/burn/morph an FPGA into another type of chip are literally the blueprints to make that kind of chip. So if one wanted, say, a replica of the YM2612 chip, they could use this blueprint chip manufacturers would've used, and essentially use it to turn an FPGA into that exact chip.

Do I have that right? That's what I gathered from reading something and it helped me understand the concept of FPGAs quite a bit easier. Though not as detailed as your description... I'm so much more knowledgeable about them now!

FPGA cores are written in what is known as a hardware description language, or HDL. There are a few different HDLs, like Verilog or VHDL (which is, confusingly, different than Verilog HDL). These HDLs describe a chip, but the chip it describes is not necessarily the thing it's simulating, depending on how the HDL is derived. In many ways, it's like the difference between normal emulation and cycle accurate emulation -- if you are basing your HDL on eyeballing functionality, then your FPGA core is really no different than emulation in terms of accuracy or speed. What that means is that you are less concerned with actually simulating the original chip correctly, and are just trying to replicate the function without the form. Like, you note that the chip you're replicating has circuitry to, for example, perform a math operation like generating a sine wave, and thus write your own implementation that does the same, without considering how the original chip actually implemented the same function. The devil is in the detail, and although your chips do the same thing in function, they might differ in timing or other crucial elements.

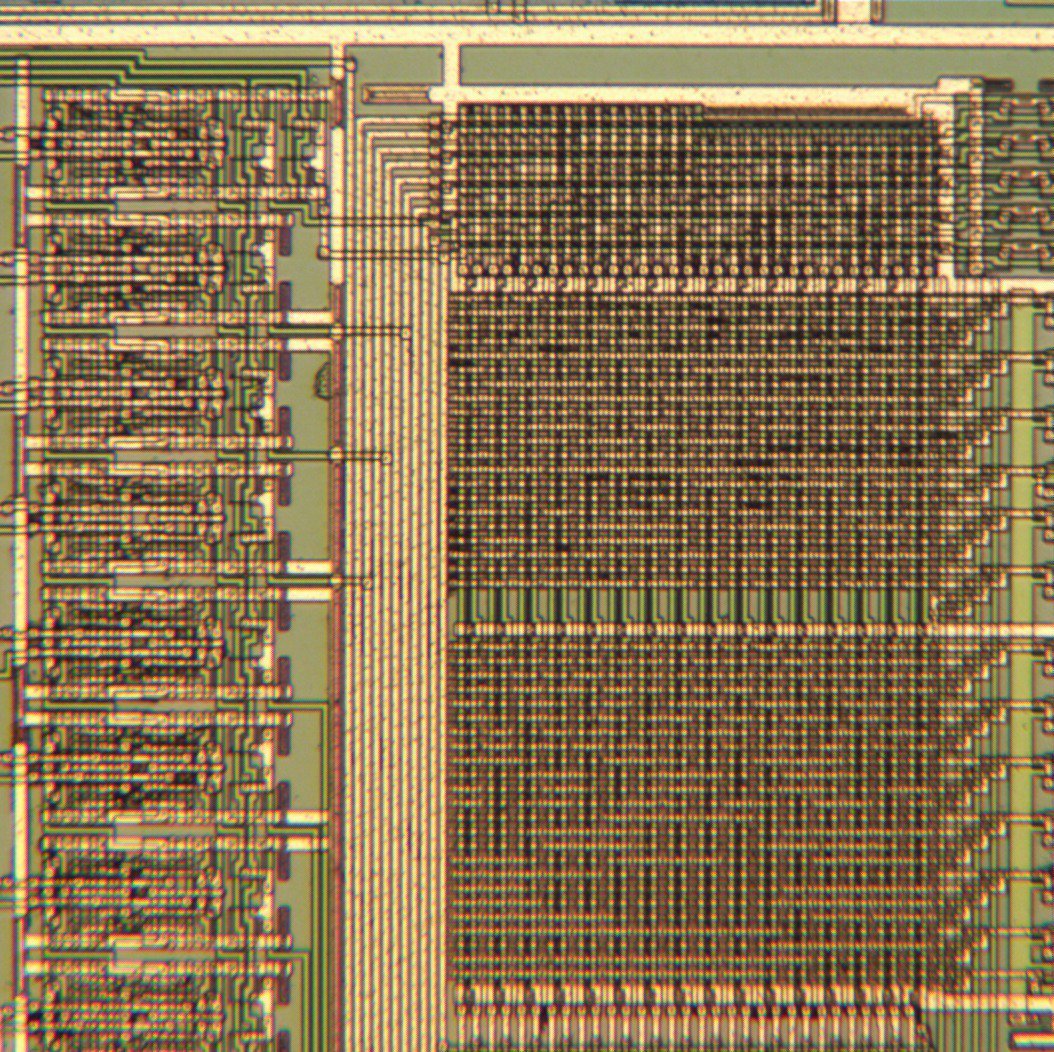

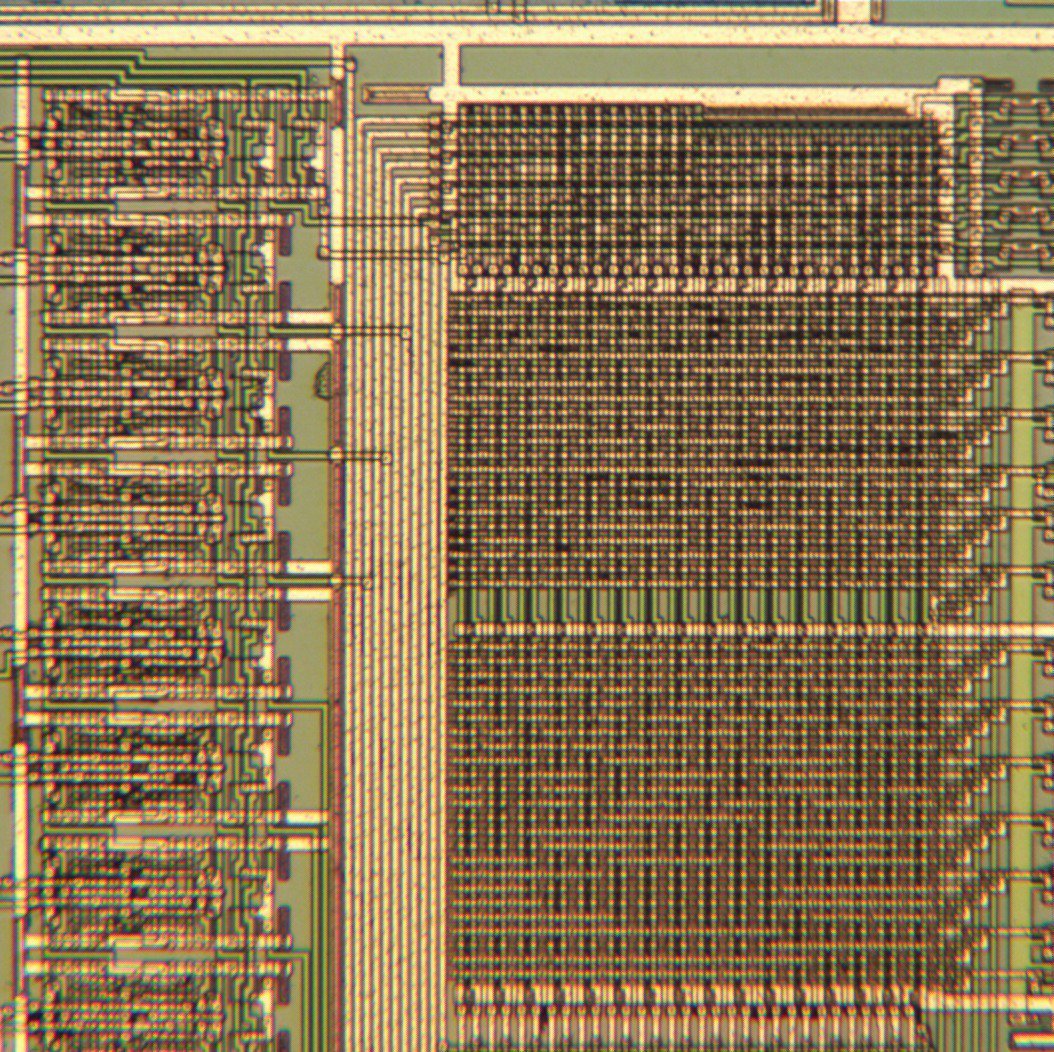

The opposite of doing things this way is to use microphotography, like literally using extremely sensitive microscopes on a chemically exposed chip, layer by layer, to peel it away and physically examine the traces inside. Like so:

Using microphotography, you can see the exact implementations in the original chip and mimic the timing. In this regard, if you design your HDL using microphotography, then your HDL becomes literally a blueprint of the original chip. Just like any other software, how it's made matters.

Again, insanely informative. Thanks for the effort!

I also think that's the process used to create Nuked's OPN2 emulator, which is kind of how I found out about the process.