

So we should have Vsync on with Gsync then?

The fact that there is still disagreement over this really does highlight what a confusing minefield PC gaming can be.

G-sync on

V-sync on in NVCP

Frame cap.

Read the link above to see why v-sync is necessary.

Wait, why should I enable V-SYNC with G-SYNC again? And why am I still seeing tearing with G-SYNC enabled and V-SYNC disabled? Isn't G-SYNC supposed to fix that?

(LAST UPDATED: 05/02/2019)

The answer is frametime variances.

"Frametime" denotes how long a single frame takes to render. "Framerate" is the totaled average of each frame's render time within a one second period.

At 144Hz, a single frame takes 6.9ms to display (the number of which depends on the max refresh rate of the display, see here), so if the framerate is 144 per second, then the average frametime of 144 FPS is 6.9ms per frame.

In reality, however, frametime from frame to frame varies, so just because an average framerate of 144 per second has an average frametime of 6.9ms per frame, doesn't mean all 144 of those frames in each second amount to an exact 6.9ms per; one frame could render in 10ms, the next could render in 6ms, but at the end of each second, enough will hit the 6.9ms render target to average 144 FPS per.
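The numbers above are just reciprocals: a refresh rate in Hz (or a framerate in FPS) maps to a per-frame time budget of 1000 divided by that rate, in milliseconds. A minimal Python sketch of that arithmetic; the helper names are hypothetical and the sample frametimes are made up for illustration:

```python
def frametime_ms(rate: float) -> float:
    """Per-frame time budget in milliseconds for a rate in Hz (or FPS)."""
    return 1000.0 / rate


def average_fps(frametimes_ms: list[float]) -> float:
    """Average framerate implied by a run of individual frametimes."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


if __name__ == "__main__":
    for hz in (60, 100, 144, 240):
        print(f"{hz:>3} Hz -> {frametime_ms(hz):.2f} ms per refresh")

    # Individual frames vary (10 ms, 6 ms, ...) yet the average lands near 144 FPS.
    sample = [10.0, 6.0, 5.0, 6.8]
    print(f"average: {average_fps(sample):.1f} FPS")
```

At 144 Hz, `frametime_ms` gives about 6.94 ms, which the FAQ rounds to 6.9 ms.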

So what happens when just one of those 144 frames renders in, say, 6.8ms (146 FPS average) instead of 6.9ms (144 FPS average) at 144Hz? The affected frame becomes ready too early, and begins to scan itself into the current "scanout" cycle (the process that physically draws each frame, pixel by pixel, left to right, top to bottom on-screen) before the previous frame has a chance to fully display (a.k.a. tearing).

G-SYNC + V-SYNC "Off" allows these instances to occur, even within the G-SYNC range, whereas G-SYNC + V-SYNC "On" (what I call "frametime compensation" in this article) allows the module (with average framerates within the G-SYNC range) to time delivery of the affected frames to the start of the next scanout cycle, which lets the previous frame finish in the existing cycle, and thus prevents tearing in all instances.

And since G-SYNC + V-SYNC "On" only holds onto the affected frames for whatever time it takes the previous frame to complete its display, virtually no input lag is added; the only input lag advantage G-SYNC + V-SYNC "Off" has over G-SYNC + V-SYNC "On" is literally the tearing seen, nothing more.

For further explanations on this subject see part 1 "Control Panel," part 4 "Range," and part 6 "G-SYNC vs. V-SYNC OFF w/FPS Limit" of this article, or read the excerpts below…
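The tearing mechanism described above can be illustrated with a toy simulation. This is a rough sketch under simplifying assumptions, not a model of how the G-SYNC module actually works: each scanout is assumed to take exactly one max-refresh interval, and the GPU is assumed to keep rendering at the given frametimes regardless of the display. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    index: int
    ready_ms: float  # when the GPU finished rendering the frame
    shown_ms: float  # when the display starts scanning it out
    tore: bool       # True if it replaced the previous frame mid-scanout


def simulate_vrr(frametimes_ms: list[float], max_refresh_hz: float, vsync_on: bool) -> list[Frame]:
    """Toy VRR model (assumption: one scanout = 1000 / max_refresh_hz ms)."""
    scanout_ms = 1000.0 / max_refresh_hz
    frames: list[Frame] = []
    ready = 0.0
    scanout_end = 0.0  # when the previous frame finishes drawing
    for i, ft in enumerate(frametimes_ms):
        ready += ft
        if ready >= scanout_end:
            # Previous scanout is done: the refresh simply starts when the frame arrives.
            start, tore = ready, False
        elif vsync_on:
            # Frame arrived early: hold it until the previous scanout completes.
            start, tore = scanout_end, False
        else:
            # Frame arrived early and is flipped immediately: tear line.
            start, tore = ready, True
        frames.append(Frame(i, ready, start, tore))
        scanout_end = start + scanout_ms
    return frames


if __name__ == "__main__":
    # Average is ~142 FPS, inside a 144 Hz G-SYNC range, but frame 2 arrives early.
    times = [7.0, 7.2, 6.8, 7.1]
    for vsync in (False, True):
        for f in simulate_vrr(times, 144, vsync):
            delay = f.shown_ms - f.ready_ms
            print(f"vsync={vsync} frame {f.index}: tore={f.tore}, held {delay:.3f} ms")
```

In this toy run the average stays below 144 FPS, yet with V-Sync off frame 2 arrives before the previous scanout finishes and tears; with V-Sync on, the same frame is held for roughly 0.14 ms instead, in line with the FAQ's point that the hold adds virtually no input lag.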

Ok thanks.

This might be a dumb question, but should triple buffering be on also?

No no no no no no peeps.

I see many posts here saying you should enable V-Sync with G-Sync, and while it will work, it is a bad idea.

You will experience increased latency if you are running at the top of your refresh window. This is because V-Sync kicks in at that point and adds its inherent latency.

What you all should do is enable G-Sync, disable V-Sync everywhere, and then run a framerate cap using RTSS or similar that is 3-5 FPS lower than your max refresh rate (so 140 for 144, for example). This video and channel in general are great for this stuff:

If you want the lowest latency/input lag, sure. But even he goes into detail about settings for the smoothest gameplay and recommends vsync on if that is your priority. Gsync is completely pointless without vsync. Just get a high refresh rate monitor without gsync if you don't plan to use vsync.

Edit: he's also had videos explaining you can lower input lag by not capping frame rate if that is your priority.


It also reduces input lag if you hit the vsync ceiling, compared with having vsync alone.

Because not everyone shares those same experiences, and it seems the common assumption is that there's tons of tinkering necessary to play PC games.

Everyone posting in this thread is talking about their own experiences. I know I am. So telling us we are stating a "common misconception" is not helpful.

I believe there is no reason for triple buffering, as with G-Sync you don't need to buffer the frames. Triple buffering is used to work with V-Sync, which on its own requires it.

I might need correcting on this, though; if so, please someone do!


I believe this to be the case as well.

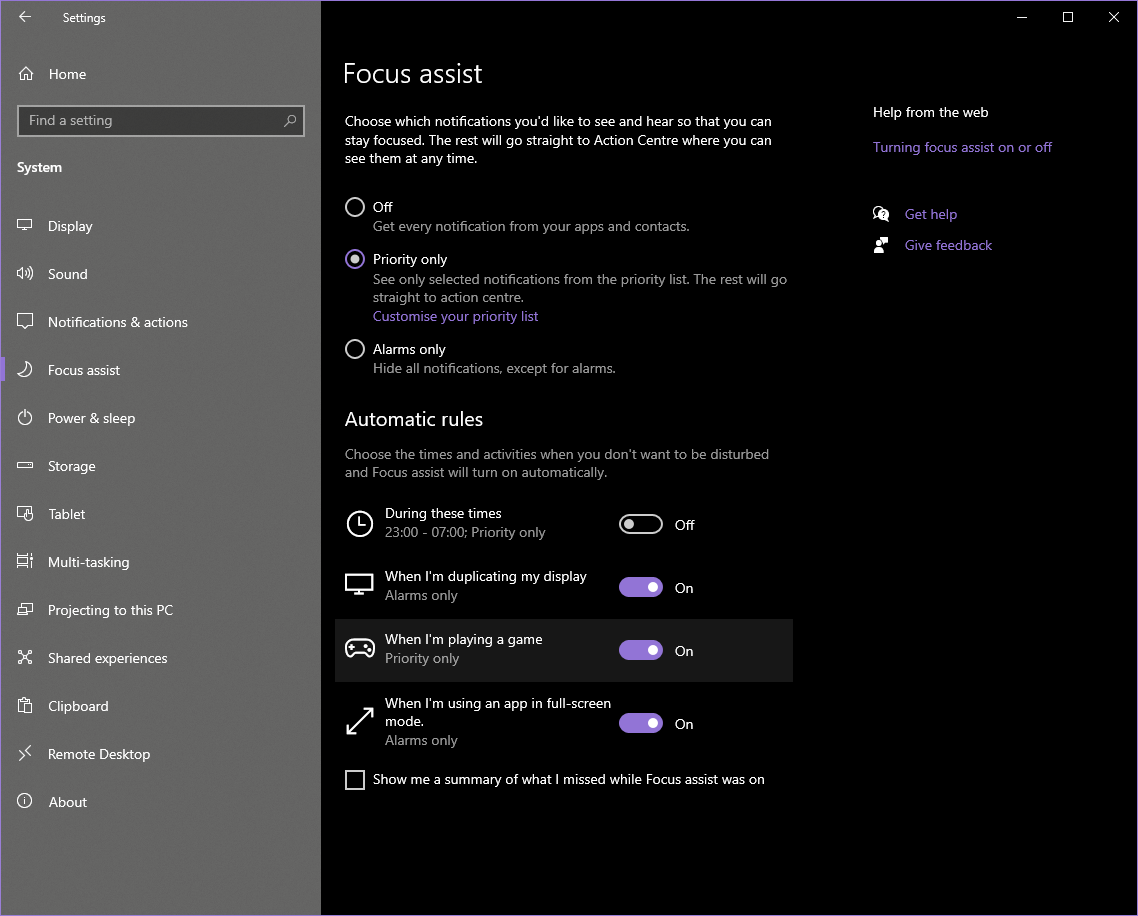

I wish Windows had the ability to go into a sort of "game mode" when it sees you've launched a game. Meaning, everything must stay full screen, and all alerts are silenced or can't minimize your game randomly if something needs your attention.

Just one question, do you have all of the issues you mentioned if you are running the desktop at 60Hz and the games at 60Hz?

Yes.

If anybody is wanting to feel better about their PC issues, give this eyepatch wolf twitter thread a read. what a wild roller coaster lol

This was a good read lol. Man, what a nightmare.

Yeah, I use rechargeable batteries and they last forever

OP, I would just try a different display. Not a duplicate unit, a completely different model. If you have a TV or something, just use that for a while and see if this happens over extended use.

It can technically be "anything", but since you already nuked Windows and tried a different GPU, that's the next step.

I've had a Logitech K400 Plus since, I dunno, 2015? And I haven't changed the batteries in it once yet.

But this obviously depends on how often you will be using the wireless keyboard.

FYI, that guide says to turn on vsync in the control panel. When gaming with VRR, you should use vsync.

Vsync should be on globally via Nvidia CP for gsync, and you do not need to cap your frames.

Read pages 9 and 15

G-SYNC 101: G-SYNC vs. V-SYNC OFF | Blur Busters

Many G-SYNC input lag tests & graphs -- on a 240Hz eSports monitor -- via 2 months of testing via high speed video!

G-SYNC 101: Closing FAQ | Blur Busters


You leave vsync off so as to avoid the large impact to input lag every time it kicks in. The ideal situation is that it never kicks in and gsync is maintained 100%, so it's not even a matter needing discussion.

G-SYNC adjusts the refresh rate to the framerate. If the framerate reaches or exceeds the max refresh rate at any point, G-SYNC no longer has anything to adjust, at which point it reverts to V-SYNC behavior (G-SYNC + V-SYNC "On") or screen-wide tearing (G-SYNC + V-SYNC "Off").

As for why a minimum of 2 FPS (and a recommendation of at least 3 FPS) below the max refresh rate is required to stay within the G-SYNC range, it's because frametime variances output by the system can cause FPS limiters (both in-game and external) to occasionally "overshoot" the set limit (the same reason tearing is caused in the upper FPS range with G-SYNC + V-SYNC "Off"), which is why an "at" max refresh rate FPS limit (see part 5 "G-SYNC Ceiling vs. FPS Limit" for input lag test numbers) typically isn't sufficient in keeping the framerate within the G-SYNC range at all times.

Setting a minimum -3 FPS limit below the max refresh rate is recommended to keep the framerate within the G-SYNC range at all times, preventing double buffer V-SYNC behavior (and adjoining input lag) with G-SYNC + V-SYNC "On," or screen-wide tearing (and complete disengagement of G-SYNC) with G-SYNC + V-SYNC "Off" whenever the framerate reaches or exceeds the max refresh rate. However, unlike the V-SYNC option, framerate limiters will not prevent tearing.
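To see why a limit right at the max refresh rate is not enough, it helps to compare how much time a capped frame has to spare against the panel's fastest refresh. A minimal sketch; the function names and the jittery frametimes are hypothetical, chosen only to illustrate a limiter overshooting:

```python
def fps_cap(max_refresh_hz: float, margin_fps: float = 3.0) -> float:
    """FPS limit set a few frames below the max refresh rate (the -3 FPS guidance)."""
    return max_refresh_hz - margin_fps


def slack_ms(max_refresh_hz: float, cap_fps: float) -> float:
    """How much longer a perfectly paced capped frame is than the fastest refresh."""
    return 1000.0 / cap_fps - 1000.0 / max_refresh_hz


def frames_over_ceiling(frametimes_ms: list[float], max_refresh_hz: float) -> int:
    """Count frames that arrive faster than the panel can refresh (limiter overshoot)."""
    ceiling_ms = 1000.0 / max_refresh_hz
    return sum(1 for ft in frametimes_ms if ft < ceiling_ms)


if __name__ == "__main__":
    hz = 144
    for cap in (hz, fps_cap(hz, 2), fps_cap(hz)):
        print(f"cap {cap:g} FPS -> {slack_ms(hz, cap):.3f} ms of headroom per frame")

    # Hypothetical limiter targeting 141 FPS (~7.09 ms) whose pacing jitters a little.
    jittery = [7.09, 6.90, 7.30, 6.95, 7.10]
    print(frames_over_ceiling(jittery, hz), "frame(s) exceeded the 144 Hz ceiling")
```

At 141 FPS each frame has only about 0.15 ms of headroom over a 144 Hz refresh, so even small pacing jitter from the limiter can push an occasional frame past the ceiling, which is the overshoot the FAQ describes.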


No, it isn't. This is further misinformation we've been trying to educate people about over and over again. They are not the same thing; they don't even function in the same space or at the same time. One or the other is always in use, never both. Ideally, you should never have vsync kick in.

You leave it on; it is explained on the same site, in the link I gave above.

The same link you just posted....


This is a good post. I was only commenting on how V-Sync interacts with G-Sync.

You're correct that it also needs to be combined with a frame rate limiter if you want to minimize latency. That's not required for proper G-Sync operation, however. It shouldn't affect smoothness.

I would argue that SpecialK is now the better tool for this, rather than RTSS.

It should also be noted that a limit needs to be set low enough to keep GPU utilization at or below ~95% if you want the absolute lowest latency possible, as latency spikes much higher when the GPU is maxed out, even if you're far below the refresh rate limit.

If you really want to get into the weeds about latency, I recommend these two videos:

Input Lag and Frame Rate Limiters

Can a modern adaptive-sync display beat a CRT to displaying a frame? In certain situations, yes! Here I measure input lag in Unreal Engine, CS:GO, and ezQuak...

Input Lag Revisited: V-Sync Off and NVIDIA Reflex

V-Sync Off Latency measurements for Quake Champions, Destiny 2, Warzone, and Apex Legends, with NVIDIA Reflex testing as well!

G-Sync should always be combined with V-Sync.

There are two reasons for the "disagreement".

- This is all AMD's fault.

- People whose thoughts on the matter are nothing more than "V-Sync BAD" without listening to other people, or considering that things may have changed with the introduction of VRR.

FreeSync originally lacked the low frame rate compensation feature (LFC), and the original spec did not place requirements on supported VRR ranges.

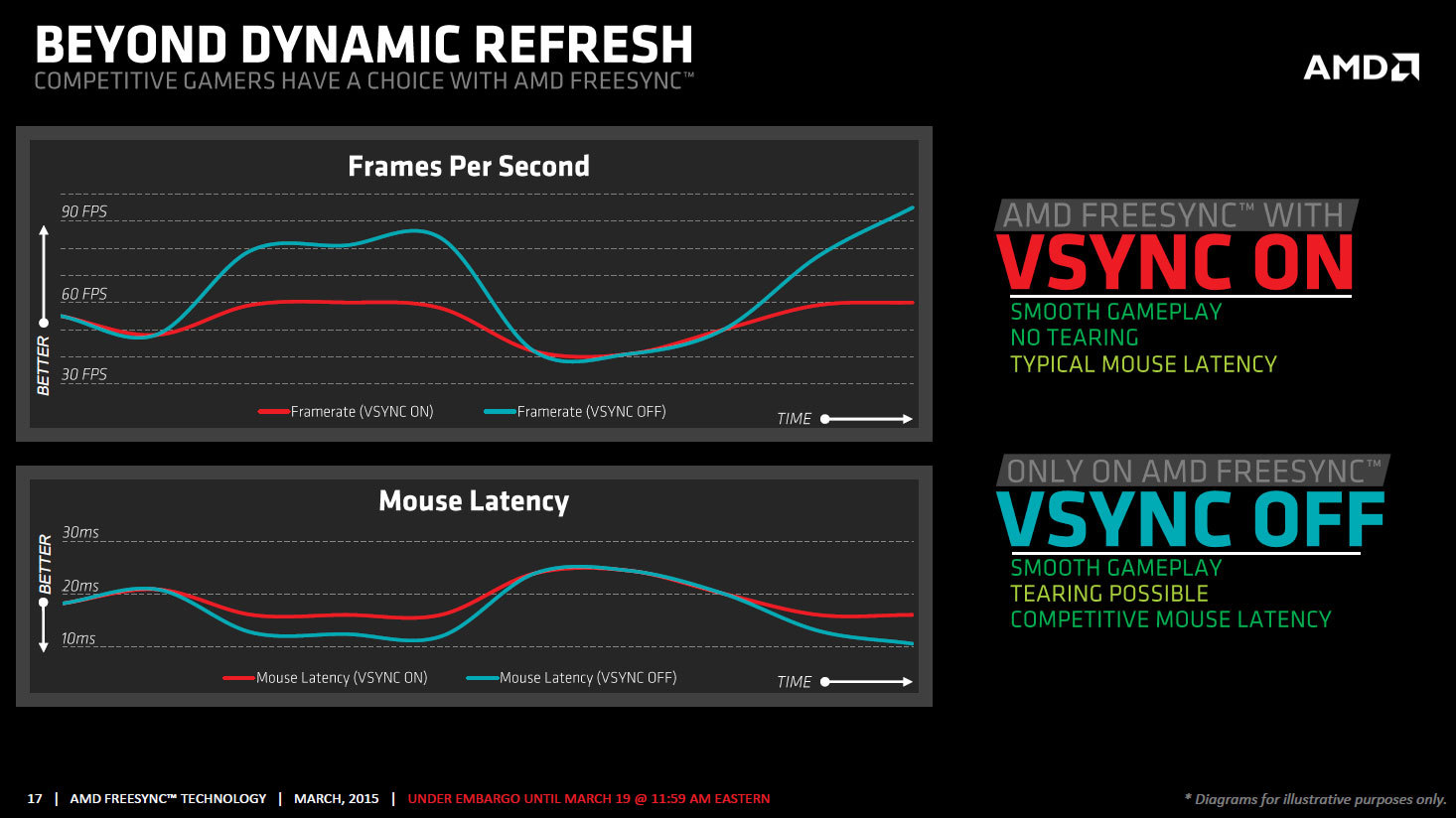

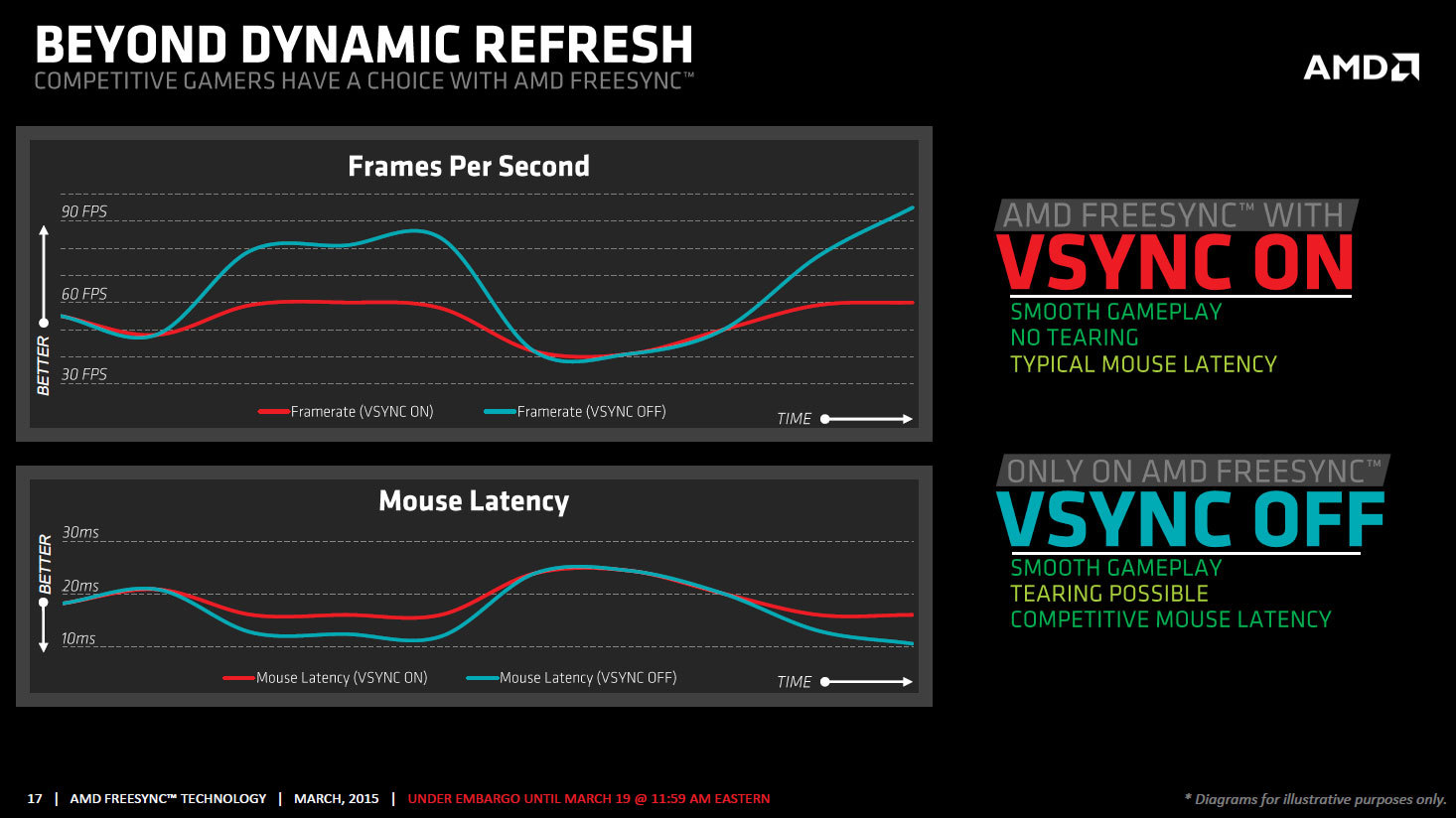

Which resulted in this marketing slide being produced:

Rather than mandating high refresh rates, they allowed 60Hz FreeSync monitors, with limited ranges like 48–60Hz (all the early G-Sync monitors were ≥120Hz).

Rather than supporting LFC—which all G-Sync monitors were required to do from day one—they sold the ability to disable V-Sync when using FreeSync as a feature.

When the frame rate dropped below the minimum refresh rate of a FreeSync monitor, it used to be locked to the minimum and would lag horribly if V-Sync was enabled - since it might've been running at 40Hz rather than even 60Hz.

So they put out this graph which shows that, on a 60Hz display, latency is reduced when you disable V-Sync.

Never mind that G-Sync displays were all high refresh rate monitors at the time, or that frame rate limiting could eliminate that latency without tearing, by preventing the game from hitting the refresh limit and switching over to V-Sync behavior.

Never mind that V-Sync off operation is exactly the same on a VRR display as it is on a fixed refresh display (tearing).

This one graph made it look like it was "better" to disable V-Sync - so the community eventually pressured NVIDIA to add the option to their drivers for the sake of parity.

Originally, whenever you enabled G-Sync, V-Sync was always locked on, and any setting in a game or the NVIDIA control panel was completely ignored.

V-Sync on is what G-Sync was designed for, and how it was always intended to be used.

So now we are stuck with this "debate" where people that don't know what they're talking about continue to insist that V-Sync is harmful or unnecessary on a VRR display.

It doesn't matter how many times you demonstrate that VRR can tear inside the active range when V-Sync is disabled, or if you show latency testing which proves that G-Sync combined with V-Sync and a frame rate limiter has equivalent latency. Sometimes it can be even lower.

There will always be people insisting that no, V-Sync BAD.
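The LFC point above can be made concrete. When the framerate falls below a panel's minimum refresh rate, LFC displays each frame more than once so the refresh rate stays inside the VRR window. The sketch below assumes a simple rule (use the smallest repeat count that reaches the minimum); the actual logic in drivers or the G-SYNC module is not described in this thread and may differ, and the function name is hypothetical.

```python
import math
from typing import Optional, Tuple


def lfc_refresh(fps: float, vrr_min_hz: float, vrr_max_hz: float) -> Optional[Tuple[int, float]]:
    """Illustrative low framerate compensation.

    Returns (repeat_count, panel_refresh_hz), or None when no whole-number
    repeat of each frame fits inside the VRR window.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= vrr_min_hz:
        # In range (or above it): no repetition, refresh is capped at the max.
        return 1, min(fps, vrr_max_hz)
    # Smallest repeat count that lifts the refresh rate to the window's minimum.
    repeats = math.ceil(vrr_min_hz / fps)
    if fps * repeats > vrr_max_hz:
        return None  # window too narrow for this framerate
    return repeats, fps * repeats


if __name__ == "__main__":
    print(lfc_refresh(25, 30, 144))  # wide window -> (2, 50)
    print(lfc_refresh(40, 48, 60))   # narrow 48-60 Hz window -> None
```

With a 48–60Hz window, a framerate just under 48 FPS has nowhere to go: doubling it overshoots 60Hz, which matches the case described above where early FreeSync monitors were locked to the minimum.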

As for triple buffering: generally, no.

No, you always need V-Sync.

ALWAYS.

Here is an example of my 100Hz G-Sync monitor tearing (lower quarter of the screen) because V-Sync was disabled, despite an 80 FPS frame rate limit set in RTSS; the yellow counter shows that it's correctly synced to 80Hz.

Unless you are limiting the frame rate to something like 30% below the maximum refresh rate, you always need V-Sync to prevent tearing.

Frame rate limits alone cannot replace the need for V-Sync.

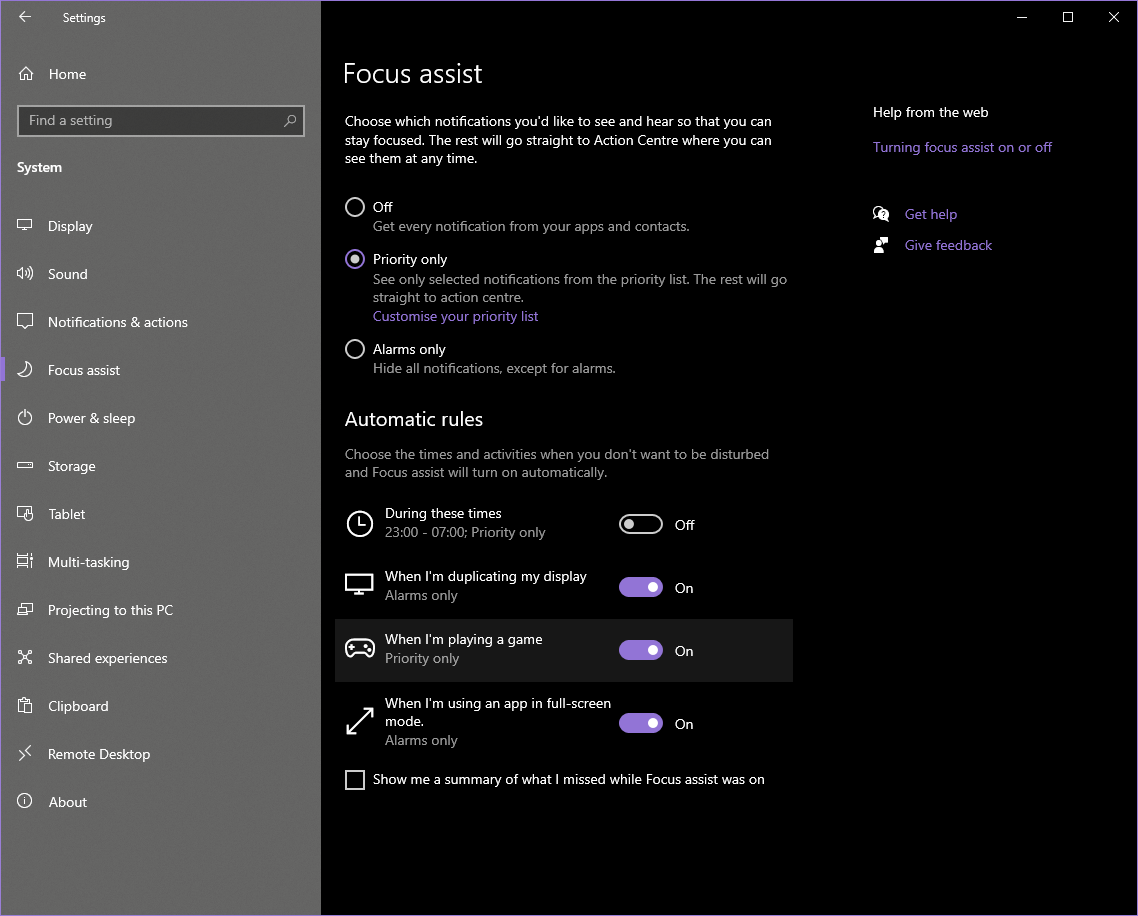

Windows literally already does this, though, with Game Mode and Focus Assist.

It can't block programs which use non-standard alerts rather than going through the Windows notification system, though.

Some messenger apps may have the option to "use Windows notifications" rather than their own custom pop-ups. Telegram has this, for example.

A lot of these "problems" are really endemic to the Windows development ethos.

With macOS, Apple is constantly pushing developers to keep their applications up to date, using the latest OS features and APIs.

On Windows, developers love to do things like build their own notifications rather than integrate with the system.

There are still new applications being developed with no DPI scaling features whatsoever, because that developer doesn't have a high-resolution monitor yet and doesn't consider it worth their time.

This gives the impression that PCs/Windows are somehow at fault, when it's often the developers.

But people also aren't willing to give up Windows' decades of compatibility the way Apple does with its platform.

Apple will throw everything out every few years and doesn't think twice about it - so if you want to develop for that platform, you have to stay current.

I'm not really sure that there's a good solution to this problem.

My hope is that we will eventually move to a system where each individual Win32 application is containerized, and that starts to solve a lot of these long-standing problems.

People rightfully pushed back against UWP, but it got a lot of things right.


Further in that very FAQ you linked to:

Alright, I now understand why the V-SYNC option and a framerate limiter are recommended with G-SYNC enabled, but why use both?

(ADDED: 05/02/2019)

Because, with G-SYNC enabled, each performs a role the other cannot:

- Enabling the V-SYNC option is recommended to 100% prevent tearing in both the very upper (frametime variances) and very lower (frametime spikes) G-SYNC range. However, unlike framerate limiters, enabling the V-SYNC option will not keep the framerate within the G-SYNC range at all times.

- Setting a minimum -3 FPS limit below the max refresh rate is recommended to keep the framerate within the G-SYNC range at all times, preventing double buffer V-SYNC behavior (and adjoining input lag) with G-SYNC + V-SYNC "On," or screen-wide tearing (and complete disengagement of G-SYNC) with G-SYNC + V-SYNC "Off" whenever the framerate reaches or exceeds the max refresh rate. However, unlike the V-SYNC option, framerate limiters will not prevent tearing.
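The two roles described in this FAQ answer can be summarized as a small decision function. This is a simplification: it looks only at the average framerate against the max refresh rate and ignores the lower end of the range (frametime spikes, LFC). The function name and the 200/141 FPS figures are hypothetical.

```python
def gsync_behavior(avg_fps: float, max_refresh_hz: float, vsync_on: bool) -> str:
    """Rough summary of G-SYNC behavior, following the FAQ excerpts quoted here."""
    if avg_fps >= max_refresh_hz:
        # At the ceiling G-SYNC has nothing left to adjust.
        return "V-SYNC behavior (added input lag)" if vsync_on else "screen-wide tearing"
    if vsync_on:
        # Frames that arrive early are held until the previous scanout finishes.
        return "G-SYNC, early frames held briefly (no tearing)"
    # Inside the range, frametime variances can still cause tearing.
    return "G-SYNC, early frames can still tear"


if __name__ == "__main__":
    max_hz, uncapped_fps, cap = 144, 200, 141  # hypothetical numbers
    for vsync_on in (True, False):
        for limited in (False, True):
            fps = min(uncapped_fps, cap) if limited else uncapped_fps
            label = f"V-SYNC {'on ' if vsync_on else 'off'}, limiter {'on ' if limited else 'off'}"
            print(f"{label}: {gsync_behavior(fps, max_hz, vsync_on)}")
```

Only the combination of V-SYNC on and a limiter avoids both the ceiling behavior and tearing inside the range.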

You can limit the framerate right in NVCP now.


If you are going to quote that guide at me, you should probably read the whole thing or discuss it with the author.

None of you are actually reading this properly.

1) V-sync introduces noticeable input delay: 2-6 frames on average. You never want this.

2) G-sync tearing only occurs in the very upper range (exceeding your monitor's refresh rate, which you set the -3 FPS limit to prevent altogether anyway) and the very lower range (where your machine is struggling to maintain FPS anywhere close to your monitor's refresh rate, so why are you even bothering with this) of FPS.

3) With a -3 FPS limit, V-Sync never engages in the upper range anyway, regardless of whether it's on or off. That's why the limiter is there: to prevent V-Sync from ever happening.

4) Nobody actually tries to intentionally hit a framerate far down in the very lower ranges anyway (seriously, why are we debating preventing tearing in the bottom range of framerates when we're talking gsync monitors in the 120-240Hz range????). You all built PCs to get better performance, not less.

Nuance is hard. Feel free to leave it On if you really feel like it, but you're all failing to understand that you're not even using it, and only adding additional input delay when it kicks in at the expense of... nothing.


I just read through the entire Eyepatch Wolf Twitter thread, and while I understand that the buyer was a new-ish user, it still seems kind of overdramatic.


Same FAQ you posted...


Again, if you'd bother to read anything at all, we set the -3 FPS limit to prevent V-Sync entirely, so that it doesn't kick in and introduce input lag. That's the part you're not reading.

Yep. I typically use vsync in combination with an RTSS frame limit (140 FPS on a 144Hz screen) with gsync enabled.


There are some games that have tearing even with a framerate cap set below your monitor's refresh rate. World of Warcraft, for example. I had a cap of 140 FPS set on my 144Hz monitor, and I'd still get tearing without vsync enabled.

It can tear within the active range of G-Sync... please take your own advice and read.

This was explained above by


Of course it's overdramatic; nobody is going to read a 70-plus-tweet thread of someone clinically rhyming off their PC issues in sequence. He's telling a story and trying to make it funny and entertaining. Was this your first time on Twitter?


Nuance is hard.

- With VRR, V-Sync only adds latency if the frame rate hits the refresh rate limit. V-Sync is not adding latency at all times.

- I already demonstrated tearing with a limit set 20 FPS below a monitor's maximum refresh rate when V-Sync is disabled. In my tests, a frame rate limiter has to be set at least 30% below the maximum refresh rate to completely eliminate tearing without V-Sync.

- The frame rate limiter's job is to eliminate latency, not tearing. The rule to set a limit at least 3 FPS below the maximum refresh rate applies when V-Sync is ON. This allows V-Sync to eliminate tearing without adding latency.
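To see why a cap a few FPS below the maximum refresh rate keeps V-Sync from adding latency, it helps to convert both numbers into frametimes. The following is a minimal sketch that assumes a 144Hz panel and a 141 FPS cap purely as example values. As long as the capped frametime is longer than the panel's shortest refresh interval, frames arrive no faster than the display can show them, so on average V-Sync has nothing to hold back. The small margin also absorbs some of the frame-to-frame frametime variance that would otherwise push individual frames over the limit.

```python
def frametime_ms(rate_hz: float) -> float:
    """Milliseconds per frame (or per refresh) at a given rate."""
    return 1000.0 / rate_hz


def cap_margin_ms(max_refresh_hz: float, fps_cap: float) -> float:
    """How much longer each capped frame takes than the panel's fastest refresh.

    A positive margin means frames arrive no faster than the display can
    show them; a negative one means V-Sync would have to queue frames.
    """
    return frametime_ms(fps_cap) - frametime_ms(max_refresh_hz)


if __name__ == "__main__":
    refresh = 144.0      # example panel, assumed for illustration
    cap = refresh - 3    # the "at least 3 FPS below max refresh" rule
    print(f"refresh interval: {frametime_ms(refresh):.2f} ms")         # 6.94 ms
    print(f"capped frametime: {frametime_ms(cap):.2f} ms")             # 7.09 ms
    print(f"margin per frame: {cap_margin_ms(refresh, cap):.3f} ms")   # 0.148 ms
```

A cap above the refresh rate (say 150 FPS on the same panel) gives a negative margin, which is the case where V-Sync starts holding frames and latency goes up.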

This video has a slow motion demonstration if you don't believe the image I posted above.

You only have to watch the section from 1:35 to 3:05.

You use both a frame limiter and V-Sync on: the frame limiter to reduce lag when frame rates would exceed the G-Sync range, and V-Sync to eliminate tearing while the game is running within the G-Sync range. As long as it's running within the G-Sync range, V-Sync does not add lag.

None of you are actually reading this properly.

1) V-Sync introduces noticeable input delay: 2-6 frames on average. You never want this.

2) G-Sync tearing only occurs in the very upper FPS range (exceeding your monitor's refresh rate, which you set the -3 FPS limit to prevent altogether anyway) and the very lower FPS range (your machine is struggling to maintain FPS anywhere close to your monitor's refresh rate at this point, so why are you even bothering with this).

3) With a -3 FPS limit, V-Sync never engages in the upper range anyway, regardless of whether it's on or off. That's why the limiter is there: to prevent V-Sync from ever happening.

4) Nobody actually tries to hit a framerate far down in the very lower ranges anyway (seriously, why are we debating preventing tearing in the bottom range of framerates when we're talking about G-Sync monitors in the 120-240Hz range????). You all built PCs to get better performance, not worse.

Nuance is hard. Feel free to leave it on if you really feel like it, but you're all failing to understand that you're not even using it, and it only adds input delay when it kicks in, at the expense of... nothing.

Since you're all just responding in bad faith I'm not going to continue this anymore. None of you seem to understand that V-Sync is not engaged in the optimal solutions but what do I know, I'm just parroting the same article everyone's parroting, just not ignoring what's written in it.


It's pretty clear you're the one who doesn't understand but you do you.

This has been explained to you really patiently despite your weird rudeness. Not sure why you needed to be so rude, but you really should read the breakdown above.

You are wrong about this.

I followed the article, turned on V-Sync in the NVIDIA Control Panel, and set my frame limit to 140 FPS, and my experience has been 1000x better than it was before. I'm confused why you are saying V-Sync is bad when I followed the article's directions?


Cool video. What is "null" and where do I get it? It sounds like a software for setting framerates in games...maybe?

I googled "Null" and get a bunch of non-gaming links.

Edit** Just realized I may be able to start that video from the beginning and find out, haha.

In Assassin's Creed Origins and Odyssey, does the flickering occur in specific scenes, such as menus? Did you change the in-game default brightness setting?

Yeah, that was bad even by Twitter standards. The bar is already pretty low there.

Of course it's overdramatic; nobody is going to read a 70-plus-tweet thread of someone clinically rhyming off their PC issues in sequence. He's telling a story and trying to make it funny and entertaining.

No need to be a condescending jackass.

They just aren't understanding what V-Sync is doing, or not doing, with these settings. AKA, V-Sync is virtually disabled by these settings in order to take full advantage of G-Sync and prevent the input lag it introduces. It's only there to catch edge cases that either never happen on average or only happen in worst-case scenarios or poorly optimized games, and even then it's important to note that

I followed the article, turned on V-Sync in the NVIDIA Control Panel, and set my frame limit to 140 FPS, and my experience has been 1000x better than it was before. I'm confused why you are saying V-Sync is bad when I followed the article's directions?

And not arguing pedantic notions like "look, I can cause tearing when I cap the FPS below the bottom of the G-Sync range if I don't turn V-Sync on."

There are rare occasions, however, where V-SYNC will only function with the in-game option enabled, so if tearing or other anomalous behavior is observed with NVCP V-SYNC (or vice versa), each solution should be tried until said behavior is resolved.

So now you are backtracking to say it is doing something?

- It does not add input lag when set-up this way.

- it catches the "edge cases".

- it should be set on.

Again, this is all explained to you above.


User Banned (2 Week): Antagonizing Fellow Member; Prior Bans for the Same

Yeah that was bad even by twitter standards. The bar is already pretty low there.

Thanks for sharing. You sound like a fun person. So weird that you read an entire 70-tweet thread that was so terrible and bad. I mean, that's a LOT of reading to do for something you absolutely did not enjoy. I bet you ran right back here to tell me about it, too.

At this point it feels like trolling... You tell people they are not reading properly, then post this...

And not arguing pedantic notions like "look, I can cause tearing when I cap the FPS below the bottom of the G-Sync range if I don't turn V-Sync on."

It is NVIDIA's Ultra Low Latency setting: the "Ultra" option of "Low Latency Mode" in the 3D settings of the NVIDIA Control Panel.

NULL is basically an automatic limiter which adjusts itself based on the refresh rate - as a "set and forget" thing, rather than having to set a limit per-game, or using an external limiter like RTSS or SpecialK.

I find that it has limited effectiveness, and it can cause issues for some games - so it's not quite the "set and forget" solution it aims to be.
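The "set and forget" versus per-game trade-off described above boils down to where the cap comes from. The following is a minimal sketch of that decision, not NVIDIA's implementation. The automatic cap here simply reuses this thread's "3 FPS below max refresh" rule as a stand-in, because the post does not say what formula NULL actually uses. The game names and the `per_game` mapping are hypothetical, standing in for limits you might set by hand in RTSS or SpecialK.

```python
from typing import Mapping, Optional


def auto_cap(max_refresh_hz: int, margin_fps: int = 3) -> int:
    """Global cap derived only from the display's refresh rate.

    Stand-in rule from this thread; NOT NVIDIA's actual NULL formula.
    """
    return max_refresh_hz - margin_fps


def resolve_cap(max_refresh_hz: int, per_game: Mapping[str, int], game: str) -> int:
    """Prefer a hand-set per-game limit; otherwise fall back to the automatic one."""
    override: Optional[int] = per_game.get(game)
    return override if override is not None else auto_cap(max_refresh_hz)


if __name__ == "__main__":
    limits = {"game_a": 120}                   # hypothetical per-game limit
    print(resolve_cap(144, limits, "game_a"))  # 120 (per-game override)
    print(resolve_cap(144, limits, "game_b"))  # 141 (automatic fallback)
```

The automatic path needs no per-game effort, which is the appeal; the override exists for games where the automatic behavior causes issues, as described above.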

It's all about how much effort you want to put into this.

If you enable G-Sync and V-Sync, you may not have the lowest latency at all times, but you should have smoother gameplay with no possibility of tearing.

Setting a frame rate limit - either per-game or via a feature like NULL - is mostly for chasing after the lowest possible latency in all conditions.

It's nice when you have it set up that way, but not an essential thing you are required to configure for every game, if you don't want to.
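For anyone wondering what a frame rate limiter actually does, here is a minimal sketch of the general idea: wait until the next frame "slot" before starting the next frame. It is illustrative only and is not how RTSS, SpecialK or NULL are implemented. It only calls `sleep`, while more precise limiters often spin-wait for the final fraction of a millisecond, because OS sleep timing is coarse. The clock and sleep functions can be injected so the logic can be checked without real waiting.

```python
import time
from typing import Callable, Optional


class FrameLimiter:
    """Deadline-based frame limiter (illustrative sketch, sleep-only)."""

    def __init__(self, fps_cap: float,
                 clock: Callable[[], float] = time.perf_counter,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.interval = 1.0 / fps_cap          # target seconds per frame
        self.clock = clock
        self.sleep = sleep
        self.next_deadline: Optional[float] = None

    def wait(self) -> float:
        """Block until the next frame slot; return the seconds spent sleeping."""
        now = self.clock()
        if self.next_deadline is None:
            self.next_deadline = now           # first frame starts immediately
        delay = self.next_deadline - now
        if delay > 0:
            self.sleep(delay)
        else:
            # Running behind: restart the schedule instead of rushing out
            # a burst of frames to "catch up".
            self.next_deadline = now
        self.next_deadline += self.interval
        return max(delay, 0.0)


if __name__ == "__main__":
    limiter = FrameLimiter(fps_cap=141)        # e.g. 3 FPS under a 144Hz panel
    start = time.perf_counter()
    for _ in range(141):
        limiter.wait()
        # render_frame() would go here (hypothetical)
    print(f"141 frames took {time.perf_counter() - start:.2f} s")
```

The limiter only adds waiting when frames would otherwise come in faster than the cap, which is why it mostly matters near the top of the refresh range.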

The monitor is a 30–100Hz native display with a G-Sync module (ASUS PG348Q).

My point was that a high value well within the supported range of the display (80 FPS) and barely taxing the GPU (33%) is still capable of tearing when V-Sync is disabled.

It is V-Sync's job to eliminate tearing, not a frame rate limiter. The limiter is only there to reduce latency.

- V-Sync alone allows for high latency conditions - when frame rates are high enough to reach the maximum refresh rate.

- A limiter alone allows for tearing at medium-to-high frame rates - as much as 30% below the maximum refresh rate.

- Combined, you address both the latency and the tearing.
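The three bullets above can be written out as a rough rule-of-thumb model comparing the four configurations. This is a minimal sketch under loudly stated assumptions. The 70% tearing threshold is taken from the poster's own tests ("as much as 30% below the maximum refresh rate"), not from any specification. The model also ignores frame-to-frame frametime variance and the behavior below the bottom of the VRR range. The 144Hz panel and 200 FPS uncapped frame rate are example numbers only.

```python
from dataclasses import dataclass
from typing import Optional

# Poster's empirical observation, not a spec: without V-Sync, tearing was
# seen until the frame rate was limited at least 30% below max refresh.
NO_VSYNC_TEAR_FRACTION = 0.70


@dataclass
class Outcome:
    fps: float
    vsync_latency: bool     # V-Sync holding frames at the refresh ceiling
    tearing_possible: bool


def evaluate(max_refresh_hz: float, uncapped_fps: float,
             vsync: bool, fps_cap: Optional[float] = None) -> Outcome:
    fps = uncapped_fps if fps_cap is None else min(uncapped_fps, fps_cap)
    if vsync:
        # V-Sync never lets the frame rate exceed the refresh rate.
        fps = min(fps, max_refresh_hz)
    # V-Sync only adds latency once the frame rate hits the refresh limit.
    latency = vsync and fps >= max_refresh_hz
    # Without V-Sync, tearing can appear at medium-to-high frame rates.
    tearing = (not vsync) and fps > NO_VSYNC_TEAR_FRACTION * max_refresh_hz
    return Outcome(fps, latency, tearing)


if __name__ == "__main__":
    refresh, uncapped = 144.0, 200.0           # example numbers, assumed
    for label, vsync, cap in [
        ("neither", False, None),
        ("V-Sync only", True, None),
        ("limiter only", False, refresh - 3),
        ("both", True, refresh - 3),
    ]:
        print(label, evaluate(refresh, uncapped, vsync, cap))
    # The 80 FPS case from the post, on a 100Hz panel without V-Sync:
    print(evaluate(100.0, 80.0, vsync=False).tearing_possible)  # True
```

Only the "both" row avoids both problems in this model, which matches the poster's conclusion; the 80 FPS example lands above the 70% line, which is consistent with the tearing they observed.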

Been there and never looked back. I keep my PC for utility and game on consoles exclusively. Sometimes I read a VN on the laptop, though.

In Assassin's Creed Origins and Odyssey, does the flickering occur in specific scenes, such as menus? Did you change the in-game default brightness setting?

No, it occurred randomly. I did not change the brightness.

Yeah, this is part of the reason I ditched PC gaming for the most part. Even ignoring all of the frequent issues and frustrating incompatibilities, it also just made me want to tweak things constantly to try and get the perfect performance out of games. And spend money on upgrades all the time. It wasn't for me.

Yeah, I mean, issues certainly do occur, but if all of these teenagers manage to have a PC to play Fortnite without problems, I don't see how people can act as if this is a normal, widespread problem with PCs.

Literally every thread like this has the console-era army come in and post about how they had a problem with PC gaming X years ago and gave it up and have never been happier. It's basically a ritual at this point. Anything to convince themselves that these issues are widespread (despite PC gaming exploding in popularity, somehow in spite of all these issues) and that they aren't missing out on anything.

OP, my guess is bad cables.

Sounds like PC gaming in a nutshell.

Updated an .ini file and my computer literally exploded. Thanks, PC gaming!