Pretty sure RDNA2 has IPC gains over RDNA1, so it should be even better.

A ~9.4 TF (at in-game clocks) RDNA1 5700 XT is already a tiny bit faster than a 2070.

A 10.28 TF RDNA2 GPU should be at least around a 2070S (or better, depending on RDNA2 optimizations).

Graphics card ranking 2024: 31 GPUs benchmarked

Graphics card ranking 2024 with Nvidia, AMD and Intel graphics cards: a benchmark overview of all the important graphics chips from Nvidia, AMD and Intel. www.pcgameshardware.de
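The TF numbers traded here come from the usual peak FP32 formula: shader count × 2 operations per clock (one fused multiply-add) × clock. A minimal sketch, assuming the publicly stated PS5 configuration of 36 CUs with 64 shaders each at up to 2.23 GHz, and backing out the clock that the ~9.4 TF figure implies for the 5700 XT's 2560 shaders (the post does not state that clock):

```python
def fp32_tflops(shaders: int, clock_ghz: float) -> float:
    """Peak FP32 throughput: each shader retires one FMA (2 FLOPs) per clock."""
    return shaders * 2 * clock_ghz / 1000.0

def implied_clock_ghz(shaders: int, tflops: float) -> float:
    """Invert fp32_tflops: the clock needed to reach a quoted TF number."""
    return tflops * 1000.0 / (shaders * 2)

ps5 = fp32_tflops(36 * 64, 2.23)              # assumed: 36 CUs x 64 shaders at 2.23 GHz
rx5700xt_clock = implied_clock_ghz(2560, 9.4)  # assumed: 2560 shaders

print(f"PS5 peak: {ps5:.2f} TF")
print(f"5700 XT clock implied by 9.4 TF: {rx5700xt_clock:.2f} GHz")
print(f"Raw TF ratio PS5 / 5700 XT: {ps5 / 9.4:.2f}x")
```

Peak TF ignores per-clock efficiency, so any RDNA2 IPC gains would come on top of this raw ratio rather than being captured by it.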



Rumored specs for NVIDIA's upcoming "Ampere" RTX 3000 series graphics cards (RTX 3060, RTX 3070, RTX 3080 and RTX 3090)

- Thread starter Deleted member 3812

- Rumor

- Status

- Not open for further replies.

Recent threadmarks:

- RTX 3000 series power consumption, PCB shape rumor
- Picture showing two RTX 3080s has leaked
- UPDATE: NVIDIA event announced for September 1st
- RTX 3090 Specs Rumor: "20% increase in cores over the GeForce RTX 2080 Ti", up to 24 GB memory with faster GDDR6X pin speeds, 384-bit bus, nearly 1 TB/s bandwidth
- RTX 30xx series power cable: "It is recommended to use a power supply rated 850W or higher with this cable"
- 3090 Phoenix GS product images leak
- REMINDER: Tomorrow, September 1st is the NVIDIA event that is expected to announce the RTX 3000 series graphics cards

Console prices are more or less irrelevant for PC HW pricing, always have been.
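The "nearly 1 TB/s" figure in the RTX 3090 threadmark follows from bus width and per-pin data rate. A sketch, assuming GDDR6X pin rates of 19 to 21 Gbps (the threadmark only says "faster" pin speeds), with the RTX 2080 Ti's 352-bit, 14 Gbps GDDR6 setup for comparison:

```python
def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer across the bus x per-pin rate."""
    return bus_width_bits / 8 * pin_rate_gbps

print(f"RTX 2080 Ti (352-bit, 14 Gbps): {peak_bandwidth_gbs(352, 14.0):.0f} GB/s")
for rate in (19.0, 19.5, 21.0):  # assumed GDDR6X pin rates, not from the rumor
    print(f"384-bit @ {rate} Gbps: {peak_bandwidth_gbs(384, rate):.0f} GB/s")
```

On a 384-bit bus, only pin rates above roughly 20.8 Gbps would actually cross 1000 GB/s, which fits the rumor's careful "nearly".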

Would this be similar to saying something like "a GTX 770 would be PS4-tier" in the past? How long did a 770 last until it felt like it was being left behind? I know my 780 eventually started giving me trouble, but I think it was because I was running out of VRAM in later games.

A GTX 770 is still better than a PS4. Maybe the 2GB version has some limitations due to VRAM but the 4GB version should still be performing just fine.

It's very reasonable to expect RT to see bigger performance gains with Ampere than regular rasterization.

What I really want to know is if the ray tracing performance is actually almost quadruple that of equivalent Turing cards.

We should also expect Ampere to support new RT features which aren't exposed in DXR 1.1 (DXR 2.0 or something).

If it's anything like the Navi launch a year ago, it will be AMD who looks funny again when they have to cut their prices a day before the announcement because NV spoiled their play again. Jebaited and all, right?

If the 2080 Ti crusher is $400 (or $350 if I'm feeling lucky), that 3070 is going to look real funny at $550+.

Around $500.

If the 3070 is an RTX 2080 Ti with a slight increase in performance, how do you guys expect it to be priced?

Most current RT implementations are done with NV's direct help and via their devrel program, which obviously uses these things to promote NV's h/w.

What's confusing is how DXR is labeled in games, but I guess when nothing else supports it, devs just write Nvidia in the menus:

Modern Warfare says you need "specific Nvidia hardware" and that it's accelerated by Nvidia RTX... so is AMD's RT locked out?

If the code is 100% DXR-compatible, it will work on any DXR-compatible GPU.

It's possible, of course, that some patching will be needed to make it work well on a GPU that wasn't even available when the code was written.
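The difference between gating ray tracing on DXR capability and gating it on GPU vendor fits in a few lines. This is a Python mirror, not a real Direct3D binding: the enum copies the numeric values of `D3D12_RAYTRACING_TIER` from `d3d12.h`, which a real engine would read from the `RaytracingTier` field filled in by `CheckFeatureSupport` with `D3D12_FEATURE_D3D12_OPTIONS5`. The two function names are hypothetical.

```python
from enum import IntEnum

class RaytracingTier(IntEnum):
    """Mirrors the numeric values of D3D12_RAYTRACING_TIER in d3d12.h."""
    NOT_SUPPORTED = 0
    TIER_1_0 = 10  # DXR 1.0
    TIER_1_1 = 11  # DXR 1.1 (adds inline ray tracing, among other things)

def rt_allowed_by_capability(reported_tier: int,
                             required: RaytracingTier = RaytracingTier.TIER_1_0) -> bool:
    """Vendor-agnostic: any GPU whose driver reports a high enough tier qualifies."""
    return reported_tier >= required

def rt_allowed_by_vendor(vendor: str) -> bool:
    """Vendor-locked: ignores capability entirely, so a DXR-capable AMD GPU is refused."""
    return vendor == "NVIDIA"

# Hypothetical AMD card whose driver reports DXR 1.1:
print(rt_allowed_by_capability(RaytracingTier.TIER_1_1))  # True
print(rt_allowed_by_vendor("AMD"))                        # False
```

Passing the capability check only means the DXR path is allowed to run; it says nothing about how well it performs on a given GPU.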

So could you tell me what would be a better investment in the GPU space among all available options? And why?

If this does indeed happen, then it's going to retroactively turn the RTX 2000 lineup into one of the worst PC hardware investments of all time.

Same here. I know it's overpriced at $500, but its performance is spectacular. I'm having fun playing games at great fidelity. I plan to sell it for $300-$350 and upgrade to a 3080 once that's out. The experience isn't going anywhere. If one is OK with taking the monetary hit, it's fine.

I mean, I can't talk because I bought a 2070 Super in December lol. But I also did it fully admitting I'm impatient and didn't want to build the rest of my computer (a 3800X build) without a new GPU.

I'd usually go for a Ti card so for what it's worth, I resisted grabbing a 2080 Super or 2080 Ti. I'll probably end up selling it to a friend who's been wanting to upgrade for a while at a good price for them (~300-350 CAD) and get an upper level 3000 card before the year ends.

When the 770 launched it was ~70% faster than the GPU that went into the PS4, while the 2070S is on par with what is going into the PS5. Kepler aged horribly in recent games, though, so that's a big reason why it fell behind. I'm pretty sure something like a 4GB 7870 would still do fine in PS4 games right now.

Would this be similar to saying something like "a GTX 770 would be PS4-tier" in the past? How long did a 770 last until it felt like it was being left behind? I know my 780 eventually started giving me trouble, but I think it was because I was running out of VRAM in later games.

How Turing is going to age is hard to predict. I don't think it's going to age as badly as Kepler, since it seems to have all the same features that will go into the RDNA2-based consoles.

Irrelevant in the sense that they do not have a direct effect on one another, but relevant in the sense that they're both competing for the same pool of consumer dollars. If console price/performance makes GPU price/performance look really bad then I think that's a real problem for PC. But that hasn't been a serious problem since the PS1 days, arguably.

Console prices are more or less irrelevant for PC HW pricing, always have been.

In other words, hypothetically, I don't see how you can sell an $800 GPU if you can get a $400 XSX with the same performance.

By that logic, PC gaming should have died a long, long time ago, as consoles out-priced PC HW a long, long time ago.

A PS4 Pro is around the level of a GTX 770 4GB.

A GTX 770 is still better than a PS4. Maybe the 2GB version has some limitations due to VRAM but the 4GB version should still be performing just fine.

It's always like that with PC gaming. You pay more for hardware, but it comes with advantages. I'm certainly not going back to console-only gaming after a cycle with a gaming PC. I invested $2,200 building my first gaming PC from scratch and had something that crushed both the PS4 and Xbox One, and I expect to do the same again. And truthfully, with the upcoming GPU series, a hypothetical $800 card will definitely be better than the GPU in the Series X.

We still have Ars Technica!

This is the same thing that's been discussed for longer than that Twitter post; TweakTown kinda sucks though. Honestly, a lot of these tech sites seem to have gone down the toilet. WTF happened to Tom's Hardware?!

The price logic in this thread is totally detached from reality.

By that logic, PC gaming should have died a long, long time ago, as consoles out-priced PC HW a long, long time ago.

People think that if a GPU two years later beats the 2080 Ti, it needs to be $1000+.

It's not gonna happen. The 3080 will be $699, or $799 max if Nvidia is crazy. But I believe in $699, like the Super.

The 2080 Super has just 10-15% less performance than the 2080 Ti and costs $699-799.

If the rumours are true and Big Navi lands somewhere between the 3080 and the 3090/3080 Ti for $999, then another expensive GPU generation is ahead of us.

People would still celebrate a "thousand-dollar" Big Navi because you'd get +50-60% performance over a 2080 Ti for ~20% less cash.

But this would allow the 3080 and a slightly smaller Big Navi to be priced around €700-800, while the even faster 3090/3080 Ti could go above a thousand dollars again.

We'll see, as all rumours should be taken with a grain of salt, but if both AMD and NVIDIA start playing cat and mouse within 10-15% margins, as they did this GPU generation, then another expensive high-end cycle is ahead of us.

The most interesting part for me is Ray Tracing performance and how it is going to compare. There will be many entertaining benchmarks and tech talks to follow.
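The "+50-60% performance over a 2080 Ti for ~20% less cash" argument is a performance-per-dollar comparison. A sketch that normalizes the 2080 Ti to 1.0 performance and 1.0 price, so only the relative figures from the post are used and no actual prices are assumed:

```python
def perf_per_dollar_gain(rel_perf: float, rel_price: float) -> float:
    """Perf-per-dollar of a new card relative to a baseline normalized to 1.0 / 1.0."""
    return rel_perf / rel_price

for uplift in (1.5, 1.6):
    gain = perf_per_dollar_gain(uplift, 0.8)  # ~20% cheaper than the baseline
    print(f"+{uplift - 1:.0%} perf at 80% of the price -> {gain:.2f}x perf per dollar")
```

That works out to roughly 1.9-2x the performance per dollar, which is why a card could still be celebrated even with a $999 sticker price.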

That's maybe what I'm most excited about this GPU cycle: how AMD's first-gen RT cards stack up against NV's second gen.

At this point I'm convinced people making those sorts of posts have only been around for Turing and haven't paid attention to previous NVIDIA gens.

The price logic in this thread is totally detached from reality.

What?

So could you tell me what would be a better investment in the GPU space among all available options? And why?

Judging by AMD's RT tech demo on RDNA2 recently, it probably won't be up to par but we'll see.

The 770 wasn't the card that was considered PS4-tier, the 760 was.

I got a 760 when it came out and was able to get performance somewhere just above base PS4 for a while. I was perfectly fine targeting 1080p/30 in most games, 1080p/60 for games that ran at 60 on consoles. The first game on which I remember the 760 being at a distinct disadvantage compared to consoles was Doom 2016, and that was pretty much just because the console versions had dynamic resolution and the PC version didn't.

That was where I upgraded but to be honest I don't know for sure that 760 can't keep up with base consoles today. Maybe the 2GB can't but I wouldn't be surprised if there were games today where the 4GB could. I imagine the 770 can absolutely still beat the base PS4. And that's considering how badly Kepler aged.

To this day we still have people posting about buying new RTX 2080 Tis (for $1200) and other 2000 cards over in the PC building thread, which feels like watching someone walk barefoot across a room covered in glass. I gave up on trying to correct people on every page; there is no hope.

Others still say "ya duh arr tee ecks 2070 super is still a good card to buy at $500". Well, no it's not. Not at all. For the love of god try to get by on your integrated graphics or an old card for 3 months or something.

It has been a strong reminder of the "WELL I WANT IT NOWWW" generation. Like, OK great, your shit is now basically two years old already, straight outta the box. All of these leaks basically point to the current RTX lineup losing ~40% of its value as soon as the 3000s are available. The 2080 Ti's performance is about to become (probably) a ~$500 card (the RTX 3070). And people are still buying them with their stimulus checks...

Would the value of the 2080Ti really drop that much? I also find it likely that the 3070 will be similar in raw GPU capabilities, but what about the RAM? I heard rumours of the 3080 having 10GB, so is it possible the 3070 will only have 8, resulting in the 2080Ti still being better?

Have Nvidia and AMD ever confirmed that the new cards will be HDMI 2.1 compatible?

Don't see why they won't be. But no, there's been no express confirmation.

I didn't buy any of the RTX series because of that. I'll wait another card gen rather than buy one without HDMI 2.1.

Judging by AMD's RT tech demo on RDNA2 recently, it probably won't be up to par but we'll see.

There's nothing that can be gleaned from that.

No, the 2080 Ti will still sell for more than $500 used. Older/clearance hardware will hold higher-than-deserved aftermarket prices simply because of demand for those models. But the point is that the RTX 3070, presumed to be a $500 card, is poised to come within about 5% of its performance.

Would the value of the 2080Ti really drop that much? I also find it likely that the 3070 will be similar in raw GPU capabilities, but what about the RAM? I heard rumours of the 3080 having 10GB, so is it possible the 3070 will only have 8, resulting in the 2080Ti still being better?

PC desktop gamers typically buy consoles too. Console gamers don't typically buy a PC.

Irrelevant in the sense that they do not have a direct effect on one another, but relevant in the sense that they're both competing for the same pool of consumer dollars. If console price/performance makes GPU price/performance look really bad then I think that's a real problem for PC. But that hasn't been a serious problem since the PS1 days, arguably.

In other words, hypothetically, I don't see how you can sell an $800 GPU if you can get a $400 XSX with the same performance.

The market is different. The GPUs will sell regardless of price because PC gamers want that new shit. It's almost impulsive. Sell the old GPU, get the new GPU. Rinse and repeat. A PC gamer likes what the PC offers, and a console doesn't let them do the other things you can do on a PC. Different markets at the enthusiast level.

I feel the same watching people in that thread still spending 700+ bucks on a 2080S etc.

The time to buy high-end Turing cards is over.

I'm pretty sure anyone can survive with a cheap-ish GPU that they can then sell in three months or so when next gen arrives

You've really got me thinking. I can totally live without my 2080 Ti for the next few months if I sold mine now. The resale value is crazy right now on eBay. I could sell my card I bought 18 months ago for $100 less than I paid for it. Very tempting.

This is absolute nonsense. Where do you guys come up with this stuff?

We know that XSX's 12.1 TF RDNA2 GPU is basically on par with an RTX 2080 (non-Super), based on the DF Gears 5 comparison they were shown at MS.

A ~9.4 TF (at in-game clocks) RDNA1 5700 XT is already a tiny bit faster than a 2070.

A 10.28 TF RDNA2 GPU should be at least around a 2070S (or better, depending on RDNA2 optimizations).

So I guess we're looking at 12.1 RDNA2 TF = 10 Turing TF?

Typical 2080s are about 11 TFLOPS at actual in-game clocks.

We know that XSX's 12.1 TF RDNA2 GPU is basically on par with an RTX 2080 (non-Super), based on the DF Gears 5 comparison they were shown at MS.

So I guess we're looking at 12.1 RDNA2 TF = 10 Turing TF?
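The "12.1 RDNA2 TF = 10 Turing TF" question turns into a simple exchange rate once you plug in the ~11 TF in-game figure for a typical 2080. A rough sketch, assuming the Gears 5 parity holds in general (one game is a thin basis) and applying the same rate to the PS5's 10.28 TF purely for illustration:

```python
XSX_TF = 12.1      # RDNA2, figure from the thread
RTX2080_TF = 11.0  # typical in-game clocks, figure from the thread
PS5_TF = 10.28     # RDNA2, figure from the thread

# If the XSX and a 2080 perform the same, one RDNA2 TF is "worth" this many Turing TF
turing_per_rdna2_tf = RTX2080_TF / XSX_TF

print(f"1 RDNA2 TF ~ {turing_per_rdna2_tf:.2f} Turing TF")
print(f"PS5 in Turing-TF terms: ~{PS5_TF * turing_per_rdna2_tf:.1f} TF")
```

This single ratio folds IPC, memory bandwidth and the game's own bottlenecks into one number, so treat it as a crude rule of thumb rather than a hardware constant.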

Exactly.

PC desktop gamers typically buy consoles too. Console gamers don't typically buy a PC.

I haven't seen that many people who constantly criss-cross between PC and consoles as their main platform. Some might make one or two switches over the course of, like, their entire gaming life, but price/performance usually isn't the deciding factor that makes someone give up PCs for consoles.

I've seen people who actually own PCs way more powerful than consoles and still only play on consoles for reasons completely unrelated to power. Conversely there are people who mostly play on PC for stuff like LoL, WoW, Counter-Strike, or some other obscure game on PCs weaker than consoles and don't need consoles because they don't care about TLOU or Mario or COD at all (or they might still be playing original PC COD4).

The "mainstream" PC users (the people who make the GTX 1060 currently the most popular GPU in Steam's survey, followed by the 1050 Ti, 2060, or RX 580) already know they're spending more money than they would on a console. They want just enough performance to play AAA games but also still want the customization they can only get on PC (and they also play a lot of PC exclusives, even if those aren't AAA games). Maybe a lot of them live in countries where consoles aren't as popular or as available, like China, South Korea, or Brazil or something.

The only thing the 2080 Ti really improved upon was the resale value of my 1080 Ti.

Would the value of the 2080Ti really drop that much? I also find it likely that the 3070 will be similar in raw GPU capabilities, but what about the RAM? I heard rumours of the 3080 having 10GB, so is it possible the 3070 will only have 8, resulting in the 2080Ti still being better?

The 770 wasn't the card that was considered PS4-tier, the 760 was.

I got a 760 when it came out and was able to get performance somewhere just above base PS4 for a while. I was perfectly fine targeting 1080p/30 in most games, 1080p/60 for games that ran at 60 on consoles. The first game on which I remember the 760 being at a distinct disadvantage compared to consoles was Doom 2016, and that was pretty much just because the console versions had dynamic resolution and the PC version didn't.

That was where I upgraded but to be honest I don't know for sure that 760 can't keep up with base consoles today. Maybe the 2GB can't but I wouldn't be surprised if there were games today where the 4GB could. I imagine the 770 can absolutely still beat the base PS4. And that's considering how badly Kepler aged.

Well said.

Would the value of the 2080Ti really drop that much? I also find it likely that the 3070 will be similar in raw GPU capabilities, but what about the RAM? I heard rumours of the 3080 having 10GB, so is it possible the 3070 will only have 8, resulting in the 2080Ti still being better?

The possible memory-size and minor performance edge in most current games could be offset, or even reversed, since the 3070, like other Ampere cards, is rumored to have significantly improved ray tracing capability in games that use it, and more games probably will as the next generation gets rolling. This is a terrible time to buy a 2080 Ti, or any Turing card really.

No, the 2080 Ti will still sell for more than $500 used. Older/clearance hardware will hold higher-than-deserved aftermarket prices simply because of demand for those models. But the point is that the RTX 3070, presumed to be a $500 card, is poised to come within about 5% of its performance.

IMO buying a 2080 Ti for more than a 3070 is probably going to be about as good a decision as buying a 780 Ti just before or after the 970 came out. It's going to be interesting to see how aggressive clearance sales get.

If you can get that much, I would absolutely go for it. It would go a long way toward buying a 3080 Ti, or probably cover the cost of a 3080 with money left over.

You've really got me thinking. I can totally live without my 2080 Ti for the next few months if I sold mine now. The resale value is crazy right now on eBay. I could sell my card I bought 18 months ago for $100 less than I paid for it. Very tempting.

Weirdly, the video is at 24 fps, which really bothered me. Is AMD trying to sabotage how their card's performance looks?

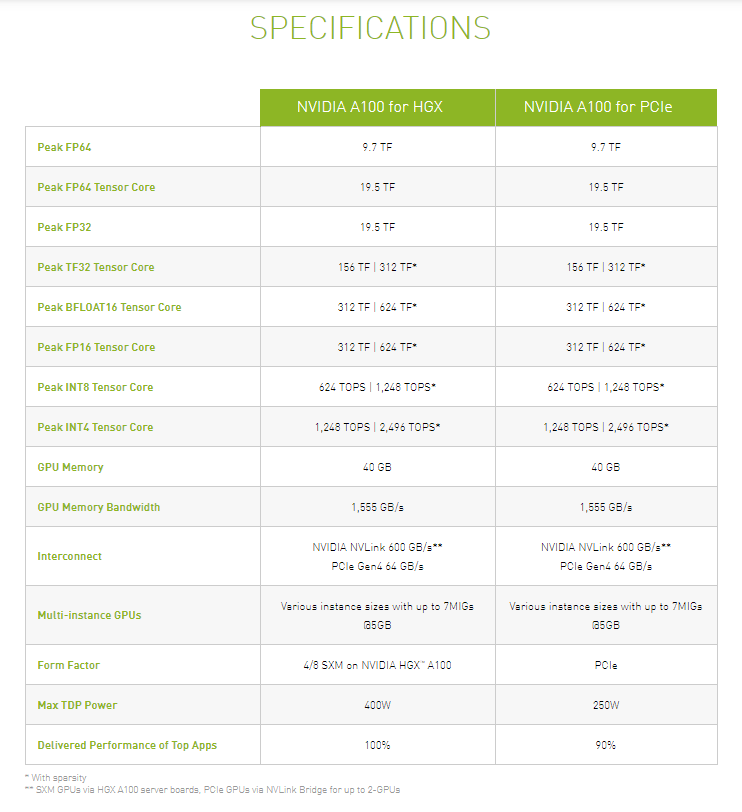

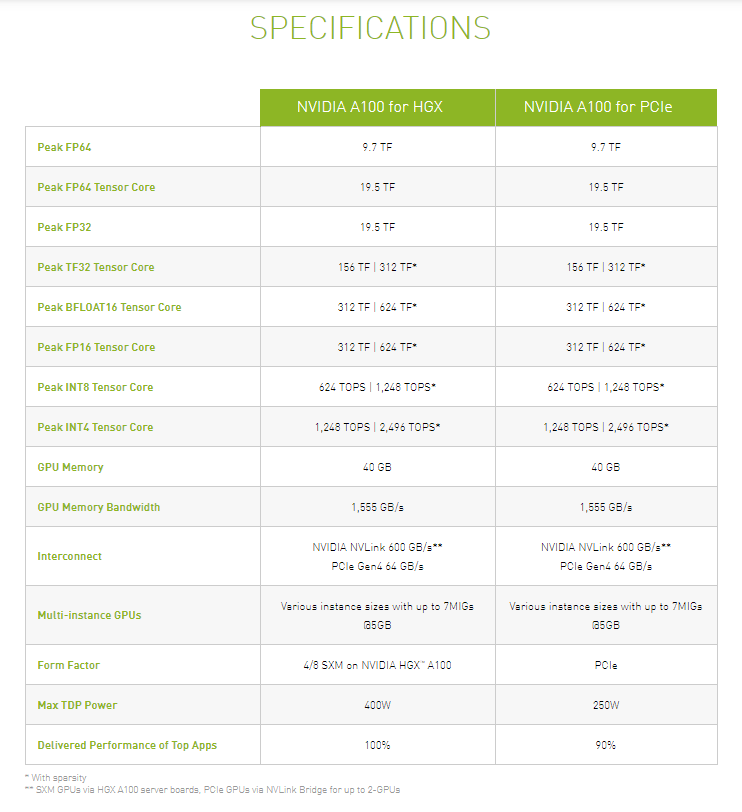

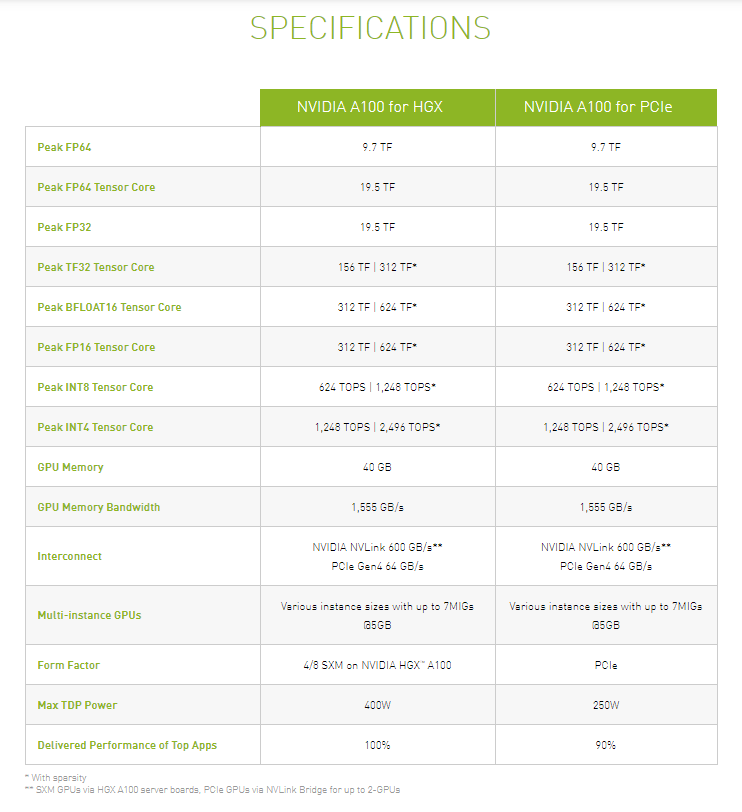

A100 PCIe tops out at 250W power while providing 90% of A100 SXM performance:

So much for Ampere being power hungry I guess.
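The A100 PCIe point is really a performance-per-watt claim. A sketch, assuming the A100 SXM4's 400W TDP (not stated in the post) alongside the quoted 250W and 90% figures; both wattages are board power limits, not measured draw:

```python
def perf_per_watt(rel_perf: float, watts: float) -> float:
    """Relative performance delivered per watt of board power limit."""
    return rel_perf / watts

sxm = perf_per_watt(1.00, 400)   # A100 SXM4: baseline performance, 400 W (assumed TDP)
pcie = perf_per_watt(0.90, 250)  # A100 PCIe: 90% of SXM performance at 250 W

print(f"PCIe perf/W vs SXM: {pcie / sxm:.2f}x")
```

Under these assumptions the PCIe card delivers about 1.44x the performance per watt, a reminder that the last 10% of performance is often the most power-hungry part of the curve.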

That's all you can back your claim of Turing being the worst h/w investment with?

AMD's marketing has always been terrible, remember "Poor Volta"? lol

Weirdly, the video is at 24 fps, which really bothered me. Is AMD trying to sabotage how their card's performance looks?

Well, the type and amount of reflections (and nothing else RT-based there) do hint at some things. But it's impossible to know whether those hints will turn out to be true, since this could just be an artistic choice, really.

It's just a pre-recorded video, not a real-time demo.

Weirdly, the video is at 24 fps, which really bothered me. Is AMD trying to sabotage how their card's performance looks?

Realistically it can't be more, as that would push the x80 to $1000, and that's not going to happen now that AMD is hopefully competitive.

That was where I upgraded but to be honest I don't know for sure that 760 can't keep up with base consoles today. Maybe the 2GB can't but I wouldn't be surprised if there were games today where the 4GB could. I imagine the 770 can absolutely still beat the base PS4. And that's considering how badly Kepler aged.

I've been using a factory-overclocked GTX 660 since 2013, and I've been able to follow the PS4 generation up until this year at 1080p, high settings, normally around 40fps. Where it finally failed was Doom Eternal this spring, which is (practically) unplayable on the card.

The 670/760 would likely be a more comfortable PS4 equivalent, but looking at raw specs the 660 is the closer match, with 1.88 TFLOPS vs the PS4's 1.84 (and, I suppose, similar or better efficiency).

Well, then I hope the 3070 is around that. My current 1070 has one 6-pin and one 8-pin, so it tops out at (but never reaches, tbh) 300W.

A100 PCIe tops out at 250W power while providing 90% of A100 SXM performance:

So much for Ampere being power hungry I guess.

That's all you can back your claim of Turing being the worst h/w investment with?
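The 300W ceiling mentioned for the 1070 above follows from the nominal PCIe power limits: 75W from the x16 slot, 75W per 6-pin and 150W per 8-pin auxiliary connector. A minimal sketch using the connector list from that post (the dictionary and function names are illustrative):

```python
# Nominal power limits per source, in watts (PCIe slot + auxiliary connectors)
POWER_LIMIT_W = {"slot": 75, "6-pin": 75, "8-pin": 150}

def board_power_ceiling(connectors: list[str]) -> int:
    """Slot power plus the nominal limit of each auxiliary connector on the card."""
    return POWER_LIMIT_W["slot"] + sum(POWER_LIMIT_W[c] for c in connectors)

print(board_power_ceiling(["6-pin", "8-pin"]))  # 300
print(board_power_ceiling(["8-pin", "8-pin"]))  # 375
```

These are nominal spec ceilings; actual cards usually ship with a lower power limit set in firmware, which matches the "never reaches" observation.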

Judging by AMD's RT tech demo on RDNA2 recently, it probably won't be up to par but we'll see.

This is just absolutely hilarious, what a shit showcase.

Isn't the PS4's GPU literally just a tweaked HD 7850?

The 770 wasn't the card that was considered PS4-tier, the 760 was.

I got a 760 when it came out and was able to get performance somewhere just above base PS4 for a while. I was perfectly fine targeting 1080p/30 in most games, 1080p/60 for games that ran at 60 on consoles. The first game on which I remember the 760 being at a distinct disadvantage compared to consoles was Doom 2016, and that was pretty much just because the console versions had dynamic resolution and the PC version didn't.

That was where I upgraded but to be honest I don't know for sure that 760 can't keep up with base consoles today. Maybe the 2GB can't but I wouldn't be surprised if there were games today where the 4GB could. I imagine the 770 can absolutely still beat the base PS4. And that's considering how badly Kepler aged.

I'm still rocking an R9 280X, which is basically a rebranded 7970 GHz Edition, itself just an updated version of the 2012 7970. That card has aged like fine wine; it still plays most stuff at medium settings at 1080p. I'm due for an upgrade for next gen, though.

£250 for the 3060 and I wouldn't hesitate to buy it. If not, I guess it's just more waiting/seeing what AMD offers.

Don't see why they won't be. But no, there's been no express confirmation.

I didn't buy any of the RTX series because of that. I'll wait another card gen rather than buy one without HDMI 2.1.

I thought I'd made a fatal error buying RTX because of this, but then those cards wouldn't run my games at 4K/120/HDR anyway, so I'm fine with 1440p/120/HDR until 30x0 (or later).
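Whether 4K/120/HDR needs HDMI 2.1 comes down to the raw pixel data rate. A rough sketch counting only active pixels at 10 bits per RGB channel, compared against the nominal maximum link rates of 18 Gbps for HDMI 2.0 and 48 Gbps for HDMI 2.1; real links also carry blanking intervals and line-encoding overhead, so the true requirement is higher than this figure:

```python
def active_video_gbps(width: int, height: int, hz: int, bits_per_channel: int = 10) -> float:
    """Uncompressed RGB data rate of the visible pixels only, in Gbps (no blanking, no encoding)."""
    return width * height * hz * bits_per_channel * 3 / 1e9

HDMI_2_0_GBPS = 18.0  # nominal maximum link rate
HDMI_2_1_GBPS = 48.0  # nominal maximum link rate

rate = active_video_gbps(3840, 2160, 120)
print(f"4K/120/10-bit active pixels alone: {rate:.1f} Gbps")
print("Exceeds HDMI 2.0 before any overhead:", rate > HDMI_2_0_GBPS)
print("Below HDMI 2.1 nominal maximum:", rate < HDMI_2_1_GBPS)
```

The HDMI 2.0 conclusion is robust because overhead only makes it worse; staying under 48 Gbps on active pixels alone is necessary for HDMI 2.1 but does not by itself prove a given mode works.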

There is no secret Naughty Dog magic going on here, as quite a few people think; it's a standard 1440p resolution with TAA, output to 4K. The Xbox 360 has been doing this since around 2005: internal res 720p, output at 1080p.

As more and more specs leak out, I'm struggling to figure out which 3000 series card I want to get... Most of the time my PC will be connected to a 1440p @ 144Hz monitor, so I know even the 3070 should in theory crush that. I'm mainly trying to estimate how often I'll bring my PC out to the couch so I can game on a nice screen in 4K. Do PC games use any of the rendering techniques consoles use to avoid rendering at native 4K? I'm playing TLOU2 and at 1440p the IQ is just amazing.

Playing a PC game at 4K then lowering the internal resolution will provide the same results as TLOU2, as long as it has a good TAA solution to clean up jaggies.
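The 1440p-to-4K and 720p-to-1080p cases mentioned above scale by exactly the same factor, which a quick pixel count shows. A minimal sketch:

```python
def pixels(width: int, height: int) -> int:
    """Total pixel count of a resolution."""
    return width * height

pairs = {
    "1440p internal -> 4K output": ((2560, 1440), (3840, 2160)),
    "720p internal -> 1080p output": ((1280, 720), (1920, 1080)),
}
for label, (internal, output) in pairs.items():
    share = pixels(*internal) / pixels(*output)
    print(f"{label}: renders {share:.0%} of the output pixels "
          f"({pixels(*output) / pixels(*internal):.2f}x upscale by area)")
```

Both cases render about 44% of the output pixels (a 1.5x scale per axis, 2.25x by area), which is why rendering at 1440p roughly halves the per-pixel shading work compared with native 4K.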

£250 for the 3060 and I wouldn't hesitate to buy it. If not, I guess it's just more waiting/seeing what AMD offers.

That ain't happening; it'll likely be £349-399.

There is no secret Naughty Dog magic going on here, as quite a few people think; it's a standard 1440p resolution with TAA, output to 4K. The Xbox 360 has been doing this since around 2005: internal res 720p, output at 1080p.

Playing a PC game at 4K then lowering the internal resolution will provide the same results as TLOU2, as long as it has a good TAA solution to clean up jaggies.

Nope. ND has likely created a bespoke reconstruction algorithm within their TAA. So it's not at all like simply upscaling any random game.

Nah. He's right. Watch NX Gamer or DF. It's 1440p with their own TAA solution, but there's no reconstruction going on. It's straight 1440p with a 4K output via the console.
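For readers wondering how TAA can clean up jaggies without any reconstruction, the core idea is an exponential blend of each new (sub-pixel jittered) frame into an accumulated history, with the history clamped to the current frame's local range to limit ghosting. This is a generic 1-D illustration, not Naughty Dog's implementation; the blend weight `alpha` and the 3-pixel neighborhood are illustrative choices:

```python
def taa_resolve(history: list[float], current: list[float], alpha: float = 0.1) -> list[float]:
    """Blend one jittered frame into the accumulated history (1-D rows for brevity)."""
    resolved = []
    n = len(current)
    for i, (h, c) in enumerate(zip(history, current)):
        # Clamp history to the min/max of the current frame's 3-pixel neighborhood
        window = current[max(0, i - 1):min(n, i + 2)]
        h = min(max(h, min(window)), max(window))
        # Exponential moving average: mostly history, a little of the new frame
        resolved.append((1 - alpha) * h + alpha * c)
    return resolved

# An edge pixel (index 1) that sub-pixel jitter makes alternate between 0 and 1
frames = [[0.0, 0.0, 1.0], [0.0, 1.0, 1.0]] * 50
history = frames[0]
for frame in frames[1:]:
    history = taa_resolve(history, frame)
print([round(v, 2) for v in history])
```

The edge pixel settles near 0.5, a blended in-between value, instead of flickering between 0 and 1: that averaging over jittered frames is what smooths jaggies, while the output resolution stays the same as the input.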

Which game exactly? I don't think UC4, UC4 LL, or TLOU2 do reconstruction in their TAA for the output image, just for things like hair, SSR, etc.

Nope. ND has likely created a bespoke reconstruction algorithm within their TAA. So it's not at all like simply upscaling any random game.

Judging by AMD's RT tech demo on RDNA2 recently, it probably won't be up to par but we'll see.

Man, I'm so psyched for this technology. Watching that video has me so hyped for the raytracing wars. Graphic technology is going to be incredible. Give Me The Powerrrr!!!!!
