No, you didn't. I've been crazy busy with work. It's coming.

Consider this a brief respite for those to be sacrificed.

Again, PS4's RAM change was a last minute change. Unless PS5 gets delayed, it's too late to change.

RAM is an easier thing to change, that's true, but it still requires a lot of setup.

It wasn't really a last minute change, in fact.

They always wanted to have the 8GB of GDDR5, but it takes time to negotiate getting it at a good price, so they prepared a "plan B" in case the negotiation failed.

And while they talked with devs about "plan B" just in case, they worked on both scenarios at the same time.

In the end, the negotiation was successful, so they switched everything to "plan A" and put in the RAM they had always wanted.

It was something they wanted to do, but it was a last minute push.

"On the decision to have 8GB of RAM…I'm supposed to look at the hardware and talk to the developers. I am not a business guy. There is an organization Andy runs, which is the business organization.

So you don't want to speak up too soon. If you speak up too soon it's like, "That's a billion dollars we are talking about. Mark is good with the bits and the bytes but he doesn't understand this whole business thing." I very intentionally waited. They asked, "What do you think Mark?" I said, "Well, let's get feedback from the developers." I did not speak up until the very final meeting on the topic, and then I said, "I think we have to do this."

How big is the cache? You've brought it up a few times, but never mentioned what size this cache actually is, which is seemingly a very important factor.

Microsoft themselves state that it's "typically easy for games to more than fill up their standard memory quota with CPU, audio data, stack data, and executable data, script data" with respect to the 3.5GB of slower bandwidth RAM, and given that this cache you speak of is probably comparatively tiny in size, I find the notion that this RAM won't be accessed frequently hard to believe.

I know it's hard to believe, but you'll just have to believe it : )

All 10 GDDR6 interfaces operate simultaneously. At any given moment, say the CPU of XSX requests data that resides on 2 chips, then the GPU will get to use 320 - 64 = 256 bits of bandwidth; for a similar scenario on PS5, the GPU gets 256 - 64 = 192 bits of bandwidth.

Never said anything about it being significant... or even that it's a bad thing. It's just a by-product of their RAM layout choice. And I would imagine that they did a lot of testing and concluded that the bandwidth hit was acceptable.

It's also easy to overstate the impact of cache. It has been a part of every high performance CPU design for decades, and yet we still provision computers today with substantial amounts of DDR4 bandwidth because the CPU still makes good use of it. Estimating exactly how much bandwidth CPU tasks will require is going to be difficult, as current generation titles running on the newer hardware are going to represent a much lighter CPU load than games designed from the ground up for the newer hardware.
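
For anyone who wants to play with the bus-width numbers above, here's a rough sketch. It assumes one 32-bit interface per GDDR6 chip and that a chip busy serving the CPU contributes nothing to the GPU in that moment; the function name is made up for illustration.

```python
# Sketch: bus width left over for the GPU while the CPU keeps some chips busy.
# Assumption: one 32-bit interface per GDDR6 chip, as the post describes.
BITS_PER_CHIP = 32


def gpu_bits_available(total_chips: int, chips_busy_with_cpu: int) -> int:
    """Return the bus width (in bits) still free for the GPU."""
    if not 0 <= chips_busy_with_cpu <= total_chips:
        raise ValueError("busy chips must be between 0 and total_chips")
    return (total_chips - chips_busy_with_cpu) * BITS_PER_CHIP


# XSX: 10 chips (320-bit bus); PS5: 8 chips (256-bit bus).
xsx_bits = gpu_bits_available(total_chips=10, chips_busy_with_cpu=2)
ps5_bits = gpu_bits_available(total_chips=8, chips_busy_with_cpu=2)
print(f"XSX GPU: {xsx_bits} bits, PS5 GPU: {ps5_bits} bits")
```

This only counts wires, not real-world contention, so treat it as a back-of-the-envelope check rather than a performance model.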

I don't think he's overstating the impact of cache. Compared to the GPU's constant stream of data, the CPU's memory accesses still look few and far between. Even in bandwidth terms, a high end quad channel DDR4 setup can achieve somewhere around 70GB/s of bandwidth; with a CPU running at 3.6 GHz, that gives you roughly a quarter to a third of a typical 64-byte cache line per cycle. Doesn't sound like a lot, but it should already be overkill for consoles, as I don't think anyone will be rendering 4K raw videos on their gaming consoles.
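
The DDR4 estimate above is easy to sanity-check. A minimal sketch, assuming a 64-byte cache line (typical for x86, not stated in the post) and treating 70 GB/s and 3.6 GHz as the round numbers they are:

```python
# Sketch: how much memory bandwidth a CPU gets per clock cycle.
CACHE_LINE_BYTES = 64  # assumption: typical x86 cache line size


def bytes_per_cycle(bandwidth_gb_s: float, clock_ghz: float) -> float:
    """GB/s divided by GHz gives bytes transferred per clock cycle."""
    return bandwidth_gb_s / clock_ghz


per_cycle = bytes_per_cycle(bandwidth_gb_s=70, clock_ghz=3.6)
fraction_of_line = per_cycle / CACHE_LINE_BYTES
print(f"{per_cycle:.1f} bytes/cycle, {fraction_of_line:.2f} of a cache line")
```

So the "quarter of a cache line" figure is in the right ballpark, landing a bit closer to a third with these inputs.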

Mark Cerny "PS4 8GB GDDR5 RAM Was Decided in Very Final Meeting"

During an interview with Game Informer, PS4 lead architect Mark Cerny revealed the decision of the PlayStation 4 receiving 8GB of GDDR5 RAM was decided at the very final meeting. At first, it was going to only be limited to 4GB but Cerny knew that GDDR5 is far superior and made for the... www.fraghero.com

I thought it was the opposite. From the outset 4GB was planned. Plan B was 8GB if price allowed. At the last minute, prices dropped and they pushed for 8GB.

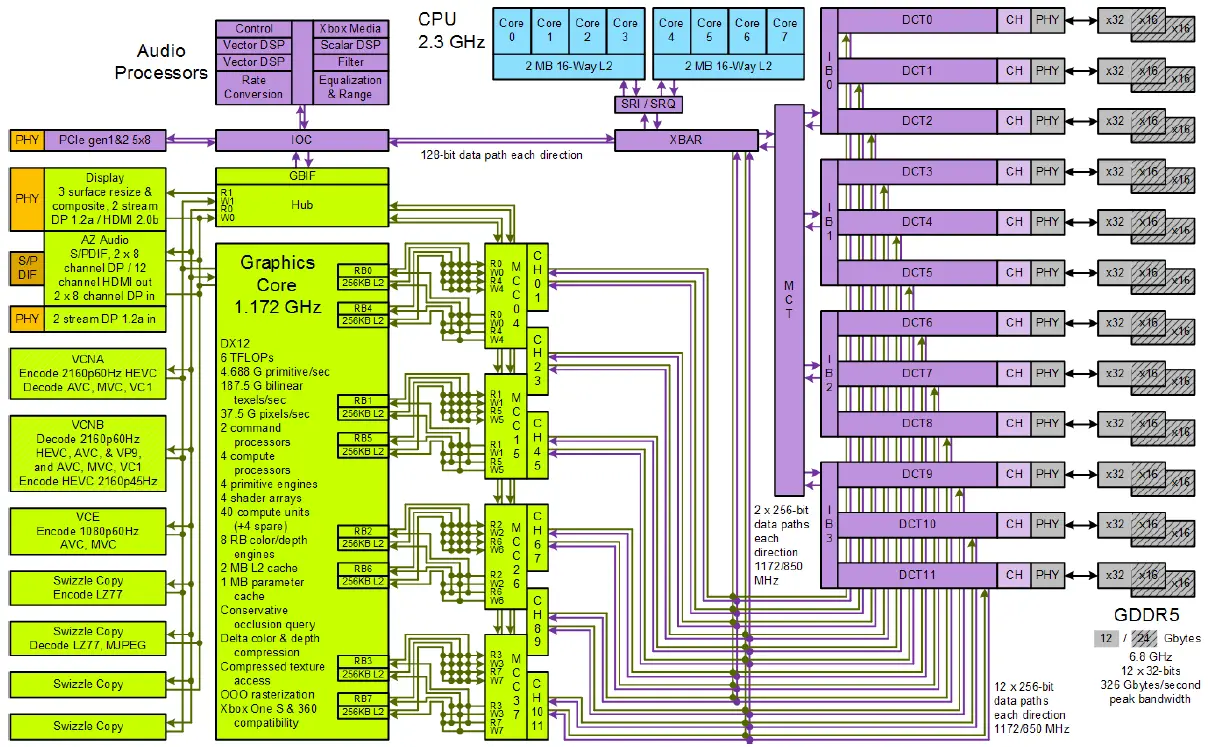

That sounds like an unusual memory setup. When you say GDDR6 interfaces, do you mean every GDDR6 memory chip is addressed separately, like they each have their own memory controller? That's a bit surprising, and if you've got a link that details that I'd like to see it.

Is that Tempest audio engine thing a discrete chip or something, or is it taking up silicon space in the main APU by sharing resources of the GPU or whatever?

In either case, I find it concerning that Sony would waste R&D resources and/or wafer acreage on audio matters, when that isn't even what the mainstream audience is going to care about as much as extra graphics power.

Could this audio engine investment be Sony's equivalent to Xbox One's Kinect camera and eSRAM follies/blunder? Are they not jumping through all these other hoops now, like variable frequency, just to contain costs and power budget? Sounds like something that could've been avoidable.

It would seem that Cerny's only ace in the hole really is this super fast SSD that makes it a Super PlayStation, and it's the only thing keeping me in the PlayStation camp; but I feel like this is going to be dangerously close to PS3 all over again where it will be an exclusives machine again and the exclusives are never even all that good because they don't have Zelda nor Mario.

So they have an ace in the hole, but their saving grace will be to not fuck up the marketing (by continuing to focus on games, games, games). Let's hope Sony can pull it off by just maintaining their current course. Just let Cerny continue to do all the talking, and lock down that Activision/Call-of-Duty marketing.

The Tempest Audio Engine is basically one extra compute unit; I doubt the customization added a lot of area. So it's less than 1/36 of the GPU area. MS has put R&D and silicon wafer area into audio on the XSX too from what I understand; and if not, it'll detract from their GPU/CPU advantage.

I don't know about the controller situation, but at least the number of GDDR6 PHYs (physical layer interfaces) on these SoCs and GPUs matches the number of GDDR6 memory chips they have.

Saw your wife is a nurse. Make sure to treat her extra nicely during these tough times. :)

And thanks for the update.

<3

Ideally, I think the two consoles would be better off with 16 Gbps GDDR6 modules, for 512 GB/s of bandwidth for Sony and 640 GB/s for Microsoft, but they want to reach a certain MSRP. And only SK Hynix offers a 16 Gbps 2 GB module; it's only in the sample phase for Samsung.

I don't recall SK Hynix announcing 14Gbps 16Gb ICs, and definitely not anything over 14Gbps; for 16Gbps and more you would only find Samsung ICs, for both 8Gb and 16Gb. In fact, Digital Foundry showed the PCB memory layout for those wondering. However, both Micron and Samsung can equip the PS5, since it appears it's 8 ICs of 16Gb at 14Gbps.
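
The 512 and 640 GB/s figures fall straight out of bus width times per-pin data rate. A quick sketch, assuming the 256-bit (PS5) and 320-bit (XSX) bus widths discussed elsewhere in this thread; the function name is just for illustration:

```python
# Sketch: theoretical peak bandwidth = bus width (bits) * data rate (Gbps/pin) / 8.
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Return peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbps / 8


BUSES = {"PS5": 256, "XSX": 320}  # bus widths in bits

for name, width in BUSES.items():
    at_14 = peak_bandwidth_gb_s(width, 14)
    at_16 = peak_bandwidth_gb_s(width, 16)
    print(f"{name}: {at_14:.0f} GB/s at 14 Gbps, {at_16:.0f} GB/s at 16 Gbps")
```

These are paper peaks only; they say nothing about what the chips cost or who can actually supply them.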

The reason why 48GB/s of bus traffic for the CPU on Series X costs you 80GB/s of the theoretical peak GPU bandwidth is because it's tying up the whole 320-bit bus to transmit only 192 bits of data per cycle from the slower portion of RAM we've been told will typically be used by the CPU. 48GB/s / 192 bits * 320 bits = 80GB/s of effective bandwidth used to make that 48GB/s available. This is because only six of the ten RAM chips can contribute to that additional 6GB over and above the 10GB of RAM that can be accessed more quickly when all ten are used in parallel.

I agree with you that the GPU accessing all 10 ICs at 560GB/s will never happen (or at least not for 99.99999% of the time, whenever the CPU uses any memory). However, I still don't know about the overall cost of CPU memory access: are we sure they use 32-bit buses for all six 2GB ICs? There is a 2-channel (16 bits wide) mode on GDDR6; wouldn't it make more sense to have the GPU still access the 2GB ICs with limited width?
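
The 48 to 80 GB/s scaling is simple enough to write down. A minimal sketch of that argument, which assumes (as the quoted post does) that the whole 320-bit bus is tied up whenever the 192-bit slow region is being read; whether the hardware really behaves that way is exactly what's being debated here:

```python
# Sketch: effective peak bandwidth consumed by traffic on a narrower slice of the bus.
# Assumption: the full bus is occupied while only `active_bits` carry data.
def effective_cost_gb_s(traffic_gb_s: float, active_bits: int, total_bits: int) -> float:
    """Scale the traffic by the ratio of total bus width to the width in use."""
    return traffic_gb_s * total_bits / active_bits


PEAK_GB_S = 560  # XSX figure for all ten chips in parallel

cost = effective_cost_gb_s(traffic_gb_s=48, active_bits=192, total_bits=320)
print(f"cost: {cost:.0f} GB/s, left for the GPU: {PEAK_GB_S - cost:.0f} GB/s")
```

If the four idle chips can keep serving the GPU in parallel, the real cost would be lower than this estimate.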

The Tempest engine is an audio ASIC; from what I gather it must be part of the APU, much like all the dedicated I/O ASICs are embedded into the SoC of both consoles. I don't think it's part of the GPU, even if it apparently is a repurposed RDNA2 CU.

I think the goal of all that dedicated hardware is to save as much CPU and GPU power as possible. ASICs should be a worthwhile investment if developers suddenly want to make use of all the new functionalities. While the CPU is free from handling I/O and compression/decompression and the audio doesn't need any GPU resources, all that power goes into holding >2.1GHz clocks. It's actually a guarantee that a lot of thought went into being as power efficient as possible, given that probably ~300mm² and a few ICs will burn ~250W of power.

GDDR6 ICs use 32-bit data buses to the memory controllers, so the SoCs have 10 (XSX) or 8 (PS5) 32-bit GDDR6 controllers to handle them separately. They can also operate in 2-channel mode for 16-bit-wide transfers with little performance loss.
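
To put numbers on that, here's a small sketch of how chip count maps to bus width and channel count. It assumes every chip exposes two independent 16-bit channels (i.e. one 32-bit interface per chip); the names are made up for illustration.

```python
# Sketch: total bus width and channel count from the number of GDDR6 chips.
# Assumption: each chip has two independent 16-bit channels (32 bits total).
CHANNELS_PER_CHIP = 2
BITS_PER_CHANNEL = 16


def memory_layout(chips: int) -> dict:
    """Return bus width in bits and number of 16-bit channels for `chips` ICs."""
    channels = chips * CHANNELS_PER_CHIP
    return {"bus_bits": channels * BITS_PER_CHANNEL, "channels": channels}


xsx_layout = memory_layout(10)
ps5_layout = memory_layout(8)
print("XSX:", xsx_layout)
print("PS5:", ps5_layout)
```

How the controllers are actually grouped on the die is a separate question that this doesn't answer.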

en.wikichip.org

PS4 was a RAM config and MB change, not a simple chip swap out.

It was a change decided at the very last meeting. Do you have a source for the MB change?

Read towards the bottom of the article and come to your own conclusions.

Inside PlayStation 5: the specs and the tech that deliver Sony's next-gen vision

Sony has broken its silence. PlayStation 5 specifications are now out in the open with system architect Mark Cerny deli… www.eurogamer.net

Each pair of Render Boxes is wired to one Memory Controller Cluster (MCC). Each MCC consists of two dedicated memory controllers and two more that are shared between a pair of MCCs. In total there are four clusters, each with two dedicated controllers, plus two more pairs (4 controllers) of channels shared between pairs of MCCs, for a total of 12 channels.

Just saw this tweet by Brad Sams:

Pretty disappointing tbh. First he made up some conspiracy stuff about PS5 actually being 9.2 TFLOPS, and now he is implying that Cerny lied and that the raw SSD speed is actually lower. I always thought that he is one of the more reputable journalists, but he went full tinfoil hat console warrior with this crap. Shame.

Really? He seemed very biased about anything MS related.

I mean, it's thurrott.com. Why should we expect anything but unofficial marketing from them?

The notion that dedicating less than 1/40th of your APU to an audio ASIC that'll get its own API so that every game can have quality positional audio is "a waste" should be offensive to anyone with a set of working ears, and comparing it to the band-aid of strategically compromised design that was DDR3+eSRAM is downright trolling.

idk who this person is and what his previous tweets were. But is it wrong to assume performance figures probably should be based on sustainable clocks or realistic scenarios? Just like MS put forward 560 GB/s of memory bandwidth but can realistically only guarantee at least 224 GB/s to the GPU 100% of the time and at least ~392 GB/s in CPU intensive workloads?
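
For what it's worth, the 560, 224 and ~392 GB/s numbers all line up with whole multiples of a single chip's bandwidth. A rough sketch, assuming 14 Gbps per pin and one 32-bit interface per chip; the post doesn't say how the 224 and ~392 figures were derived, so this is only one possible reading:

```python
# Sketch: aggregate bandwidth of N GDDR6 chips working in parallel.
# Assumptions: 32-bit interface per chip, 14 Gbps per pin.
BITS_PER_CHIP = 32
DATA_RATE_GBPS = 14


def chips_bandwidth_gb_s(chips: int) -> float:
    """Return the combined peak bandwidth of `chips` ICs in GB/s."""
    return chips * BITS_PER_CHIP * DATA_RATE_GBPS / 8


for chips in (4, 7, 10):
    print(f"{chips} chips: {chips_bandwidth_gb_s(chips):.0f} GB/s")
```

Under these assumptions 4 chips give 224 GB/s, 7 give 392 GB/s and all 10 give 560 GB/s.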

It's not like only a few select audiophiles will get to enjoy it either. Anyone with a pair of headphones is getting support on day 1. Any decent binaural demo on YouTube should give you an idea of what it's like - although without the selectable HRTF that is frankly one of the most novel things about it.

It was a change to a butterfly design that allowed it, which doubled the number of modules they could use.

I thought they moved from 256 MB modules to 512 MB modules.

I wonder why Sony has not shared how many GB of RAM will be used for games. Maybe it's not yet decided?

I'm curious about this too. I think it's info that will come with a proper reveal. That GDC stream was just that, for GDC.

The audio stuff is the only thing interesting to me about the PS5 so far. It uses a modified CU that works similarly to a Cell SPU.

Y'all don't know Brad Sams has the PS5 already lol. I mean, he's saying that people are downplaying Xbox specs, yet he downplays Sony's SSD 🤦‍♂️

Brad Sams is on here, care to comment Brad?

It's weird that MS basically has professional fanboys.

It is wrong to assume. Based on Cerny's demo, the PS5 will perform closer to 10.28 than to 9.2, and he even said he has liked GPUs at a higher frequency for a while, and they even had to cap the GPU because it was running at too high a frequency, if I remember correctly. Anyone who claims the 9.2 number doesn't really understand what's going on.
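
For reference, both TFLOPS numbers come from the same formula with different clocks. A quick sketch; the inputs are assumptions taken from the publicly quoted specs rather than from this thread: 36 CUs, 64 lanes per CU, 2 FLOPs per lane per cycle (one fused multiply-add), a 2.23 GHz cap for the 10.28 figure and 2.0 GHz for the 9.2 figure.

```python
# Sketch: peak FP32 throughput from CU count and clock speed.
# Assumptions: 64 lanes per CU, 2 FLOPs per lane per cycle (fused multiply-add).
def fp32_tflops(cus: int, clock_ghz: float,
                lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Return peak single-precision TFLOPS."""
    return cus * lanes_per_cu * flops_per_lane * clock_ghz / 1000


at_cap = fp32_tflops(cus=36, clock_ghz=2.23)
at_2ghz = fp32_tflops(cus=36, clock_ghz=2.0)
print(f"2.23 GHz: {at_cap:.2f} TF, 2.0 GHz: {at_2ghz:.2f} TF")
```

Where the clock actually sits under load is the open question; the formula only gives the ceiling for a given frequency.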

It's their job. I remember Microsoft had paid astroturfers on the old site leading up to the launch of the Xbox One.

Honestly MS PR is pretty gross and IMO is detrimental to the Xbox brand.

Man I remember the astroturfing on the old site. It was so obvious.

Era wonders why there are very few actual sources that come onto this site but then will quickly call anyone that isn't agreeing with them or defending their favorite company's honor biased, astroturfing, etc etc.

So you're saying Brad Sams knows more than Cerny about the PS5?

I'm more convinced Sony is trying to target $399. Or at least cheaper than $499.

Claiming PS5 is 9.2TF isn't exactly "not agreeing". It's spreading FUD.

We don't know until the consoles are out where the variable clocks will land most of the time.