Is there a recommended (and/or reliable) "Can my PC run this game?" site or app?

Most of them just have a hierarchy of popular parts and compare with the minimum requirements of the game. In my experience you can usually play a game at lower than minimum requirements so the use case for such a site is kind of questionable. If you have such a low end PC that you need a site like that, you probably understand the experience will be sub-par and the question is, will it be good enough for me (a very subjective qualification).

Personally I would avoid such sites and just search YouTube for videos of people playing on the hardware you have. You can see it running and decide for yourself. Check for gameplay with the same graphics card as yours first; if that looks acceptable, search for gameplay with the same CPU.

If you have a fringe model of anything, find your own hierarchy list and find the closest touchstone. Being able to say, my CPU is 10% worse than this very popular CPU will at least let you ballpark estimate how a game will run based on videos of the game running on that popular model.

If all of this is too much work then Can You Run It-type sites are probably the best you have.
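The "my CPU is 10% worse than this popular CPU" ballpark can be written down as a one-liner. This is only a rough sketch: `ballpark_fps` is a made-up helper name, the 60 FPS figure is a hypothetical number read off a video, and it assumes the game is limited by that one component and that FPS scales linearly with it, which is often not true.

```python
def ballpark_fps(reference_fps: float, relative_perf: float) -> float:
    """Scale an FPS figure seen on a popular part by your part's relative performance.

    relative_perf is your part's score divided by the reference part's score,
    e.g. 0.90 for "10% worse". Assumes the game is limited by that component
    and that FPS scales linearly with it (a rough approximation at best).
    """
    if reference_fps <= 0 or relative_perf <= 0:
        raise ValueError("both inputs must be positive")
    return reference_fps * relative_perf


# Hypothetical numbers: a video shows 60 FPS on the popular CPU, yours is 10% slower.
print(round(ballpark_fps(60, 0.90)))  # 54
```

Treat the result as a starting point for picking settings, not a prediction.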

I just use YouTube. Type in your CPU and GPU model along with whatever game and go from there. Usually you can get a pretty decent idea that way.

Thank you! This is very helpful. I'm very flexible about tuning down specs to get a game to run.

So I just found this gem on PCPartPicker regarding my setup:

The Gigabyte Z490 AORUS ELITE AC ATX LGA1200 Motherboard has an additional 4-pin ATX power connector but the EVGA BQ 600 W 80+ Bronze Certified Semi-modular ATX Power Supply does not. This connector is used to supply additional 12V current to the motherboard. While the system will likely still run without it, higher current demands such as extreme overclocking or large video card current draws may require it.

I have a 10700 (not K) and a 2070 Super. Will this bring trouble in the future?

What DOCSIS 3.1 cable modem should I buy? Is there any consensus?

I don't pay for modem rental with Charter Spectrum, but the modems they give you have the awful Intel Puma chipset with the latency issues, and supposedly even after various firmware patches they still perform worse than modems with a Broadcom chipset. To that end I have been looking at buying my own, and it looks like either the Arris Surfboard SB8200 or the Netgear CM1000 is the way to go, as both are Broadcom-based and supported by Charter. However, I'm having trouble deciding between the two: both are generally well reviewed, but as with most networking equipment there is a subset of users saying that one or the other is complete trash. I'm just looking for anyone here who may have personal experience with one or the other.

Quick question: Is there a thread here where I can ask about running old (Windows 98/XP) games on Windows 10?

I'm at my wits' end trying to figure things out.

Maybe try asking here? PC Gaming ERA | January 2021 - New Year Plus OT | ResetEra

You can safely ignore the extra 4-pin connector. As you already surmised, that extra power is for extreme overclocking, like sub-ambient shit. Your video card has nothing to do with the power supplied to your motherboard, and the 10700K should never draw enough power to require that extra 4-pin EPS connector.

So hoping someone smarter than I can work this one out... it has been an issue for a while and I honestly don't know what else to try at this point (other than getting a new motherboard).

I currently have an i7 9700K on a Gigabyte Aorus Z390 Pro motherboard with an RTX 2080 and 16GB of RAM, with a G-Sync ultrawide monitor. It's not a bad system, and I have had it for just over a year.

I decided to buy a 1TB WD Blue SN550 NVMe drive to use as my primary OS and games drive. Ever since I added that drive I have had nothing but issues: the PC would randomly stutter (like a hard FPS drop). The game it was most obvious in was Doom Eternal. I would be running fine at 120FPS, then all of a sudden it would drop to under 30-40 FPS and stutter as if it were loading something. It would only last a few seconds, but it kept happening at random.

Oddly this didn't happen in all games, mostly in games where I was able to hit the 120FPS limit. I never saw it happen in Cyberpunk, for example, but I did see it in Doom, Doom Eternal, Halo MCC, and a few others.

I tried literally everything I could think of to fix it. I even had to RMA the mainboard after I screwed up a BIOS upgrade (never do it in Windows, I learnt). I reinstalled Windows, tried all sorts of CPU settings (changing C-states, turning off Speed Shift, etc.) and made sure temps never got above 70 degrees C.

I moved back to my standard SSD (Samsung 840 EVO) and reinstalled Windows, and now all the games are fine again, which likely means the CPU settings I suspected were making no difference because the CPU was not the issue.

The mainboard manual lists that drive as compatible, and even the SATA lanes are listed as all free if the drive is plugged into the right M.2 port. The only thing I wondered is whether the GPU was taking up too many lanes or something. There does not appear to be a BIOS option to change the M.2 drive speed.

Part of me thinks I might just have to stay this way until I upgrade again, but I am unsure how much an NVMe drive might be needed this generation.

TL;DR: Adding an NVMe drive to my PC caused major frame drops/stuttering in most high-framerate games.

I hope this is the right place to ask. I would like to ask about resolution scaling and sharpening.

As I am still gaming on a GTX 1080 at 1440p, I need to make some graphical sacrifices in newer games like Cyberpunk. So I used the in-game "static resolution scaler" and saw a pretty big performance bump when I set it to 90%. And yeah, the game looked extremely fuzzy, since I have always gamed at native resolution.

Then I thought to myself, what if I try the GeForce Experience sharpening? So I just opened up the menu and turned sharpening on (I didn't play with the settings). The end result seemed... literally identical to me.

I thought I was going crazy, so I captured one screenshot at native resolution and one with sharpening and resolution scaling at 90%, did a side-by-side comparison and... it looks identical. Maybe there is a bit more "grain" on the ground texture in the distance.

Am I blind? Am I missing something? Maybe my comparison was in a bad place so the difference doesn't show? Or is it possible the end result is almost identical? Should I just do the same thing in every demanding game for the frames?

If you are still using static resolution at 90% in Cyberpunk while enabling Nvidia's sharpening, you are doubling up on sharpening filters, as Cyberpunk automatically includes FidelityFX. I would guess you'll see some sharpening artifacts and maybe some in-motion irregularities. Maybe you can throw up the comparison you made. Cyberpunk also does some upscaling of its own before the sharpening filter, which may be better or worse than in other games, so the improvement might not be replicable everywhere.

All that matters is it looks good to you, play for a bit and if you forget it isn't native res then take the win, I wouldn't even necessarily worry about switching back and forth to catch a difference either.
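For a sense of why a 90% scale gives a noticeable performance bump, here is a small sketch of the arithmetic. It assumes the percentage applies to each axis (width and height), which is how such sliders commonly work but is not confirmed for this game; the function names are made up for illustration.

```python
from typing import Tuple


def scaled_resolution(width: int, height: int, scale_percent: int) -> Tuple[int, int]:
    """Internal render resolution, ASSUMING the scale applies to each axis."""
    return (width * scale_percent // 100, height * scale_percent // 100)


def pixel_fraction(scale_percent: int) -> float:
    """Fraction of native pixels rendered under the same per-axis assumption."""
    return (scale_percent / 100) ** 2


w, h = scaled_resolution(2560, 1440, 90)
print(w, h)  # 2304 1296
print(f"{pixel_fraction(90):.0%} of native pixels")  # 81% of native pixels
```

Under that assumption the GPU shades roughly a fifth fewer pixels per frame, and the upscale plus sharpening pass then tries to hide the difference.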

This is probably not easy to solve.

Have you run a CrystalDiskMark check on the drive? See if the speeds are what you would expect.

Which BIOS are you on? Older or newer versions could be tested.

Is simply having the drive installed enough to cause it? Were all the games installed on that drive when they stuttered? Does the issue happen when Windows itself is on a different drive but the game is on the NVMe?

Thanks for the reply, yeah it's a tough one. I never did try the NVMe as anything but a boot drive, so it might be worth testing it as a storage drive. The BIOS is F11, which I believe is the latest stable one.

And no, it didn't matter whether the game was installed on the NVMe or not, they all suffered regardless. It's an odd one.

Yes, I am using the static one. I tried to spot artifacts while moving, but I just can't seem to spot any that are not also present without FidelityFX and Nvidia sharpening. I am playing the game with motion blur on low and my frames mostly hover around 50-60, so that might make it harder to spot artifacts caused by the sharpening.

What's the easiest way to share pictures?

I'm about to buy a motherboard. This one (TUF Gaming Plus) has two 4.0 PCI-e x16 slots. However, I saw that only one of them actually has x16 speed. The other one operates at x4 speed.

I'm putting an RTX 3070 in it (needs PCIe 4.0 x16) and an Elgato 4K60 Pro (needs PCIe 2.0 x4). I assume that won't be an issue, because I can just fit the capture card in the other slot.

However, am I future-proof with only one fast PCIe x16 slot?

Edit: the reason I want this motherboard is its good VRM and temperatures for the price.

Regrettably, just the name of the motherboard without the chipset (I assume X570) is not that insightful. Also, while related, the number of lanes (x1, x4, x16, etc.) is not the same as the revision (3.0, 4.0). In a not very practical manner of speaking: to achieve the same bandwidth when going down one PCIe revision, you would need to double the number of lanes (e.g. PCIe 3.0 x16 = PCIe 4.0 x8).

But regardless, in real-world terms you will be fine. About the only realistic use you will find for a PCIe x16 slot is the GPU, and it seems unlikely that SLI/Crossfire for multi-GPU use will make a comeback anytime soon. So having one PCIe 4.0 x16 slot is about all you will need for the foreseeable future. I don't really understand your confusion about which slot to put your video capture card in, as PCIe is fully compatible between revisions and also between lane counts: you can put an x1 card into an x16 slot.
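To put rough numbers on the lanes-versus-revision point, here is a small sketch using the published per-lane signalling rates and line encodings (5 GT/s with 8b/10b for 2.0; 8 and 16 GT/s with 128b/130b for 3.0 and 4.0). The helper name is made up, and the figures are theoretical link maximums, not what any particular card will actually transfer.

```python
# Approximate usable bandwidth per lane in MB/s, after line-encoding overhead.
PER_LANE_MB_S = {
    "2.0": 5e9 * 8 / 10 / 8 / 1e6,      # 5 GT/s, 8b/10b encoding
    "3.0": 8e9 * 128 / 130 / 8 / 1e6,   # 8 GT/s, 128b/130b encoding
    "4.0": 16e9 * 128 / 130 / 8 / 1e6,  # 16 GT/s, 128b/130b encoding
}


def link_bandwidth_gb_s(revision: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    return PER_LANE_MB_S[revision] * lanes / 1000


for revision, lanes in [("3.0", 16), ("4.0", 8), ("4.0", 16), ("4.0", 4), ("2.0", 4)]:
    print(f"PCIe {revision} x{lanes}: {link_bandwidth_gb_s(revision, lanes):.2f} GB/s")
```

PCIe 3.0 x16 and PCIe 4.0 x8 come out the same (about 15.75 GB/s), and a PCIe 4.0 x4 slot has several times the roughly 2 GB/s that a PCIe 2.0 x4 card can use.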

Thanks!

Many thanks for your reply. It's my first time building a PC, so that's why I'm a bit confused. Indeed it's an X570. Looks like I don't have any problems then :-) I got a good deal (EUR 120,-) so I'm going forward with it.

Hey, so I'm looking to jump into PC gaming soon and was looking at something like this:

- 10th Gen Intel® Core™ i9-10900F processor(10-Core, 20M Cache, 2.8GHz to 5.2GHz)

- NVIDIA® GeForce® RTX 2070 SUPER™ 8GB GDDR6

- 16GB, 1x16GB, DDR4, 2933MHz

- 1TB M.2 PCIe NVMe Solid State Drive

I'm wondering what something like this would cost if I were building it myself (I'm looking at prebuilt ones, and something like this is about $1700).

It would probably be better to ask here:

The PC Builders Thread ("I Need a New PC") v3 PC - Tech - OT

And it would be helpful if you also look at the questions listed in the OP and include the answers in your post.

Doesn't matter in latest versions of Win10, it doesn't really do anything.

I'm not really sure how to get retro controllers to work with Steam? I have a Retrobit Saturn controller and also bought a 4Play so I can use my Dreamcast controllers, but when I try to use Steam's controller configuration I run into the issue of not having enough buttons/sticks to map, e.g. no right analogue stick. Is there somewhere else I should be configuring controllers for Steam?

I have a game-specific question; hopefully this is an okay place to ask it. I have a budget PC (GTX 1650 Super, Ryzen 5 1600AF, 16GB RAM, SSD) and I started playing Death Stranding last night. It's running pretty well: I'm getting 60+FPS on the "Default" settings at 1080p. The only issue is that performance tanks during the cutscenes. The FPS gets down into the 30s, and the constant up and down is very distracting. I'm pretty certain this isn't a case of my PC being unable to run it: it runs the game itself perfectly fine, but not pretty basic indoor cutscenes?

Is this normal? Is there any way to accommodate for this? I looked at some benchmark videos on YouTube for this game and my GPU, and they all have it running on "Very High" at 1080p and getting 60+FPS, which leads me to believe something is wrong here. My drivers are updated.

Try pausing and unpausing the game next time it happens.

Interesting, I'll give it another shot but I don't think that'll fix it. I paused during a few cutscenes to see if I could adjust the settings (you can't), and to turn on the FPS counter, and when I unpaused I still saw the issue.

My trusty Xbox 360 pad breathed its last recently, and after a frustrating amount of time trying and failing to hook up my console controllers, I feel I should probably play it safe and grab an Xbox One pad.

My question is, if I get a 3rd party Xbox One controller could I miss out on any of the plug and play 'it just works' magic that the Microsoft equivalent would have?

I think if you use the official USB wireless adapter it connects to the PC using the same driver as on Xbox, so in theory it should be treated the same. Not sure about Bluetooth or USB. I personally found Bluetooth awful for Xbox controllers on PC.

I haven't played DS in a while, but weren't the cutscenes limited to 30FPS anyways?

How long does thermal paste last while in the tube? I have had a tube since October 2018, I think. I've read online 2, 3, 5 years... anyone with experience? Arctic Silver 4, if that matters.

Give it a taste, if it's spicy then it's still good. :)

But really, 3 or 4 years is generally considered the "shelf life" for most thermal paste.

I wish, but definitely not. The framerate is all over the place for me during the cutscenes, as low as 30 but as high as 60. Locking it at 30 would be better than the way it is currently. Still can't figure it out.

Should be fine. This video shows someone testing on a similar miniPC:

Is a Ryzen 3600 a good pairing with a 3080? Is it worth upgrading right now? Not sure whether the CPU will be a bottleneck at 1440p or higher.

Personally have this combo, can tell you specific game info if you want but yes it's a fine pairing. Above 1080p it's pretty ideal. At 1080p CPU bottlenecking happens, but not that often.

Great to hear! I figure I can stick it out at 1440p, and if the CPU ever becomes the bottleneck I can upgrade to 4K, which I plan to do in the next year anyway.

If it's still a paste which you can squeeze out then it's likely fine.

Thank you!

I have a v-sync/framerate/refresh rate question. I have a 1440p, 75Hz monitor, but a really low-powered PC (GTX 1650 Super, Ryzen 5 1600AF, 16GB RAM, SSD). For games like TF2 and L4D2 I can hit 75fps no problem, but for others I obviously can't. For example, MGSV maxes out at 60fps (this is a fault of the game itself, not my system) and Death Stranding is too beefy for my PC, so I've been targeting 60fps @ 1080p (by using the in-game 60fps max setting). My question is, since my monitor is set to 75Hz, am I causing issues by running games at 60fps? MGSV is completely locked at 60fps @ 1440p, I never see any drops. But since the monitor is displaying at 75Hz, would it cause the framerate to appear lower than 60? For Death Stranding, I'd imagine that a 75Hz monitor displaying 60fps, and then occasionally dropping below 60 with v-sync on, is causing all sorts of issues.

Would it be better to keep things as is? Is the monitor smart enough to adjust to 60hz when displaying 60fps content? Or would it be best for me to set the monitor to 60hz before playing any game that will be running in 60fps?

Additionally, how does v-sync come into play here? If I'm playing DS at 60fps with v-sync on, and then framerate drops to 55fps, is the v-sync further compensating and bringing it way below 55fps to avoid tearing? The Steam FPS counter shows it dropping to 55fps, but is it in reality even lower? It's pretty jarring, even for mild drops. Would even something like 59fps screw everything up? What would be the workaround for something like this? I'm using an HDMI cable, I know that has some effect on it.

Unfortunately the monitor is not smart enough to do that. You can either manually change it, or you can have the monitor always at 60hz, and within Nvidia Control Panel set the games you run at 75hz to "run at highest available" refresh rate. This will make it smart the other direction, but unfortunately Windows will now be 60hz when not in a game.
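To make the mismatch concrete, here is a rough sketch of what a fixed 75hz display does with a perfectly paced 60fps stream under vsync. It is an idealized model, not a measurement: it assumes every frame is ready exactly on schedule and is shown on the first refresh at or after that moment. The function name `refreshes_per_frame` is made up for this illustration.

```python
import math
from fractions import Fraction


def refreshes_per_frame(fps, refresh_hz, n_frames):
    """Count how many refresh cycles each frame stays on screen.

    Idealized assumption: frame i is ready at exactly i / fps seconds
    and appears on the first refresh at or after that time.
    """
    frame_time = Fraction(1, fps)
    refresh_time = Fraction(1, refresh_hz)
    # Index of the refresh on which each frame first appears.
    shown_at = [math.ceil(i * frame_time / refresh_time)
                for i in range(n_frames + 1)]
    # A frame stays up until the next frame replaces it.
    return [b - a for a, b in zip(shown_at, shown_at[1:])]


# 60fps on a 75hz panel: one frame in every four is held for two refreshes.
print(refreshes_per_frame(60, 75, 8))
# 60fps on a 60hz panel: every frame is held for exactly one refresh.
print(refreshes_per_frame(60, 60, 4))
```

In this model the game still delivers 60 frames per second at 75hz, but the frames are not on screen for equal amounts of time, which shows up as judder rather than as a lower number on the FPS counter.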

As for vsync, the vsync that does what you describe is double buffered vsync. Most games use triple buffered at this point and don't drop when falling short of the target.
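For reference, the drop you are describing can be sketched with the usual simplified model of double buffered vsync. It assumes a constant render time per frame and that the GPU must wait for the next refresh before it can start another frame; real games fluctuate frame to frame, so treat this as an illustration only. `double_buffered_fps` is a hypothetical helper name.

```python
import math
from fractions import Fraction


def double_buffered_fps(render_fps, refresh_hz):
    """Effective frame rate under strict double buffered vsync.

    Simplified assumption: every frame takes 1 / render_fps seconds to
    render, and a finished frame waits for the next refresh before the
    GPU can start the following one.
    """
    # Number of whole refresh intervals each frame ends up occupying.
    intervals = math.ceil(Fraction(refresh_hz, render_fps))
    return refresh_hz / intervals


print(double_buffered_fps(60, 60))  # keeps up with the display
print(double_buffered_fps(59, 60))  # just misses every refresh
print(double_buffered_fps(55, 75))  # 75hz display, 55fps render rate
```

Under this model, narrowly missing the refresh interval halves the frame rate, which is why double buffered vsync feels so punishing. Triple buffering avoids that wait by giving the GPU a spare buffer to render into.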

Thanks, that makes sense. Is triple buffering turned off by default? I went into the Nvidia control panel and saw that it was off, but went ahead and turned it on for DS. Is that all I need to do, or was it already working?

Also, would G-Sync alleviate all of these issues altogether? My monitor supports Freesync but I found out it also works with G-Sync, I just need a displayport cable. Would something like that be worth it for such a low powered machine running games at 60/75fps?

By default Nvidia control panel will let the applications decide what vsync to use. As I said, most modern games already use triple buffered, even if they don't specify. You shouldn't have any issue just letting Death Stranding use its own vsync.

Gsync would do the job ideally, yeah, provided your monitor supports a sufficient gsync window and has no other issues with Gsync compatibility enabled.

You can search this table to see if your model has been tested: Rtings g-sync compatibility test

When it comes to gsync you want to keep vsync on (can force it in the control panel) and cap the framerate at 71 fps (can also do this in the control panel).

edit: as for would it be worth it, I think it would be very worth it provided the displayport cable is good enough to not cause flickering and your monitor supports Gsync compatibility with no issues. You can just let the games go wild with the framerate, typically I would expect any framerate from 45-75 would sync perfectly and you'd free yourself from any of your previous hz-related worries.
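A quick sketch of the reasoning behind the cap and the sync window. The 45-75 range is only the expectation mentioned above, not a tested spec for any particular monitor, and both helper names are made up for this example.

```python
def frame_time_ms(rate):
    """Time per frame (or per refresh) in milliseconds at a given rate."""
    return 1000.0 / rate


def in_vrr_window(fps, window_min_hz, window_max_hz):
    """True if the frame rate falls inside the variable refresh window."""
    return window_min_hz <= fps <= window_max_hz


# Assumed window: 45-75, taken from the expectation above, not from a spec sheet.
WINDOW_MIN_HZ, WINDOW_MAX_HZ = 45, 75

for fps in (40, 55, 60, 71, 75):
    print(fps, in_vrr_window(fps, WINDOW_MIN_HZ, WINDOW_MAX_HZ),
          round(frame_time_ms(fps), 2))
```

Capped at 71 fps, each frame takes a little longer than one 75hz refresh interval, so the frame rate stays just under the top of the window where the display can still follow it.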

So I've just received the ASRock Phantom Gaming D 6800 XT and the thing is a beast of a card, both in size and sheer capability compared to my GTX 1070. I did the right thing and removed all of Nvidia's drivers through DDU safe mode, so no issues there at all, but I'm wanting to ask a question about fine-tuning. Specifically I saw when playing Deliver Us the Moon (amazing game so far btw) that temperatures were hitting around 86°-92° with junction temperature around 98-99°. Now I have a mid-sized case with an ATX so I know that spacing can be, and is, an issue with air flow, so I'm going to test without the case panel to see if a major difference is felt in temperatures while playing, however I wanted to know what it is I can do to ensure I don't cook this GPU.

I understand that fan tuning can be a solution to decreasing temps, and I had a look through the Radeon software but was left a bit confused as to the "right" settings. Googling has not helped one bit so I am hoping Era can lend some insight otherwise I might just have to bite the bullet and get a bigger case with better fan flow. Side question as well, I've got the Mi 34" 144hz 1440p gaming monitor, and I'm wanting to know what is the best setup for getting the most out of freesync? I heard windowed fullscreen doesn't actually enable freesync, and I am best to have fullscreen with no vsync. Correct?

Creating a custom fan curve can definitely help. The right settings will be dependent on your specific setup, but maybe try something like 50% fan speed in the 60C range and then ramp up to 65% or higher at 75C. Of course, you'll have to play around with the settings to find a good balance of noise and temperature.
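If it helps to picture it, a fan curve is just a handful of temperature/speed points with the speeds in between interpolated. Below is a minimal sketch assuming straight-line interpolation between points; the 60C and 75C points are the ones suggested above, while the 40C and 90C endpoints are placeholders I made up, and the actual tuning software may interpolate differently.

```python
def fan_speed_percent(temp_c, curve):
    """Linearly interpolate fan speed (%) from (temp_c, percent) points.

    Temperatures below the first point or above the last point are
    clamped to that point's speed.
    """
    points = sorted(curve)
    if temp_c <= points[0][0]:
        return float(points[0][1])
    if temp_c >= points[-1][0]:
        return float(points[-1][1])
    for (t0, s0), (t1, s1) in zip(points, points[1:]):
        if t0 <= temp_c <= t1:
            return s0 + (s1 - s0) * (temp_c - t0) / (t1 - t0)


# (60, 50) and (75, 65) come from the suggestion above.
# (40, 30) and (90, 100) are made-up endpoints for illustration only.
CURVE = [(40, 30), (60, 50), (75, 65), (90, 100)]

for temp in (35, 60, 67.5, 75, 95):
    print(temp, fan_speed_percent(temp, CURVE))
```

Moving the upper points left makes the fans ramp sooner (cooler but louder), and moving them right does the opposite.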

You might also want to look into undervolting. You'll lose like 1-5% of performance at most (or even gain/maintain performance like my 3080 FE) but your temps can drop by 4-9 degrees.

Bit of a random question but why aren't there many USB C hubs out there which actually contain an extra 2+ USB C ports?

I'd like to get a usb hub that contains 2-3 usb c ports.

Is there any reason why these are hard to come by?

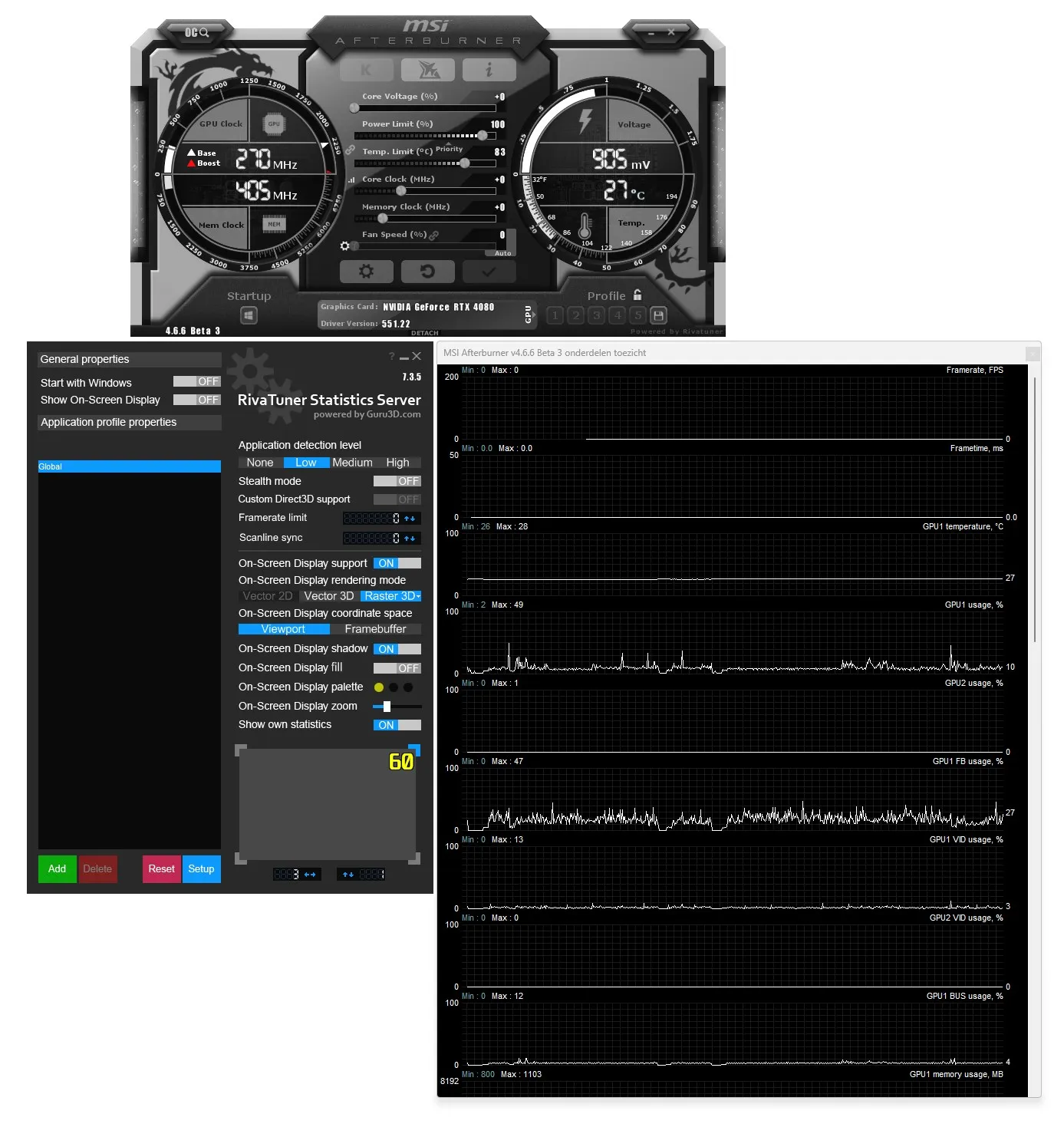

What's the best overlay to see FPS in game? I use Steam but I figure there's something better out there.

MSI Afterburner and Rivatuner Statistics Server

MSI Afterburner 4.6.6 Download

How do I activate subtitles so that they launch automatically when I open a video? Every time I have to right click and select the subtitle even if there's only one available! The program is set to "no subtitles" by default and I can't find anything in the options to change that