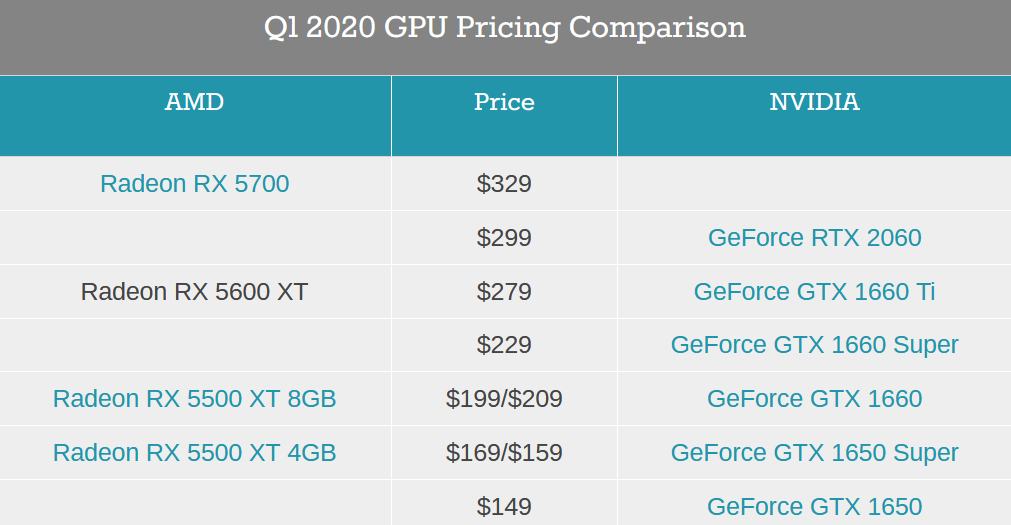

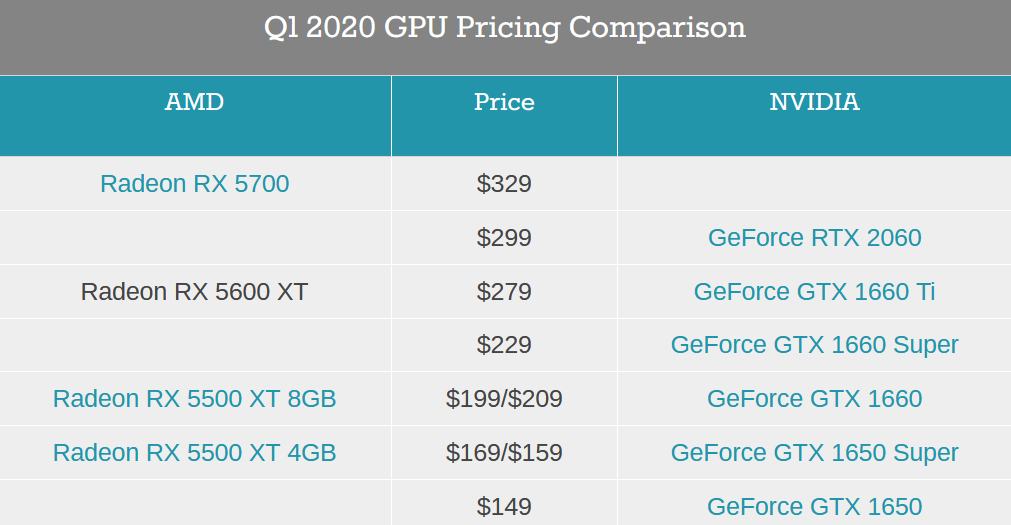

NVIDIA Cuts Price of GeForce RTX 2060 To $299

With AMD set to launch its new 1080p-focused Radeon RX 5600 XT next Tuesday, NVIDIA isn't wasting any time in shifting its own position to prepare for AMD's latest video card. Just in time for next week's launch, the company and its partners have begun cutting the prices of their GeForce RTX 2060 cards. This includes NVIDIA's own Founders Edition card, with the company dropping the price of that benchmark card to $299.