I don't know if this was posted, but it's a good one :D

Kugoson (Son goku) sounds like a believable codename for a GPU.

Phil did the correct thing by saying he doesn't know, but then Matt comes in and says he absolutely believes Xbox will be the most powerful and immersive console. Like what the heck?

Indeed ;d Dragon Ball is from Japan, Sony also...

Matt Booty also made another mistake. He came out and said the 4x performance refers to a combination of things like GPU, CPU, RAM, SSD, etc. But the next day Phil said it was just for the CPU.

Matt Booty is channeling Yusuf Mehdi, lol

Correct. So clocking GPU core and the memory interface.

It all depends on economics and whether the savings are worth the engineering effort.

As Rylen points out below, it's about balancing clock speed with CU count. The RX 570 uses 75W less than the RX 590 with just 4 fewer CUs and a couple hundred MHz lower clocks.

I believe the decoder has no entry for the cache part of the string, so it is unknown.

HBCC is beneficial regardless of RAM setup. Also I agree with others that the remaining two are likely Lockhart and Anaconda.

Previous console APUs leaked out in a similar manner. Read the DF story on Gonzalo.

Don't you know that the most reliable method of comparing power levels is through Dragon Ball characters ;p

Team HBCC rise again just need to know if they are using HBM...

Seems like the new Navi 10 post by Komachi further points at HBCC and the HBM + DDR4 rumor.

TEAM HBM!!!

And this is just the calm before the storm of the big leaks. We are not ready for when Jason clicks publish lol.

Damn, those Teraplots sound sick!

Dude.. No one is taking his quote as fact lmao. We're talking about the marketing and wondering why they're confident Anaconda is going to be more powerful.

I seriously don't know what you're doing. Some people in here seriously need to stop reading shit that isn't there. Nowhere did I say that lol.

And some people are reading books from Reiner's tweets lol.

In what way would an HBM2 + DDR4 setup be more beneficial than just GDDR6? Power consumption?

HBM2 hype

PS4 got leaked out from a desktop Linux commit? Are you sure you are following the thread closely?

First, RX 570 has a TDP rated at 120W and RX 590 at 175W. As for power consumption, I'd rather compare the RX 570 to the 580. RX 590 is pushing far beyond its optimal clocks in terms of thermals. Or even the RX 480 to the RX 470. You're taking two extreme examples to explain a difference in power consumption.

Compare the RX 5700 to the XT.

The difference in thermals isn't that extreme for comparable clocks.

Some people here are talking about adding 35% more CUs at the same clocks as the RX 5700 XT for the same power consumption.

Sorry, I was referencing TBP. I specifically reference RX 590 because its TBP matches the RX 5700 XT's, suggesting its GPU is pushed similarly hard.

I would really like to see a 5600 with 36 CUs and lower clocks to compare.

Previous codenames were found via commits and/or 3DMark results, as stated in DF article. Yup, pretty sure I can read. Thanks for checking.

Fact is xbots leaked a document and blocked out the teraflops number since it is lower than PS5.

The RX 5700 has 36 CUs and lower clocks. Rated at 180W of TBP.

The RX 5700 XT has 40 CUs and higher clocks. Rated at 225W of TBP.

I'm aware. There is no comparable to RX 570 in terms of TBP, which is what I'm talking about. If you get another ~30W back by clocking 125 MHz lower and disabling 4 CUs like the 570 does relative to the 580, then those clocks make a hell of a lot more sense for a console. The Xbox One X clocks are very close to RX 570 and it has more CUs than any Polaris GPU.

Your assertion that Klob did something like that is laughable, but the fact that you used the term xbot shows me that you won't be around long.

I can only assume this one.

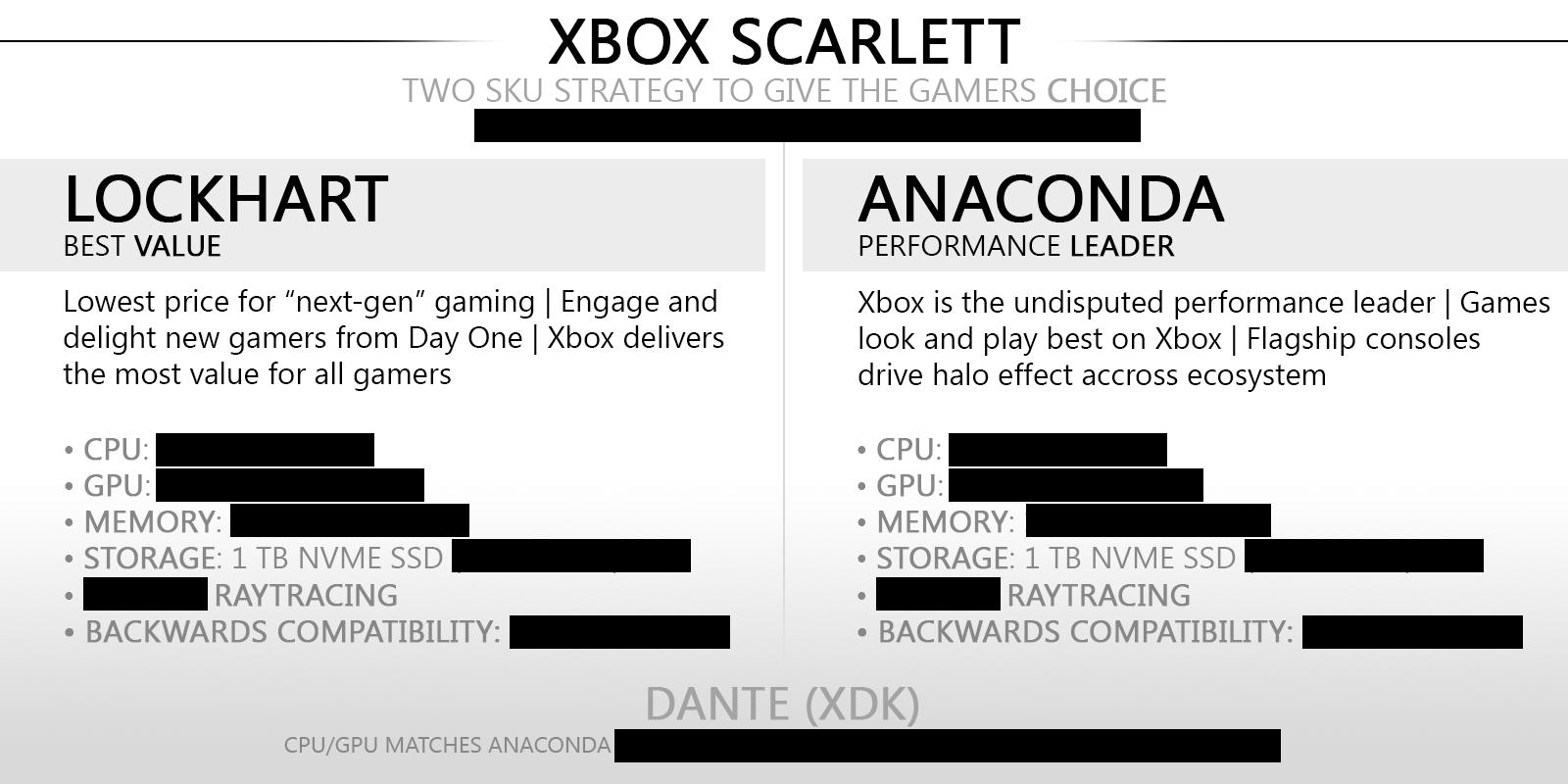

After Cerny's Wired interview, some MS guys produced a document (mid-April) of specs featuring Anaconda and Lockhart but blacked out the teraflop number.

It's interesting that none of the marketing buzzwords seem to match what Microsoft showed at E3, where they used words like "immersive". It's almost like this could have been made up.

Apologies if there was any hate speech used. I'm not sure of the colloquial term for fanboys.

In your case it's called a pony.

Right, but then again, it's about a modulation to get the same power. Not more.

People here are arguing that they'd add 14 (!!!) more CUs (for the record, Xbox One X has 4 more CUs than RX 580) at the same 1800 MHz clock speed as the RX 5700 XT to reach 12.44 TFLOPs. Even at a lower clock speed, like 1500 MHz for 10.3 TFLOPs, you're still adding 14 more CUs, and that doesn't make up for the loss of 300 MHz.
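For anyone wanting to check those TFLOPs figures, here is a rough sketch of the usual peak FP32 math. It assumes 64 shaders per CU and 2 FLOPs per shader per clock (one fused multiply-add), which holds for GCN and RDNA parts; the 54 CU configuration is just the "40 + 14" case being argued about, not a confirmed spec, and `peak_tflops` is a made-up helper name.

```python
# Peak FP32 throughput sketch for a GCN/RDNA-style GPU.
# Assumptions: 64 shaders per CU, 2 FLOPs per shader per clock (FMA).
SHADERS_PER_CU = 64
FLOPS_PER_CLOCK = 2


def peak_tflops(cus: int, clock_mhz: float) -> float:
    """Theoretical peak in TFLOPs; real-world performance will differ."""
    flops = cus * SHADERS_PER_CU * FLOPS_PER_CLOCK * clock_mhz * 1e6
    return flops / 1e12


# 40 CUs is the RX 5700 XT; 54 CUs is the hypothetical 40 + 14 case.
for cus, mhz in [(40, 1800), (54, 1800), (54, 1500)]:
    print(f"{cus} CUs @ {mhz} MHz: {peak_tflops(cus, mhz):.2f} TFLOPs")
```

This is peak math only. It says nothing about power draw, which is the actual point of contention here.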

In summary:

RX 590: 36 CU, 1545 MHz core, 7000 MHz memory, 225W TBP.

RX 570: 32 CU, 1244 MHz core, 6000 MHz memory, 150W TBP.

5700 XT: 40 CU, 1900 MHz core, 14000 MHz memory, 225W TBP.

5600???: 36 CU, 1600 MHz core, 14000 MHz memory, ~160W TBP???

You save about 33% TBP in that scenario. They're talking about adding 35% more CUs. It will all come down to clocks.
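A quick sketch of where those two percentages come from, using only the TBP and CU numbers quoted in this thread. The 54 CU count is the hypothetical "40 + 14" configuration people are discussing, and `pct_change` is just an illustrative helper.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change going from old to new."""
    return (new - old) / old * 100


# RX 590 (225W TBP) down to RX 570 (150W TBP), figures as listed above.
tbp_saving = -pct_change(225, 150)

# RX 5700 XT (40 CUs) up to the hypothetical 54 CU part (40 + 14).
cu_increase = pct_change(40, 54)

print(f"TBP saved: {tbp_saving:.1f}%")
print(f"Extra CUs: {cu_increase:.1f}%")
```

So the power saved by backing off to 570-style clocks and the CU count being proposed are about the same size in percentage terms, which is why it all comes down to clocks.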

Why would they start marketing when the console is 18 months out and they have not even announced a name?

Seems like Komachi backtracked on the previous speculation that Navi 12 LITE and Navi 21 LITE are Arden.

And/or? That's your answer to my specific question?

Furthermore, if you read the DF article, you can see he expresses the exact same skepticism and provides a potential answer, too.

And in that scenario, you're at 10.3 TFLOPs, not 12.44 like some expect.

There's a possibility of having more CUs, but it'll be at lower clocks, to the point that it'll nearly negate the performance gain.

10% higher performance at the same TDP is negation territory? The more pertinent question is whether it justifies the die space. But if you weren't going to clock it that high anyway, the perf gain is higher than 10%.

I am sorry, but you can't equate "Linux commits" with "3DMark results". The two are very different kinds of 'leaks'.

That's a lot of words to say you didn't read the article.

Relax, it is just about the codenames.

Can the passive aggressive platform wars posters please cease and desist?

This isn't the thread for that.

I'm at the point now where I think combining both Next-Gen consoles into one thread was a huge mistake. If folks can't play nice and be civil then maybe the mods should reconsider their position on it.

It becomes increasingly unbearable trying to sift through pages and pages of shitflinging posts just to try and find the few quality or insightful ones.

I would actually personally prefer a pure dedicated tech thread where if you're not there to contribute or learn you get banned.

Welcome. You're a little late to the club, but we can read out the minutes.

I would at least consider it.

Vapor, I would go ahead and do that. It seems like a great idea.

Does anyone know what sort of cooling solution these machines will adopt?

Sony's PlayStation 5 specifications announced during the event include a 2K HDR video-only display, an 8-core Intel i7 processor, 32GB of storage (up to 320GB in the US), 512GB of RAM, HDMI 2.0 out and AV output, dual front-facing speakers, a 5 megapixel rear camera unit, and the ability to play up to 6 simultaneous videos on each TV.

Sony isn't going that far with the Sony Bravia line of 4K HDR TVs. This has resulted in Sony going with one product: the BQ Bravia. While the BQ Bravia was officially announced last month, it wasn't until late November that we got a good look at the 4K HDR features implemented in the Bravia 4K HDR set. This led to some mixed emotions at first: on one hand, it was impressive to see a company with such an established reputation in 4K HDR technology, and on the other hand, to see how they'd actually implement it in their own products (which seemed to be a mix of Sony and Microsoft).

Despite being a bit of a late-conclusion by itself, it certainly made me reflect back on my time at Sony, and especially my time with the Bravia 4K TVs, that this was an important product announcement in that I was pretty much just blown away by the sheer power of