8K, right along with 120fps, is just a buzzword, and a completely meaningless one. They might as well have just said "we will have HDMI 2.1", but saying 8K and 120fps sounds better. Those consoles will struggle to hit 4K, 60fps, and we will see something very similar to this gen with 1080p: both will run at 4K, 30fps for most games and at 4K, 60fps for a handful of games. No matter what, there will always be that tradeoff of how much better a game can look at 30fps as opposed to 60fps. I hope I am wrong on the 4K, 60fps thing, but I doubt it.

I have a guess for one of the areas of differentiation in the PS5 and Xbox Scarlett silicon for next generation that could end up being talked about in Digital Foundry videos and such. Both sides have made mention of 8K and 120 fps support, which is somewhat checking the box for HDMI 2.1 compliance. However, to offer such options at all, and arguably even to handle 4K at 60 fps in demanding games, some type of "checkerboarding" or similar approach is going to be in play.
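To put the resolution and frame-rate figures above in perspective, here is a rough back-of-the-envelope sketch of raw pixel throughput. It only multiplies width, height and frame rate; the function name and the choice of 4K, 30fps as the baseline are mine, and real rendering cost depends on far more than pixel count.

```python
# Raw pixel throughput (pixels per second) for a few output targets.
# This is plain arithmetic, not a model of actual GPU cost.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixels_per_second(resolution: str, fps: int) -> int:
    """Width x height x frames per second for a named resolution."""
    width, height = RESOLUTIONS[resolution]
    return width * height * fps


# Baseline chosen for illustration: native 4K at 30fps.
baseline = pixels_per_second("4K", 30)

for res, fps in [("1080p", 30), ("4K", 30), ("4K", 60), ("8K", 120)]:
    rate = pixels_per_second(res, fps)
    print(f"{res:>5} @ {fps:>3} fps: {rate / 1e6:8.1f} Mpix/s "
          f"({rate / baseline:.2f}x 4K30)")
```

By this crude measure, native 8K at 120fps needs 16 times the pixel rate of 4K at 30fps, which is why some form of reconstruction seems unavoidable for any such mode.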

- PS5 - Digital Foundry and others have praised the approach to checkerboarding on the PS4 Pro, and I suspect that Sony will have some custom silicon (or "secret sauce" if you will) that supports an improved version of it, intended to handle resolutions all the way up to 8K as necessary.

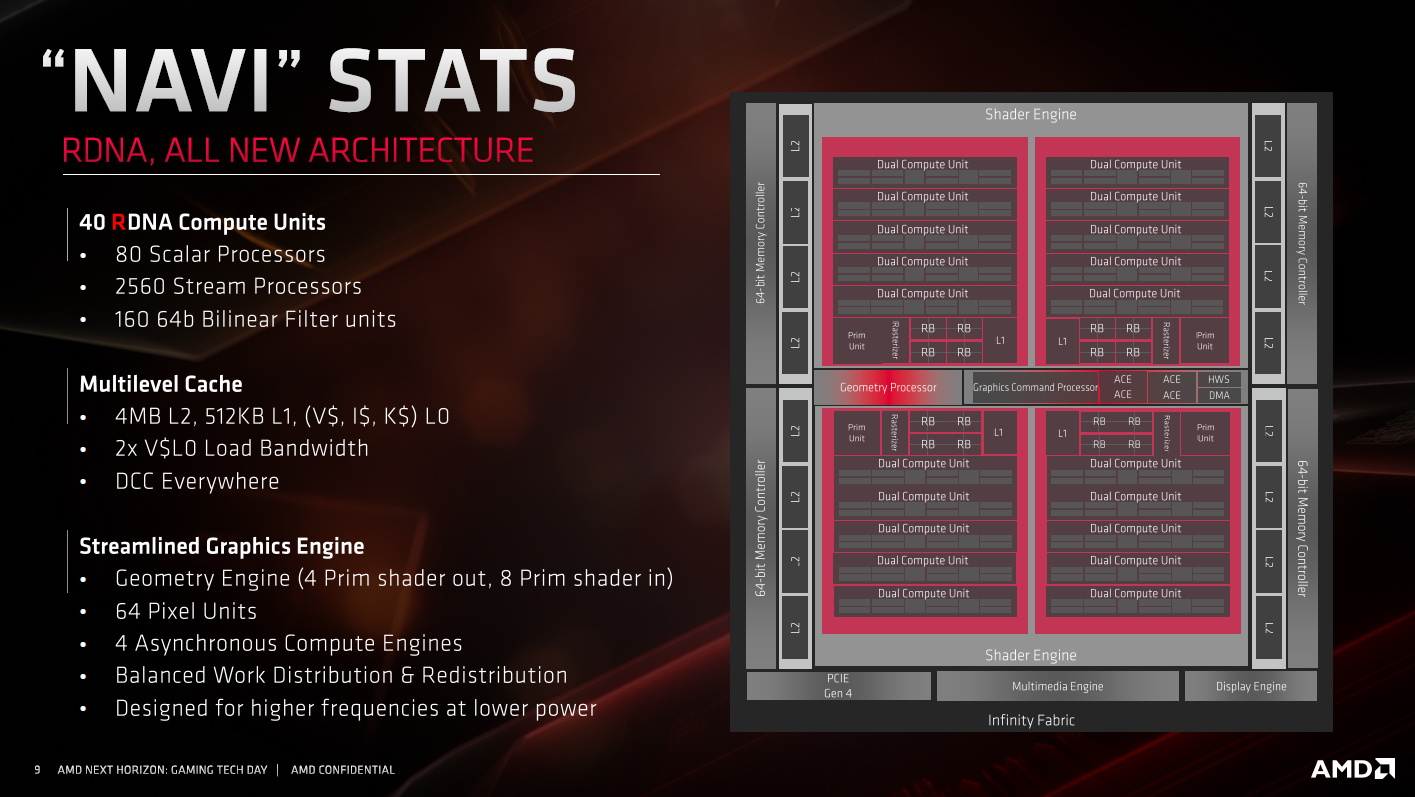

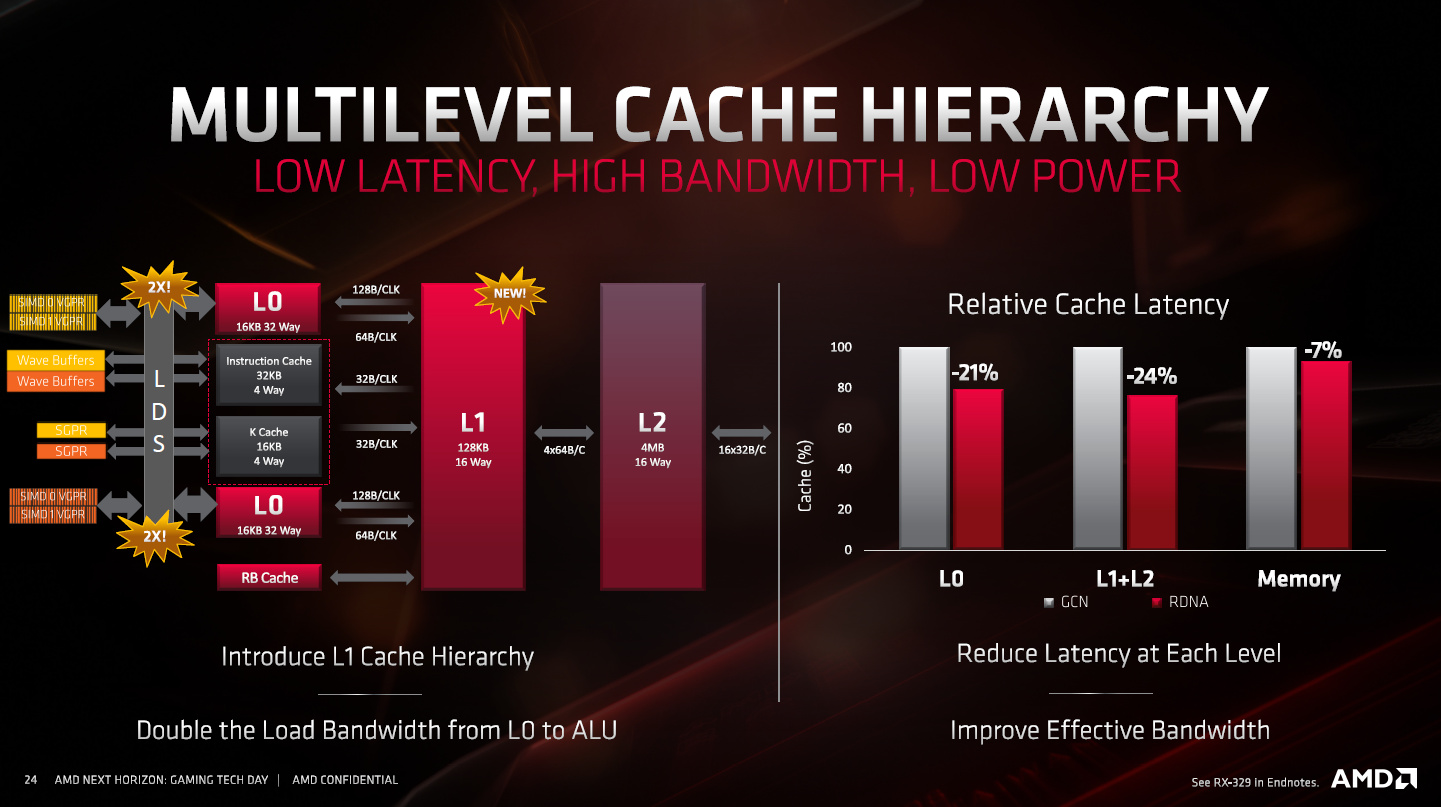

- Xbox Scarlett - Notice that Navi does not include hardware support for Variable Rate Shading (VRS), which was disappointing to many. VRS is definitely another approach to the issue of scaling to different resolutions, like checkerboarding. Microsoft has been working deeply on VRS with DirectX (https://devblogs.microsoft.com/directx/variable-rate-shading-a-scalpel-in-a-world-of-sledgehammers/), and I suspect that their custom silicon will support VRS.

I am not going to predict which hypothetical solution may end up being better, but this does seem to be a possible area of differentiation given no Navi support for VRS.
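For a rough feel of how the two approaches compare on paper, here is a small sketch of shading cost under VRS. It assumes the basic coarse shading rates (1x1, 2x1, 1x2, 2x2), where one pixel shader invocation covers that many pixels; the screen coverage split in the example is entirely hypothetical, and the function name is mine. For comparison, checkerboarding shades roughly half the pixels each frame, so its equivalent figure would be about 0.5.

```python
from typing import Dict

# Pixels covered by a single pixel shader invocation at each coarse rate.
PIXELS_PER_INVOCATION = {"1x1": 1, "2x1": 2, "1x2": 2, "2x2": 4}


def relative_shading_cost(coverage: Dict[str, float]) -> float:
    """Shader invocations relative to shading every pixel at 1x1.

    `coverage` maps a shading rate to the fraction of the screen using it;
    the fractions must sum to 1.
    """
    if abs(sum(coverage.values()) - 1.0) > 1e-9:
        raise ValueError("coverage fractions must sum to 1")
    return sum(frac / PIXELS_PER_INVOCATION[rate]
               for rate, frac in coverage.items())


# Hypothetical frame: half the screen at full rate, the rest shaded coarsely.
example = {"1x1": 0.5, "2x1": 0.2, "2x2": 0.3}
print(f"Relative shading cost: {relative_shading_cost(example):.3f}")
```

This only counts pixel shader invocations. It says nothing about image quality or about the cost of the reconstruction pass that checkerboarding needs, so it is not a verdict on which approach is better.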

I do, however, expect all 30fps games to have two graphics modes: resolution and frame rate. One would target native 4K at 30fps with high settings, and the other checkerboarded 4K at 60fps with medium to high settings. This should become the norm if the hardware for checkerboarding is baked into the base models, so that it becomes part of the dev pipeline as opposed to something that has to be made specifically for one platform (e.g. PS4 Pro).
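A quick sanity check on why that pairing is plausible, as a sketch: if checkerboarding shades about half the pixels each frame (the 0.5 below is that assumption), the two modes end up with the same shaded-pixel rate. The `Mode` class and its field names are just for illustration.

```python
from dataclasses import dataclass


@dataclass
class Mode:
    name: str
    width: int
    height: int
    fps: int
    shaded_fraction: float  # share of pixels shaded per frame (0.5 assumed for checkerboard)

    def shaded_pixels_per_second(self) -> float:
        """Pixels actually shaded each second, ignoring reconstruction cost."""
        return self.width * self.height * self.shaded_fraction * self.fps


resolution_mode = Mode("Resolution (native 4K, 30fps)", 3840, 2160, 30, 1.0)
framerate_mode = Mode("Frame rate (4K CB, 60fps)", 3840, 2160, 60, 0.5)

for mode in (resolution_mode, framerate_mode):
    rate = mode.shaded_pixels_per_second()
    print(f"{mode.name}: {rate / 1e6:.1f} Mpix/s shaded")
```

Both come out identical on pixel shading alone. The 60fps mode still doubles the per-frame CPU, geometry and reconstruction work, which fits with it needing medium to high rather than high settings.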