Next-gen PS5 and next Xbox speculation launch thread - MY ANACONDA DON'T WANT NONE

- Thread starter Phoenix Splash

- Start date


- Status

- Not open for further replies.

Haha, 2 weeks is NOTHING when many of us including myself have been anticipating next-gen info for years!

Can't wait to see Halo on the next gen Xbox, I feel they're going to knock it out of the park with this one!!

Halo 4 already looks great on the X. Can't wait to see Infinite on the Anaconda.

Exactly but MS has in the past tried to focus on showing it running on a console even with PC versions available. I would expect the same for the majority of the titles they show.

Please, please PLLEEEEEASE let one of those devkits be for a PS5 Portable :)

This :( I wonder when streaming will become good enough on the go

OH HERE IT COMES

Mr Colbert...I'm sticking with 10 to 12 TF for PS5! I don't care what you tell me la la la la la la la!!!! Xbox guess?.....6.5 for baby and...........10/12 for daddy, BUT....as we've been talking about it...it's the other parts of the equation that are right up in the air, mem, clock freqs etc etc. So yes...i'm sticking!

Well played, Sir Anthony aka Antoine ;)

This :( I wonder when streaming will become good enough on the go

the google stadia reveal has already shown that its ready for the masses.

the only problem is going to be gaming over LTE networks, but from what i hear Verizon and AT&T networks already provide unlimited data plans and their speeds go up to 40 MBps.

If you are referring to the low speed comment, there are two possibilities I see that are plausible

A) most likely, since the context of that comment was in relation to SSD and load times. By "low speed" he was referring to the SSD speed

B) everyone has the Gonzalo kit with the 1 gig clocks and not the near production kits with the 1.8 gig (or thereabouts) clock

First option seems most likely to me. Or it could be both as well I suppose

I'm just thinking there is no way 19 months from release either console devkit has even very early real hardware. Last time round the consoles changed dramatically tech wise and yet real hardware kits only went out 8-12 months from release.

Something isn't adding up....(Spring releases for both?)

More power for less money, basically. It's smaller and cooler so you can fit more with higher clocks in a cheaper package.

Not really true anymore, and it hasn't been for quite some time now. A new node gives only power consumption benefits for the first several years of its existence. Price per mm^2 is considerably higher, to the point where even the price per transistor is considerably higher. Which is why you only see smaller and lower performing chips being made on a new node during the first couple of years of its production ramp. Which is also why we'll get the next gen consoles only at the end of 2020 despite the fact that the 7nm node they'll use has been available for production since about autumn 2018.

And even just as of the interview a few days ago Cerny said devs would be getting the up to date kits "soon". They could have literally gotten them today for all we know or get them next week. So Anaconda stuff first. Interesting that Ms got the final kit out sooner

I really think they are launching first.

I know they can't wait for this gen to be over for them.

Might explain their final kit going out sooner.

I'm just thinking there is no way 19 months from release either console devkit has even very early real hardware. Last time round the consoles changed dramatically tech wise and yet real hardware kits only went out 8-12 months from release.

Something isn't adding up....(Spring releases for both?)

Spring release is what's likely I think, I've been an advocate of a spring release. I didn't expect both though. Someone mentioned on this thread, when final devkits for PS4 went out it was 14 months till launch, assuming the same you'd be looking at June July August....but that was with a huge switch in architecture. So a spring seems VERY plausible to me. Plot twist, what if Xbox launches spring and PlayStation fall?

On the subject of devkits, just for clarity here is what was said in the Wired article:

" A number of studios have been working with it, though, and Sony recently accelerated its deployment of devkits so that game creators will have the time they need to adjust to its capabilities. "

So I think it's a given that most Sony first party studios had devkits, and probably some very trusted and close studios like Koji Pro and Insomniac, and now most of the others are getting theirs.

" A number of studios have been working with it, though, and Sony recently accelerated its deployment of devkits so that game creators will have the time they need to adjust to its capabilities. "

So I think it's a given that most Sony first party studios had devkits, and probably some very trusted and close studios like Koji Pro and Insomniac, and now most of the others are getting theirs.

I think Sony will want to capitalize on hype for one of the big three (likely TLoU2) launching alongside the console. I also don't think that they would talk about next gen plans in April 2019 if they were launching Fall 2020, and it's been longer since their last hardware release. Plus if they are at a power disadvantage, being out a few months earlier helps mitigate that.

I think TLOU2 will come fall 19

Death Stranding or Ghosts will be their PS5 big ones

Thought I was following you already?!

The majority will be shown on X or PC, especially for everything releasing this year/early next year. They might have a teaser but I wouldn't expect much to be shown running on dev hardware for Scarlett.

E3 2020 it is then :p

Ugh, I feel like I just bought my One X.

On the subject of devkits, just for clarity here is what was said in the Wired article:

" A number of studios have been working with it, though, and Sony recently accelerated its deployment of devkits so that game creators will have the time they need to adjust to its capabilities. "

So I think it's a given that most Sony first party studios had devkits, and probably some very trusted and close studios like Koji Pro and Insomniac, and now most of the others are getting theirs.

I need to read the article again. Isn't that quote in context of the current devkits but they don't have the high speed ones yet? Or am I remembering wrong?

Also just remembered, a lot of studios up until relatively recently with PS4 kits expected 4 gigs of RAM, hell even Guerrilla. So having devs work on lower specced kits with old instructions isn't exactly unheard of for them. I suppose from their point of view it's no harm no foul since they'll have more to work with in the end. Not suddenly less

You dropped me all of a sudden some time ago. I think it was Twitter's fault. They like to mess it up from time to time. I also unfollowed people I did not unfollow by my own actions. The magic of their code base I would suppose.

Also: Memories ;)

I need to read the article again. Isn't that quote in context of the current devkits but they don't have the high speed ones yet? Or am I remembering wrong?

Also just remembered, a lot of studios up until relatively recently with PS4 kits expected 4 gigs of RAM, hell even Guerrilla. So having devs work on lower specced kits with old instructions isn't exactly unheard of for them. I suppose from their point of view it's no harm no foul since they'll have more to work with in the end. Not suddenly less

The devkit that was being used to demo Spider-Man was described as a low speed one. We have no idea if the ones that studios already have or are receiving in this newest wave are low speed or not.

Spring release is what's likely I think, I've been an advocate of a spring release. I didn't expect both though. Someone mentioned on this thread, when final devkits for PS4 went out it was 14 months till launch, assuming the same you'd be looking at June July August....but that was with a huge switch in architecture. So a spring seems VERY plausible to me. Plot twist, what if Xbox launches spring and PlayStation fall?

I've been dead against the possibility of a Spring release but a number of details in recent weeks is slowly changing my mind. Xbox in Spring and PlayStation in Fall would be a huge shock for many. So I say Microsoft should do that if they can.

As I've said before, if the HBM2 + DDR4 rumor turns out to be true, it will be a winning price/performance combination for Sony. No GDDR6 only solution will be competitive on price/performance.

GDDR6 only is better in regards to price/performance. Both right now and also in the distant future.

Sony would only benefit if they reduce other bottlenecks and tradeoffs with that solution. And Sony only saves money if they get a super good deal and get their economy of scale projections right.

As I've said before, if the HBM2 + DDR4 rumor turn out to be true, it will be a winning price/performance combination for Sony. No GDDR6 only solution will be competitive on price/performance.

24gb of gddr6 would have more bandwidth than HBM2, so gddr6 would have better performance overall.

And this rumour Sony got some sweet deal "on the bad chips" sounds like such fandream nonsense, what happens when these "bad chips" are all used up, the price go back up lol, and only Sony has access to this deal cos reasons.

Might as well say Sony have unlocked dark matter power and have secret power over AMD 🤣

Random number generator and preference for MS console

I really think they are launching first.

I know they can't wait for this gen to be over for them.

Might explain their final kit going out sooner.

We've already been told by several insiders they are going for late 2020.

You dropped me all of a sudden some time ago. I think it was Twitter's fault. They like to mess it up from time to time. I also unfollowed people I did not unfollow by my own actions. The magic of their code base I would suppose.

Also: Memories ;)

Yeah, but lets leave those memories behind!

That flow of special sauce. Can't wait.

They are already lost in translation. They only come up if someone has the bad idea to post tweets of certain people here ...

When did you expect next gen to come?

GDDR6 only is better in regards to price/performance. Both right now and also in the distant future.

That is factually incorrect. A 24GB GDDR6 only would be excellent performance, but very expensive, now and in the future. The rumored Sony solution would only use 8GB of expensive HBM2 and use 16GB of low cost DDR4. It's the better price/performance tradeoff.

Didn't the secret sauce meme start with Gies?

That is factually incorrect. A 24GB GDDR6 only would be excellent performance, but very expensive, now and in the future. The rumored Sony solution would only use 8GB of expensive HBM2 and use 16GB of low cost DDR4. It's the better price/performance tradeoff.

but gddr6 will drop in price over time. also at that point you're just creating a pseudo cache tier setup, even though you don't have to sync files, you want your assets etc. to always be in the hbm2 then. it's extra work for devs.

Yup, that's the reference.

So based on hmqq we are "insider" confirmed for hbm on Xbox, yes?

He confirmed HBM for Xbox?

24gb of gddr6 would have more bandwidth than HBM2, so gddr6 would have better performance overall.

It would depend on the bus width used. There are cards out there with 24GB of GDDR6 with about 575GB/s of bandwidth (on a 384-bit bus) - that's not much more than the proposed HBM2+DDR4 setup overall. There'd be other advantages to the GDDR6 approach, but I'm not sure bandwidth would necessarily be a big one, pending bus choice.
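For anyone wanting to sanity check the bus width point, here is a rough sketch of the usual peak bandwidth arithmetic: bus width in bits times the per-pin data rate, divided by 8 to get bytes. The 12 and 14 Gbps per-pin rates are assumptions on my part (the post only gives the ~575GB/s total), and the function name is made up for illustration.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    bus_width_bits: total memory interface width in bits
    data_rate_gbps: per-pin data rate in Gbit/s (assumed, not from the thread)
    """
    return bus_width_bits * data_rate_gbps / 8


# 384-bit bus at an assumed 12 Gbps per pin, close to the ~575GB/s figure above
print(peak_bandwidth_gbs(384, 12))  # 576.0

# The same 384-bit bus with faster (assumed) 14 Gbps chips
print(peak_bandwidth_gbs(384, 14))  # 672.0

# A narrower 256-bit bus at the same assumed 14 Gbps
print(peak_bandwidth_gbs(256, 14))  # 448.0
```

So the same 24GB capacity can land anywhere in a fairly wide bandwidth range depending on bus width and chip speed grade, which is the point being made about "pending bus choice".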

Both of those ideas seem very expensive based on published pricing though.

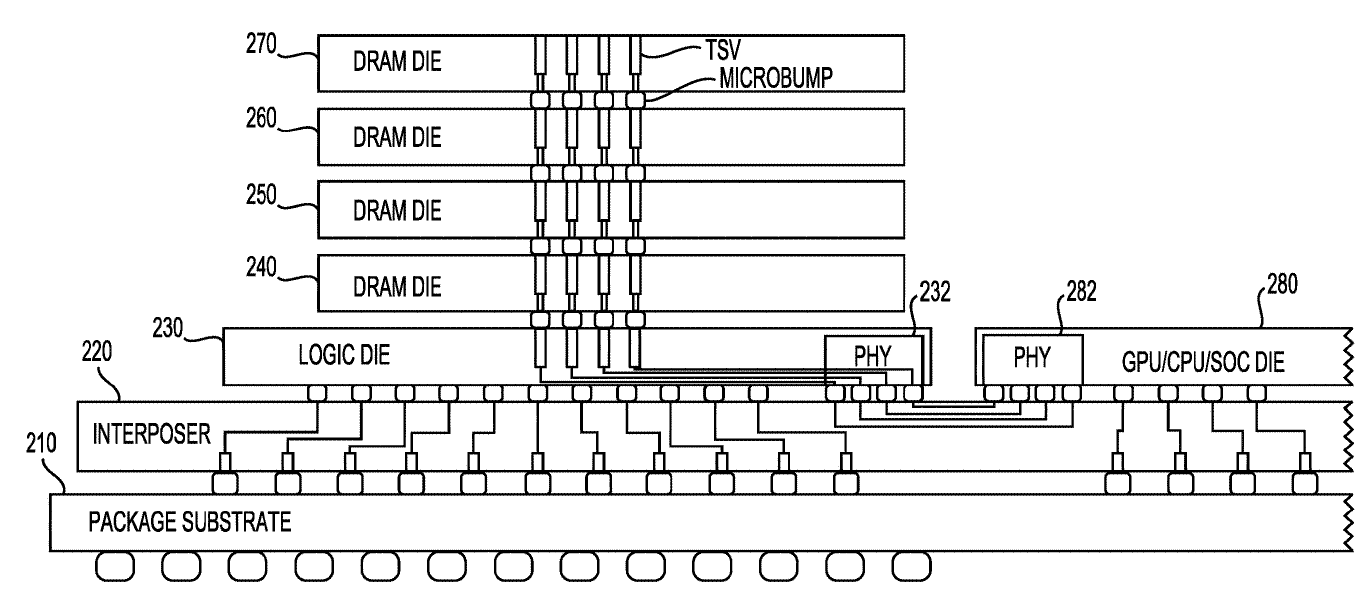

Someone posted that picture of the ms patent with hbm and he said "someone found argalus" or something along those lines. Maybe I misunderstood what was meant? I've been shocked people haven't been talking about it more

Someone posted that picture of the ms patent with hbm and he said "someone found argalus" or something along those lines. Maybe I misunderstood what was meant? I've been shocked people haven't been talking about it more

Find it

Oh eww. Although I assume it started much more innocently than how it is now with console warriors using it to make arguments

Someone posted that picture of the ms patent with hbm and he said "someone found argalus" or something along those lines. Maybe I misunderstood what was meant? I've been shocked people haven't been talking about it more

The following patent is associated with Mark S. Grossman, lead GPU architect of Xbox

http://www.freepatentsonline.com/20180364944.pdf

Lots of good stuff on Variable Rate Shading coming from Mr. Grossman

http://www.freepatentsonline.com/20190005712.pdf

http://www.freepatentsonline.com/10152819.pdf

Texture filtering

http://www.freepatentsonline.com/WO2018151870A1.pdf

Depth-aware reprojection (for HMD)

http://www.freepatentsonline.com/10129523.pdf

Tile-based texture operations

http://www.freepatentsonline.com/8587602.pdf

He is a former AMD engineer, so you can find him on lots of cross-fire multi-GPU patents as well.

A tweet for some context

Nice to see someone found out what's Arden.

Hope Argalus to be decrypted soon ;)

It's not really clear what he's confirming.

That is factually incorrect. A 24GB GDDR6 only would be excellent performance, but very expensive, now and in the future. The rumored Sony solution would only use 8GB of expensive HBM2 and use 16GB of low cost DDR4. It's the better price/performance tradeoff.

No, just no.

24GB GDDR6 is 12*2GB chips on a 384bit GDDR6 memory interface.

8GB HBM2 + 16GB of low cost DDR4 is 2 HBM2 stacks + 8*2GB DDR4 chips on a 256bit DDR4 memory interface.

The second option still requires you to put DRAM chips on the motherboard. The second option still requires you to use a lot of die space for the memory interface and controller. All while you also have a more expensive and complicated package to produce because of HBM.

It's the worst of both worlds combined.

When people talk about the benefit of HBM, they talk about ditching traditional memory for HBM. When you combine it you lose most of them.

The only real advantage for that solution is lower power consumption compared to GDDR6. But even in that case one could argue 16GB of HBM without any DDR4 would be smarter to use.

You really need everything going for you to make that better on a cost/performance basis. And even in that case I remain highly sceptical Sony could do it.
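The chip counts in the post above can be checked with a couple of multiplications. This is only a sketch, assuming 2 GB (16 Gb) per chip, which is the only reading under which the totals come out to 24GB and 16GB. GDDR6 chips have a 32-bit interface each; the per-chip width used for the DDR4 case is simply back-derived from the post's 256bit figure and is not something the thread confirms. The helper name is hypothetical.

```python
def memory_config(chips: int, gb_per_chip: int, bits_per_chip: int) -> tuple[int, int]:
    """Return (total capacity in GB, total bus width in bits) for a set of DRAM chips."""
    return chips * gb_per_chip, chips * bits_per_chip


# GDDR6 only: 12 chips, assumed 2 GB each, 32-bit interface per chip
print(memory_config(12, 2, 32))  # (24, 384)

# DDR4 side of the rumored setup: 8 chips, assumed 2 GB each.
# bits_per_chip is back-derived from the post's 256bit figure (256 // 8).
print(memory_config(8, 2, 256 // 8))  # (16, 256)
```

Either way the board still carries a set of discrete DRAM chips and a wide memory interface, which is the cost argument being made.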


That's the one. Mixed up Arden and Argalus. Completely disregard the Twitter troll, a developer on here and tech minded people on Beyond3D say he often draws together disparate things and makes wrong conclusions. But yeah, unless I'm mistaken, that's a patent pertaining to HBM

Someone posted that picture of the ms patent with hbm and he said "someone found argalus" or something along those lines. Maybe I misunderstood what was meant? I've been shocked people haven't been talking about it more

Not necessarily HBM, might be a successor to the esram stack.

Don't they also include the entire card, and not just the GPU die?

Yes, they also include VRAM. All 8GB of it.

Nice post....... I have given up on talking to Colbert about his charts. Now I just look at them as nothing more than his pure personal opinions and not necessarily objective ones. It's like he has some sort of 10TF limit in his head and adjusts everything else to meet it.

I mean, as you have pointed out, he also references the AdoredTV GPU leak chart (at least now he's acknowledging its existence) and he just somehow ignored the details in that chart. For my calculations I am going to assume that when performance comparisons were made they were referring to the base spec. So no water cooling, just looking at what clocks these GPUs need to be at to hit those specs.

Navi 12 (40CU) is equivalent in power to a Vega 56 (which is an 8-10TF GPU); that's 1570MHz (8TF) or 1960MHz (10TF).

Navi 10 (48CU) is equivalent to Vega 64 +10%; that's a 10-12TF GPU even without adding the 10-15%. So to get Navi 10 to that performance level clocks will be 1630MHz (10TF) to 1960MHz (12TF). Again, still not even accounting for the 10-15% better performance, which if I did would translate to all-round lower clocks.

I am not even going to get into Navi 10 SE.

Yep yep. I think Panello's estimates are getting people to question themselves all over again. We have been through all of this months ago and are now back to discussing sub 10 tflops consoles. lol
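The clock figures in the Navi estimate above follow from the usual peak FP32 formula: CUs x shaders per CU x 2 FLOPs per clock x clock speed. Here is a minimal sketch, assuming Navi keeps the GCN-style 64 shaders per CU and counting a fused multiply-add as 2 FLOPs. Both constants and the function names are assumptions for illustration, not anything confirmed in the thread.

```python
SHADERS_PER_CU = 64          # assumption: GCN-style CU layout carried over to Navi
FLOPS_PER_SHADER_CLOCK = 2   # assumption: one fused multiply-add counted as 2 FLOPs


def tflops(cus: int, clock_mhz: float) -> float:
    """Peak FP32 teraflops for a given CU count and clock in MHz."""
    return cus * SHADERS_PER_CU * FLOPS_PER_SHADER_CLOCK * clock_mhz * 1e6 / 1e12


def required_clock_mhz(cus: int, target_tflops: float) -> float:
    """Clock in MHz needed to reach a target peak teraflop figure."""
    flops_per_clock = cus * SHADERS_PER_CU * FLOPS_PER_SHADER_CLOCK
    return target_tflops * 1e12 / flops_per_clock / 1e6


# The four cases from the post: (CU count, target TF)
for cus, target in [(40, 8), (40, 10), (48, 10), (48, 12)]:
    print(f"{cus} CU at {target} TF needs about {required_clock_mhz(cus, target):.1f} MHz")
```

Under those assumptions the results come out within about 10MHz of the 1570/1960MHz and 1630/1960MHz figures given for the 40CU and 48CU parts, so the post's numbers look like rounded versions of the same formula.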

I actually think it's ok to trust Panello since he is pretty much the only one here with direct knowledge of MS's plans, but he did leave 9 months ago, back when MS was sure of having a more powerful console since everyone assumed Sony would be going with a $399 standard fan cooled system. But things have changed since then. When Brad Sams all of a sudden pointed out that MS was only trying to match Sony's performance targets instead of outright blowing past them, it became obvious that MS had underestimated Sony once again and were playing catch up. Panello wouldn't know this because he was already long gone. I would say Brad's sources are much more current than Panello's.

It's very curious that right around the time Brad said that bit about MS matching Sony's performance, we had begun to discuss the SSD rumors and started to take them seriously. I think MS caught wind of it around the same time and made the assumption that Sony wasn't going to go with a $399 console anymore and has since shifted focus to making sure they get the most tflops out of a $499 console. Which time and time again, seems to point to the 14 tflops number.

Anyway, with Cerny confirming devkits are going out soon and MS insiders doing the same, we will find out soon enough.

I am going with

PS5 - 14 tflops

Anaconda - 14-16 tflops.

No Lockhart.

also at that point you're just creating a pseudo cache tier setup, even though you don't have to sync files, you want your assets etc. to always be in the hbm2 then. it's extra work for devs.

If they're using HBCC, which they should, then it's not a pseudo cache tiering setup, it is a cache tiering setup and shouldn't be messed with by the devs. The hardware must make caching decisions in that kind of setup, not the software.

There's other problems with that design, but that ain't one.

You can't really build faster memory than a heavily interleaved SRAM design. I could see more SRAM being used as a L4 cache for the CPU and/or GPU, but not much truly significant there. SRAM is almost incomprehensibly expensive compared to anything else.

Honest question. What possible reason could they have for using esram?

Honest question. What possible reason could they have for using esram?

To make up for the high latency from the GDDR6 or something like that is what I read.

In terms of upscaling techniques, are we expecting checkerboard rendering to still be the go-to standard for next gen, or are we aware of newer techniques that might surpass the results it can give? We know the PS4 Pro has dedicated hardware for CB rendering, I have to imagine the PS5 would have something like that as well.

That's the one. Mixed up Arden and Argalus. Completely disregard the Twitter troll, a developer on here and tech minded people on Beyond3D say he often draws together disparate things and makes wrong conclusions. But yeah, unless I'm mistaken, that's a patent pertaining to HBM

Has anybody really looked into it? The first patent (that incorporates HBM) is about memory that has a logic layer and can perform simple operations without using CPU or GPU resources. It is not about HBM, it is about a logic layer that allows independent background operations performed by the memory itself (any DRAM) and not by a CPU or GPU. I am not sure if this is something useful for a gaming console, quite honestly.
