So John Linneman has a new Doom video about the latest patch. Both the video and the patch are awesome. The only thing that's not awesome about the patch is one item in particular, which John expertly describes: upscaling the frame to the display.

Watch the video first.

With apologies to John for ripping off the example he worked hard to create for his video, the effect I'm looking at in particular is this:

John mentions linear interpolation, and I was thinking about a way to resize the image from the native rendered 16:10 to the intended 4:3 with fewer artifacts for pixel art.

Take the 960x600 frame buffer from the Switch port, for example, which is rendered at 16:10 but meant to be shown at 4:3. We need to get that onto either a 1280x720 or a 1920x1080 display. If we do that with some sort of bilinear or bicubic upscale, we're going to get blur. My preferred method would be a straight integer supersample (a plain pixel multiply) followed by a bicubic downsample.

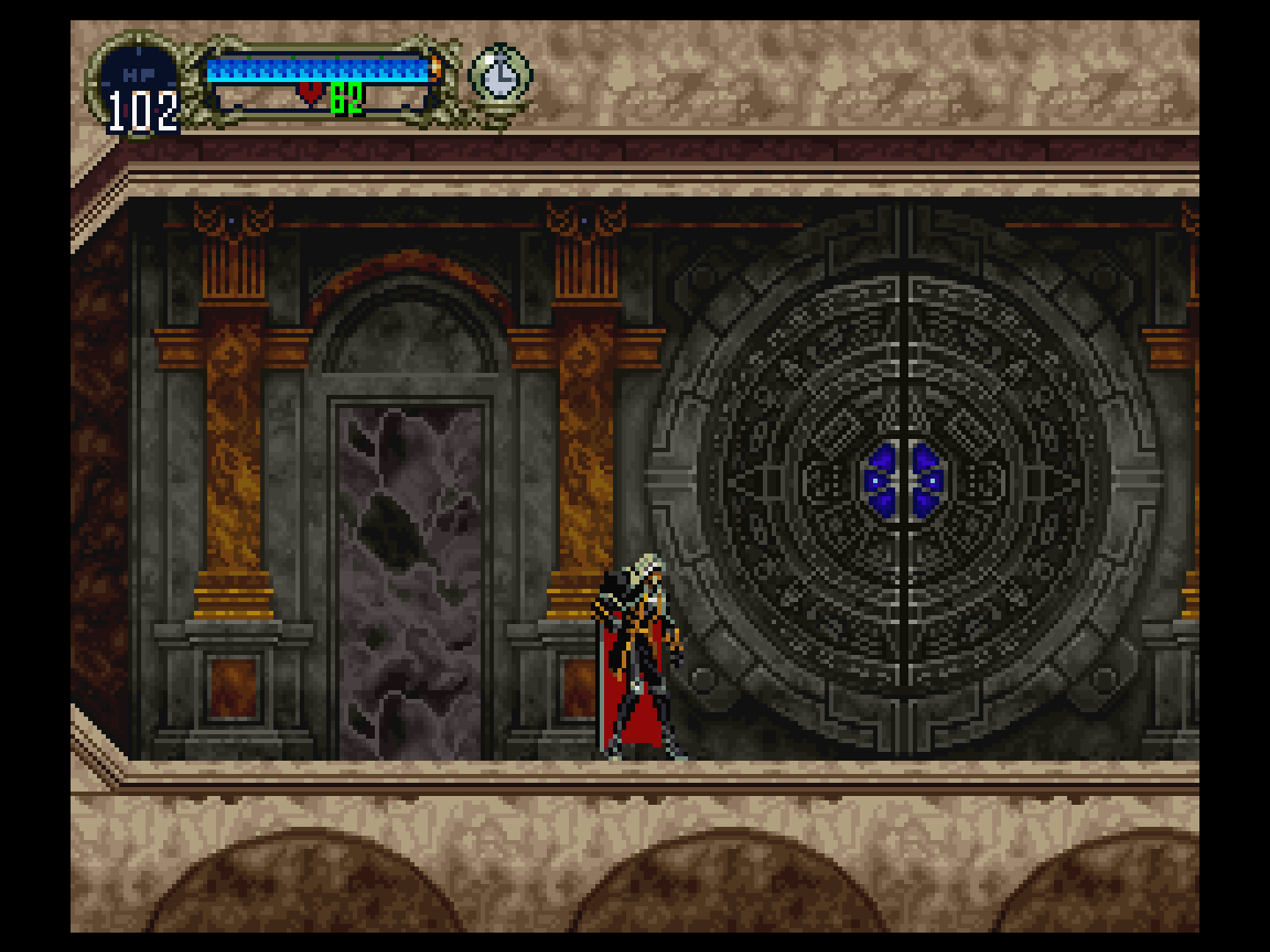

Now, I don't have a 960x600 frame buffer, so I'm going to use a 320x200 image as an example.

This is the original image:

This is a bicubic upscale from 320x200 to 1440x1080:

It's the right aspect ratio but blurry as all fuck. What they should do instead is a straight pixel multiply on each axis first. For a 320x200 to 1440x1080 conversion, that means a 45x upscale on the horizontal to 14,400 pixels and a 54x upscale on the vertical to 10,800 pixels, leaving us with a 14,400 x 10,800 pixel image. At that point we can bicubic back down to 1440x1080, an even 10x reduction on both axes, and get this:

*mic drop*
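For anyone who wants to try this at home, here is a minimal pure-Python sketch of the two steps, written without an image library so every operation is visible. It's an illustration under assumptions, not what any Doom port actually does: images are nested lists of grayscale values, the helper names are hypothetical, and the bicubic step uses the Keys cubic kernel with a = -0.5 (a common choice), stretched by the downsample factor so each output pixel averages over the supersampled pixels it covers. The 45x/54x multiply and 10x downsample mirror the 320x200 to 1440x1080 factors above.

```python
import math

def integer_multiply(img, mx, my):
    """Nearest-neighbour upscale: repeat each pixel mx times across and each row my times down."""
    return [[p for p in row for _ in range(mx)] for row in img for _ in range(my)]

def cubic(x, a=-0.5):
    """Keys cubic convolution kernel (a = -0.5 assumed); zero outside |x| < 2."""
    x = abs(x)
    if x < 1:
        return (a + 2) * x**3 - (a + 3) * x**2 + 1
    if x < 2:
        return a * x**3 - 5 * a * x**2 + 8 * a * x - 4 * a
    return 0.0

def downsample_1d(line, f):
    """Shrink a 1D line by integer factor f using a cubic kernel stretched by f."""
    n = len(line)
    out = []
    for j in range(n // f):
        center = (j + 0.5) * f - 0.5  # output pixel centre in input coordinates
        total = wsum = 0.0
        for i in range(math.floor(center - 2 * f), math.ceil(center + 2 * f) + 1):
            w = cubic((i - center) / f)
            total += w * line[min(max(i, 0), n - 1)]  # clamp to the edge pixels
            wsum += w
        out.append(total / wsum)  # normalise so flat areas stay flat
    return out

def bicubic_downsample(img, f):
    """Separable 2D downsample: rows first, then columns."""
    rows = [downsample_1d(row, f) for row in img]
    cols = [downsample_1d(list(col), f) for col in zip(*rows)]
    return [list(r) for r in zip(*cols)]

def supersample_then_downsample(img, mx, my, f):
    """Integer pixel multiply per axis, then one shared bicubic downsample."""
    return bicubic_downsample(integer_multiply(img, mx, my), f)

# Hypothetical 2x5 test image: a dark column next to a light one.
src = [[0, 255] for _ in range(5)]
out = supersample_then_downsample(src, 45, 54, 10)
print(len(out[0]), len(out))  # 9 27
print([round(v, 1) for v in out[13]])
```

The dark/light edge lands in the middle of output column 4 (127.5), while columns 0 and 8 keep the pure source colors. Bicubic can ring slightly below 0 or above 255 right next to an edge, so a real 8-bit path would clamp the result.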

So what have we done here? Let's take a look at the bottom right corner of the left wall.

Well, instead of smearing the entire image with a bicubic interpolation or living with jagged nearest neighbour, we've interpolated only at the old, lower-resolution pixel boundaries. Horizontally, each source pixel covers 4.5 output pixels, so a nearest neighbour upscale has to choose between 4 and 5 pixels for each old pixel. We've instead got 4 for each and then a 5th pixel on the boundary, interpolated between the two colors. That's maximum sharpness: rather than smearing the difference between two colors over 9 pixels, we get an interpolated stairstep.
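The boundary behaviour is easiest to see in one dimension. This sketch swaps in a plain box (area-average) filter for the bicubic downsample, an assumption made so the numbers come out exact; a real bicubic kernel is a little wider, so its blend spreads slightly past the single boundary pixel. `nearest_1d` and `box_downsample_1d` are hypothetical helper names.

```python
def nearest_1d(line, out_len):
    """Nearest-neighbour resize, using integer maths to avoid float rounding at pixel edges."""
    return [line[(2 * j + 1) * len(line) // (2 * out_len)] for j in range(out_len)]

def box_downsample_1d(line, f):
    """Average each run of f pixels (box filter standing in for bicubic)."""
    return [sum(line[j * f:(j + 1) * f]) / f for j in range(len(line) // f)]

src = [0, 255, 0, 255]                    # hypothetical row of alternating source pixels
nn = nearest_1d(src, 18)                  # 4.5x straight to the target width
ss = [p for p in src for _ in range(45)]  # 45x pixel multiply (as for 320 -> 14,400)
box = box_downsample_1d(ss, 10)           # 10x down to the same 18-pixel width

print(nn)
# [0, 0, 0, 0, 255, 255, 255, 255, 255, 0, 0, 0, 0, 255, 255, 255, 255, 255]
print(box)
# [0.0, 0.0, 0.0, 0.0, 127.5, 255.0, 255.0, 255.0, 255.0, 0.0, 0.0, 0.0, 0.0, 127.5, 255.0, 255.0, 255.0, 255.0]
```

Nearest neighbour alternates runs of 4 and 5 pixels. The supersampled version gives every source pixel 4 pure pixels, plus one blended pixel wherever a source edge falls mid-pixel (every other boundary at 4.5x): the interpolated stairstep.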

Going from 960x600 works the same way, just with a 15x upscale across the horizontal and an 18x upscale on the vertical to reach the same 14,400 x 10,800 intermediate, then a bicubic back down to 1440x1080. At 4 bytes per pixel that intermediate is about 0.58GiB per frame, or 34.8GiB/sec of memory bandwidth at 60fps. Going to 720p is less involved than it first looks. The same 14,400 x 10,800 intermediate divides evenly by 15 on both axes, so you can bicubic it down to 960x720 by an integer factor. Better still, the lowest common multiple of 600 and 720 is only 3,600, so a 5x horizontal and 6x vertical multiply to 4,800 x 3,600 followed by a 5x bicubic downsample also lands exactly on 960x720, at about 0.064GiB per frame (roughly 3.9GiB/sec at 60fps).
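The factor arithmetic generalises: reduce each axis's overall scale to a fraction, and the smallest downsample factor both axes can share is the least common multiple of the two denominators. Here's a small sketch of that calculation. `plan` and `frame_gib` are hypothetical helpers, and the 4 bytes per pixel for the intermediate is an assumption.

```python
from fractions import Fraction
from math import lcm  # Python 3.9+

def plan(src, dst):
    """Return (mult_x, mult_y, down): integer multiply per axis, then one shared integer downsample."""
    sx = Fraction(dst[0], src[0])               # e.g. 1440/320 = 9/2
    sy = Fraction(dst[1], src[1])               # e.g. 1080/200 = 27/5
    down = lcm(sx.denominator, sy.denominator)  # smallest factor making both multiplies integers
    return int(sx * down), int(sy * down), down

def frame_gib(src, dst, bytes_per_pixel=4):
    """Size of the supersampled intermediate in GiB (bytes_per_pixel is an assumption)."""
    mx, my, _ = plan(src, dst)
    return src[0] * mx * src[1] * my * bytes_per_pixel / 2**30

cases = [((320, 200), (1440, 1080)), ((960, 600), (1440, 1080)), ((960, 600), (960, 720))]
for src, dst in cases:
    mx, my, down = plan(src, dst)
    gib = frame_gib(src, dst)
    print(src, "->", dst, f"x{mx} / x{my}, down {down}, {gib:.3f} GiB/frame, {gib * 60:.1f} GiB/s @ 60fps")
```

For the three cases it gives a 45x/54x multiply with a 10x downsample, 15x/18x with 10x, and 5x/6x with 5x. Sharing one downsample factor keeps the filter identical on both axes. Picking factors per axis instead (the LCM of source and target on each axis) can be much smaller: for 320x200 to 1440x1080 that's a 9x/27x multiply to 2880 x 5400 with a 2x/5x downsample.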

Anyway, those are my thoughts on how to implement pixel art upscaling from integer-multiplied native presentations.

Love the videos, John. Keep making them.
