Intel (Xe) DG1 spotted with 96 Execution Units

The Xe architecture will span many segments, from entry-level mobile gaming to high-performance computing. DG1 is believed to be Intel's first discrete graphics for gamers, and rumors suggest Intel will also launch a DG2 variant. Neither name is a product name, though, so we still do not know what the new series will be called.

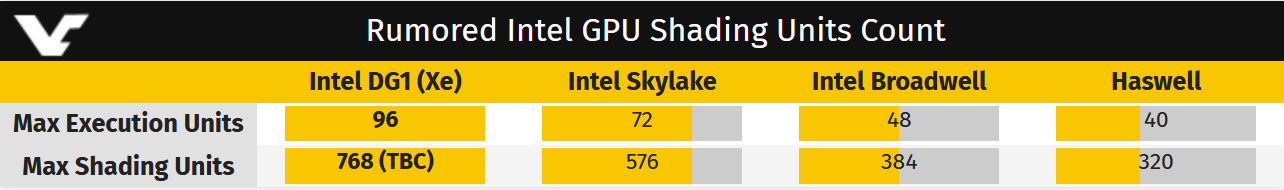

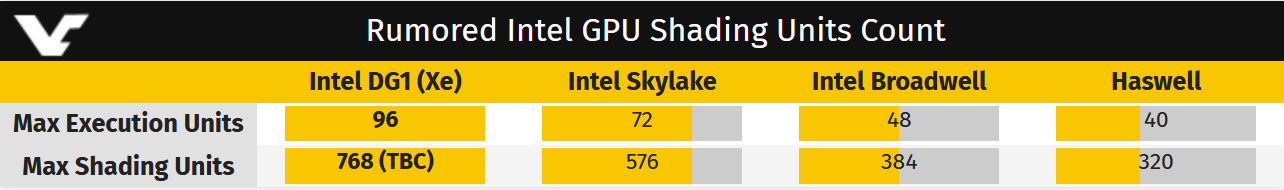

The latest leak from the EEC points to 96 Execution Units. If Intel were to keep the same design principle for DG1 as for its mobile HD/UHD graphics, with 8 shading units per EU, we should expect 96 × 8 = 768 shading units in total. It is pointless to speculate whether this means 768 or 1536 (96 × 16) shading units, but DG1 appears to target entry-level graphics.
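The shading-unit estimate above is a single multiplication of EU count by per-EU width. The short sketch below spells it out for both widths mentioned. The 8-wide figure follows the HD/UHD comparison and the 16-wide figure is the alternative scenario; neither is confirmed for DG1, and the helper name `shading_units` is purely illustrative.

```python
# Minimal sketch: estimated DG1 shading-unit count for the two per-EU
# widths discussed in the article. Both widths are assumptions, not
# confirmed DG1 specifications.

def shading_units(execution_units: int, alus_per_eu: int) -> int:
    """Hypothetical helper: total shading units = EUs x ALUs per EU."""
    return execution_units * alus_per_eu

DG1_EUS = 96  # EU count from the leak

for alus in (8, 16):  # 8 = HD/UHD-style EU, 16 = alternative scenario
    print(f"{DG1_EUS} EUs x {alus} = {shading_units(DG1_EUS, alus)} shading units")
```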