Intel 10th Gen Core (Comet Lake-S) Review Roundup

- Thread starter kostacurtas

So yeah, if you are playing at 1440p or above there is pretty much no reason to pick Intel over AMD even now. If you are looking for a CPU upgrade you might as well wait for Ryzen 4000 and its 15-20% IPC gain, which should come with more cores, less heat and a much lower TDP.

This is exactly where I'm at.

I'm seriously hoping the 4900x will be 16 cores / 32 threads, though I think there's only like a 10% chance of that happening. Intel doesn't have 16 cores, so there's no reason AMD will charge less.

Puget Systems' test still puts the 10900K behind AMD for the "live playback" score in Premiere rather than rendering, for what that's worth.

But Intel is still best for most of Adobe's apps, like Photoshop/Lightroom and others.

I do generally agree with you that, in cases where real-time performance matters, rather than rendering performance, Intel is generally still on top.

Ehh, I don't know about that when they lock you out of using ECC memory unless you're buying Xeons.

Meanwhile my Ryzen build has ECC memory and has been completely reliable. Intel really need to do something about that. ECC memory should really have replaced standard memory a long time ago.

Look at the lows though.

The 7700K is at 59 FPS in Total War while the 10900K is at 94 FPS.

In The Division 2 the 7700K is at 101 FPS while the 10900K is at 127 FPS.

People need to stop caring about average frame rates. It's the lows that you actually feel on a modern VRR display.

It's the same story when you compare AMD against Intel. Intel is often leading by 15–20 FPS.

But for some reason most of these sites are all testing the same games, and pick ones which easily run at over 100 FPS on average with most CPUs.
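To put the lows quoted in this post in relative terms, here is a small sketch that computes the uplift from the two pairs of numbers above. The helper name `uplift_percent` is made up for illustration and does not come from any review.

```python
def uplift_percent(old_fps: float, new_fps: float) -> float:
    """Relative gain of new_fps over old_fps, in percent."""
    if old_fps <= 0:
        raise ValueError("old_fps must be positive")
    return (new_fps - old_fps) / old_fps * 100.0


# Low-FPS figures quoted in the post above (7700K vs 10900K).
lows = {
    "Total War": (59, 94),
    "The Division 2": (101, 127),
}

for game, (fps_7700k, fps_10900k) in lows.items():
    gain = uplift_percent(fps_7700k, fps_10900k)
    print(f"{game}: {fps_7700k} -> {fps_10900k} FPS ({gain:.0f}% higher lows)")
```

That works out to roughly 59% higher lows in Total War and 26% in The Division 2.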

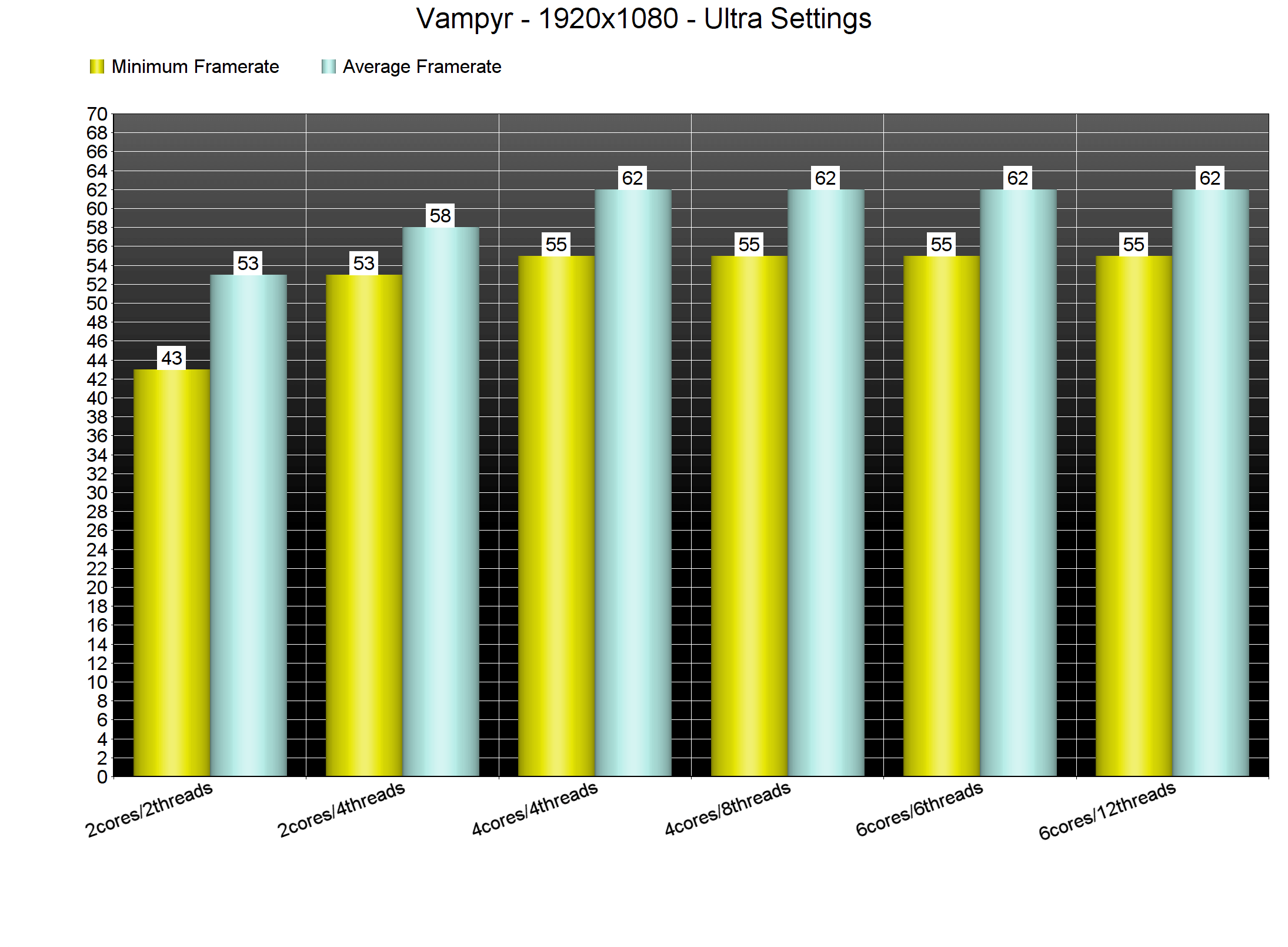

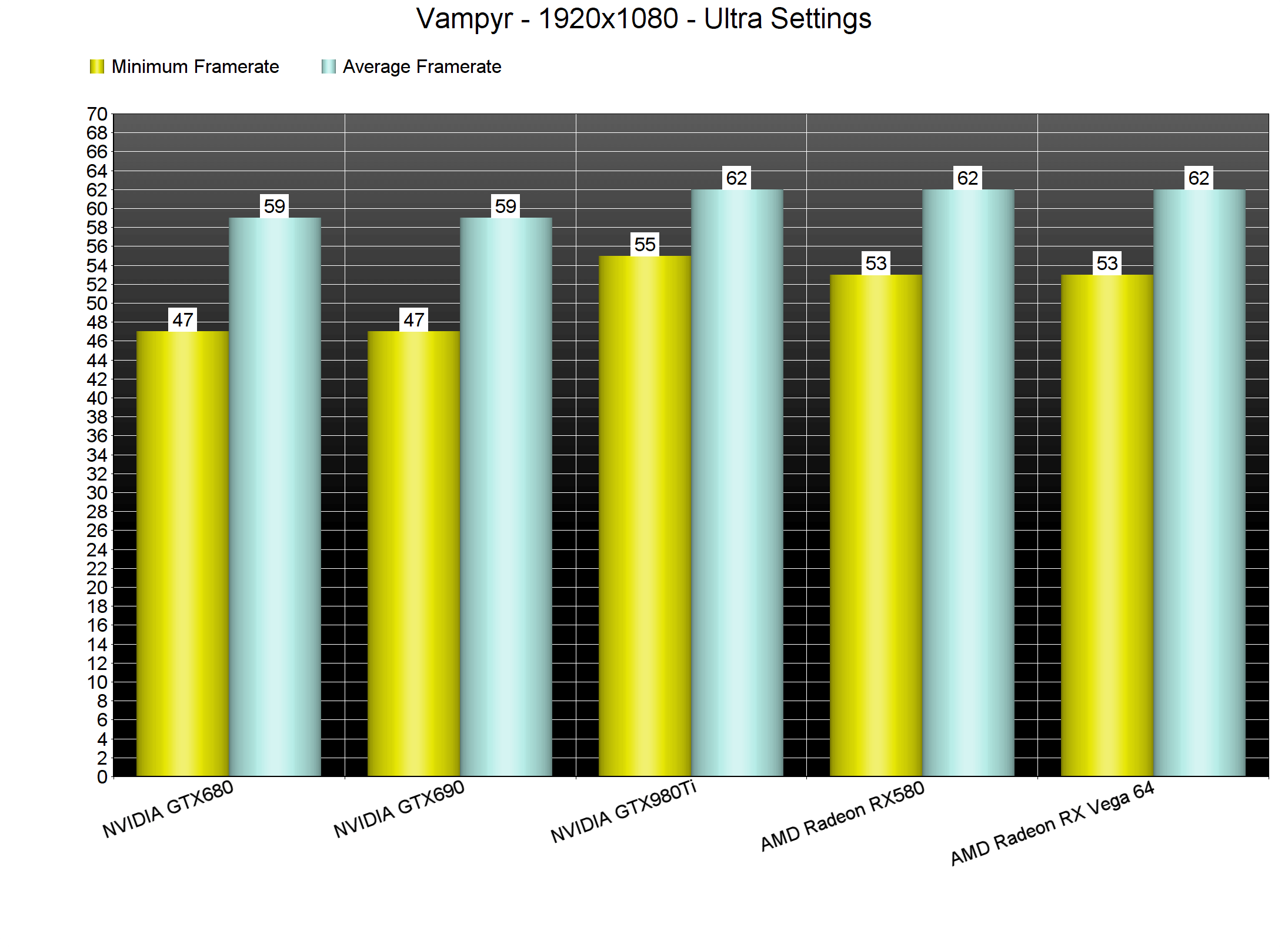

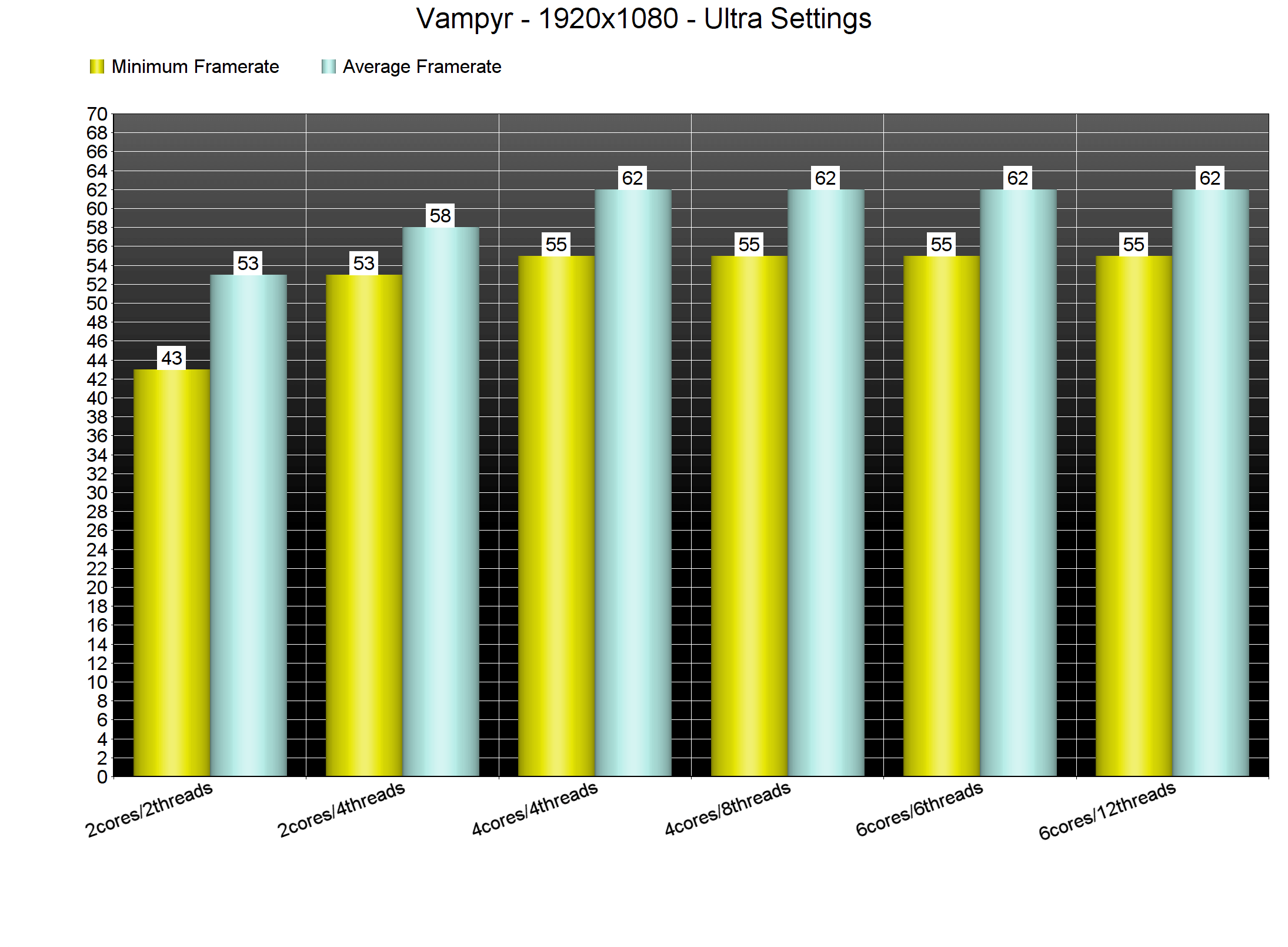

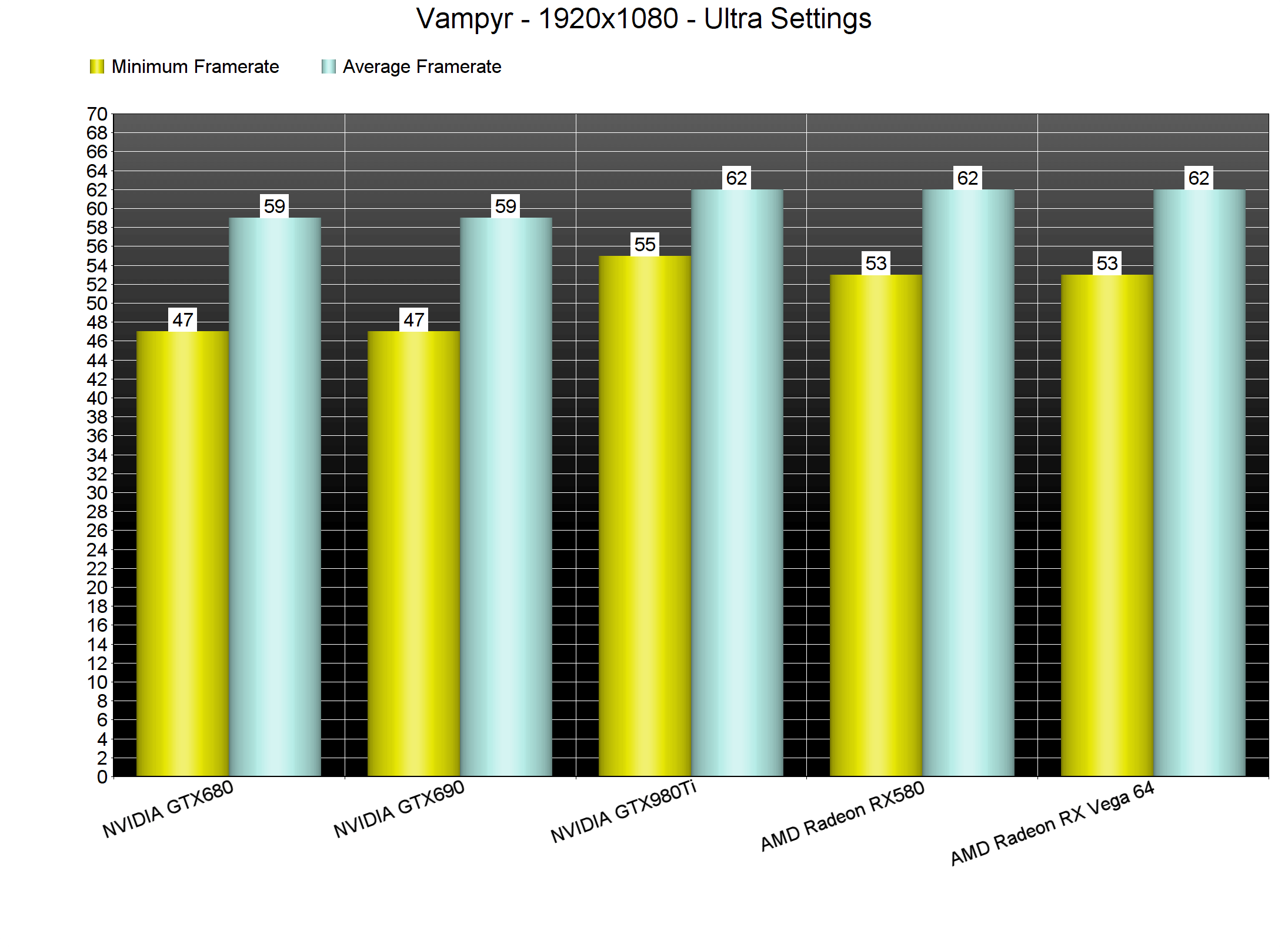

I'd love to see what the difference was in less-optimized games. Vampyr doesn't even hit 60 FPS on my 1700X. How does a game like that compare on a 3950X vs a 10900K? Those are the kind of results that are interesting to me.

Unless I cared about things like video export times, batch-processing images, or cpu-based 3D rendering, I'd probably buy Intel rather than AMD right now.

Actual editing tasks seem to perform best on Intel, as does gaming.

Right,

500 dollar CPU to play at 1080p Medium?

At 1440p with any serious settings the difference is minimal.

For people hunting that last frame to get to 240Hz, sure, Intel is the way to go.

For pretty much every other gamer AMD is still the bang for buck king, and Intel still hasn't brought anything to really look at.

What games does Intel hit 90+ where AMD fails?

It's not a matter of hitting 90 and calling it a day, it's about getting as high as possible. Why go AMD when Intel is still faster?

You say that almost like AMD isn't making it to the GPU-bound limit in pretty much every title.

So you should wait for Ryzen 4000. If the leaks are to be believed, these 10th gen Intel chips are about to be outdated very soon, and I wouldn't pay such a high premium only to have the best for a few months.

Right, that's why I said for Cyberpunk. I won't be playing anything that will require an upgrade before then. If Zen 3 isn't out by then I'll just get the 10600k and plan to upgrade to the Rocket Lake i9 at some point down the road.

That's why I specifically said that I would like to see tests using games which don't run well on most CPUs.

Vampyr drops to 45 FPS and worse in many areas on my 1700X. The Evil Within 2 is closer to 30. Dishonored 2 doesn't work properly with VRR displays and must stay at 60 or 120 to remain smooth—which is often on the CPU rather than the GPU.

Those are the kind of games I want a faster CPU for, not 400 vs 450 FPS in CSGO or whatever.

So the reason they're not talking about those chips is that nothing was shipped to reviewers. Intel mostly just sent out the top chip, or the top 2 chips, for reviews right now. Others will presumably come later, or maybe not, who knows. I 100% expect Digital Foundry to source the F-series chips out of their own interest, even if they're not given them for reviews.

The 10400f is the one I think will wind up being a bit of a value sweet spot for hexacores in this perf range. In Australia it's about 30-40 dollars cheaper than the R5 3600, and it should in theory have ballpark similar gaming and productivity performance. Probably slightly ahead in gaming, slightly behind in productivity.

It's the one thing Intel has been piss poor at in Australia, pricing. The 10900 is nearly $1k, which is fucking ridiculous and arrogantly priced considering the barely noticeable difference compared to a much cheaper 3900X or something from AMD. Plus a much higher L3 cache with far better TDP makes AMD still perfectly valid here in Australia.

I do not look forward to Nvidia card prices.

The AUD went down between the Zen 2 launch and this new Intel launch, so I expect new Zen 3 stuff to also be pricier than we'd like. And yeah, the 3000 series will bleed me dry!

Ehhh, considering current top 3000 series prices I doubt we'll see AMD going higher than Intel. Even when our dollar was just as bad it was still cheap in comparison.

Sorry to drop in with a question without doing a *ton* of research, but what's the best mid-tier CPU right now for gaming (approx. $250-$300 MSRP)?

If waiting for Ryzen 4000 isn't an option, then the Ryzen 3700X.

Thank you!

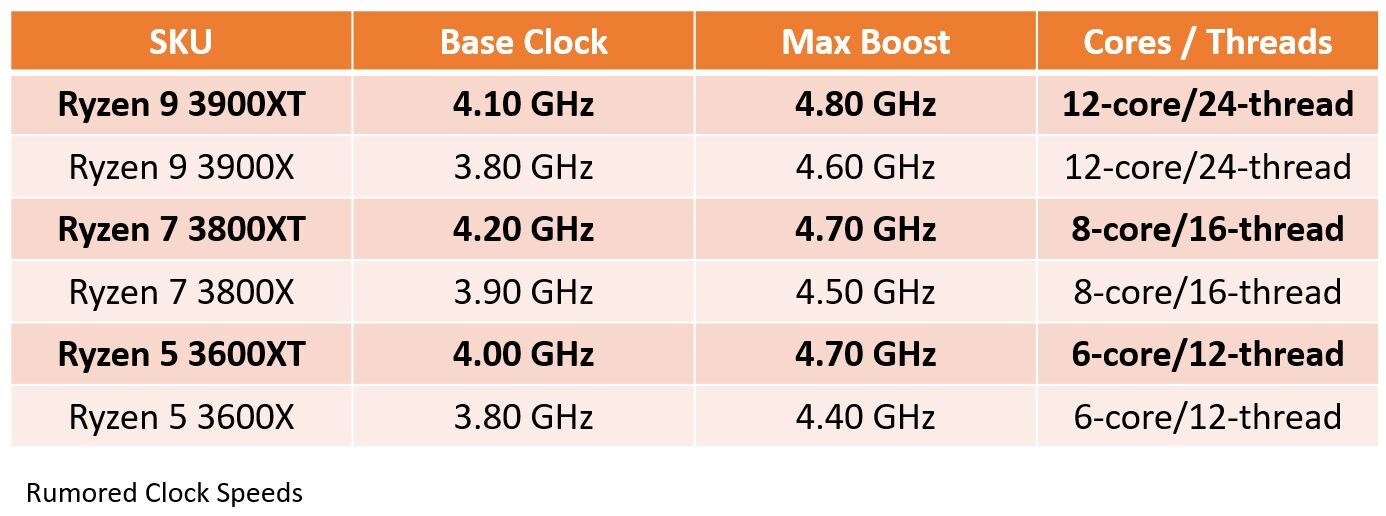

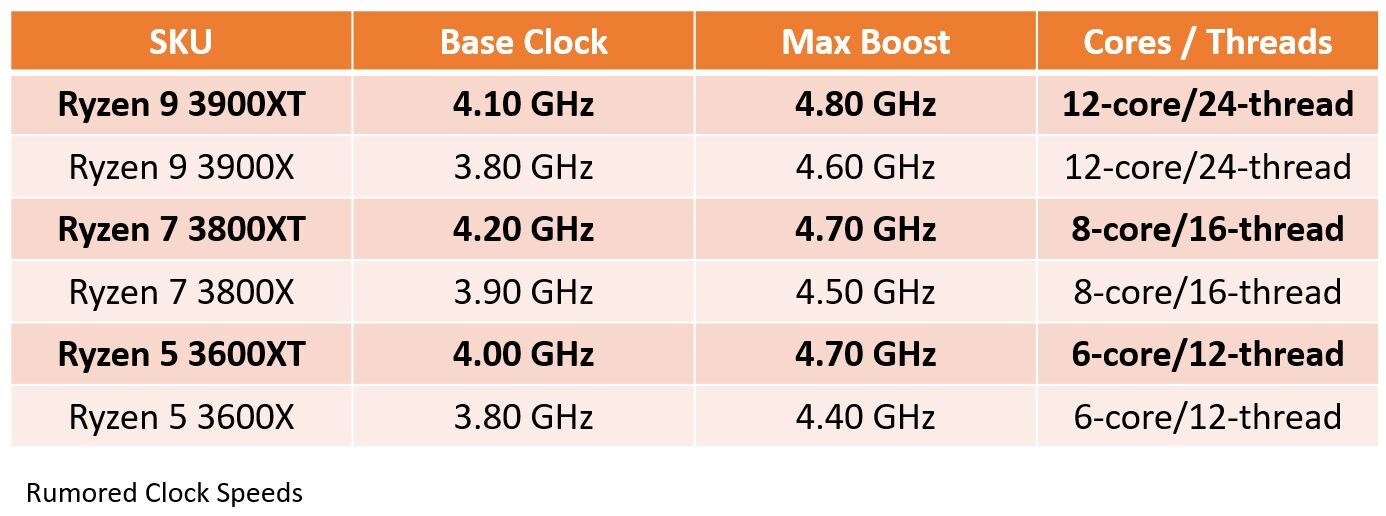

It looks like we are getting a complete Zen2 refresh in early July, courtesy of an optimization of 7nm production and a bit of CPU optimization work.

wccftech.com

AMD Ryzen 9 3900XT (Ryzen 9 3900X Replacement)

AMD Ryzen 7 3800XT (Ryzen 7 3800X Replacement)

AMD Ryzen 5 3600XT (Ryzen 5 3600X Replacement)

A few hundred MHz of speed boost, at the original Zen2 prices. The launch Zen2 lineup will get price drops.

Since the source of this is only Wccftech for now, we could wait on new thread creation.

AMD Ryzen 9 3900 XT, Ryzen 7 3800 XT, Ryzen 5 3600 XT 'Matisse Refresh' Desktop CPUs Confirmed - Same Core Config, Higher Clocks & Price Cuts For Existing Models

AMD is preparing three new Ryzen CPUs as part of its Matisse Refresh family that include Ryzen 9 3900XT, Ryzen 7 3800XT & Ryzen 5 3600XT.

Good additional curiosity piece from GN. Going into how turbo boost works in these CPUs, BIOS effects on benching etc.

www.youtube.com

I like how GN always goes ham with coverage during review periods. They do the reviews and then openly break it all down so viewers can better understand what affects perceived performance.

Intel i9-10900K "High" Power Consumption Explained: TVB, Turbo 3.0, & Tau

We're revisiting the Intel's Turbo Boost & Power discussion for the 10900K, 10600K, et al., plus talk on if boards impact performance, Thermal Velocity Boost...

If you're playing at 1440p there's no reason to pick anything over a quad core based on this chart alone! I don't think we'll see many people recommending the 3300x or i3 10100 for anyone playing at this res, though.

So frustrating. I'm still on a 4770k and wanting to upgrade this year, but it doesn't feel like the most opportune time to upgrade. I only use my desktop PC for gaming; my dev work is all done on a MacBook Pro, so extra performance from Ryzen on that side wouldn't really make much of a difference to me unless I attempted to shift my dev setup (for work and personal) over to Linux, which I'd prefer not to, especially for work.

There was a rumor about it happening a while ago but it was shot down by people in the know. What is actually happening is an 8-core CCX and unification of the L3 cache to reduce latency. That, along with an average IPC increase of 10-15%, could have a significant impact on performance, the latency reduction more so than the minor IPC gains. I see the core count increase as more likely with Zen 4 and the shrink.

What this graph does not show at all is all the frametime inconsistencies and lack of smoothness that result from using a 4 core CPU in modern AAA games. What does that mean? You can have stutter even at 60fps or over, with your frametimes being all over the place. Techdeals actually did a video showing that. Look at the rolling frametime graph, but keep in mind it does not fully show in the video since not every single frame is recorded. I have a 4770k and can verify that frametime inconsistency is indeed a thing and very much annoying.
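As a rough illustration of why an average can hide stutter, the sketch below builds two made-up frame-time traces with the same average FPS and compares their slowest 1% of frames. The traces are invented for illustration, and the `one_percent_low` helper uses one common definition (the average FPS of the slowest 1% of frames); review outlets may compute their lows differently.

```python
def average_fps(frame_times_ms):
    """Average FPS over a capture: frame count divided by total seconds."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def one_percent_low(frame_times_ms):
    """Average FPS of the slowest 1% of frames (one common definition)."""
    worst_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst_first) // 100)
    slowest = worst_first[:count]
    return 1000.0 * len(slowest) / sum(slowest)


# Hypothetical traces: 1000 frames each, both totalling 16000 ms.
smooth = [16.0] * 1000                   # every frame takes 16 ms
stutter = [15.5] * 990 + [65.5] * 10     # mostly faster, plus 10 long hitches

for name, trace in (("smooth", smooth), ("stutter", stutter)):
    print(f"{name}: avg {average_fps(trace):.1f} FPS, "
          f"1% low {one_percent_low(trace):.1f} FPS")
```

Both traces average 62.5 FPS, but the 1% low falls from 62.5 FPS to about 15.3 FPS in the trace with hitches, which is the kind of difference an average-only chart hides.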

Yeah agree there. And if they bumped up the 4900x that doesn't leave the 4950x with anywhere to go in terms of core count, much easier for them to leave the core counts alone at the top end. And I don't think they're going to lower the price of the 4950x when Intel can't compete in terms of core counts.

UNLESS they want to bring out a 10 core/20 thread part to directly compete with Intel, which would mean it'd also be possible to get a 20 core/40 thread beast 4950x CPU, and the 4900x takes the 16c/32t position. Don't think it's happening though. :D

I built my new PC around the i9 9900k. It doesn't seem too logical to want to upgrade to the new i9 10900k, but two more cores and higher clocks are pretty interesting.

The graph doesn't show frametimes.

A 3rd gen Intel i5 can get Assassin's Creed Odyssey to 60fps, but the frame times will be utter shit and the experience will feel much, much worse even if the bench says you are averaging above 60fps.

It's not that interesting actually.

If you are a 9900K owner, upgrading to a 10900K for gaming is pointless. You are currently GPU bottlenecked, and upgrading the CPU will leave you still GPU bottlenecked.

If you really want to do an upgrade wait for Ampere and games that make the 9900K sweat.

Why would someone use an unoptimized game for benchmarking?

I could get Vampyr to 1080p60 with a GTX 1070 and an old i5, so you NOT being able to hit 60 is likely a bug and a sign of bad optimization.

The titles that are used for benchmarking are usually titles that give consistent and expected results across many SKUs.

A game that's random isn't worth using as a benchmarking tool, because your CPU, which logically should get better performance, might just not work well for the fuck of it.

Vampyr is a UE4 title that clearly has issues, because we've seen UE4 fly on relatively mediocre hardware.

The game literally just didn't work well whenever it wanted to.

CPU/GPU/RAM were never maxed out, but you would find the game running suboptimally on one system, then running better on a weaker system.

Not really useful as a benchmark tool.

Is a 6 core 12 thread CPU going to be enough for the next 5 years? I have a delidded 8700k at 4.9 on all cores, and I play all my games at 4k if possible, or 1800p, with most settings on high or ultra.

The PS5 and Xbox Series X both have 8-core CPUs, so logically next gen will start optimizing towards 8 cores I think. For the next 1-2 years 6 cores should be enough, but 5 years, maybe not.

I'm not saying that Vampyr specifically should be a standard benchmark, I'm saying that I personally care more about which CPU can run the challenging/unoptimized games best rather than which CPU hits 200 FPS vs 205 FPS in the games which do scale to many cores.

And to be clear, the game would run above 60 in some locations, but I only care about the low points in performance. Those are what matters for smooth gameplay.

The problem with unoptimized games, and more specifically Vampyr since you mentioned it, is that it is impossible to say that unless you see a benchmark and then ask the outlet to send you that exact computer.

Because even in like-for-like tests the game will have different results.

Basically what you are asking for is a deck of cards that when shuffled will have the most royal flushes.

You are asking to somehow engineer probability without cheating.

An unoptimized game won't even tell you which CPU does the best in the worst case, because that will change depending on the mood the game is in on a given day.

It's why outlets settle on certain titles: because they work. I could tell you right now what CPU will get you a smooth 70+ fps.

A 2500K at 4.5GHz. It'll never dip into the 40s.

The most challenging game for CPUs right now is probably Ashes of the Singularity, and they test that all the time.

If you want something that is just unoptimized, so it seemingly decides it only wants to use Core_3 at 50% during section A on Wednesdays when it's snowing in Norfolk until you reboot the game, well, that's a very odd thing to demand.

You also need to take threads into account.

How many threads are reserved for the OS and its related functions this time around, 1 or 2?

A CPU with 12 threads can last quite a while against, let's say, 14-thread consoles. Especially if you OC that 12-thread CPU a bit.

5 years is still optimistic I think, but I think we are in a 3-4 year spread instead of 1-2 years.

I don't think that's how these games work at all, or what he's demanding.

You can still reliably test them, they just perform worse than they should if they were optimized.

Err he mentioned Vampyr and The Evil Within 2.

Both are games that throw near random performance results depending on hardware. A CPU that clearly should do better does worse than a CPU in a lower tier.

Pretty much every other major title will scale in a reasonable way with few outliers here and there.

Kingdom Come Deliverance is known as a CPU cruncher for no apparent reason, but you can see it still scaling logically as you go from one CPU to the next which is why it is also used as a benchmark.

Maybe you are right and I don't understand what they are asking for, but if CPU killers as benchmarks are what they want, Ashes of the Singularity, Kingdom Come Deliverance and ACO are all CPU-heavy titles that are constantly used as benchmark titles.

What titles specifically are they looking for then?

Because unoptimized games can at times lock themselves to, say, 3 cores, and regardless of how powerful your CPU is or how many threads you have, the game simply acts like shit. Launch it on another CPU that's supposedly weaker and it chooses to run better and spread the load in a reasonable fashion.

Vampyr specifically almost never actually stresses the CPU; it simply decides sometimes that it doesn't want to. How would you use that title as a benchmark when the performance it gets on one machine can't really be replicated on another using the exact same parts?

You seem to be under the impression that performance in Vampyr is completely random. I'm not sure why.

It's just heavily CPU-bottlenecked in many locations. You find a spot which shows that bottleneck the most (lowest FPS) and test CPUs there. It should produce consistent results.

Will it show the difference between a 10600K, 10700K, and 10900K? Maybe not.

But I'm sure it would show a difference between different generations of Ryzen, and between Intel/AMD.

As I said though, it does not specifically have to be Vampyr.

My point is that when I am buying a CPU I only care which one provides the best performance at all times, not just the games which happen to run well for that specific architecture.

Intel CPUs seem to be far more consistent in the performance that they deliver across games, while some games seem to be "good for Ryzen" or "bad for Ryzen" based on a number of factors.

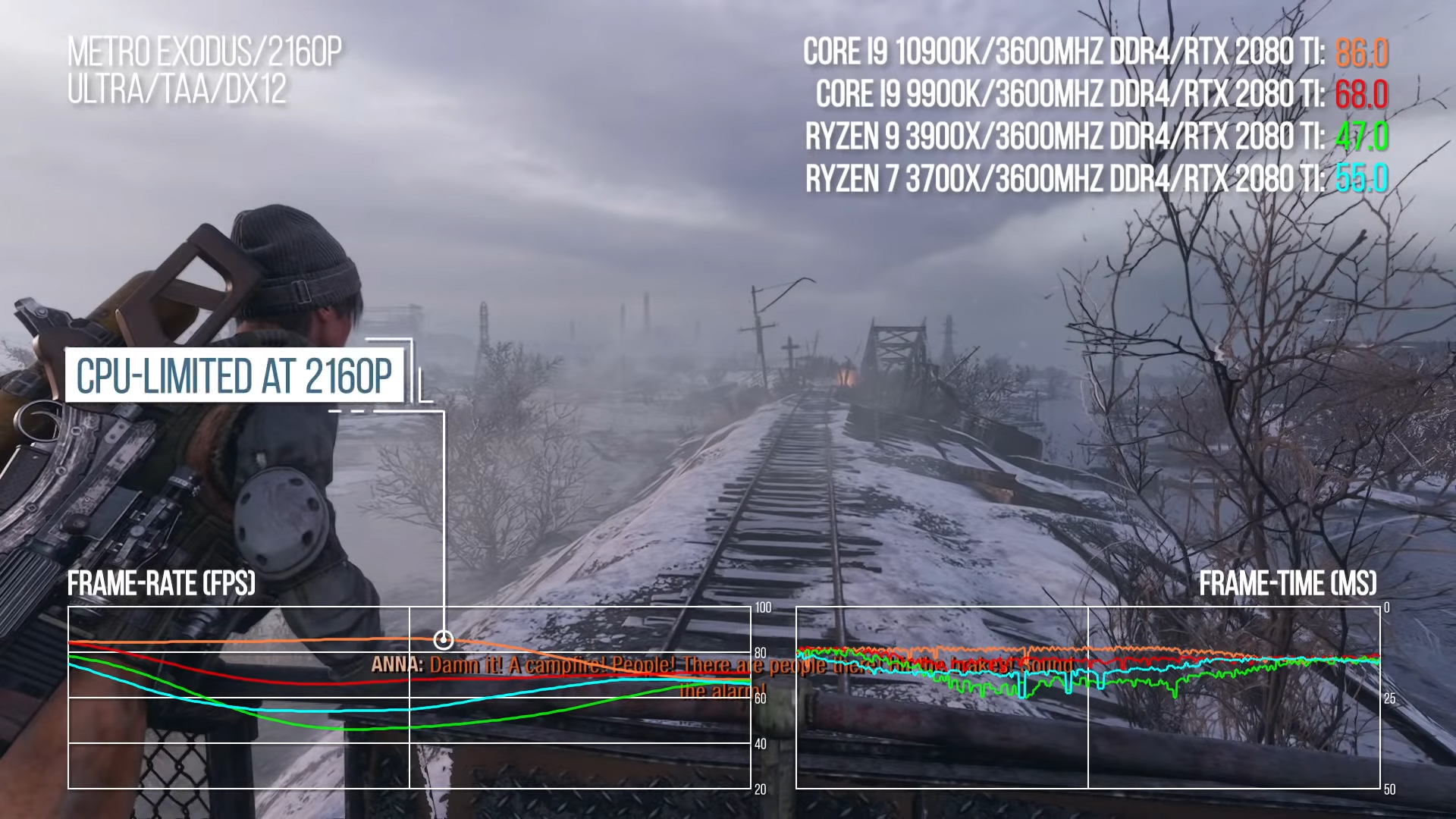

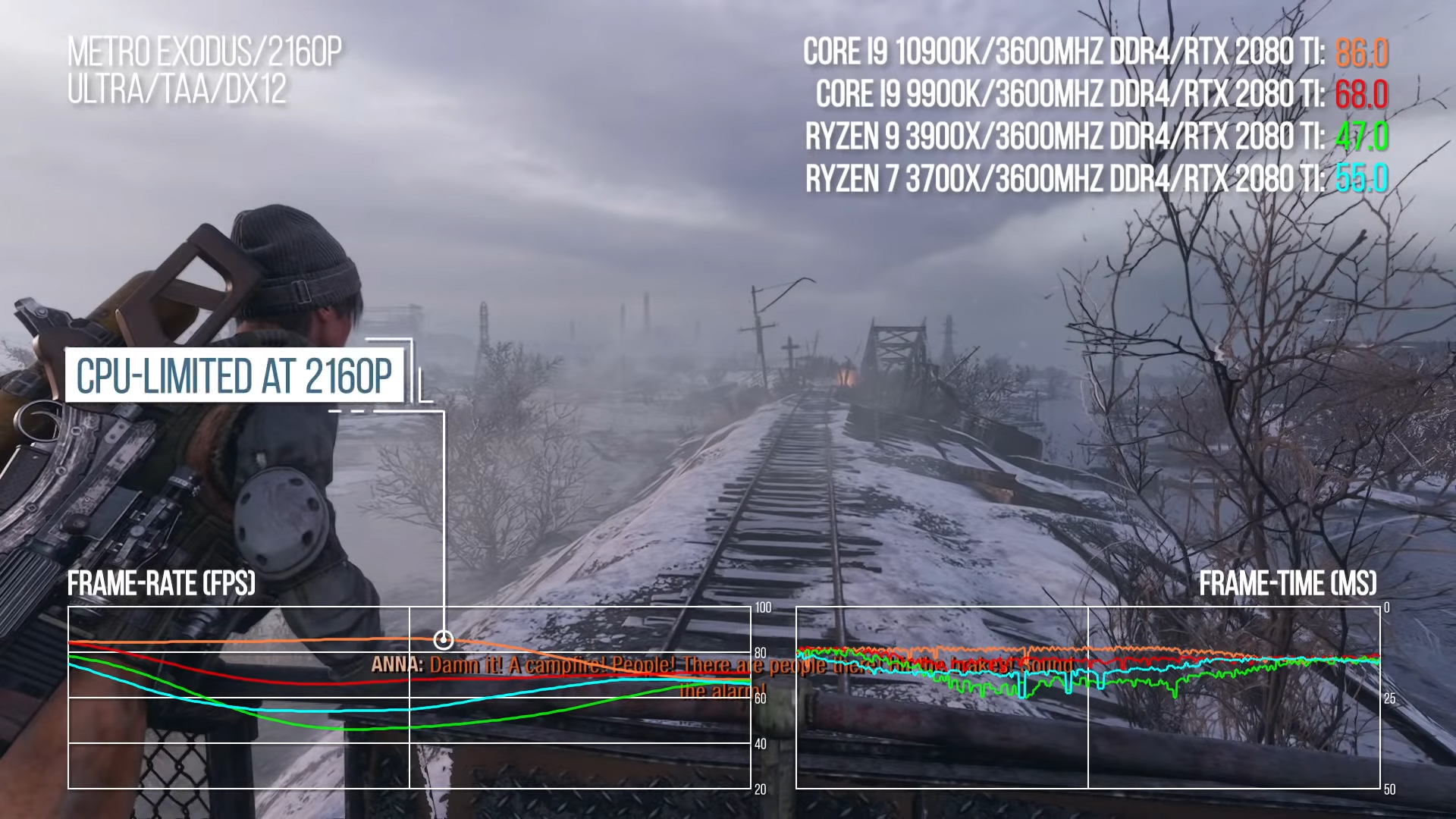

Just look at these numbers from Digital Foundry's Metro Exodus testing:

At 2160p, which is mostly GPU-limited, they still ran into some situations that were heavily CPU-bottlenecked.

That's 86 FPS on the 10900K vs 47 FPS on the 3900X.

I don't care if the 3900X is the same as the 10900K on average - I care about avoiding those low points in performance as much as possible.

Digital Foundry's tests also showed what I have described in other posts: that the 2x 4-core CCX setup of the 3700X can actually perform better than the 4x 3-core CCX design of the 3900X, even though 12 cores perform better than 8 in tasks which scale well and do not require real-time performance, such as rendering/encoding.

That's why I would only consider a 3700X (2x4) or a 3950X (4x4) if I was buying a Ryzen CPU today, not a 3900X (4x3) - and why I think that Zen 3's move to 8-core CCXes is going to be a bigger deal than some people expect.

Yes, thank you. If Zen3 has a sneaky gaming model of 8C/16T single CCX, it could be the ideal 9th gen gaming CPU.

For now, my 3700X is my workstation, 9900KS is my gaming PC, they're just good at different things. And gaming at 1440p/144, the 9900KS OC vs OC has an absurd advantage. What reviews seem to get wrong is only trying to do all ultra with max AA on 1440p/4k settings, when that's kind of a waste in many cases. I dislike most AA results as too soft, and I ease back shadows as well unless it's a really slow paced game. These two changes alone give me better performance at 1440p than 1080 ultra in virtually all cases.

This also leads people to believe that mid-range GPUs can't do 1440p, and yes OF COURSE a 1660 or RX580 or 5500XT will suffer at 1440 maxed out settings. But judiciously dial back things to a nice medium, and watch performance become quite nice in many, even most cases. And because there is very little impact from texture settings as long as you have enough VRAM on the card, it can still look incredibly good even with some settings dialed back.

Thanks Black_Stride, needed a bit of sense talked into me. I've got the 2080 Ti currently, and will probably be tempted to switch over to the Ampere cards when those drop.

Hard to Justify: Intel Core i7-10700K CPU Review & Benchmarks vs. 3900X, 3700X, 10600K

Here's our review and benchmarks of the Intel i7-10700K CPU, with a focus on the 10700K vs. AMD Ryzen 7 3900X, 3700X, and Intel i5-10600K. Tests for thermals...

Despite this latest GN review I'm still tempted to switch to the i7 10700K (or KF), I need more single core power than the 10600K can offer (and don't want to bother with precise OC to reach the 10900K with a 10600K).

I'm currently using a more than 5 year old 5930K @4.3 GHz. It used to be at 4.6 GHz, but over the years it became unstable and I had to lower it a few times.

My motherboard is having issues with USB, and rebooting the PC takes 2 to 3 minutes initializing the USB controllers (even with everything unplugged).

I've checked some single-core benchmarks of the 10700K on AnandTech that I could run on my 5930K, and it's twice as powerful in single-core tasks. My CPU is really old now. Even an 8700K is faster... (even in all-core benchmarks)

I can still wait a bit, but in some games that rely too much on single-core power I'm definitely CPU-limited. I've been playing a lot of iRacing for a few weeks, and races with a high car count overload my CPU cores (not 100% on all of them, but 100% on single-threaded tasks like rendering or physics).

I'm running a 3440x1440 100hz ultrawide and I look at those 1440p benchmark averages and I just don't know if a time will come when it's worth upgrading my 1700. I've been super tempted by the 3600 at just £140. But it doesn't really seem like there'd be any difference in performance.

Might as well just save for an upgrade to my Titan X Pascal.

Wow the 10400 gets hit pretty bad with 2666 RAM. Figured it'd be the most sensible option but it's solidly in the pack of Ryzen CPUs unless you splurge for a Z series board w/decent RAM

Why this if they have zen3 in September?

You know what's more surprising to me?

The fact that Intel can still outperform the competition despite being stuck on the old node. What happens when they finally get their 10 or 7nm out? This is also the same situation for the GPU side.

Rumor is the Zen2 refresh will have 200-300 MHz higher base and boost clocks.

www.techpowerup.com

Possible 3rd Gen AMD Ryzen "Matisse Refresh" XT SKU Clock Speeds Surface

Last week, we brought you reports of AMD inching closer to launch its 3rd generation Ryzen "Matisse Refresh" processor lineup to ward off the 10th gen Intel Core "Comet Lake" threat, by giving the "Zen 2" chips possible clock speed-bumps to shore up performance. The lineup included the Ryzen 9...

The thing with all of these reviews is that, to glean any real difference between CPUs in the last like 4 years in gaming you need to run benchmarks at 1080p, and often with medium/low settings. In that way you shift the workload from being gpu bound to primarily CPU bound to expose the maximum difference in performance, and even then the difference between something a few years old like the 7700k with a moderate OC and a stock 10700k is relatively small.

Really though, what PC gamer that is interested in and has the money to buy a newer high end Intel or AMD chip is going to be playing at 1080p and medium settings?

When you increase the resolution even to just 1440p, the % differences are so small that it's not even worth upgrading if you are still on something like a 6700k and have even a moderate OC on it. At 4K it seems pretty much pointless, you might get 1-2 fps increase with a 10900k from your 6700k. 1% lows often improve, but even that isn't at a level to justify $500+ on a new platform (CPU & Mobo).

Seems like we are running use case scenarios from 2013 to analyze and review new chips in 2020. 1080p medium/low is like early current console gen territory. I get that it's the only way to show differences on the benchmark charts, but it just doesn't seem realistic to me. I wish with all of these reviews, they would quickly show some gaming benchmarks at 1440p and 4k with the caveat that it's almost pointless to upgrade if you are at higher resolutions.

Of course this may change with the new consoles having 8 actually competent cores, but I'm skeptical it will make a huge difference, especially not in the first few years of the gen where many AAA games are still releasing a current gen version too.

With all that said, I've only ever had Intel builds and I've been building for 20+ years. Right now I would go AMD. At least you are getting more cores and threads for cheaper, with more platform features. And if you do anything other than gaming, you get way more for cheaper with AMD. If you want to chase % gains at 1080p medium then I guess Intel is the way to go.
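The reasoning in this post can be sketched with a very simplified model in which the delivered frame rate is capped by whichever of the CPU or GPU is slower. All numbers below are invented for illustration, and real games do not split this cleanly.

```python
def delivered_fps(cpu_fps: float, gpu_fps: float) -> float:
    """Simplified model: the slower of the two sets the frame rate."""
    return min(cpu_fps, gpu_fps)


# Hypothetical CPU-side limits (frames the CPU can prepare per second).
cpus = {"older quad core": 110.0, "new flagship": 160.0}

# Hypothetical GPU-side limits for one graphics card at each setting.
gpu_limits = {"1080p medium": 220.0, "1440p ultra": 105.0, "4K ultra": 60.0}

for setting, gpu_fps in gpu_limits.items():
    old = delivered_fps(cpus["older quad core"], gpu_fps)
    new = delivered_fps(cpus["new flagship"], gpu_fps)
    print(f"{setting}: {old:.0f} vs {new:.0f} FPS (gap {new - old:.0f})")
```

In this toy model the CPU gap only shows up at 1080p medium, where the GPU limit sits above both CPUs. It models average throughput only and says nothing about 1% lows, which the post notes often still improve.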

Many reviewers touch on that, including Gamers Nexus and Digital Foundry. DF even does more actual use case benches, but, as you said, those don't show the absolute differences. Then the whole point of benchmarking goes out the window. The only other option is to not benchmark games until proper next-gen games come along, or to just bench a very small handful of games (at which point you no longer have a representative sample of the whole spectrum). Hell, I've seen some outlets put the Crysis 1 CPU renderer on the board for comparisons.

The thing with all of these reviews is that, to glean any real difference between CPUs in the last like 4 years in gaming, you need to run benchmarks at 1080p, and often with medium/low settings. In that way you shift the workload from being GPU-bound to primarily CPU-bound to expose the maximum difference in performance, and even then the difference between something a few years old like the 7700k with a moderate OC and a stock 10700k is relatively small.

But that isn't the case really. Just look a few posts above.

As I said though, it does not specifically have to be Vampyr.

My point is that when I am buying a CPU I only care which one provides the best performance at all times, not just the games which happen to run well for that specific architecture.

Intel CPUs seem to be far more consistent in the performance that they deliver across games, while some games seem to be "good for Ryzen" or "bad for Ryzen" based on a number of factors.

Just look at these numbers from Digital Foundry's Metro Exodus testing:

At 2160p, which is mostly GPU-limited, they still ran into some situations that were heavily CPU-bottlenecked.

That's 86 FPS on the 10900K vs 47 FPS on the 3900X.

I don't care if the 3900X is the same as the 10900K on average - I care about avoiding those low points in performance as much as possible.
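To make the "average vs. low points" distinction concrete, here is a rough sketch of how an average FPS and a "1% low" figure can be computed from a list of frame times. Outlets differ in how they define 1% lows; this version assumes the convention of averaging the slowest 1% of frames. The frame-time data is invented for the example and is not from any of the reviews discussed here.

```python
# Sketch: average FPS vs. "1% low" FPS from per-frame render times.
# Assumption: the 1% low is the average frame rate over the slowest 1% of
# frames. Other definitions exist (e.g. the 99th-percentile frame time).

def average_fps(frame_times_ms: list[float]) -> float:
    """Overall frame rate: total frames divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


def one_percent_low_fps(frame_times_ms: list[float]) -> float:
    """Frame rate across only the slowest 1% of frames."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)  # always keep at least one frame
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


# Invented data: two runs of 1000 frames with nearly identical averages.
smooth_run = [10.0] * 1000              # every frame takes 10 ms
spiky_run = [9.8] * 990 + [30.0] * 10   # mostly faster, with ten 30 ms hitches

if __name__ == "__main__":
    for name, run in (("smooth", smooth_run), ("spiky", spiky_run)):
        print(f"{name}: avg {average_fps(run):.1f} FPS, "
              f"1% low {one_percent_low_fps(run):.1f} FPS")
```

Both invented runs average about 100 FPS, but the spiky one has a 1% low of roughly 33 FPS. That is the kind of gap an average-only chart hides.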

Considering GN had to purchase theirs and Intel isn't supplying them to reviewers, I wouldn't be too surprised if, outside of very dedicated outlets (Linus, maybe?), we won't see more reviews for it.

I think Steve summarized a possible reason why well when he described the i7 as sandwiched between the i5 and i9 more so than before.

And if you do anything other than gaming, you get way more for cheaper with AMD.

Whenever this argument comes up, it makes me wonder what fraction of home users are actually spending significant amounts of time doing these CPU-heavy parallel processing tasks.

Sure, I occasionally use 7zip, Visual Studio, and Photoshop. But I bet if you were to add up the time spent in compression / compile / render and average it out, these non-gaming CPU-heavy tasks would probably combine to less than an hour per week. It's a different story for my work computer, but that uses a workstation-class CPU.

The higher end Ryzen parts are in sort of this "jack of all trades, master of none" situation. If you are focused on gaming performance, then Intel CPUs are still the best. If you are doing full-time production work, you are better served spending more for a Threadripper. So I guess the ideal market is gamers with semi-serious production hobbies?

As a hobbyist 3D modeler, AMD has been topping my suggestions. That's pretty much the reason I've been wanting more i7 reviews, as I could use the extra cores but just don't have the budget to shell out for an i9/3900X.