Copy-pasting something I wrote a while ago.

You've probably been playing games or watching content in HDR on your PC while missing a critical component: 10-bit video.

Windows 10, unlike game consoles, does not automatically switch the bit depth of its video output when you launch an HDR game.

By default, both NVIDIA and AMD GPUs are configured to output RGB 8-bit.

You might be wondering: "But my TV turns on its HDR mode and games look better." That is true. HDR is a collection of different pieces that together create the HDR effect. Your PC sends the WCG (Wide Color Gamut)/BT.2020 metadata, along with other information, to the TV, which triggers its HDR mode. But the PC is still only sending an 8-bit signal.
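To put numbers on what an 8-bit signal gives up, here is a small Python sketch of the arithmetic. Each extra bit doubles the number of steps per color channel, which is what helps smooth HDR gradients avoid visible banding. The helper name `color_stats` is hypothetical and only for illustration.

```python
def color_stats(bits: int) -> tuple[int, int]:
    """Return (code values per channel, total colors across three channels)."""
    levels = 2 ** bits      # distinct values per R/G/B (or Y/Cb/Cr) channel
    colors = levels ** 3    # every combination of the three channels
    return levels, colors


for bits in (8, 10, 12):
    levels, colors = color_stats(bits)
    print(f"{bits}-bit: {levels} levels per channel, {colors:,} colors")
```

The 8-bit line works out to 256 levels per channel (16,777,216 colors), while 10-bit gives 1,024 levels per channel (1,073,741,824 colors).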

How to output 10-bit video on an NVIDIA GPU

NVIDIA GPUs have some quirks when it comes to which bit depths can be output with each color format. The list is as follows:

- RGB/YUV444:
  - 8-bit
  - 12-bit

- YUV422:
  - 8-bit
  - 10-bit
  - 12-bit

What does this mean for you? Not much. If you do require RGB/YUV444 and send a 12-bit signal to the TV, that signal still only contains 10-bit content carried in a 12-bit container, and the TV will convert it back down to 10-bit. The catch is bandwidth: 12-bit RGB/YUV444 needs more of it than 8-bit, so depending on the connection it may not be offered at every resolution and refresh rate.
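The "10-bit content in a 12-bit container" idea can be sketched in a few lines of Python. This assumes the simplest padding scheme, where the two extra low bits are filled with zeros (a left shift by 2); the function names are hypothetical, and how a particular driver actually fills the container is an assumption, not something confirmed here.

```python
def pad_10_to_12(value_10bit: int) -> int:
    """Carry a 10-bit code value in a 12-bit container (assumed: two zero LSBs)."""
    if not 0 <= value_10bit < 1024:
        raise ValueError("not a 10-bit value")
    return value_10bit << 2


def unpad_12_to_10(value_12bit: int) -> int:
    """Drop the two padding bits to recover the original 10-bit value."""
    if not 0 <= value_12bit < 4096:
        raise ValueError("not a 12-bit value")
    return value_12bit >> 2


# 940 is the 10-bit limited-range (video) white level; in 12-bit it becomes 3760.
print(pad_10_to_12(940))
print(unpad_12_to_10(pad_10_to_12(940)))

# Every 10-bit value survives the round trip unchanged, so no detail is gained or lost.
assert all(unpad_12_to_10(pad_10_to_12(v)) == v for v in range(1024))
```

Under this assumption the 12-bit signal holds exactly the same information as the 10-bit one, which is why converting back down on the TV side costs nothing.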

However, if you want to output true 10-bit, you'll need to step down to a YUV422 signal. Again, not the end of the world: at normal TV viewing distances (and even on 4K monitors) it is very difficult to tell the difference between 4:4:4 and 4:2:2.
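One practical reason YUV422 is the format that gets you 10-bit is HDMI link bandwidth. The Python sketch below estimates the TMDS clock for each format at 4K 60 Hz, assuming an HDMI 2.0 TMDS connection (600 MHz maximum) and the standard 594 MHz pixel clock for 3840x2160 at 60 Hz. In this scheme RGB/YUV444 scales the clock with bit depth, while 4:2:2 carries up to 12 bits per component in the same space 8-bit RGB uses. `tmds_clock_mhz` is a hypothetical helper, not a driver API, and other links (HDMI 2.1, DisplayPort) have different limits.

```python
# Assumption: HDMI 2.0 TMDS link, standard CTA-861 4K60 timing (4400 x 2250 total pixels).
HDMI_2_0_MAX_TMDS_MHZ = 600.0
PIXEL_CLOCK_4K60_MHZ = 594.0


def tmds_clock_mhz(pixel_clock_mhz: float, fmt: str, bpc: int) -> float:
    """Estimate the TMDS clock needed for a color format and bit depth (hypothetical helper)."""
    if bpc not in (8, 10, 12):
        raise ValueError(f"unsupported bit depth: {bpc}")
    if fmt == "YUV422":
        # 4:2:2 always fits the 24-bit-per-pixel slot, so 10/12-bit cost no extra clock.
        return pixel_clock_mhz
    if fmt in ("RGB", "YUV444"):
        # Deep color scales the clock: 10 bpc = 1.25x, 12 bpc = 1.5x.
        return pixel_clock_mhz * bpc / 8
    raise ValueError(f"unknown format: {fmt}")


for fmt, bpc in [("RGB", 8), ("RGB", 10), ("RGB", 12), ("YUV422", 10), ("YUV422", 12)]:
    clock = tmds_clock_mhz(PIXEL_CLOCK_4K60_MHZ, fmt, bpc)
    verdict = "fits" if clock <= HDMI_2_0_MAX_TMDS_MHZ else "exceeds"
    print(f"{fmt} {bpc}-bit: {clock:.1f} MHz ({verdict} HDMI 2.0)")
```

At 4K60 this prints 594.0 MHz for RGB 8-bit (fits), 742.5 and 891.0 MHz for RGB 10-bit and 12-bit (both too much for HDMI 2.0), and 594.0 MHz for YUV422 at either 10-bit or 12-bit.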

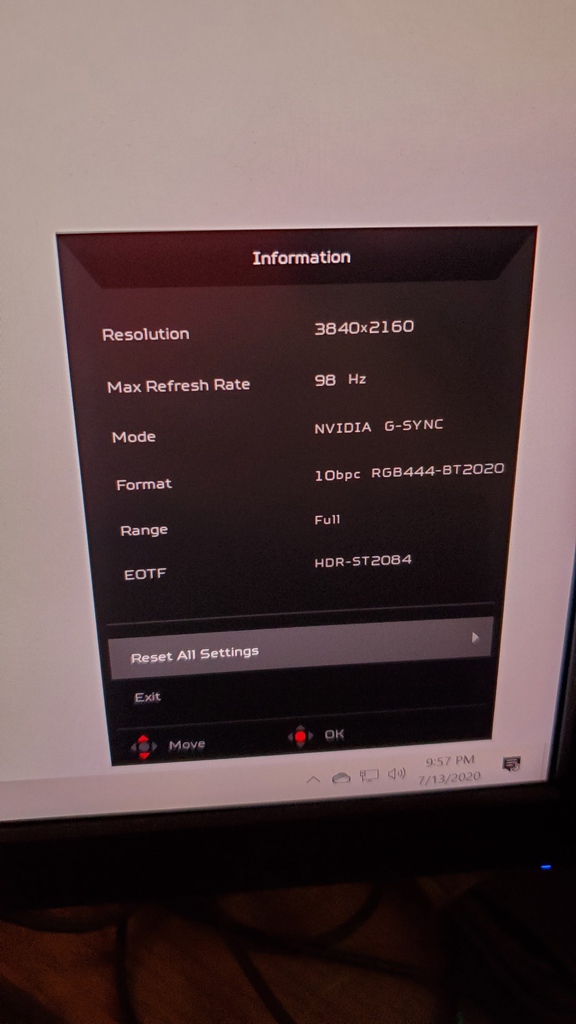

The recommended setting in this case is YUV422 at 10-bit for both SDR and HDR. Using the same setting for both makes the switch seamless and doesn't require any extra work on your part.

How to configure NVIDIA GPUs

- Right-click on the Windows desktop

- Open the NVIDIA Control Panel

- On the left side, under Display, click on Change resolution

- Select Use NVIDIA color settings so the color options can be changed

- Click on the Output color format dropdown menu and select YCbCr422 (NVIDIA's label for YUV422)

- Click on Apply

- Now click on the Output color depth dropdown menu and select 10 bpc (bits per color channel)

- Click on Apply

That's it. Your GPU is now outputting YUV422 10-bit video to your TV or monitor.

Now launch an HDR game and you'll see the full 10-bit color depth!

I don't have YUV as an option, only RGB and YCbCr.