8GB DDR3 to 16GB GDDR6 is a pretty damn nice upgradeI thought next gen would have 24GB of RAM too, but they did not and I agree probably due to cost and the fast SSD.

-

Ever wanted an RSS feed of all your favorite gaming news sites? Go check out our new Gaming Headlines feed! Read more about it here.

-

We have made minor adjustments to how the search bar works on ResetEra. You can read about the changes here.

Graphic Fidelity I Expect Next Gen.

- Thread starter ResetEraVetVIP

- Start date

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Current gen uses GDDR5, no?

Xbox One uses DDR3.

PS4 has 8GB of GDDR5, Xbox Series X has 12GB GDDR5 but the Xbox One uses 8GB of DDR3 and 32mb esram.

kinda is what fumbled the original console. (in reality the numbers are less as parts of it are reserved for OS)

at the end of the day 16GB of GDDR6 is damn nice but RAM was the thing that needed the least amount of upgrading coming from the last gen machines which ended with 12GB GDDR5 on Xbox Series X. CPU and SSD were the top 2 upgrades the platforms needed and we thankfully got it and Xbox went nuts and added a 12TF RDNA2 GPU lmao

I wonder what Unreal Engine 5 will mean. That demo shows the potential for basically CGI level image quality and effectively infinite detail, from large open areas down to minute details. It looks so damn clean and basically pre rendered and from my limited understanding kind of unlocks a hell of a lot of potential (they did say limitless geometry and buzzword terms like that). You can have tons of high quality assets but not even need all of them resident in RAM, but only whatever parts are actually needed for the scene, it sounds like, in a sense, once you have a resolution and framerate that works for the hardware, then you can kinda do what you want on screen and it should run fine. There will obviously be limits but I think those limits are going to be defined by other factors like just how many characters the CPU can power on screen rather than actually the GPU budget to render it all, but I'm probably wrong.

Still it's damn impressive and one of those out of the blue announcements that really changes and advances things beyond just what we expected from the raw spec bump. So who knows where PS6 etc will be by the time that comes.

Still it's damn impressive and one of those out of the blue announcements that really changes and advances things beyond just what we expected from the raw spec bump. So who knows where PS6 etc will be by the time that comes.

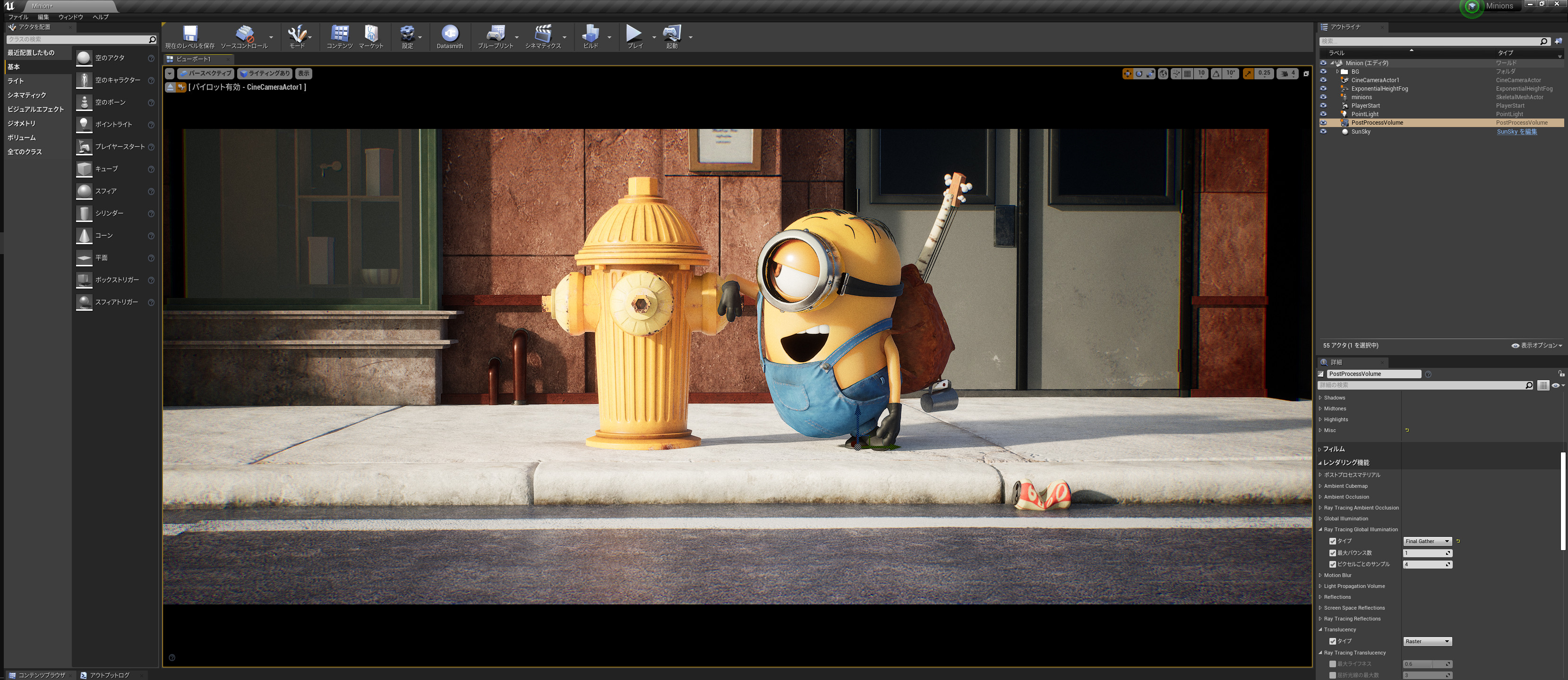

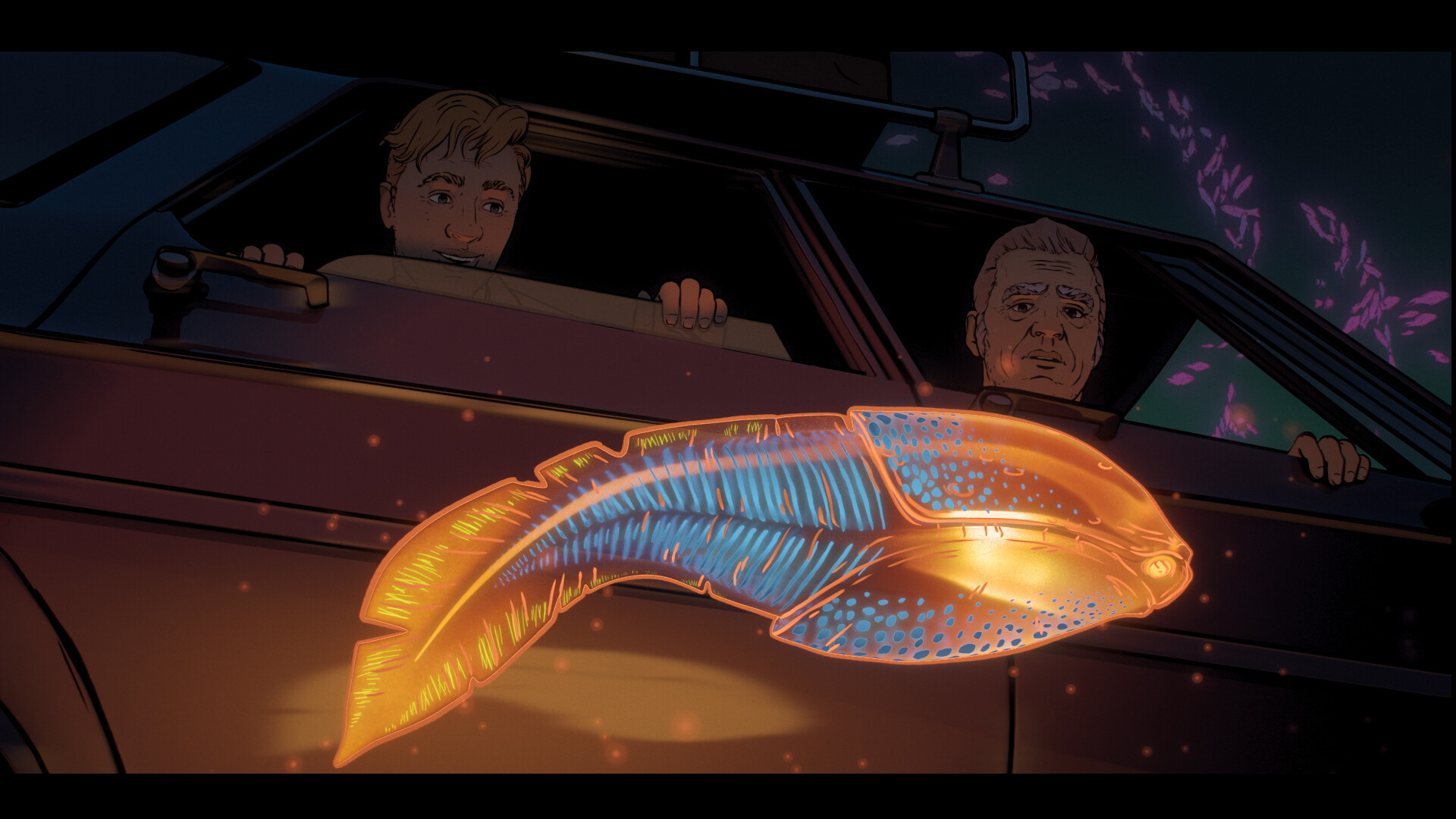

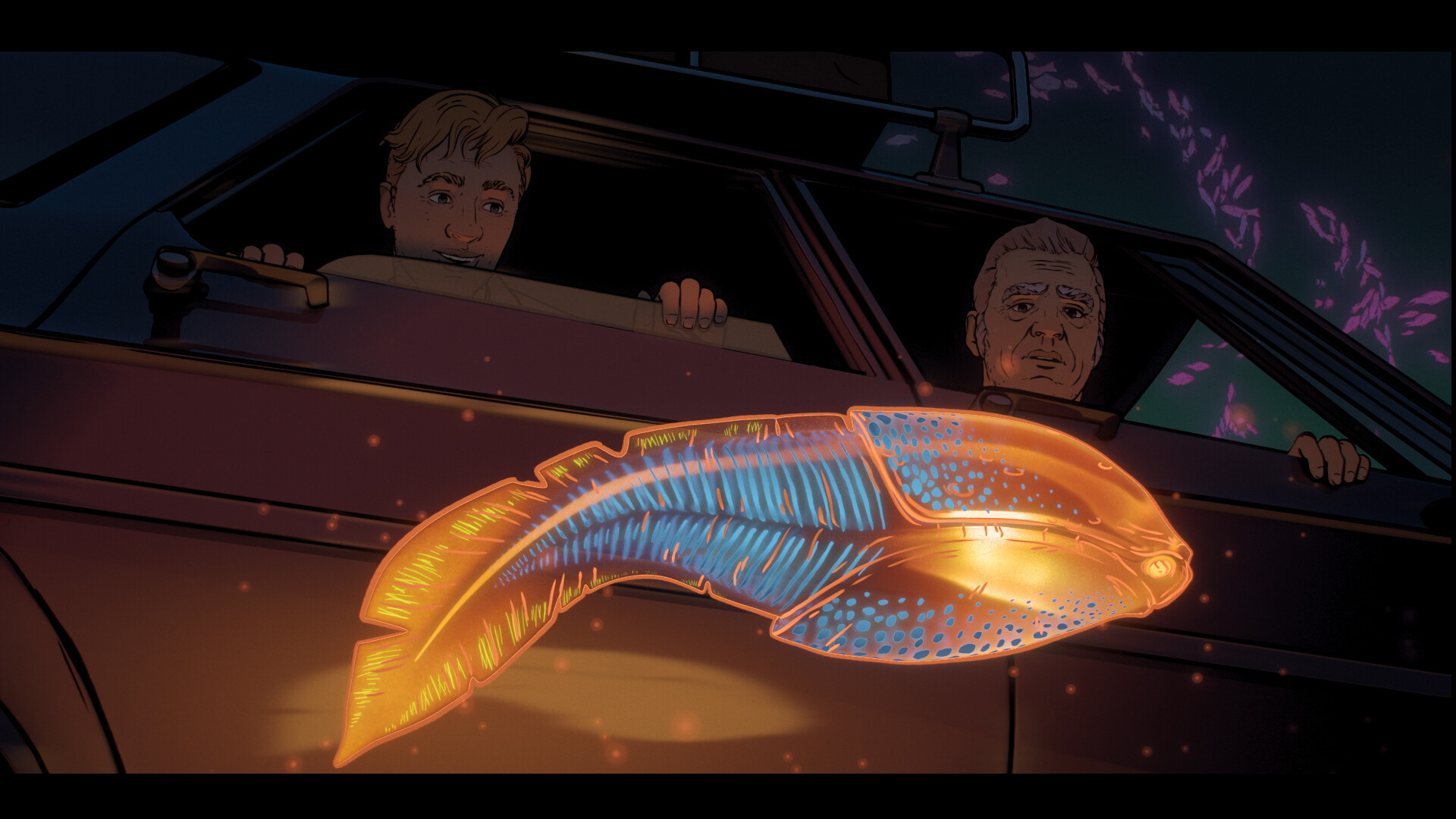

Not sure if already posted, but i think something like this is realistic, for the first half of next gen at least.

I feel like these kind of mods rely too much on wet floors just like the gta mods.

As for next next gen, I kinda assume the next big bottleneck to be storage space. The PS6 and Xbox Whatever X will have better CPUs GPUs and storage speed, more RAM and better machine learning hardware but if a game must be printed on disc or must be fitted on a 2-3TB drive things will get dicey. I wouldn't be surprised if the next GTA is 250-350gigs. For next next gen, they really can't ship a 1 TB game, this is where cloud solutions have to pick up. Data caps will render that impossible for certain regions though.

As for next next gen, I kinda assume the next big bottleneck to be storage space. The PS6 and Xbox Whatever X will have better CPUs GPUs and storage speed, more RAM and better machine learning hardware but if a game must be printed on disc or must be fitted on a 2-3TB drive things will get dicey. I wouldn't be surprised if the next GTA is 250-350gigs. For next next gen, they really can't ship a 1 TB game, this is where cloud solutions have to pick up. Data caps will render that impossible for certain regions though.

Yes, this is my concern as well. Games will want to get BIG very soon now, and even by 2027 I don't see a new technology bumping up the storage capacity beyond a few TBs. Speed yes, capacity no. Maybe an extra cache layer or something of the sort, a 20TB HDD coupled with a 2TB SSD or similar.

Yes, this is my concern as well. Games will want to get BIG very soon now, and even by 2027 I don't see a new technology bumping up the storage capacity beyond a few TBs. Speed yes, capacity no. Maybe an extra cache layer or something of the sort, a 20TB HDD coupled with a 2TB SSD or similar.

My assumption is that AI will be used to de-noise textures. You don't need to store the 8K version of everything if you can get it back to pretty much the same quality from the 1k version. When you see what they can do with DLSS in real time I assume the same can be applied to single textures and with a lot less artifacts.

I would also love for UE and Unity to add some procedural texture generation for a lot of things. Remember the 96k fps demo https://en.wikipedia.org/wiki/.kkrieger. That was done because most of the textures were generated on the fly at runtime. I just don't see the point of using Qixel megatextures for everything when they could be generated on the fly with probably the same level of quality.

Upsampling is not free and you cannot really upsample from 1K to 8K.My assumption is that AI will be used to de-noise textures. You don't need to store the 8K version of everything if you can get it back to pretty much the same quality from the 1k version. When you see what they can do with DLSS in real time I assume the same can be applied to single textures and with a lot less artifacts.

I would also love for UE and Unity to add some procedural texture generation for a lot of things. Remember the 96k fps demo https://en.wikipedia.org/wiki/.kkrieger. That was done because most of the textures were generated on the fly at runtime. I just don't see the point of using Qixel megatextures for everything when they could be generated on the fly with probably the same level of quality.

Regarding created textures procedurally, you can do only some stuff algorithmically. Writting a function that reproduce something is not easy, so unique texture, which are most texture cannot be really done programmatically. You already have a lot of procedural textures in games as shaders ;)

I agree, I expected this big jump just assuming from the spec sheet. The infinite geometry is soooo awesome. I mean games could come close to great CGI.I wonder what Unreal Engine 5 will mean. That demo shows the potential for basically CGI level image quality and effectively infinite detail, from large open areas down to minute details. It looks so damn clean and basically pre rendered and from my limited understanding kind of unlocks a hell of a lot of potential (they did say limitless geometry and buzzword terms like that). You can have tons of high quality assets but not even need all of them resident in RAM, but only whatever parts are actually needed for the scene, it sounds like, in a sense, once you have a resolution and framerate that works for the hardware, then you can kinda do what you want on screen and it should run fine. There will obviously be limits but I think those limits are going to be defined by other factors like just how many characters the CPU can power on screen rather than actually the GPU budget to render it all, but I'm probably wrong.

Still it's damn impressive and one of those out of the blue announcements that really changes and advances things beyond just what we expected from the raw spec bump. So who knows where PS6 etc will be by the time that comes.

Yes.

Here's hoping big open world games like ES or Dragon's Dogma etc have density and fidelity like this w/o egregious LoD pop ins and shifts:

No. Behind those stylized faces there's still a lot of those "things done only in offline rendering" things going on in Spiderverse. Stuff that even the UE5 demo didn't pretend was possible.

Realtime.

Fuck me.

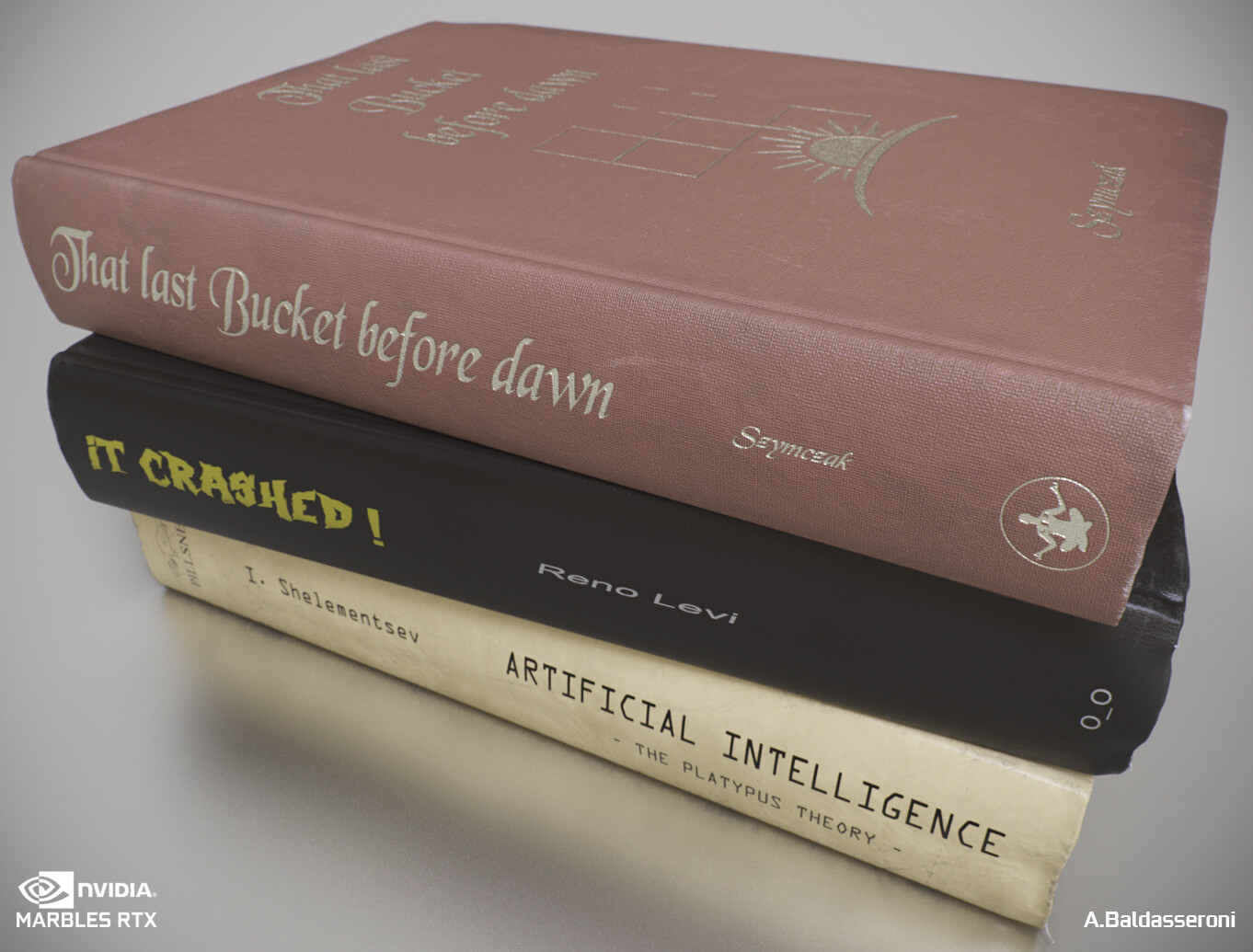

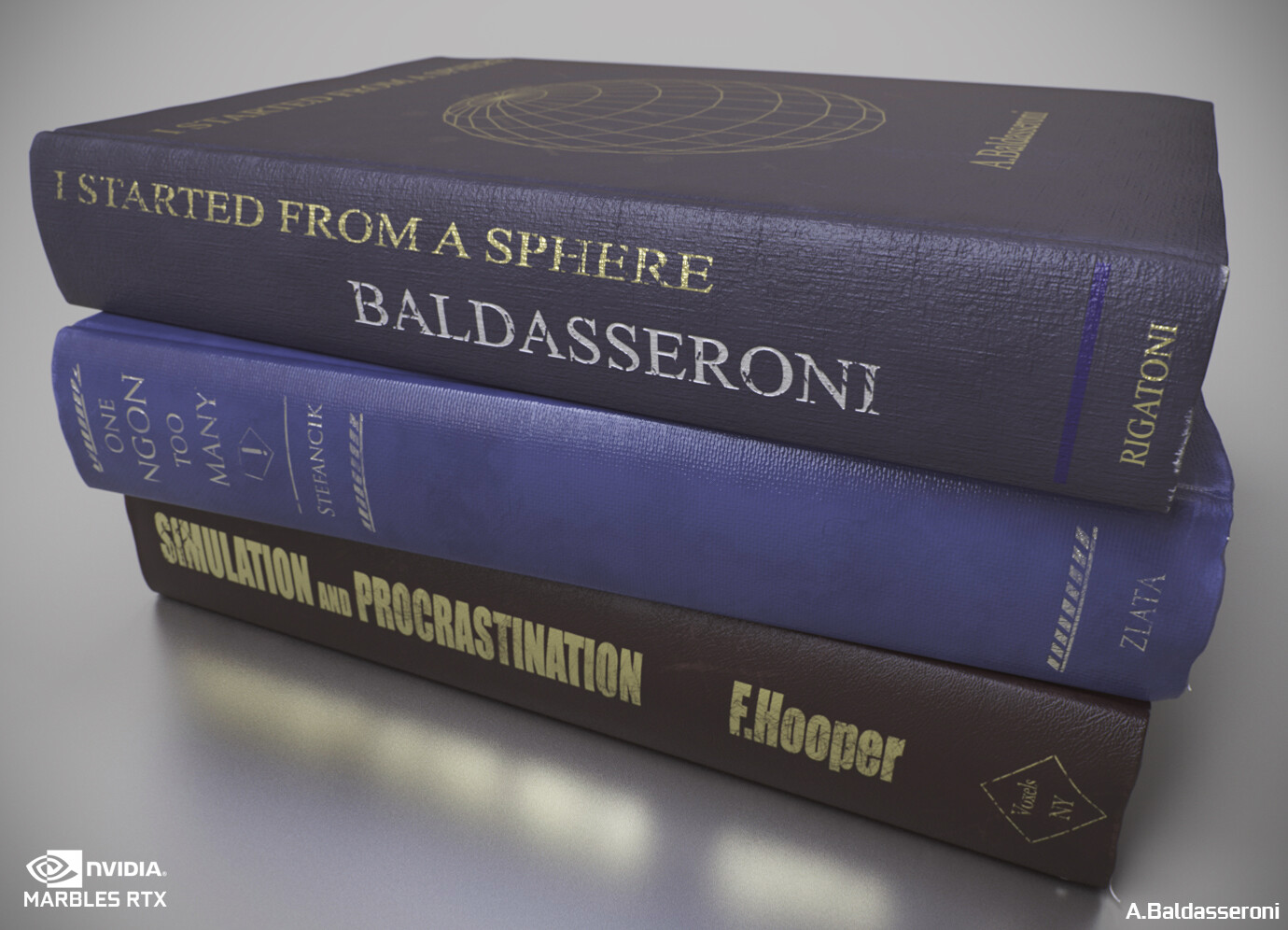

PS The books are all easter eggs.

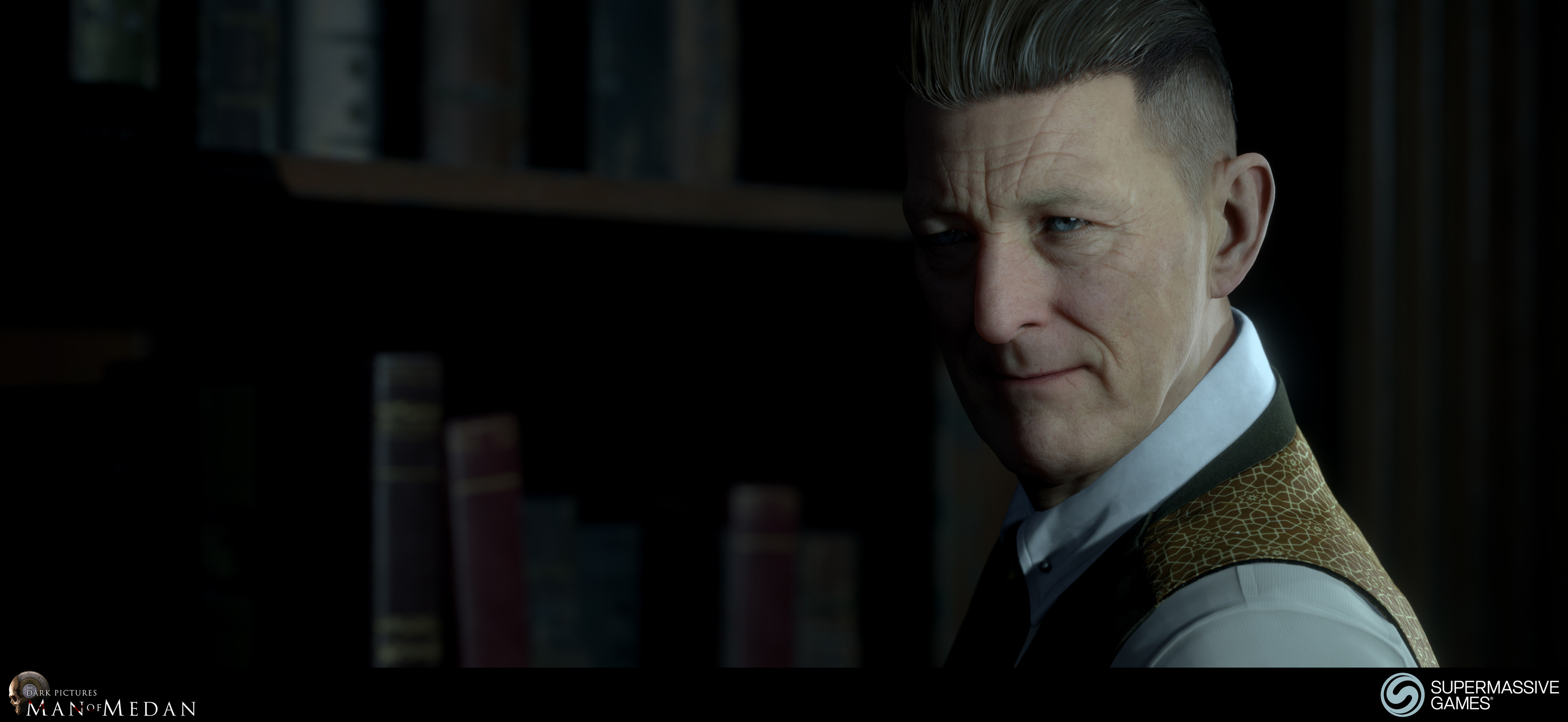

With UE4/5 and the power of nextgen Im expecting every "realistic" game to have Man of Medan levels of fidelity for faces but I need Textures and cloth modeling/simulation to be pushed way way further.

Some of the characters are absolutely astounding....the clothing and animations pretty much betray that this isnt offline

I'm wandering if we'll see wider FOVs being used.

The Sony low and up close third person camera might be changed.

The Sony low and up close third person camera might be changed.

We will also see more interesting hair styles next gen, since hair rendering will make huge leaps.

This is nightmare inducing

at least the enemies in horror games will have fantastic hair

lol

The wait for real footage of nextgen gameplay is becoming unbearable for me...

we need to see what this consoles are going to be able to do...

I buyed ffvii remake last night and some scenes are absolute beautiful...

some scenes made me question if it was in engine or cg...

i need to see somes scenes in a huge metrópole made specific for nextgen hardware...

the level of immersion can be absolute incredible...

we need to see what this consoles are going to be able to do...

I buyed ffvii remake last night and some scenes are absolute beautiful...

some scenes made me question if it was in engine or cg...

i need to see somes scenes in a huge metrópole made specific for nextgen hardware...

the level of immersion can be absolute incredible...

These are awesome. the first video looks real and could be done next gen for sure.

I hope we will again get tech demos like this :D

And it was running live on a PS4

youtu.be

youtu.be

And it was running live on a PS4

E3 2013 Trip Footage Day 3 Part 33: Quantic Dream Dark Sorcerer PS4 Graphics Trailer

This is all of my footage from the biggest gaming event in the world, E3 2013. The footage consists of vlogs, interviews, exclusive gameplay, and exhibits an...

Not sure if already posted, but i think something like this is realistic, for the first half of next gen at least.

Nah, I'll raise you this:

I firmly believe first party next-gen only games (read, HZD2 or whatever GG's doing) will trounce this right from the start in most areas, except perhaps in overall image clarity and frame rate. And RDR2 is probably the best thing on PC graphically these days, even without mods. (I'm also curious to see HZD PC and how far GG will take it)

Even though we won't match I hope we can get something closer to this when it comes to stylized games. This looks cleannnnnnnnn

ofc shit like this is impossible though:

ofc shit like this is impossible though:

Impressive that its realtime. It looks perfectly comparable to the CGI in the movies.

Old Paris Game Week 2017 Footage, some of the best animations etc I've ever seen. Look at the first part where her nose bridge moves on the ground as she moves her head on the ground. Crazy attention to detail. Nice naughty dog. (P.S don't read the YouTube comment section full of spoilers.)

Man I already thought GT sport photomode was crazy, cant wait for the raytraced version of it.

Should be nice, especially as they have been working with pathtracing.Man I already thought GT sport photomode was crazy, cant wait for the raytraced version of it.

Half-Life: Alyx adds bottles that have physics based liquid inside, most authentic liquid simulation to date? (UP: dev: "liquid shader")

Edit: Shader based as shared by developer himself, his tweet marked Source: https://steamcommunity.com/games/546560/announcements/detail/2229791404478061089 I don't know about you, but to me that is the most believable real time liquid simulation have seen video game. Yes it's very small...

I hope we will again get tech demos like this :D

And it was running live on a PS4

E3 2013 Trip Footage Day 3 Part 33: Quantic Dream Dark Sorcerer PS4 Graphics Trailer

This is all of my footage from the biggest gaming event in the world, E3 2013. The footage consists of vlogs, interviews, exclusive gameplay, and exhibits an...youtu.be

Looks good even now but it still looks like a video game. Next gen we will see games that make it hard to tell.

Isn't the first one mostly a high detail skybox made out of actual photographs? The buildings look really flat.

Isn't the first one mostly a high detail skybox made out of actual photographs? The buildings look really flat.

Yes the background is quite obviously just a flat texture. I dont find it photorealistic at all.

Okay that's pretty insane

" For compute my expectation is also in the 40-50 TFLOPS range. I hope I'm wrong but I doubt the industry will be able to keep up as they have over next generation for reasonable amounts of $$$, and next-gen transistors like CNTs etc probably won't be ready yet. The really interesting question here I think is what kind of architecture will be used. Is the UE5 demo a sign we're moving away from GPUs and towards GPGPU solutions? If any devs or programmers have insight or opinions about this I'd be all ears. " - cjn83

I too would love to hear some insight on this.

Electrical Engineer / programmer (not game dev) here, but I think my insight may clear the air on this topic.

GPGPU is kinda a misnomer, at least from the modern perspective of a gamer. It seems self descriptive "general processing on a graphics processing unit", right? And furthermore is described as a GPU offloading CPU tasks. Not so much.

The term GPGPU first came around in 2005 or so. And from the view of a data scientist or engineer it was pretty accurate.

GPU vs CPU is more about the design philosophy of semiconductor engineering and the capabilities of hardware with various designs. When it comes to designing a device with any given number of transistors the main goals are a) to provide the most efficient use those transistors, b) not completely break away from previous designs so abruptly that the previous software still works.

These different design philosophies have their own approach that has resulted in the unique differences of the various components produced over the years.

CPU:

When it comes to design of a CPU the fundamental driving factor is speed. We all remember or at least heard of the clock speed wars of old between AMD and Intel. The truth is that wasn't the end of it and I'm not just talking about the shift from MHz to GHz. Transistor frequencies were always going to be roughly whatever we can reach at a given process node size. CPUs are so focused on doing a single thing as fast as possible that over time as designers were given exponentially more transistors but were still limited by frequency they decided the best thing to do with them was attempt to predict what would happen if an given transistor were to flip in two, three, four, etc. clock cycles and then if it were to flip again in X number of cycles. This is know as predictive branching.

The vast majority of the time a transistors output is exactly the same as the last cycle. On average a transistor switches once every 7+ cycles iirc. That's a lot of calculations happening for nothing to change, but knowing what will happen when it happens upwards of 20 cycles ahead has been invaluable to the advancement of CPUs. Effectively this is offsetting the latency of actually waiting for the system to perform a task.

Thus, CPUs are good at performing tasks that a) rarely change, b) are always being performed, c) absolutely have to have the result to immediately or everything falls out of place. This is practically the computer definition of resource management.

GPU:

The GPU takes a completely different approach to design. They are massively parallel. They perform the simplest of calculations, but they they can perform trillions of them per second. This is essentially Linear Algebra or "Matrices". In fact they can perform so many of them that they can create mathematically abstract many dimensional models ahead of time with large datasets to estimate what the result of a calculation should be in real time when the given situations are similar enough or enough data has been processed beforehand to estimate unusual circumstances. This also happens to be the fundamental idea behind AI, neural networks, and things using it like DLSS.

GPGPU:

This actually has nothing to do with the design of the hardware. The idea of GPGPU is to perform certain "traditionally" CPU tasks on the GPU. Believe it or not given enough time and specialized coding CPUs and GPUs can both ultimately perform the same operations. Fun fact: you can run the original crysis on a $4k server CPU by itself now, the same can't be said about only a GPU. That said, it is absolutely not efficient to attempt to reverse their true roles. These "traditional" CPU tasks are actually things that a GPU is extremely well suited for (e.g. matrices). A GPU is not going to be effective at performing predictive branching just like a CPU is not going to be effective at DLSS.

Background info:

For quite a long time CPUs were performing matrix math because anyone wanting to use a GPU for general purpose programming had to deal with OpenGL or Direct X, which had arbitrary limits relating to graphical primitives. Scientists and engineers were trying to do this for highly complex physical simulations back in 2000 or so. In the following 8 years or so there was a massive move towards general purpose programming languages like OpenCL and notably CUDA in 2006.

In the same time frame of 7 years or so after that scientists and engineers were rapidly advancing simulations with all this newfound GPU power while video games were just pushing for higher poly counts and resolutions.

Before the start of the 8th gen we really started to hit that diminishing return curve on resolution and poly count front. It actually made a lot of sense for game programmers to start honing in on using well known simulation models to improve interactivity and graphical fidelity.

What better way to do just that than using GPGPU on the very GPU already in your hardware. GPGPU is moreso a testament to how the capabilities and balance of CPUs and GPUs were and are shifting than an actual indication that a GPU is performing a CPU's task. It is literally just software running on the portion of hardware where it is most efficient. A GPU is never going to replace a CPU, and if it does it would deserve some sort of reclassification.

Looking forward:

As hardware continues to progress you can expect the the CPU/GPU balance to continue to follow the progression that the scientists and engineers are demanding now, which is swinging extremely in favor of GPUs, massive datasets, and AI based workloads.

If you can logically connect these two opposite end of the spectrum design philosophies, improve performance across the board, and not break every piece of code written in the last 10 years you have a trillion dollar design on your hands.

We went from ~35 MTr/mm^2 at the 14nm node to ~100 MTr/mm^2 at the 7 nm node. We may eventually create a single design that performs both effectively purely due to the shear number of transistors, and having no other efficient use, but that's a long way off from a design standpoint.

With regards to the physical limit of transistor size I believe there is a well understood path to about ~10 atoms, which would be a 6x reduction from where we are. I also expect a lot of other changes other than just size to take place in that time frame which will improve efficiency in other characteristics. Single atom and subatomic transistors (whatever they may be called) is an entirely different ballpark and I don't know if we would even go that route.

Great post.Electrical Engineer / programmer (not game dev) here, but I think my insight may clear the air on this topic.

GPGPU is kinda a misnomer, at least from the modern perspective of a gamer. It seems self descriptive "general processing on a graphics processing unit", right? And furthermore is described as a GPU offloading CPU tasks. Not so much.

The term GPGPU first came around in 2005 or so. And from the view of a data scientist or engineer it was pretty accurate.

GPU vs CPU is more about the design philosophy of semiconductor engineering and the capabilities of hardware with various designs. When it comes to designing a device with any given number of transistors the main goals are a) to provide the most efficient use those transistors, b) not completely break away from previous designs so abruptly that the previous software still works.

These different design philosophies have their own approach that has resulted in the unique differences of the various components produced over the years.

CPU:

When it comes to design of a CPU the fundamental driving factor is speed. We all remember or at least heard of the clock speed wars of old between AMD and Intel. The truth is that wasn't the end of it and I'm not just talking about the shift from MHz to GHz. Transistor frequencies were always going to be roughly whatever we can reach at a given process node size. CPUs are so focused on doing a single thing as fast as possible that over time as designers were given exponentially more transistors but were still limited by frequency they decided the best thing to do with them was attempt to predict what would happen if an given transistor were to flip in two, three, four, etc. clock cycles and then if it were to flip again in X number of cycles. This is know as predictive branching.

The vast majority of the time a transistors output is exactly the same as the last cycle. On average a transistor switches once every 7+ cycles iirc. That's a lot of calculations happening for nothing to change, but knowing what will happen when it happens upwards of 20 cycles ahead has been invaluable to the advancement of CPUs. Effectively this is offsetting the latency of actually waiting for the system to perform a task.

Thus, CPUs are good at performing tasks that a) rarely change, b) are always being performed, c) absolutely have to have the result to immediately or everything falls out of place. This is practically the computer definition of resource management.

GPU:

The GPU takes a completely different approach to design. They are massively parallel. They perform the simplest of calculations, but they they can perform trillions of them per second. This is essentially Linear Algebra or "Matrices". In fact they can perform so many of them that they can create mathematically abstract many dimensional models ahead of time with large datasets to estimate what the result of a calculation should be in real time when the given situations are similar enough or enough data has been processed beforehand to estimate unusual circumstances. This also happens to be the fundamental idea behind AI, neural networks, and things using it like DLSS.

GPGPU:

This actually has nothing to do with the design of the hardware. The idea of GPGPU is to perform certain "traditionally" CPU tasks on the GPU. Believe it or not given enough time and specialized coding CPUs and GPUs can both ultimately perform the same operations. Fun fact: you can run the original crysis on a $4k server CPU by itself now, the same can't be said about only a GPU. That said, it is absolutely not efficient to attempt to reverse their true roles. These "traditional" CPU tasks are actually things that a GPU is extremely well suited for (e.g. matrices). A GPU is not going to be effective at performing predictive branching just like a CPU is not going to be effective at DLSS.

Background info:

For quite a long time CPUs were performing matrix math because anyone wanting to use a GPU for general purpose programming had to deal with OpenGL or Direct X, which had arbitrary limits relating to graphical primitives. Scientists and engineers were trying to do this for highly complex physical simulations back in 2000 or so. In the following 8 years or so there was a massive move towards general purpose programming languages like OpenCL and notably CUDA in 2006.

In the same time frame of 7 years or so after that scientists and engineers were rapidly advancing simulations with all this newfound GPU power while video games were just pushing for higher poly counts and resolutions.

Before the start of the 8th gen we really started to hit that diminishing return curve on resolution and poly count front. It actually made a lot of sense for game programmers to start honing in on using well known simulation models to improve interactivity and graphical fidelity.

What better way to do just that than using GPGPU on the very GPU already in your hardware. GPGPU is moreso a testament to how the capabilities and balance of CPUs and GPUs were and are shifting than an actual indication that a GPU is performing a CPU's task. It is literally just software running on the portion of hardware where it is most efficient. A GPU is never going to replace a CPU, and if it does it would deserve some sort of reclassification.

Looking forward:

As hardware continues to progress you can expect the the CPU/GPU balance to continue to follow the progression that the scientists and engineers are demanding now, which is swinging extremely in favor of GPUs, massive datasets, and AI based workloads.

If you can logically connect these two opposite end of the spectrum design philosophies, improve performance across the board, and not break every piece of code written in the last 10 years you have a trillion dollar design on your hands.

We went from ~35 MTr/mm^2 at the 14nm node to ~100 MTr/mm^2 at the 7 nm node. We may eventually create a single design that performs both effectively purely due to the shear number of transistors, and having no other efficient use, but that's a long way off from a design standpoint.

With regards to the physical limit of transistor size I believe there is a well understood path to about ~10 atoms, which would be a 6x reduction from where we are. I also expect a lot of other changes other than just size to take place in that time frame which will improve efficiency in other characteristics. Single atom and subatomic transistors (whatever they may be called) is an entirely different ballpark and I don't know if we would even go that route.