no, only RTX cards

DLSS seems too good to be true

- Thread starter Deleted member 2834

DirectML will never be as good as DLSS because it's not hardware accelerated. DLSS uses the AI/Tensor cores built into every RTX GPU to accelerate the neural network that generates a high-resolution image from a lower-resolution one. Accelerating a neural network on GPU compute alone is very expensive. I know because I've worked on image processing with neural networks: even after training, it takes a long time to get a network to generate an image. Doing dozens of images every second requires a lot of resources, probably enough to negate any performance advantage of such a method. That's why I'm skeptical that AMD's next GPU will have anything similar without the extra hardware to do it. We might have to wait a generation of GPUs to see it. There is no way I'm buying an AMD GPU because of this. I think anyone who bought a 5700/XT got screwed over by reviewers and YouTubers downplaying the extra hardware Nvidia integrated into their GPUs.

I'll humbly suggest that someone more in the know about these things should chime in, but RDNA2 supports double-rate 16-bit float performance and adds machine learning precision support at 8-bit/4-bit in each CU. It's quite literally doing the same thing as a tensor core.

Its 4-bit integer throughput is 97 TOPS; that's a LOT of operations for AI HDR, an AI denoiser, an AI DLSS equivalent, etc. All of this is processed in parallel so as not to sacrifice a CU to only one task.

So again, maybe I'm totally off and I'll humbly learn something new, but while AMD doesn't call it a "tensor" core, they obviously have a hardware solution of their own for ray tracing and machine learning. Even Microsoft's website goes all in on this, saying:

DirectML – Xbox Series X supports Machine Learning for games with DirectML, a component of DirectX. DirectML leverages unprecedented hardware performance in a console, benefiting from over 24 TFLOPS of 16-bit float performance and over 97 TOPS (trillion operations per second) of 4-bit integer performance on Xbox Series X. Machine Learning can improve a wide range of areas, such as making NPCs much smarter, providing vastly more lifelike animation, and greatly improving visual quality.

It's clear Microsoft has something in the pipeline for a DLSS equivalent, and I'm pretty sure Sony does too; they won't let these CUs sit underutilized for long.

Will it be better than Nvidia's? Well, I kind of doubt it. Having the hardware is one thing; finding the algorithm and having the resources to make something nice out of it is something else entirely. Nvidia is in its own little bubble of GPU tech right now, and all its investments in AI deep learning for car manufacturers are paying off big time.
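The 24 TFLOPS and 97 TOPS figures quoted above are consistent with plain rate doubling on the regular shader CUs as precision halves. A minimal sketch, assuming publicly reported Xbox Series X specs that the post itself doesn't state (52 CUs, 64 lanes each, 1.825 GHz) and assuming packed math doubles the rate at each halving of precision:

```python
# Assumed public Xbox Series X GPU specs (not stated in the post):
CUS = 52            # compute units
LANES_PER_CU = 64   # FP32 lanes per CU
CLOCK_GHZ = 1.825   # GPU clock
OPS_PER_FMA = 2     # a fused multiply-add counts as two operations


def peak_tera_ops(bits: int) -> float:
    """Peak shader throughput in tera-ops/s at a given operand width.

    Assumes each halving of precision below 32 bits packs twice as many
    values per lane (packed math): 16-bit is 2x, 8-bit 4x, 4-bit 8x FP32.
    """
    # ops per clock * GHz = giga-ops/s; divide by 1000 for tera-ops/s
    fp32_tflops = CUS * LANES_PER_CU * OPS_PER_FMA * CLOCK_GHZ / 1000.0
    return fp32_tflops * (32 // bits)


for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit: {peak_tera_ops(bits):.1f} T(FL)OPS")
```

This prints roughly 12.1, 24.3, 48.6 and 97.2, in line with the quoted "over 24 TFLOPS" and "over 97 TOPS". These are peak rates for the same CUs that also handle rendering.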

I really don't expect Nintendo to have had the foresight to make sure their next hardware supported DLSS. However, if it does, and it were to regularly receive next-gen ports, I think that would also inevitably lead to much more DLSS support on the PC. As it stands, DLSS only being included in games that have a marketing deal with Nvidia has me doubting it'll see much more support than PhysX got back in the day.

Luckily, Nintendo hasn't designed the chipsets in its collaboration with Nvidia so far. Their vision stops right at the end of their nose, but thankfully that won't impact Nvidia's development. Nintendo is cheap, sure, but Nvidia is going full steam ahead with AI in every domain; their new Tegra chips for cars and so on are fully focused on AI. I'm also pretty sure someone at Nvidia sees it as in their best interest to showcase the best bang-for-the-buck chipset and features so Nintendo sells these chips en masse, and nothing brute-forcing it on a mobile chipset will come close to a DLSS-enabled one. They reduce the price to compete, and Nintendo likes that.

If we're lucky maybe it'll get patched in? A shame that Horizon isn't going to include it. Right now the few titles with DLSS 2.0 aren't really to my liking, so I hope to see it become more common in the future.

I really regret buying a laptop with a 1660ti instead of an RTX 2060. It made sense at the time (performance was very similar for much less money) but now that DLSS benefits are starting to become apparent, it's quite clear it was a mistake. :(

Same. I bought a 1660super because I figured I don't need ray tracing. Turns out DLSS was the real killer app for the RTX line of graphics cards.

I think the next-gen version of the GTX 1660 (sub-$200) will come out in spring 2022, about 1.5 years after the 3080.

Do you think a 2060 is a decent "upgrade" to my 1660super?

If you're able to sell your 1660 for near MSRP and have the extra ~$120, then maybe? You'll see a small 10-15% bump in performance, but I think the more noticeable upgrade would be ray tracing with DLSS enabled.

Honestly, I'd ride with the 1660 Super until the 3000 series releases its 3060 this winter (Nov-March). Or, if you're not noticing any performance bottlenecks, wait a couple of years for the 4000 series or see what AMD does.

I am with you, and I love AMD as a company, so I wish them well. But there are some real concerns here, and again I will explain it via a photography analogy, because I know that industry well.

I have a 2080 Ti so I have no chip on my shoulder, but I'm sure AMD is working to be competitive here. MS has its own solution as well; no need to be dismissive of AMD's future efforts in this space.

Sony cameras have a state-of-the-art autofocus system that can track human eyes and dog/cat eyes, so the tracking AF system stays on the eye even if the subject moves. For photographers, that's the most important thing: keeping the subject's eye in focus. And this is based on AI and deep learning that Sony has developed over years.

Comparatively, Fuji's AF system isn't as accurate. It's "good" but not state of the art. Their cameras are fantastic, sublime to use and lovely in every other way, but they fall short in tracking AF. Fuji is a very small company (compared to Sony, specifically its camera division), they don't have a massive R&D budget like Sony, and they also entered the market later. Are they improving? Absolutely. Will they take a while to catch up? Absolutely. It takes a lot of time and resources to improve these systems.

Meanwhile, Canon has been developing a similar system, which is as good as, if not better than, Sony's. And they can even do bird-eye AF, which is very important for wildlife pros. Canon is also massive, has the budget, and has decades of experience doing this.

So no matter what, I would not expect AMD to match NVIDIA at all. But, I would expect them to have some sort of a solution.

This is what I'm thinking as well. Thanks for the advice.

VR magnifies every single temporal artifact by 10x (at least), and DLSS still has a ton of those. So no, the current incarnation would likely look awful.

That said - it's not out of the question to train a model that specifically optimizes for VR rendering - even if it's just AA and not reconstruction.

Well, PCVR has had limited use of foveated/lens-matched rendering thanks to the mess of hardware-vendor support (Nvidia has like 3 distinct approaches to this now). VRS won't be as effective as some of the others, but it's at least a relatively non-invasive implementation (unlike the others) for this use case, so maybe it'll finally pick up in use.

But that said, Nvidia has VRSS for VR and it works pretty great. It's not the same as DLSS at all, but it's similar in its goals.

Now maybe, but we'll see in 3 years. There's also the possibility of an eGPU/SCD that's docked-only. DLSS might not make sense on a 1080p screen undocked, but it might pay dividends on your 4K TV, with Switch 2 keeping pace with or even exceeding PS5 and XSX IQ.

The RTX Turing cards with tensor cores are on a whole other level of power consumption and performance compared to the Tegra mobile chips.

Pretty wild that it could actually be true for some games.

DLSS 1.0 needed per-game training, but the current 2.0 iteration is universal and can be implemented without extra training.

The DLSS model needs to be trained on a per-game basis, so Nvidia needs to push driver updates when they add support for specific games.

However, that said, it still needs to be integrated at an engine level because the algorithm uses motion vector data.
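DLSS 2.0's network itself isn't public, but the reason it needs engine-side motion vectors can be shown with the generic temporal-accumulation step that TAA-style techniques share: motion vectors tell the reconstructor where each pixel was last frame, so older samples can be reused. A minimal plain-Python sketch; `reproject` and `accumulate` are hypothetical names, and real implementations add sub-pixel filtering, history clamping and (in DLSS's case, per Nvidia's descriptions) a trained network deciding how samples are combined:

```python
from typing import List, Optional, Tuple

Frame = List[List[float]]
Motion = List[List[Tuple[int, int]]]


def reproject(history: Frame, motion: Motion) -> List[List[Optional[float]]]:
    """Fetch, for each pixel, the colour it had in the previous frame.

    motion[y][x] = (dx, dy) is how far the surface under pixel (x, y) moved
    since last frame, so its previous position was (x - dx, y - dy).
    Integer vectors and nearest-pixel fetches keep the sketch simple.
    Pixels whose previous position is off-screen get None (no history).
    """
    h, w = len(history), len(history[0])
    out: List[List[Optional[float]]] = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = motion[y][x]
            px, py = x - dx, y - dy
            if 0 <= px < w and 0 <= py < h:
                out[y][x] = history[py][px]
    return out


def accumulate(current: Frame, history: Frame, motion: Motion,
               alpha: float = 0.1) -> Frame:
    """Exponential moving average of the new frame with reprojected history.

    alpha is the weight of the newest sample; pixels without valid history
    (screen edges, disocclusions) fall back to the current sample.
    """
    prev = reproject(history, motion)
    return [[c if p is None else alpha * c + (1.0 - alpha) * p
             for c, p in zip(row_c, row_p)]
            for row_c, row_p in zip(current, prev)]
```

Without correct motion vectors the history would be fetched from the wrong place and smear, which is why this kind of technique has to be wired into the engine rather than applied as a post-process on the final image.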

Well the catch is you have to pay out the ass to get a high end GPU that has DLSS 2.0.

It used to be you could get a GPU that's 2x what you had 2 years ago for $500. Now you have to spend a grand and have a game with DLSS 2.0 support. The 2080 Ti is a rip-off; Nvidia is selling flagship GPUs at Titan prices.

It works on all 2000 series cards. You can buy something like a 2060 Super for less than $500. Not saying it's cheap, but you don't need a grand to use DLSS 2.0.

I mean, to get 2x a 1080 you'd probably need a 2080 Ti with a DLSS-enabled game, right?

Eh? DLSS makes all the sense in the world undocked. Right now, some people use DLSS 1080p Performance Mode (rendering res 540p) to get full RT and a good framerate on the 2060, and it works really well even on TVs and monitors. If the Switch 2 had a 7" 1080p screen, that would be a fantastic performance profile for its games to aim for. It would actually be a less taxing resolution than what many undocked Switch games run at. If the raw undocked GPU power was similar to a PS4 and it had an SSD, the visuals would be mindblowing.
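To make the resolution math above concrete: 1080p Performance Mode rendering at 540p means half the resolution on each axis, which is a quarter of the pixels. A small sketch; the per-axis scale of 0.5 is taken from the post's 1080p to 540p example, and the function names are hypothetical:

```python
from typing import Tuple

PERFORMANCE_MODE_SCALE = 0.5  # per-axis scale implied by the post (1080p -> 540p)


def render_resolution(out_w: int, out_h: int, axis_scale: float) -> Tuple[int, int]:
    """Internal render size for a given output size and per-axis scale."""
    return round(out_w * axis_scale), round(out_h * axis_scale)


def pixel_ratio(out_w: int, out_h: int, axis_scale: float) -> float:
    """How many output pixels each rendered pixel has to cover."""
    rw, rh = render_resolution(out_w, out_h, axis_scale)
    return (out_w * out_h) / (rw * rh)


print(render_resolution(1920, 1080, PERFORMANCE_MODE_SCALE))  # (960, 540)
print(pixel_ratio(1920, 1080, PERFORMANCE_MODE_SCALE))        # 4.0
```

The same scale applied to a 4K output gives a 1920x1080 internal resolution.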

Probably, though we're only a couple of months away from the 30-series cards. It's looking likely that the 3070 will give you approximately the power of a 2080Ti (with less RAM, admittedly) for $499, though no guarantees yet.

A 2080 Ti will give you ~2x a 1080 without DLSS.

With DLSS, even a 2060S will give you ~2x a 1080.

No idea why some still compare DLSS to RIS like it's the same thing or like it's something new

People have been using stuff like Reshade for years.

And NV also has image sharpening at the driver level

Do you feel like DLSS 2 and FidelityFX give comparable results here?

Source: https://wccftech.com/death-stranding-and-dlss-2-0-gives-a-serious-boost-all-around/

The first graph shows the 1080 Ti running 75% as fast as a card that came out 2 years later at over twice the price.

I'm not sure how to interpret the 2nd graph without an apples to apples compare.

Can games save space on textures by relying on DLSS? Since DLSS upscales and supersamples things, aren't the textures in those games also supersampled and upscaled? If so, couldn't developers save space by storing lower-resolution textures and letting DLSS do all the work? I know they probably won't do this since not all hardware supports DLSS, but if they only had one piece of hardware to think about and it supported DLSS, would this be a viable way to save space and shrink down the size of games somewhat?

The second graph shows that the 2060S with DLSS Performance mode runs at ~95 fps (slightly sub-100) at 4K, which is twice as fast as the 1080 (47 fps, first graph).
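The "twice as fast" claim is simple fps arithmetic, and it reads a bit differently as frame times. A sketch using only the two numbers from the post (47 fps and ~95 fps at 4K output); function names are hypothetical:

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def speedup(fps_new: float, fps_old: float) -> float:
    """Ratio of frame rates (equivalently, inverse ratio of frame times)."""
    return fps_new / fps_old


gtx_1080_native = 47.0        # from the post (first graph)
rtx_2060s_dlss_perf = 95.0    # from the post (second graph, approximate)

print(f"speedup: {speedup(rtx_2060s_dlss_perf, gtx_1080_native):.2f}x")
print(f"frame time: {frame_time_ms(gtx_1080_native):.1f} ms -> "
      f"{frame_time_ms(rtx_2060s_dlss_perf):.1f} ms")
```

This gives about 2.02x, or roughly 21.3 ms down to 10.5 ms per frame. It compares the same output resolution, not the same workload: the DLSS number renders internally at a lower resolution, which is the apples-to-apples caveat raised in the thread.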

Basically it's hardware deep learning that interpolates a higher resolution from a smaller one. DLSS 2.0 is so good it's very hard to tell the difference between native and DLSS. You basically get something like 1.5-2.5x the speed and you can get a cheap card to beat a relatively high end one (see my discussion above). You have to have the current gen GPUs or newer to get 2.0.

Previous technologies that improved performance for cheap had lots of catches, like screenshots may look great but in motion the temporal IQ takes a huge hit. DLSS doesn't seem to have as many drawbacks.

So it does seem to provide a pathway to getting the 2x for cheap. There are probably caveats, but I agree the feature is very desirable.

With this tech progressing so rapidly from v1 to v2, if the next-gen consoles don't have a viable alternative, they will be left in the dust pretty quickly.

Hope that's not the case.

Maybe they should have waited one more year. It seems like they're being released right on the cusp of something revolutionary and barely missing it.

Do people think they're going to try and monetize DLSS through a sub?

Impressions are great. Sounds like it's going to be big and too good to be true.

There's always something major in computing tech. Like the last gen narrowly missed cheap SSDs and Ryzen. Before that Moore's law with raw compute would do it every 18 months.

Honestly, that's almost always the case, and there's always something new on the horizon. Last generation it was variable refresh rates, which started to become a thing in 2013 just as the new consoles were coming out.

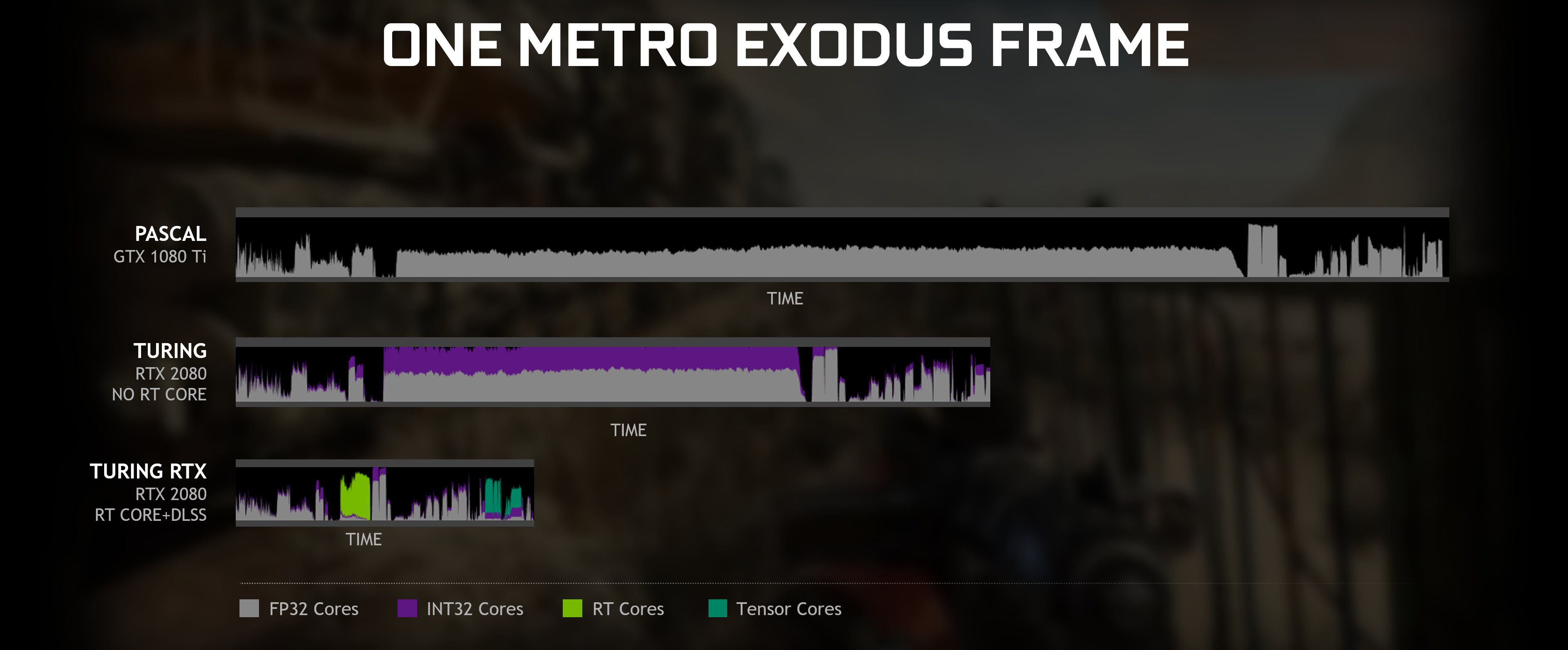

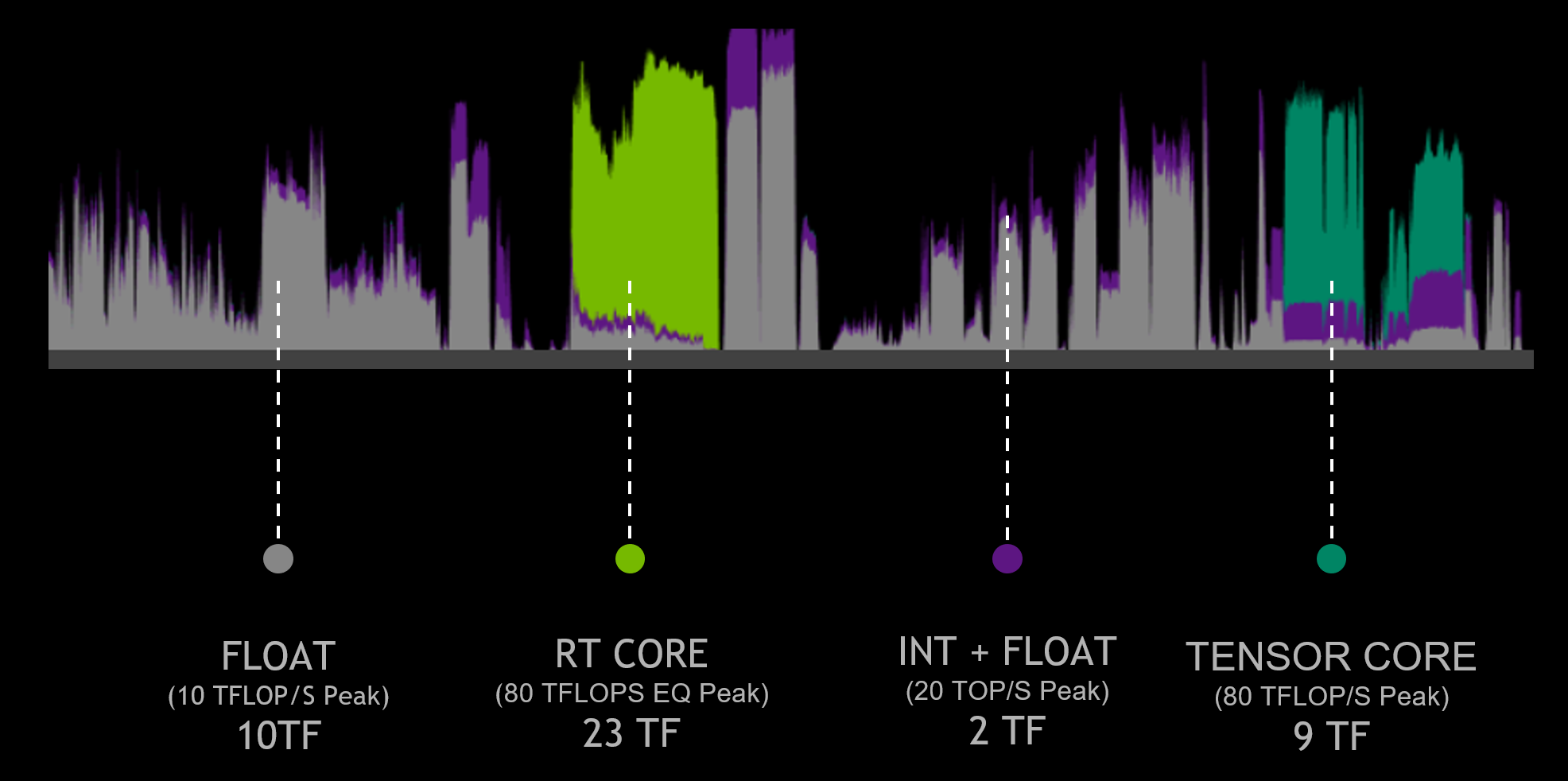

Basically, it renders a lower-resolution frame and then uses AI to construct a sharp higher-resolution image. This is only possible thanks to the dedicated AI processors called Tensor Cores, which are only on RTX graphics cards (and subsequent Nvidia cards from here on). For example, you can have the game render at 1080p and DLSS reconstruct it to 4K without a performance drop. The 4K image constructed by DLSS is comparable to, if not better than, native 4K with TAA.

You can learn more here: NVIDIA DLSS: Your Questions, Answered (www.nvidia.com), in which NVIDIA's Technical Director of Deep Learning answers questions about DLSS in Battlefield V and Metro Exodus.

For those wondering where consoles fit in, there is a DLSS cost:

The 2060 Super's tensor throughput at the bottom of the chart is about 2.5x faster than XSX's RPM and about 3x faster than what PS5's should be. This means that a 4K reconstructed image, even if it can be as fast as DLSS, will still take the XSX over 6ms and the PS5 nearly 8ms. A 60fps window is about 16.7ms and a 30fps window is 33ms, so the consoles might be limited to 30fps for 4K if they use reconstruction to get there.
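The frame-budget arithmetic can be made explicit: a target of N fps leaves 1000/N ms per frame. The sketch below plugs in the post's own estimates (~6ms for XSX, ~8ms for PS5) as inputs; these are the poster's projections, not measurements, and the function names are hypothetical:

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame at a given frame rate, in milliseconds."""
    return 1000.0 / fps


def budget_share(cost_ms: float, fps: float) -> float:
    """Fraction of the frame budget consumed by a fixed per-frame cost."""
    return cost_ms / frame_budget_ms(fps)


# Inputs are the post's rough estimates for 4K reconstruction, not measured values.
estimates_ms = {"XSX": 6.0, "PS5": 8.0}

for console, cost in estimates_ms.items():
    for fps in (60, 30):
        print(f"{console} @ {fps}fps: {budget_share(cost, fps):.0%} of "
              f"{frame_budget_ms(fps):.1f} ms")
```

With those inputs, reconstruction would take roughly 36% (XSX) or 48% (PS5) of a 60fps frame, versus 18% or 24% at 30fps, which is the reasoning behind the 30fps suggestion.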

Meanwhile, it's similar for a Switch successor: with a 1.2 TFLOPS portable mode, it should take a Turing-configured GPU ~4ms to produce a 1080p image from a 540p image, and a 3 TFLOPS docked mode would take 6ms to produce a 4K image. So a Switch successor would be better off targeting 1440p when docked, which would only take around 2.5ms, leaving more time for fancier graphics when docked than in portable mode.
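These Switch-successor numbers look like they scale the cost with output pixel count and inversely with GPU throughput. A minimal sketch of that naive linear model, anchored (as an assumption) on the post's own ~4ms for 1080p at 1.2 TFLOPS; `reconstruction_ms` is a hypothetical name, and real reconstruction cost need not scale this cleanly:

```python
# Reference point taken from the post (an estimate, not a measurement):
REF_MS = 4.0                # ~4 ms ...
REF_PIXELS = 1920 * 1080    # ... to output a 1080p image ...
REF_TFLOPS = 1.2            # ... on a 1.2 TFLOPS (portable-mode) GPU


def reconstruction_ms(out_w: int, out_h: int, tflops: float) -> float:
    """Naive estimate: cost grows with output pixels, shrinks with throughput."""
    pixel_scale = (out_w * out_h) / REF_PIXELS
    speed_scale = REF_TFLOPS / tflops
    return REF_MS * pixel_scale * speed_scale


for name, (w, h) in {"1440p": (2560, 1440), "4K": (3840, 2160)}.items():
    print(f"docked {name}: {reconstruction_ms(w, h, 3.0):.1f} ms")
```

This model gives about 2.8ms for docked 1440p and 6.4ms for docked 4K, close to (not exactly) the post's ~2.5ms and 6ms, so treat both as ballpark figures.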

This is based on Turing, without any improvements to tensor core performance in Ampere, and giving AMD/Sony/Microsoft the same cost as Nvidia's DLSS.

Switch's successor will likely be based on Ampere or Hopper, but the performance numbers given here are really the lowest they could get away with if they want to use DLSS.

Well, there is always work to put in if you want to get the most out of your machine. Or you accept it as it is.

Thanks for the reply. Yeah, I noticed my CPU throttling down from 3.8GHz to an average of 3.3GHz. How does ThrottleStop work?

The issue with my laptop is that it's only an inch thick, so it gets hot. The average CPU temp sticks around 90°C at full load. Reckon it would be safe to un-throttle it?

Your laptop sounds like a beast as well. What laptop do you have, if it has desktop parts in it?

What do you reckon the odds are of playing Cyberpunk on fairly good settings with my 2070 Max-Q using DLSS? I thought it was good until I read that the recent playthroughs were on a 2080 Ti at 1080p WITH DLSS on. Thought at that point my chances of max settings were dashed lol

Redoing the thermal paste is a major thing for gaming laptops, the factory paste really just doesn't do it since we went 6+ cores. Then you will see maximum gains from undervolting the CPU. Then you can push it to run at its full boost of 3.9Ghz on all six cores with no throttling.

I have an MSI GT76. Pure desktop replacement. What make/model laptop do you have?

You'll definitely play Cyberpunk at 60fps at 1080p, especially with DLSS. It's running on the Xbox One and PS4 after all.

The ray-tracing I can't speak on, that will be interesting.

They already monetize it by charging more for RTX cards than for the previous cards.

The difference is that tensor cores are separate, additional hardware. The RPM for AMD runs on the general compute units. Therefore, running a DLSS-alike will use up far more resources that can't be used for the rest of rendering. It's analogous to running raytracing in software as opposed to RT hardware.

DLSS has plenty of drawbacks: it generates just as many artifacts and temporal instabilities as other reconstruction techniques (more than some, fewer than others). Its usefulness derives not from absolute quality, but from achieving that quality while running primarily on otherwise underused tensor cores. It thus has very little impact on general compute, meaning larger performance improvements versus other methods.

People compare DLSS to RIS because in the early days of DLSS, it was a very fair comparison. If you haven't kept up with the massive step up DLSS has taken with 2.0 over 1.x, then it's perfectly understandable why the memories of that old comparison still linger.

The criticisms were quite fair 12-18 months ago. I don't really blame people for shrugging at DLSS 2.0 if they just haven't kept up with just how much it has improved over the 1.x version.

If someone remembered watching videos like this in early 2019 and wrote off DLSS, I get it.

It's mostly up to Nvidia to make their case again. The DLSS 2.0 tech itself does that in spades, but getting the word out to folks is still a bit of a hurdle.

With the inclusion of tensor cores the RTX series cards were always great performers in GPU accelerated model training. While these cards may not have been interesting for the PC gaming community they definitely were a pretty big leap forward in efficiency for modelling. It's great that finally this specialised compute power can be leveraged in improving performance and potential IQ in gaming.

As other people have alluded to, deep learning models are very good at encoding the essence of the input data to generate high dimensional representations from low dimensional states. Doing a quick google of use cases of variational autoencoders and GANs will illuminate the wealth of literature on the power of these methods. DLSS makes complete sense from within the world of generative models and I'm excited to see what will be developed further as GPUs continue to be specialised and improved in the domain of machine learning.

At its heart, DLSS 2 is a temporal AA/upscaler.

Texture detail needs to be in previous frames for it to be visible.

Which is why the image is rendered with mipmap settings that fit the target resolution.

DLSS doesn't change the source textures, so as is the idea for saving storage space wouldn't work.
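The mipmap point can be made concrete. A temporal upscaler can only recover texture detail that was actually sampled in some frame, so integrations commonly apply a negative texture LOD bias so that mip selection matches the output resolution rather than the lower render resolution. A common rule of thumb is log2(render width / display width); treat that formula as an assumption and check the vendor's integration guide for the exact recommended value. `mip_lod_bias` is a hypothetical name:

```python
import math


def mip_lod_bias(render_width: int, display_width: int) -> float:
    """Texture LOD bias that makes mip selection track the output resolution.

    Negative when rendering below display resolution, so sharper mips are
    sampled, as if the frame were being rendered at display resolution.
    """
    return math.log2(render_width / display_width)


print(mip_lod_bias(1920, 3840))  # -1.0: 1080p internal, 4K output
```

Because the sharper mips are still sampled, the full-resolution textures still have to ship with the game, which is why the storage-saving idea doesn't work.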

Looking at comparisons, AMD's CAS 4K actually didn't look too bad against native 4K. DLSS seemed to work like magic with its AA-like effect, though.

I wonder if AMD could repurpose CAS and combine it with a DLSS-like reconstruction technique.

This right here is why I'm glad I didn't listen to people who said to get a 1080Ti instead of a 2070 back around when it came out. This is awesome and the future of gaming at higher resolutions, especially 8K and beyond.

Will this tech apply to VR games in the future? Seems like it'd be perfect for that.

I think one thing that can help people understand why DLSS can look better than native is to consider what it's actually trying to do compared to other upscaling algorithms. Something like checkerboard rendering is trying to look as close as possible to a natively rendered image while actually rendering far fewer pixels. Since the goal is to look like native, the best case scenario would then be if it looked identical to native, but in reality it generally looks slightly worse since it can't reproduce the native image perfectly.
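A simplified per-pixel illustration of the checkerboard idea: each frame shades only half the pixels in an alternating pattern, and the other half has to be filled in from neighbours and the previous frame. Production implementations are considerably more involved; this sketch (hypothetical function names) only shows where the "far fewer pixels" saving comes from:

```python
from typing import List


def checkerboard_mask(width: int, height: int, frame: int) -> List[List[bool]]:
    """True where a pixel is shaded on this frame; the pattern flips every frame."""
    return [[(x + y + frame) % 2 == 0 for x in range(width)]
            for y in range(height)]


def shaded_fraction(mask: List[List[bool]]) -> float:
    """Fraction of pixels actually shaded in a frame."""
    total = sum(len(row) for row in mask)
    return sum(v for row in mask for v in row) / total


even = checkerboard_mask(4, 4, frame=0)
odd = checkerboard_mask(4, 4, frame=1)
print(shaded_fraction(even))  # 0.5
```

Two consecutive frames together cover every pixel once, so a static scene can be fully resolved, while anything that moves has to be reconstructed, which is where checkerboard artifacts come from.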

In comparison, DLSS isn't actually trying to look like a native 4K image though. It's been trained to take lower resolution images and make them look like 16K anti-aliased images. If you were to compare DLSS to a 16K native resolution, I would guess that the end result is noticeably worse than native, but when compared to a native 4K image DLSS is able to reproduce a lot of smaller detail that otherwise might only be visible if you had super sampled your 4K image down from 16K. This is why, even though DLSS can create a few odd artifacts from the upscaling, the overall image quality can still look better than native 4K.

I think the last time AMD even talked about the subject was when they briefly spoke about DirectML running on the Radeon VII, so presumably they would be piggybacking off that.

Even if we discount the hardware side, wouldn't a theoretical AMD competitor still be years behind Nvidia's tech? They've been working on machine learning techniques for like a decade now.

Honestly, this and Nvidia's VRS foveated rendering have me very optimistic about bigger VR experiences that look and perform amazingly, especially as we get higher-resolution VR headsets.

Good to see people finally coming around. When Nvidia announced this tech and their RTX cards, the response was pretty awful.

The response to RTX cards is still awful, and it will stay just as awful if they announce the 3000 series and the 3080 Ti isn't $699.

Something like DLSS 2.0 won't do shit when people can't get over the prices.

As I understood it, DLSS runs mostly sequentially at the end of a frame, rather than in parallel, so that might be less of a problem than you think.

It's far higher quality and more temporally stable than anything I've seen in other games.

The main drawback I've seen is that certain effects suffer from ghosting over many frames; but still far less than other TAA/reconstruction methods.

You will see in an upcoming video that DLSS 2.0, in a direct comparison with checkerboard rendering, does not produce nearly as many ghosts, instabilities or artefacts, not by a long shot. In fact, it crushes checkerboard rendering from a quality and stability standpoint, with fewer real pixels as well. Have you already done the comparison, given that you claim with such certainty and conviction that it is just as unstable, ghosty and artefact-prone?

i hadn't thought of that but.... oh yeah

There is some truth to what you say. But DLSS could be coming to every tier of cards with Nvidia's 3000 series. They might be ready to let go of GTX and the weird 1600 series sidestep and focus on RTX from here on out. Something like a 180€ RTX 3050 Ti could be a possibility.

Not to mention that with most games using TAA these days, they already have temporal artifacts, and a "pristine and clean native image" isn't really a thing anymore. And it does seem that DLSS can get rid of some of those artifacts, even if it has some of its own, like that hair in Death Stranding.