I understand and respect your point of view, but I believe that the overwhelming majority of gamers have vastly different priorities. If you are able to achieve 4K60 at maximum detail, then issues such as the ones you describe do matter. For people who don't have bleeding-edge hardware, the choices are: a) don't use DLSS and upscale from a lower resolution, b) don't use DLSS and drop settings, c) don't use DLSS and put up with bad performance, or d) use DLSS, avoid all that, and perhaps get a slightly altered image.

The choice becomes a true no-brainer if you're using low-end or mid-range hardware. It's no longer a question of how good the game looks and in what way; it's a question of whether the game is playable at decent framerates or not. For most people DLSS is a free graphics card upgrade that saves them a lot of money. They are not going to care about small details that DLSS doesn't get right.

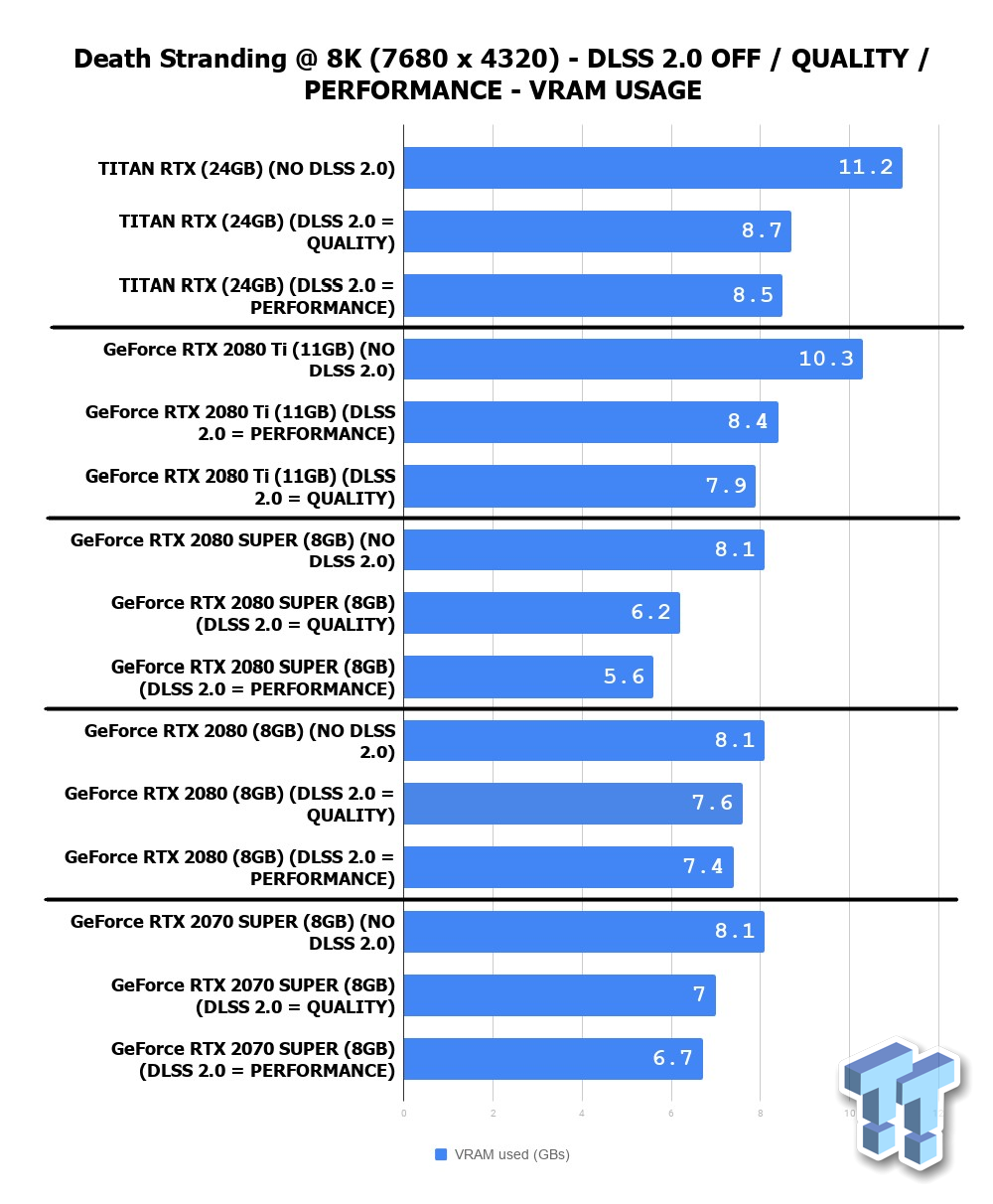

Excellent post. If you value performance, DLSS is a godsend.