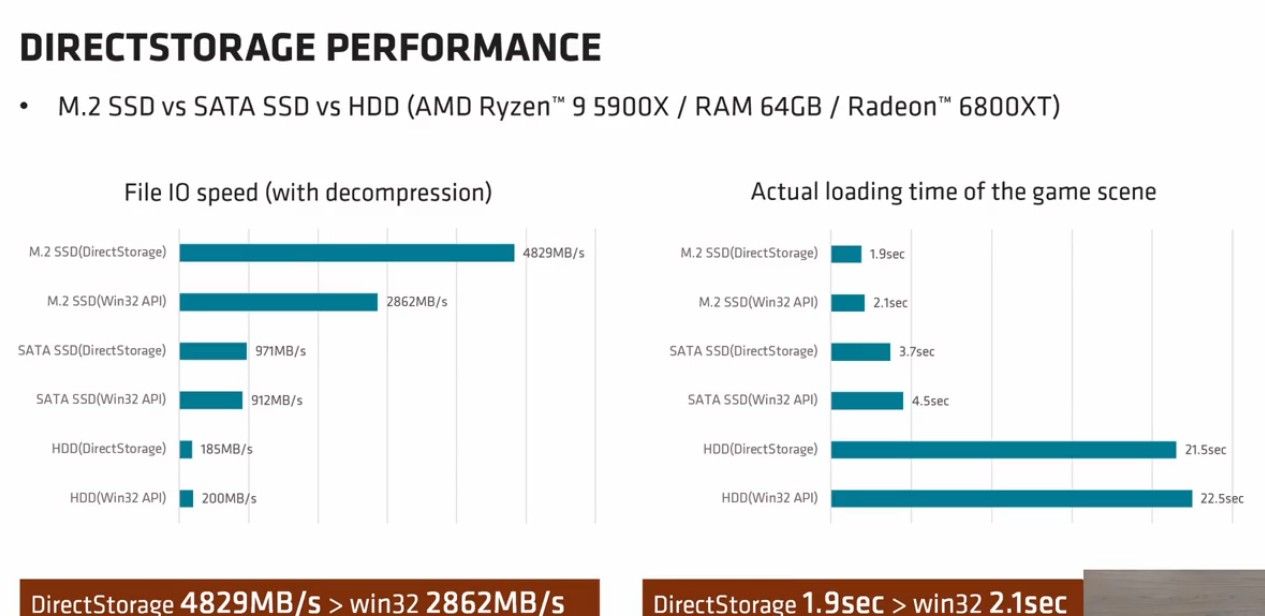

While that is true for Gen 3, what about those that target Gen 4 speeds? From what I've heard, Gen 4 is supposed to be better once it is actually utilized. Otherwise, what would be the point of getting Gen 4 NVMe drives if Gen 3 drives are nearly as good? While I expect Gen 3 to be the norm, certain games will probably use Gen 4 to make those drives worthwhile.

Eh, the Xbox Series NVMe drive is pretty much the same as a PCIe Gen 3 drive for read/write speeds; in fact, it is slower than my PCIe Gen 3 drive. And the 2 lanes of PCIe 4.0 on the Series X give the same bandwidth as 4 lanes of PCIe 3.0 on a PC. So I don't think there is going to be such a dramatic difference, since I am sure devs will go for what's easiest, i.e., having DirectStorage support on PC work much like what the Series X is doing with storage in games.
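For anyone who wants to check the lane math, here is a rough sketch. It assumes the published per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b line encoding both generations use. The function name `pcie_bandwidth_gb_s` is made up for illustration, and these are theoretical link ceilings, not what any particular drive actually delivers.

```python
# Theoretical PCIe link bandwidth, ignoring protocol overhead beyond line encoding.
# Assumed inputs: per-lane signalling rates in GT/s for each generation.
GT_PER_S = {3: 8.0, 4: 16.0}

# Both PCIe 3.0 and 4.0 use 128b/130b encoding (128 payload bits per 130 sent).
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Return the theoretical one-direction bandwidth in GB/s (hypothetical helper)."""
    bits_per_second_per_lane = GT_PER_S[gen] * ENCODING_EFFICIENCY  # Gbit/s
    return bits_per_second_per_lane / 8 * lanes  # convert bits to bytes, scale by lanes


if __name__ == "__main__":
    series_x_link = pcie_bandwidth_gb_s(gen=4, lanes=2)
    pc_gen3_link = pcie_bandwidth_gb_s(gen=3, lanes=4)
    print(f"PCIe 4.0 x2: {series_x_link:.2f} GB/s")
    print(f"PCIe 3.0 x4: {pc_gen3_link:.2f} GB/s")
```

Both links come out to the same ceiling of a bit under 4 GB/s, which is the point above: a Gen 4 x2 link is not wider than a typical Gen 3 x4 slot on a PC.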

