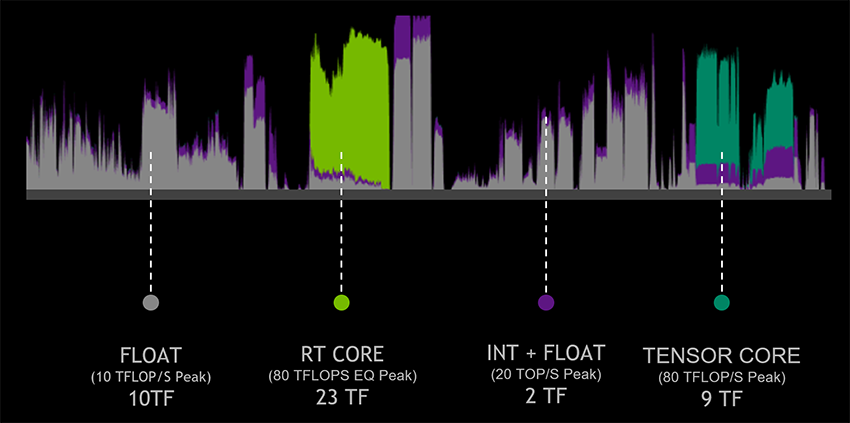

I'm just very glad that both new consoles (and upcoming AMD GPUs) support this, so people can stop pretending it isn't great out of platform preference.

Whatever the performance difference may be, RT will play a key part in next-gen engines.

Agree 100%. RT apparently makes developers' lives a lot easier, and anything that helps reduce crunch and other industry nonsense is great. On average, this should also lead to more games looking awesome. While it's true that top-tier games using traditional workarounds can already achieve results almost as good as RT, the time and budget that takes isn't feasible for 99% of games. RT is great, and I'm glad it's going to be a thing now.