I would have loved Nintendo to release the 16nm Mariko on day one (essentially a TX2 without the Denver cores, with double the RAM and bandwidth of the TX1), but that's 20/20 hindsight for Nvidia. Word has been that Nvidia wanted to get rid of its millions of 20nm TX1 chips anyway, and Nintendo came around and got a sweet discount. Truth be told, even the 20nm TX1 in March 2017 was among the top in mobile GPU performance vs. flagship smartphones, outside of Apple's.

I would love for history to vindicate your view, but I think it helps to always keep expectations for next-gen hardware a bit low. Especially if the company making it errs on the side of building products around manufacturing price and mark-up.

The production timelines could definitely have allowed for the X2 to be in the Switch. I mean, consoles have debuted new architectures throughout their history.

I am positive the reason for the Switch's hardware is that NV had a bunch of HW and a design that did not sell as well as they wanted, and Nintendo was happy to grab that up. It was about price.

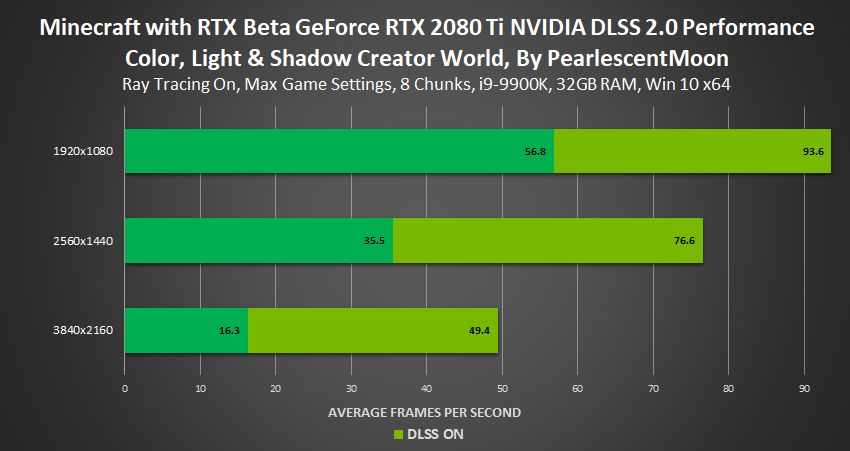

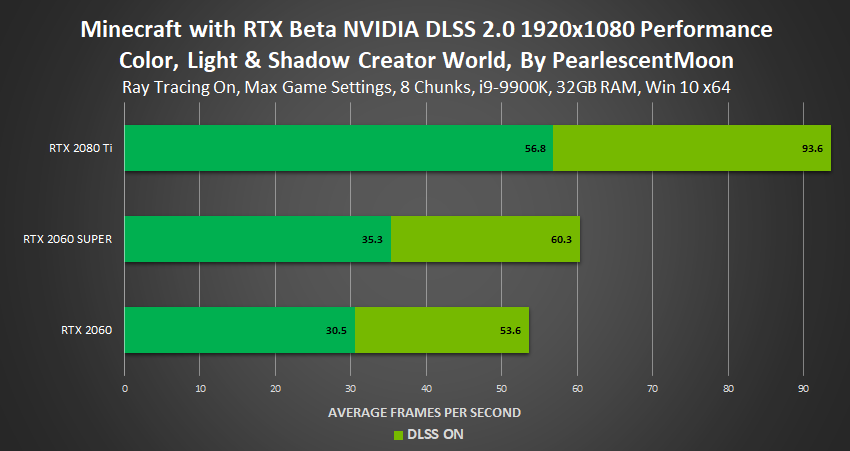

Anyway, Nintendo has been consistently 2-3 years behind in tech since the 3DS/Wii U era at least, so expecting a Switch 2 in 2023 to have 7nm (or even 5nm) Ampere with DLSS, and even a 2-2.5 TFLOPS GPU with A78 cores (or whatever the latest one paired with Ampere ends up being called), isn't far-fetched at all.