I know, but I don't think it'll get "close".

Digital Foundry || Control vs DLSS: Can 540p Match 1080p Image Quality? Full Ray Tracing On RTX 2060?

- Thread starter ILikeFeet

I haven't made any metric for the S2 vs. PS5 yet, and I won't until we actually know what the specifications are.

We're talking about the PS5 and Xbox Series X CPU.

I don't doubt that the A77 will close the gap with the PS5/XSX CPU. Hopefully Switch 2's CPU, Hercules or something around there, has a narrower gap than Switch vs. Xbone/PS4, which was around 2.5x. I think the last time we had a conversation about this, you guys were thinking we'd get around 60% of the PS5/XSX CPU, maybe? That would be crazy good for a handheld/hybrid. As of now, Switch vs. the next-gen CPUs is a 10x performance gap (2.5 x 4). Maybe we'll get current-gen ports for 2 years, but we really are going to rely on Switch 2 for next-gen ports...

I do imagine that it will be a much narrower gap than a blanket "10x" statement, nor do I think multiplying the TFLOPS difference by other metrics is a good way to define it.

This actually looks very good.

Wow this is seriously very impressive. I really hope we get to see this tech on the next Nintendo Switch, seems like a game changer.

Actually they won't be, due to the CPU, but anything on par with current-gen games should be possible.

I think the Switch 2 will support this. PS5 and XSX games should be possible this way.

I actually think Switch -> Switch 2 should see a bigger jump in CPU performance than PS4/XB1 -> PS5/XSX, so I'm not convinced this will be the case.

The same was said this gen, and here we are.

The relative performance of Jaguar versus its desktop contemporaries is different from that of the XSX/PS5 CPUs versus current desktop parts this time, though, and therefore so is their comparison to their mobile counterparts.

But the CPU performance jump from current gen to next gen is at least 4x, and I think it's even closer to 6x.

You forget that Switch is a handheld, and most of its TDP will still go to the GPU, not the CPU. The whole machine can't go past something like 15 W including the screen, and it needs to compete with 30 W CPUs.

Current-gen CPUs were hardly an upgrade in comparison to past gen; that's not the case now.

Question:

So this stuff looks impressive, and it feels like it can only get better from here. It seems that upscaling tech like this can save bandwidth etc. while achieving almost identical image quality (depending on the difference).

My question is: isn't this something that is (or can be) applied in the development stage?

For example, let's say developer X is making a game, and during development they use machine learning to teach the engine what the output should look like.

Say they already have two samples: one at 720p (the resolution they want to run at because of bandwidth, other resources, etc.) and one at 1440p (the desired output). By using machine learning at this stage, the game engine is "taught" how and what to upscale and what the result should look like (because it has already seen a reference image of how it is supposed to look at native 1440p).

The final version is a game that has "all" the benefits of being 720p but looks like 1440p (or whatever the target was). And the freed-up resources could perhaps go towards ray tracing or whatever.

So, is it really a must to have tensor cores or similar in the hardware, or can this kind of tech already be applied at the dev stage, going from machine learning to a "machine-taught" result when the game ships?

(Obviously I'm no dev, just curious to see how this stuff works. It feels like it boils down to algorithms and processing power, and whether it can already be done in the development phase. Perhaps it already works like this... but like I said, I'm no developer.)

The Jaguar in PS4 and Xbox One was always bad in my opinion... Too weak. I think everyone expected more after 8 years of Xbox 360 when the One launched :)

Hmm, I'm interested in why you think it would be closer to 6x.

But to the point: Onix555 tracked the IPC improvements of the ARM CPUs from A57 to A77. The result is a 2.72x IPC improvement from what the Switch currently has (A57) to what a Switch 2 most likely has (actually, A78 is more likely imo because NVIDIA already are using that chip in their future Orin product, but let's stick with what we have numbers for here). That's the expected improvement at similar clock speed. Because of node efficiencies, the power draw at the 1 GHz level is also vastly smaller than the original A57 cores draw. The A77's can clock higher than the A57 as a result, and a 1.9 GHz frequency for the A77 should give a lower power consumption than the A57 according to AnandTech:

AnandTech said:

Looking at the SPEC efficiency results, they seem more than validate Arm's claims. As I had mentioned before, I had made performance and power projections based on Arm's figures back in May, and the actual results beat these figures. Because the Cortex A76 beat the IPC projections, it was able to achieve the target performance points at a more efficient frequency point than my 3GHz estimate back then.

The results for the chip are just excellent: The Kirin 980 beats the Snapdragon 845 in performance by 45-48%, all whilst using 25-30% less energy to complete the workloads. If we were to clock down the Kirin 980 or actually measure the energy efficiency of the lower clocked 1.9GHz A76 pairs in order to match the performance point of the S845, I can very easily see the Kirin 980 using less than half the energy.

As mentioned in the quote, the A76 (in the Kirin 980) draws 2.14 W, and its standard configuration is 4 Arm A76 cores (2 at 2.6 GHz and 2 at 1.92 GHz) along with 4 A55 cores. The comparison chip, the Exynos 5433 (see below), has 4 A57 cores and 4 A53 cores, but the test results shown below try to isolate the A57s as much as possible by keeping the A53s as idle as possible. That isn't completely possible in practice, but it gives us a decent idea.

With the setup as described above, the Kirin 980 draws 2.14W between 2 A76 cores at 2.6 GHz, 2 A76 cores at 1.92 GHz, and 4 A55 cores at 1.8 GHz. Even if we fully ignore the A55 core contribution, the 2.14W being split between 2 A76s at 2.6 GHz and 2 A76s at 1.92 GHz would translate (using the square law of power draw vs. frequency) to 1.64W approximately if we were to convert that to 4 A76 cores running at 2 GHz (see the math at the bottom of the post in the addendum) - and that's without accounting for the extent of the utilisation of the A55 cores in the Kirin 980 and their share in the 2.14W of power draw, but I will drop that consideration in favour of making the point without introducing difficulties into the analysis. So, clocking the A76 cores at 2 GHz appears to consume less power (1.64W) than 4 A57 cores running at 1 GHz (1.83W). As a result, the expectation of running the A76/A77/A78 cores at 2 GHz seems quite reasonable from the above argument to me.

The conclusion, then, would be that the Switch 2 would be able to run A77 or A78 at 2 GHz or more, assuming roughly the same power budget is allocated for the CPU. So, we have a 2.72x IPC improvement along with a 2x frequency improvement (using the A77), which indicates a 5.4x increase in raw CPU power, which is better than the number that Microsoft have cited (and close to your expected performance increase). If they use the A78 (which is on 5nm instead of the A76's and A77's 7nm), then they would be able to get to 6x or better I believe.
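For anyone who wants to play with the numbers, here is a minimal Python sketch of the estimate above. It assumes, as the post does, that per-core throughput scales linearly with both IPC and clock speed, which ignores memory bandwidth, cache and thermal limits; the function name is just for illustration.

```python
def relative_cpu_throughput(ipc_ratio: float, new_clock_ghz: float, old_clock_ghz: float) -> float:
    """Idealized per-core speedup: IPC gain times clock gain.

    Assumes performance scales linearly with both factors, which is the
    simplification used in the post, not a measured result.
    """
    return ipc_ratio * (new_clock_ghz / old_clock_ghz)


# A57 @ 1 GHz (Switch) -> A77 @ 2 GHz, using the 2.72x IPC figure quoted above
speedup = relative_cpu_throughput(2.72, 2.0, 1.0)
print(f"{speedup:.2f}x")  # 5.44x
```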

Note: the numbers here are for the A76, not the A77. The A77 should perform better than the A76, and the A78 even better than that.

Addendum:

Let A be the power draw at 1 GHz for a single core. We know that:

P_kirin980_A76 = 2 * 2.6 * 2.6 * A + 2 * 1.92 * 1.92 * A = 20.89 * A = 2.14 W (because power consumption is taken to scale with the square of the frequency)

A = 2.14 / 20.89 ≈ 0.102 W

Now, we clock all 4 A76 cores at 2 GHz:

P = 4 * 2.0 * 2.0 * A = 16 * 0.1024 W ≈ 1.64 W
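A small Python sketch of the addendum's arithmetic, under the same simplifying assumptions: every A76 core shares one coefficient A, per-core power scales with the square of the clock, and the A55 cores' share of the 2.14 W is ignored. Real chips also scale voltage with frequency, so treat the result as a rough estimate rather than a measurement; the helper names are hypothetical.

```python
def per_core_coefficient(total_watts, cores_and_clocks):
    """Solve total = A * sum(n * f^2) for A (per-core watts at 1 GHz)."""
    weighted = sum(n * f_ghz ** 2 for n, f_ghz in cores_and_clocks)
    return total_watts / weighted


def cluster_power(a_coeff, n_cores, f_ghz):
    """Power of n identical cores at f GHz under the square-law assumption."""
    return n_cores * f_ghz ** 2 * a_coeff


# Kirin 980 big cores: 2 x A76 @ 2.6 GHz + 2 x A76 @ 1.92 GHz drawing 2.14 W
a = per_core_coefficient(2.14, [(2, 2.6), (2, 1.92)])
print(f"A = {a:.4f} W")                          # A = 0.1024 W
print(f"P = {cluster_power(a, 4, 2.0):.2f} W")   # P = 1.64 W
```

Keeping A unrounded matters here: plugging in a rounded 0.10 W would give 1.60 W instead of 1.64 W.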

Tensor cores aren't required, as Control showed, but that implementation wasn't great, as the artifacting was immense.

Nope. I mean, devs can try creating assets that can be upscaled better by some specific AI-based algorithms, but that won't prevent upscaling on other machines; the quality would just be worse.

Yeah, the Jaguar architecture was designed for low-power devices, such as budget tablets and entry-level laptops, not gaming systems, and unfortunately for MS and Sony, it was the best SoC-friendly architecture AMD had to offer at the time.

Jaguars ran at 1.6 GHz in current-gen consoles vs. 3.5 GHz for the next-gen CPUs, and games could only use 6.5 cores, whereas next gen will probably allow 7.5; that's a 2.5x difference right there. Next-gen consoles also have multithreading, which is like a 50-60% speed-up, so 2.5 * 1.5 = 3.75x.

With the IPC of Zen 2 being something like 1.7-2.3x better than Jaguar's, based on the post below: 3.75 * 1.7 = 6.375x or 3.75 * 2.3 = 8.625x. That's of course fully theoretical, but even reducing this by 20% gives you at least a 5x performance boost, and it could be greater.

r/Amd - What's the difference in IPC between jaguar and zen/zen+?

Remember that the Switch CPU was already at least 2x slower than the Jaguars.

2023? Oh come on :)

Just think about it, guys. Current-gen consoles started with laptop-based CPUs; next-gen consoles start with desktop-based CPUs. That's a big difference right there. ARM CPUs are amazing, but not amazing enough to achieve 50% of the performance of 30 W Ryzen CPUs with 3-4 W :)

Not to be a downer, but do you think Nintendo would use "the latest and greatest" technology? They have been utilising something that is generations old each time since the Wii: 14 years of purposefully not targeting the latest technology.

The ARM CPU in the Switch is built on planar 20nm, uses the very inefficient 4 A57 cores at just 1GHz, and draws 1.8 watts. The jump to just 4 A73 cores at 1.5GHz offers twice the performance at 1.1 watts. Moving to 4 A76 at 2GHz offers twice the performance again at around that same wattage on 10nm. Hercules actually should offer about 6x the performance of the current Switch CPU per core and come in at half the power consumption. That means you could get 8 cores with less power consumption than the launch Switch, and you can add 4 A55 cores, all at around 2 watts of power consumption.

The rough napkin math I have is 8 A78 cores @ 2.4GHz + 4 A55 cores @ 2GHz should use less than 2 watts, especially if we are talking about a 5nm 2023 Switch 2. I'd also use 2 of the A55 cores for the OS and offer developers 2 A55 cores @ 2GHz, which is about 33% faster than 2 A57 cores in the launch Switch. You could also down clock those A55 cores to 1.5GHz for Switch gen 1 games and give the device 10+ hours of battery life while playing Zelda.

Combined with DLSS 2.0, Switch 2 will handle next gen far better than the current Switch handles current gen.

We are talking about 3-year-old tech by 2023 at least. Switch was originally coming out holiday 2016 with a 20nm 2 billion transistor SoC, just 2 years after Apple's 20nm 2 billion transistor A8 SoC. Not talking about new tech here at all. In 3 years we are projected to be using a 3nm process node with well over 20 billion transistors on 100mm^2.

Yeah, all those people hoping for DLSS, 4K, HDR or an SSD in Switch 2 will likely be disappointed.

The X1 was the latest and greatest from Nvidia though; the X2 wasn't even out for partners until after the Switch launched.

I would love for history to vindicate your view, but I think it always helps to temper expectations for next-gen hardware, especially if the company making it errs on the side of producing products based on their manufacturing price and mark-up.

The production timelines could definitely have made it so the X2 was in the Switch. I mean, consoles have debuted new architectures throughout their history.

I am positive the reason for the Switch's hardware is very much related to the idea that NV had a bunch of HW and a design which did not sell as much as they wanted, and Nintendo was happy to grab that up. It was about price.

DLSS is actually a given, as all new mobile GPUs from Nvidia have Tensor Cores.

Honestly, people said the same thing during Switch speculation; tech heads in the NX threads on the old site were talking about ARM A15 CPU cores, and even A12 was being thrown around with head nods. Nintendo uses withered technology, but if you look a bit deeper into the Wii U, you'll see that their goal of using a 1990s processor and keeping 1T-SRAM (which forced manufacturing onto NEC) had more to do with the Wii U's lack of performance than just 'well, it's Nintendo'. In fact, someone who is an expert on die shots speculated that the MCM used in the Wii U must have cost Nintendo over $110 to manufacture. Meanwhile, thanks to their financial reports, we also know that Nvidia's deal with Nintendo broke down to ~$55 per Tegra X1 SoC. The reason this is important IMO is that the Wii U actually cost Nintendo more to make than the Switch; it was even known at launch that each unit sold at a loss for both the $299 and $349 models, whereas the Switch has never sold at a loss as far as we know.

In the end, we are all just guessing, but we shouldn't be looking at 5nm tech for 2023 as new. I'd even say it is a bit older than Nintendo generally uses, including with the Wii, which launched on 90nm in 2006.

Thanks for the post, that's very interesting stuff! From DF's coverage, I believe they cited the multithreading advantage at around 30%, rather than 50-60%. Computing with that number, we'd get a bump of:

(lower end) 3.5/1.6 * 7.5/6.5 *1.30 * 1.7 = 5.58x

(higher end) 3.5/1.6 * 7.5/6.5 * 1.30 * 2.3 = 7.55x
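The same chain of multipliers can be written as a short Python sketch, assuming (as both posts do) that the clock, usable-core, SMT and IPC gains simply multiply together. The SMT gains (30% from DF, 50% from the earlier post) and the 1.7-2.3x IPC range are the thread's figures, not measurements; the function name is hypothetical.

```python
def generational_cpu_uplift(old_clock, new_clock, old_cores, new_cores, smt_gain, ipc_ratio):
    """Multiply clock, usable-core, SMT and IPC ratios into one idealized uplift."""
    clock_ratio = new_clock / old_clock   # 3.5 GHz / 1.6 GHz
    core_ratio = new_cores / old_cores    # 7.5 usable cores / 6.5 usable cores
    return clock_ratio * core_ratio * smt_gain * ipc_ratio


for smt_gain in (1.30, 1.50):
    low = generational_cpu_uplift(1.6, 3.5, 6.5, 7.5, smt_gain, 1.7)
    high = generational_cpu_uplift(1.6, 3.5, 6.5, 7.5, smt_gain, 2.3)
    print(f"SMT +{smt_gain - 1:.0%}: {low:.2f}x to {high:.2f}x")
# SMT +30%: 5.58x to 7.55x
# SMT +50%: 6.44x to 8.71x
```

Without rounding the clock-times-cores factor down to 2.5, the 50% SMT case lands slightly above the earlier post's 6.375x-8.625x range.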

Still a massive effective bump for sure! Switch 2 probably needs to have more than the 4 cores, as z0m3le suggested for a 2W performance level (but since deleted?). For that, the A78s and the 5nm process are probably required. The math on the A78 is still speculative since we don't have exact performance numbers yet, but perhaps Z0m3le has some idea about the math for an 8-core A78 setup?

Well, with the Switch 1, the CPU technology as well as the process node went to market in 2014. That's a similar timeline to what we would have with the A78 (coming out in 2020 DV) for a Switch 2 in 2023.

Whether it will be used remains to be seen, of course, but doing so wouldn't necessarily break a tradition imo, since the Switch used technology of similar age. Additionally, NVIDIA are already working with the A78 for their Orin automotive part, so that could make using the A78 a bit more likely I think.

Fake edit: Saw your other post. We'll see what NVIDIA and Nintendo come up with, I guess, in order to see if they will have a more impressive or less impressive piece of tech than the Tegra X1 was at the time the Switch 1 launched. What would your personal expectation be for the CPU used for a 2023 Switch 2 device? Do you think A77 is possible (2019 tech) or do you think we should go further back?

That's true: the PS5/XSX are an example of debuting new tech (or at least launching alongside it). But the speculated specs above don't really fit that bill imo: we are looking at tech that will be 2.5-3 years old by the time the Switch 2 is speculated to launch, whereas Parker was only unveiled in 2016, so a year before the Switch 1 launched. It gives a bit of breathing room for NVIDIA and Nintendo to use some older tech and still meet the specs speculated in my (and others') posts.

I think it's also important to keep in mind that Nintendo's and Nvidia's partnership only started with the Tegra X1. Given the Wii U, Nvidia and Nintendo were likely uncertain about the Switch's success, and thus they used a downclocked Tegra X1 SoC rather than something custom, to keep profits as high as possible in case the Switch wasn't successful either.

Now with the Switch being a huge success, Nvidia could actually build a custom SOC for the Switch, maybe based on the Ampere architecture in late 2021 or 2022. Third Party ports also have good success on Switch, meaning a lot of people want AAA games on the go, and only a Switch 2 with a decent enough SOC can deliver that.

But the NX was announced 2 months after the X1 was announced?

Your post had better math; mine was estimated on the fly. I'd suggest a 2 to 2.5 watt CPU budget for Switch 2. DLSS should save some breathing room for portable clocks on the GPU side: something like 1280 CUDA cores (as I've suggested in the past) @ just 614MHz would give almost 1.6 TFLOPs of performance, and if you are rendering at 540p and upscaling to 1080p on a 7-inch screen, you'd have plenty of room there.
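The TFLOPS and resolution figures can be checked with a few lines of Python. This sketch assumes the usual peak-FP32 convention of one fused multiply-add (2 FLOPs) per CUDA core per clock; the 1280-core, 614 MHz configuration is the post's hypothetical, not a known spec, and the function names are illustrative.

```python
def fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Peak FP32 throughput, counting one FMA (2 FLOPs) per core per clock."""
    return cuda_cores * 2 * clock_mhz * 1e6 / 1e12


def pixel_ratio(src: tuple, dst: tuple) -> float:
    """How many output pixels each rendered pixel has to cover."""
    return (dst[0] * dst[1]) / (src[0] * src[1])


print(f"{fp32_tflops(1280, 614):.2f} TFLOPS")   # 1.57 TFLOPS
print(pixel_ratio((960, 540), (1920, 1080)))    # 4.0
```

So 540p to 1080p means rendering a quarter of the output pixels and letting DLSS reconstruct the rest.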

Until we know Hercules' power consumption, we really don't have much of an idea where the clock could be for, say, 8 cores, but if your budget is 2.5 watts, you should be able to get to ~1.8GHz on 8 cores with 4 A55 cores at 2GHz easily enough. It might be possible to push that to 2GHz; hard to say until we have seen 5nm Hercules.

For the overall performance of Switch 2, we should really be looking at transistor counts IMO. 7nm ~100mm^2 should be 8+ billion transistors. 5nm ~80mm^2 should be ~11 billion transistors. *This estimation is under the assumption that Nvidia and Nintendo will spread the transistors out like they did with Tegra X1. Both Apple A8 and Nvidia Tegra X1 use TSMC's 20nm planar process node, and both have 2 billion transistors, but Apple's die is 89mm^2, while Nvidia's is 121mm^2. That was likely done to reduce heat on a bad node for higher clocks, but it is also just generally done with big-die architectures like Maxwell; their design was likely copied over from a desktop architecture's transistor setup. I'm not a hardware engineer though, so I'm just thinking it was a good thing that they went with so much space between the transistors, or the chip might have had very bad issues hitting even 600MHz on the GPU.

Nvidia was tied into a contract with TSMC to purchase a bunch of 20nm chips, Nintendo was the solution, and Nintendo got them at about half the price of Wii U's MCM, so really great deal.

Regardless of what Switch 2 has behind the screen, downports from PS5 will be a key factor in deciding the console's hardware, considering how important they were to the Switch's lineup, especially in Nintendo's home market of Japan.

I've heard that, and it makes a lot more sense than accidentally making millions of chips without a buyer, however both parties would know that discounted chips wasn't a long term solution.

Nintendo could have very easily gone with the Tegra K1 processor. It was a 2014 release, Nintendo was targeting 2016 and they could have shrunk it to fit 20nm. The real issue with going with 16nm at the time was Nvidia's deal with TSMC forced a very low price for Switch's SoC on 20nm. Nintendo did look into 16nm and just thought it would cost too much. Mariko would have been the result though, not Parker.

Semi-Accurate did publicly report it with the initial Nvidia is the NX supplier article. I would suggest that first article is about the most accurate piece of NX reporting we ever got, as the information never changed, though a lot of people don't realize how fluid a lot of stuff behind the scenes really is. People report good info that never comes to light, and that is just how the business works, but yeah Nvidia had to produce around 20 Million 20nm chips, and Mariko started showing up in the firmware around the 20 Million sales mark for Switch.

Lastly, I'll point out that because process nodes cost so much to initially bring to mass market, foundries get business contracts from companies before the process is ready, as investments for it to hit the market. A while back AMD paid GlobalFoundries something like 350 million dollars to get out of a fab contract, so that sort of thing was hanging over Nvidia's head when they came to Nintendo to produce the 20nm Tegra X1.

Didn't really expect a lol Nintendo post from someone from Digital Foundry.

I don't really remember the Tegra X1 being "generations old", and the fact that it allows for some big ports from consoles of the same generation basically vindicates it.

Also, even when they go with the withered technology angle on the graphics side, they usually combine it with tech that helps them reach their objective better, like the autostereoscopic screen for the 3DS or the 5 GHz Wi-Fi that allows for lagless video transfer between the Wii U and the GamePad.

DLSS can help them keep their vision of a small form factor hybrid console, and since they're partnering with Nvidia they will surely take it (and probably add RTX to the mix for feature parity).

I do not think I am trolling at all or being flippant. We were talking about CPU foundations and differences.

I am not talking about GPU features here.

Call me idiotic but how do I ensure the best quality ray tracing on 1440p?

What do you mean?

As a one-time deal, it makes sense. I figure Nvidia was convinced enough of the product to want a longer-term relationship, and the Switch's success justified that desire. Now they have a foot in the door to leverage everything they learned to make a technically proficient mobile chip.

Also, I think people are focused too much on "Nintendo uses old tech" and not enough on the circumstances surrounding what they use. Nintendo uses "old tech", but they primarily use off-the-shelf products that are available at the time. Even with the 3DS, they attempted to use the Tegra 2 (the Tegra 3 was available the same year as the 3DS). The Tegra X1 was released ~2 years prior to the Switch. What's available ~2 years before 2023? Nothing. Nintendo and Nvidia will have to make something for that (or Nintendo would have to ditch Nvidia). Coincidentally, Nvidia announced a new ARM/Nvidia device for 2023. This is quite similar to how Nintendo chose the X1 before it was available.

I don't think you are either, but a 1GHz A57 quad-core CPU on 20nm will very easily see huge gains (5x or more) from A76/A77/A78 on a process node that is literally 3 or 4 generations newer (20nm to 16nm to 10nm to 7nm to 5nm).

Just think of the math here, 80mm^2 even on 7nm should have as many transistors as XB1X (7 Billion), and that is a low ball estimate of the Switch 2 SoC. You're also looking at higher clocks thanks to power curves. 3x performance gain per clock and a 50% clock increase is imo the low end here. We see from Mariko just how much better the Tegra X1 performed on just the jump from 20nm process to 16nm/12nm process, and you are looking at 2 or 3 more generations of newer nodes.

The PS5 and XSX CPUs are comparable to the 8-core Zen 2 CPU in the 4900HS laptop APU, which boosts to 4.3GHz, comes with a 7CU? GPU at 1.5GHz and has a TDP of 35W. The CPU isn't using some 30W by itself at 3.5GHz (PS5). It's probably under 10 watts for just the CPU.

2023? Oh come on :)

Just think about it, guys. Current-gen consoles started with laptop-based CPUs; next-gen consoles start with desktop-based CPUs. That's a big difference right there. ARM CPUs are amazing, but not amazing enough to reach 50% of the performance of 30W Ryzen CPUs on 3-4W :)
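That claim can be restated as a performance-per-watt requirement: delivering 50% of a 30W CPU's performance on 3-4W needs roughly 3.75x to 5x better performance per watt. The sketch below only does that division; the 30W figure is the one from the post and is contested elsewhere in the thread (a lower CPU-only figure would shrink the required gain proportionally). The function name is hypothetical.

```python
# Perf-per-watt gain a low-power chip would need to deliver a given fraction
# of a reference CPU's performance at lower power.
# Wattages are the figures quoted in the thread, not measurements.


def required_perf_per_watt_gain(relative_perf: float,
                                low_power_watts: float,
                                reference_watts: float) -> float:
    """Ratio of the perf/W needed to the reference chip's perf/W."""
    power_fraction = low_power_watts / reference_watts
    return relative_perf / power_fraction


if __name__ == "__main__":
    for watts in (3.0, 4.0):
        gain = required_perf_per_watt_gain(0.5, watts, 30.0)
        print(f"50% of a 30W CPU at {watts:.0f}W -> {gain:.2f}x better perf/W")
```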

Huh, interesting, I always thought the XB1X was on 12nm, but it's on 16nm just like the PS4 Pro. 16nm -> 7nm is a 70% reduction in area, though, so wouldn't the corresponding Switch 2 chip be about 108 mm² (359 mm² × 0.3 = 107.7 mm²)?

Edit: That's for the standard 7nm, though. The 7nm+ node (which I believe TSMC are moving on from in favour of 5nm) gives an additional 15% density, so at the same transistor count, that would reduce the size to about 94 mm².
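The two area estimates above can be reproduced with a naive shrink calculation. This is a sketch that assumes the whole die scales uniformly by the poster's 0.3 area factor and 1.15 density bonus; real chips don't scale that way (SRAM, analog and I/O blocks shrink less than logic), so treat the results as ballpark figures. `scaled_area` is a hypothetical helper.

```python
# Naive die-area scaling: apply the poster's 16nm -> 7nm area factor, then
# optionally divide by an extra density gain (e.g. 7nm+).
# Assumes uniform scaling across the whole die, which is a simplification.

XB1X_DIE_MM2 = 359.0  # Xbox One X SoC on 16nm, as quoted in the post


def scaled_area(area_mm2: float, area_factor: float,
                extra_density: float = 1.0) -> float:
    """Estimated die area after a node shrink."""
    return area_mm2 * area_factor / extra_density


if __name__ == "__main__":
    n7 = scaled_area(XB1X_DIE_MM2, 0.3)             # "70% reduction in area"
    n7_plus = scaled_area(XB1X_DIE_MM2, 0.3, 1.15)  # "additional 15% density"
    print(f"7nm:  {n7:.1f} mm^2")
    print(f"7nm+: {n7_plus:.1f} mm^2")
```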

High clocks require more room between transistors. Switch 2 won't have 3.5GHz CPUs or 2.23GHz GPUs, so it can pack the transistors closer together. A better way to estimate a Switch 2 SoC on 7nm is to look at Apple's use of TSMC's 7nm process in last year's A13 SoC, an 8.5 billion transistor die on 98mm^2. That works out to 7 billion transistors in about 80mm^2.
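The A13 comparison boils down to a density calculation: divide the reference transistor count by its die area, then ask how much area a given transistor budget would need at that density. A minimal sketch, assuming a Switch 2 SoC could reach A13-like density (similar clocks and cell libraries), which is the poster's premise rather than a known fact; `area_for_transistors` is a hypothetical name.

```python
# Density-based area estimate using the A13 figures quoted in the post.
# Assumes the same density is achievable on the target chip.

A13_TRANSISTORS_BN = 8.5  # billions of transistors
A13_AREA_MM2 = 98.0


def area_for_transistors(target_bn: float,
                         ref_bn: float = A13_TRANSISTORS_BN,
                         ref_area_mm2: float = A13_AREA_MM2) -> float:
    """Area (mm^2) needed for target_bn billion transistors at the reference density."""
    density_bn_per_mm2 = ref_bn / ref_area_mm2
    return target_bn / density_bn_per_mm2


if __name__ == "__main__":
    density_mtr = A13_TRANSISTORS_BN * 1000 / A13_AREA_MM2
    print(f"Reference density: ~{density_mtr:.1f} MTr/mm^2")
    print(f"7B transistors (XB1X-sized budget): ~{area_for_transistors(7.0):.1f} mm^2")
```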

Is this right when Series X has only twice that @360mm^2?

Edit: saw your next post. They are both on TSMC's newer process, so is that the reason larger chips have fewer transistors per mm^2?

Well, the Xbox One X had a CPU frequency of 2.3 GHz and a GPU frequency of 1.172 GHz, which is quite similar imo to what we might expect from Switch 2 in docked mode. But I agree that it's probably better to look at a 7nm comparison point, and that A13 chip does seem to indicate what you mentioned.

I don't think it's a larger chip per se so much as a chip with higher CPU/GPU frequencies. Power draw (and heat production) rises much faster than frequency itself, since higher clocks also need higher voltage, so a lot of extra heat is produced at higher frequencies, requiring more spacing between the transistors to prevent them from melting. A Switch chip would use GPU frequencies of just over 1GHz at most instead of 1.78 GHz, and CPU frequencies of about 2 GHz instead of 3.8 GHz, so that's quite a difference. The same holds for the XB1X, which has significantly lower clocks than what the XSX pushes.

Edit: Ah, Zombie already said the same.
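The frequency/power argument above can be illustrated with the usual first-order CMOS dynamic power model, P ≈ C·V²·f. At fixed voltage, power falls linearly with clock; if voltage also drops in proportion to frequency, power falls roughly with the cube. This sketch holds capacitance constant and ignores leakage, and real chips hit a voltage floor, so the truth lies somewhere between the two columns. The clock ratios are the figures from the post; `relative_dynamic_power` is a hypothetical helper.

```python
# First-order CMOS dynamic power model: P ~ C * V^2 * f.
# Capacitance is held constant and leakage is ignored, so this only shows
# how power trends as clocks (and possibly voltage) drop.
from typing import Optional


def relative_dynamic_power(freq_ratio: float,
                           voltage_ratio: Optional[float] = None) -> float:
    """Power relative to baseline for the given frequency/voltage ratios.

    If voltage_ratio is None, voltage is assumed to scale in proportion to
    frequency, which makes power roughly cubic in frequency.
    """
    if voltage_ratio is None:
        voltage_ratio = freq_ratio
    return voltage_ratio ** 2 * freq_ratio


if __name__ == "__main__":
    gpu_ratio = 1.0 / 1.78  # "just over 1GHz" vs 1.78 GHz, from the post
    cpu_ratio = 2.0 / 3.8   # "about 2 GHz" vs 3.8 GHz, from the post
    for name, r in (("GPU", gpu_ratio), ("CPU", cpu_ratio)):
        fixed_v = relative_dynamic_power(r, 1.0)
        scaled_v = relative_dynamic_power(r)
        print(f"{name}: fixed voltage {fixed_v:.2f}x, scaled voltage {scaled_v:.2f}x power")
```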

Of course it has to be. I'm wondering if the gap will be narrower than the current gen's 2.5x. Only time will tell.

I haven't made any metric for the S2 vs PS5 yet, and I won't until we actually know what the specifications are.

I do imagine that it will be a much narrower gap than a blanket "10x" statement, nor do I think multiplying the TFLOPS difference by other metrics is a good way to define it.

That's certainly one way to read it...

Didn't really expect a lol Nintendo post from someone from Digital Foundry.

But it does; it actually takes even more than 30W, sometimes 50W.

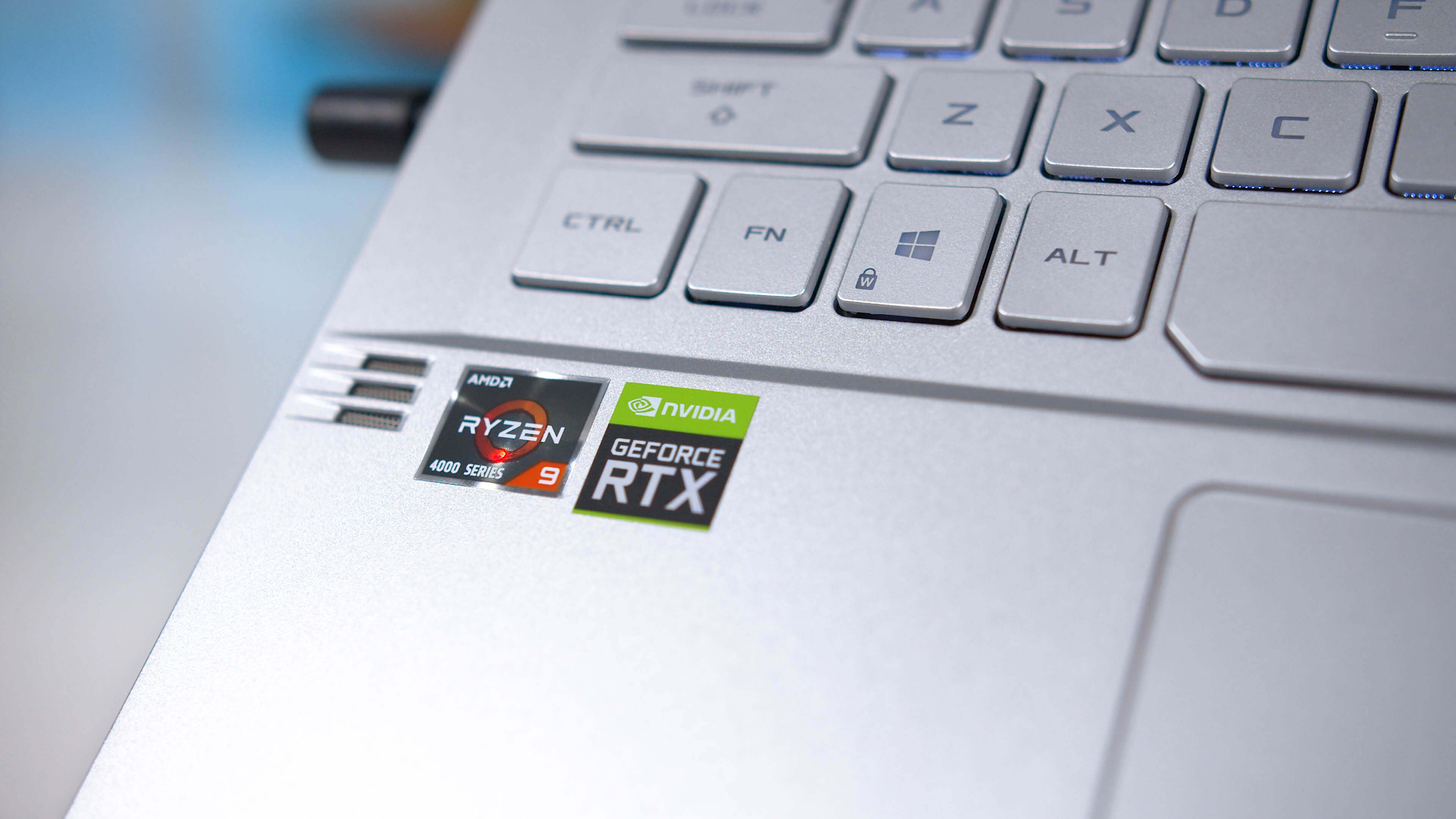

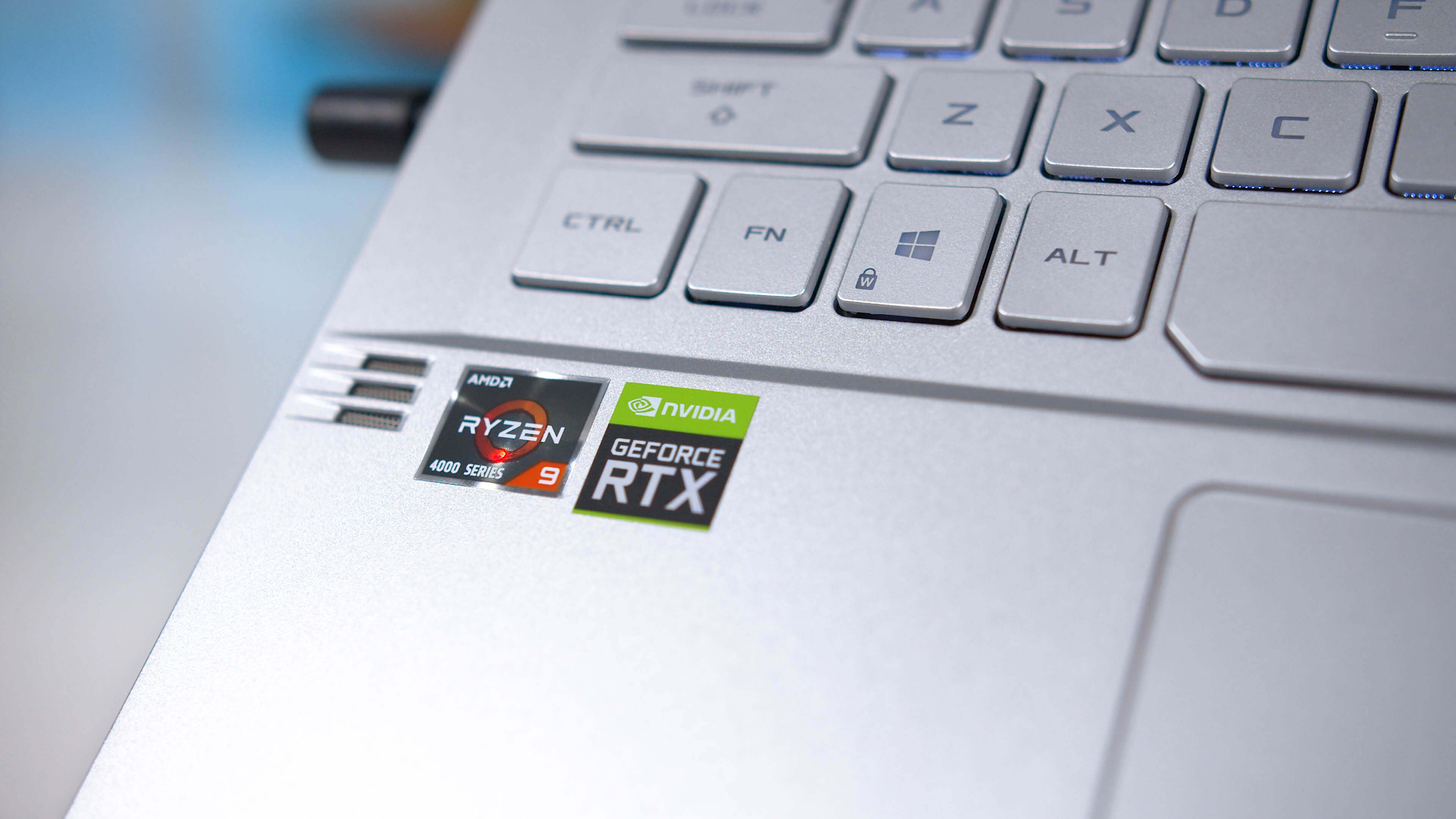

AMD Ryzen 9 4900HS Review

Mobile computing has become the next big target at AMD with its new series of Ryzen 4000 APUs. Today we have the first retail Ryzen 9 4900HS...

www.techspot.com

AMD's most important product ever - Ryzen 9 4900HS

"The difference in power draw at the wall for these two systems is incredible: the G14 with the 4900HS ran comfortably at around 66W long term, compared to 150W for the power boosted 9880H in our HP Omen 15 test system." Those numbers you are citing for the G14 laptop are for the entire system, not just the CPU.

Those numbers are measured at the wall too, which means you are losing around 15% to convert the AC current to DC.
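The "measured at the wall" point is easy to quantify. This sketch applies the ~15% AC-to-DC loss mentioned in the post to the 66W G14 figure; the loss is the poster's estimate (real adapter efficiency varies with load), and even the corrected figure still covers the whole laptop, not the CPU alone. `dc_power_from_wall` is a hypothetical helper.

```python
# Convert an at-the-wall reading into an estimate of DC power delivered to
# the laptop, using the ~15% AC-to-DC loss mentioned in the post.


def dc_power_from_wall(wall_watts: float, conversion_loss: float = 0.15) -> float:
    """Estimated DC-side power for a given at-the-wall reading."""
    if not 0.0 <= conversion_loss < 1.0:
        raise ValueError("conversion_loss must be in [0, 1)")
    return wall_watts * (1.0 - conversion_loss)


if __name__ == "__main__":
    g14_wall_watts = 66.0  # sustained at-the-wall figure quoted from the review
    print(f"~{dc_power_from_wall(g14_wall_watts):.1f} W DC for the whole system")
```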

Check out the "Sustained Clocks and More Questions" section, where they separate CPU power usage from the rest of the system and monitor it as the clock speeds change.

I'm just going to quote the figure that I believe is being discussed, so that everyone can quickly find the chart in question: