DLSS 2.0 is actually exactly as much of a blanket solution as checkerboard rendering is: it ties into the same position in the render pipeline as TAA, and it no longer requires per-game neural network training. It is a generic drop-in that requires the developer to feed it things like the depth buffer, motion vectors, exposure... just like TAA. It is also less intensive to implement in a game than checkerboard rendering, as you are still working within the aspect ratio of the final frame (among other concerns).
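As a hedged illustration of "feed it the same things as TAA", here is a hypothetical `UpscalerFrameInputs` bundle. Its name and fields are assumptions for this sketch and are not the DLSS/NGX interface. It collects colour, depth, motion vectors and exposure, and checks the one property the paragraph singles out: the internal frame keeps the final frame's aspect ratio.

```python
from dataclasses import dataclass


@dataclass
class UpscalerFrameInputs:
    """Hypothetical per-frame inputs for an upscaler sitting in the TAA slot.

    Names are illustrative only; this is not the DLSS/NGX API.
    Sizes are (width, height) in pixels.
    """
    color_size: tuple[int, int]    # aliased colour buffer, render resolution
    depth_size: tuple[int, int]    # depth buffer, render resolution
    motion_size: tuple[int, int]   # per-pixel motion vectors, render resolution
    exposure: float                # scene exposure value
    output_size: tuple[int, int]   # final display resolution

    def validate(self) -> None:
        # Every render-resolution buffer has to describe the same pixel grid.
        if not (self.color_size == self.depth_size == self.motion_size):
            raise ValueError("colour, depth and motion buffers must match")
        w, h = self.color_size
        out_w, out_h = self.output_size
        # Assumption of this sketch: both axes are scaled by the same factor,
        # so w/h == out_w/out_h. Cross-multiplying avoids float division; the
        # tolerance absorbs rounding of the internal size to whole pixels.
        if abs(w * out_h - h * out_w) > max(out_w, out_h):
            raise ValueError("internal and output aspect ratios differ")


# A 1080p render feeding a 4K output passes the check.
UpscalerFrameInputs((1920, 1080), (1920, 1080), (1920, 1080), 1.0, (3840, 2160)).validate()
```

A half-width buffer such as 1920x2160, which scales only the horizontal axis, fails `validate()` with a `ValueError`.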

Just because my colleagues did something in a previous video does not reflect on me or on my appraisal of things as they are now. I am not my colleague, and in general, opinions can change over time. I am pretty sure DF's general opinion of TAA changed dramatically between its first ghosty, blurry and overly soft presentations, like the HRAA we saw, and state-of-the-art TAA in something like Call of Duty.

The shared opinion of Rich and myself (I have not asked John what he thinks of DLSS 2.0) is indeed that it is the best reconstruction we have seen: in terms of the artefacts it leaves, its cost (the ms cost is very low), and the amount of rendering time it saves vs. an image of similar quality.

The thing to beat, or to approximate better, is the native-resolution TAA presentation. In games like Control or Wolfenstein: Youngblood, the game's presentation literally breaks if you turn off temporal anti-aliasing. In both id Tech and Northlight, hair no longer renders correctly, ambient occlusion no longer renders correctly, SSR no longer renders correctly, and even the intended denoising for RT is now incorrect (most devs have spatio-temporal denoisers and then let TAA clean up their results as well). Control does not even offer an in-menu option to turn off TAA, and id Tech has switched over to not allowing you to turn off TSSAA either, because it breaks the game's rendering. TAA cleans up many of the stochastic elements that games now use, as well as the results of how they amortise costs over frames. This applies to all games released with DLSS so far.
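TAA's clean-up of stochastic effects rests on accumulating history across frames. Here is a minimal sketch, assuming a single static pixel (so no reprojection is needed) and a plain exponential moving average. The blend factor `alpha=0.1` and the one-noisy-sample-per-frame signal are hypothetical and not taken from id Tech or Northlight.

```python
import random


def accumulate(true_value: float, noise: float, frames: int,
               alpha: float = 0.1, seed: int = 0) -> tuple[float, float]:
    """Return (last raw sample, accumulated history) for one static pixel."""
    rng = random.Random(seed)
    history = None
    sample = true_value
    for _ in range(frames):
        # One cheap, noisy estimate per frame (e.g. a single AO or SSR ray).
        sample = true_value + rng.uniform(-noise, noise)
        # Exponential moving average: keep (1 - alpha) of the history and
        # blend in alpha of the new sample, spreading the cost over frames.
        history = sample if history is None else history + alpha * (sample - history)
    return sample, history


# Compare the error of one frame's raw sample with the accumulated value.
raw_err = hist_err = 0.0
for seed in range(200):
    raw, hist = accumulate(0.5, 0.5, frames=60, seed=seed)
    raw_err += abs(raw - 0.5)
    hist_err += abs(hist - 0.5)
print("mean raw error:", raw_err / 200)
print("mean accumulated error:", hist_err / 200)
```

Switching the accumulation off leaves only the raw samples, which is why those effects break without TAA. Real TAA also reprojects the history with motion vectors, and a smaller `alpha` means less noise but lets stale history linger longer.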

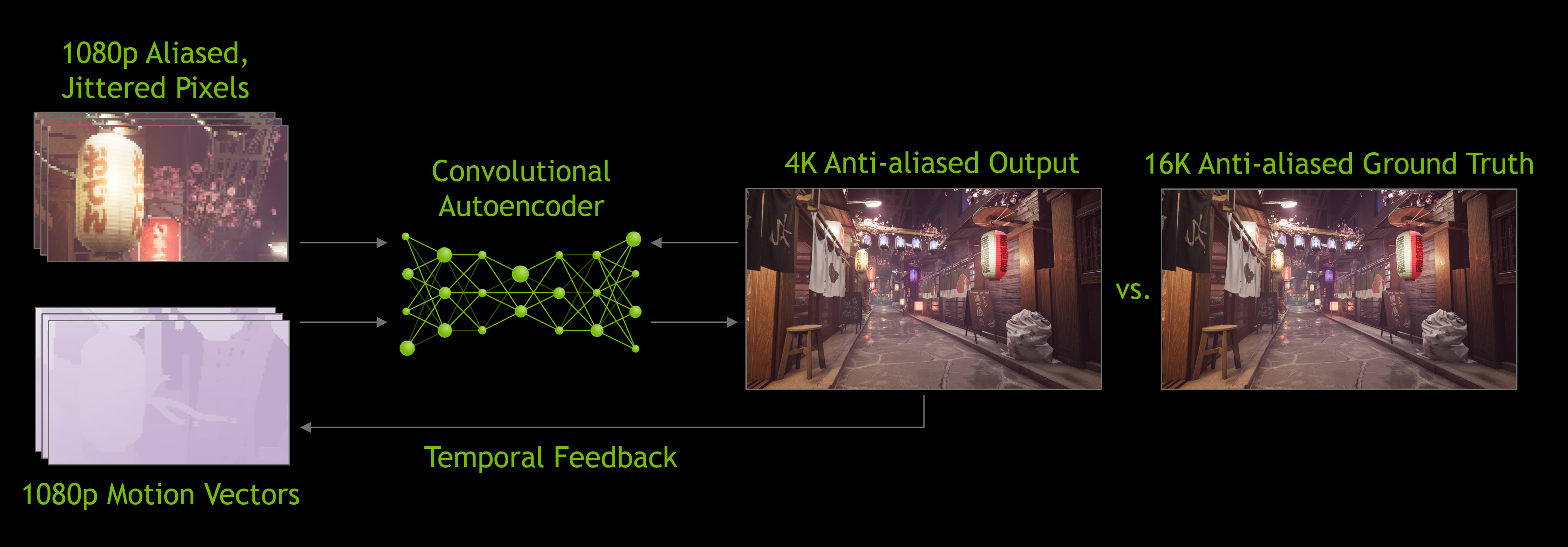

DLSS is essentially temporal reconstruction (which is a modification of native-res TAA), and (this is very important) it is no longer tied to the errors of reprojection: that is what it does differently, changing how reprojection and rejection are managed, so there is no ghosting. This is a pretty big deal, as every TAA and temporal technique (including most checkerboard rendering) actually still ghosts. DLSS does not ghost. This is ghosting (Samuel Hayden's arms):

Or this is ghosting:

Here is a comparison of DLSS 2.0 vs. native-resolution TAA on the same frame of a very small animation of Jesse rocking back and forth:
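Ghosting like this comes from history that reprojection keeps but rejection fails to throw away. Here is a minimal one-dimensional sketch of a conventional TAA step. It assumes a 3-pixel neighbourhood clamp as the rejection heuristic, a common hand-tuned approach in TAA and not how DLSS manages rejection. The scanline width, object speed and blend factor are all hypothetical. A bright one-pixel object moves over a static dark background. Background pixels report zero motion, so without rejection they keep blending in the object's old brightness.

```python
def render(width: int, pos: int) -> list[float]:
    # Current frame: a bright 1-pixel object on a dark background.
    return [1.0 if x == pos else 0.0 for x in range(width)]


def motion_vectors(width: int, pos: int, speed: int) -> list[int]:
    # Only the object's pixel moved; the background is static.
    return [speed if x == pos else 0 for x in range(width)]


def taa_step(history: list[float], current: list[float], mv: list[int],
             alpha: float, clamp: bool) -> list[float]:
    width = len(current)
    out = []
    for x in range(width):
        src = x - mv[x]  # reproject: where this pixel was last frame
        prev = history[src] if 0 <= src < width else current[x]
        if clamp:
            # Rejection: clamp history to the current 3-pixel neighbourhood.
            neighbourhood = current[max(0, x - 1):x + 2]
            prev = min(max(prev, min(neighbourhood)), max(neighbourhood))
        out.append(prev + alpha * (current[x] - prev))
    return out


def simulate(clamp: bool, width: int = 20, frames: int = 8,
             speed: int = 2, alpha: float = 0.1) -> tuple[list[float], int]:
    history = render(width, 0)
    pos = 0
    for frame in range(1, frames):
        pos = frame * speed
        history = taa_step(history, render(width, pos),
                           motion_vectors(width, pos, speed), alpha, clamp)
    return history, pos


ghosted, pos = simulate(clamp=False)
clean, _ = simulate(clamp=True)
print("brightest trail pixel without clamp:", max(ghosted[:pos]))
print("brightest trail pixel with clamp:", max(clean[:pos]))
```

Without the clamp, the pixel the object just left still holds 0.9 of its brightness. With the clamp, the trail is gone, but only because the object has moved clear of the 3-pixel window. With `speed=1` in this same sketch, a one-pixel ghost survives, which is the kind of residual ghosting conventional temporal techniques still show.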

In my video I readily say that the reconstruction at 1080p with DLSS is obvious at normal viewing distance because of the sharpening.

For 2160p reconstructed from 1080p? In my experience, that requires a 400% zoom to find the artefacts. 2160p reconstructed from 1440p? Those artefacts are really only visible on text panels far in the distance, so something like an 800% zoom.

And I think this is a wholly unique situation for 4K reconstruction (especially for something rendering 1/4 of the pixels internally), as even the best reconstructions I had seen before looked categorically unlike native 4K presentations. In my experience, temporal accumulation reconstructions stop looking like native 4K in still shots (so not even in movement) once the internal scaling factor drops below 0.8 (a much higher resolution than 1440p or 1080p). My favourite reconstruction technique before DLSS was actually the one in Horizon Zero Dawn and Death Stranding (1920x2160): even there, the developers say in their presentation on it that it has a different look from 4K in the raw, non-zoomed image. It cannot preserve single-pixel detail the way native 4K can. At the macro level, this gives the image great silhouettes thanks to its excellent anti-aliasing, but forgoes detail inside surfaces.
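For scale, here is the arithmetic behind the internal resolutions discussed above. It assumes 16:9 frames with a 3840x2160 output, and it treats the 0.8 threshold as a per-axis factor. The post does not say whether 0.8 is per axis or per pixel, so that part is an assumption.

```python
def internal_resolution(output: tuple[int, int], scale: float) -> tuple[int, int]:
    """Internal render size for a per-axis scaling factor (rounded)."""
    return round(output[0] * scale), round(output[1] * scale)


def shaded_fraction(internal: tuple[int, int], output: tuple[int, int]) -> float:
    """Share of the output's pixels that are actually rendered each frame."""
    return (internal[0] * internal[1]) / (output[0] * output[1])


UHD = (3840, 2160)
for label, internal in [
    ("1080p", (1920, 1080)),
    ("1440p", (2560, 1440)),
    ("0.8 per axis", internal_resolution(UHD, 0.8)),
    ("1920x2160", (1920, 2160)),
]:
    print(f"{label:>12}: {internal[0]}x{internal[1]}, "
          f"{shaded_fraction(internal, UHD):.0%} of 4K's pixels")
```

1080p renders 25% of the 4K pixel count and 1440p about 44%. The 0.8 threshold works out to 3072x1728, or 64%, and 1920x2160 renders 50%. The last one halves only the horizontal axis, so unlike the others it does not keep the output's aspect ratio.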