Yes, it can be combined with DSR, but...

When you are playing at 1080p, you are rendering scenes internally at 1080p.

When you are using 2160p with DLSS, you are rendering internally at 1080p or 1440p (depending on which DLSS modes the game offers and which one you select).

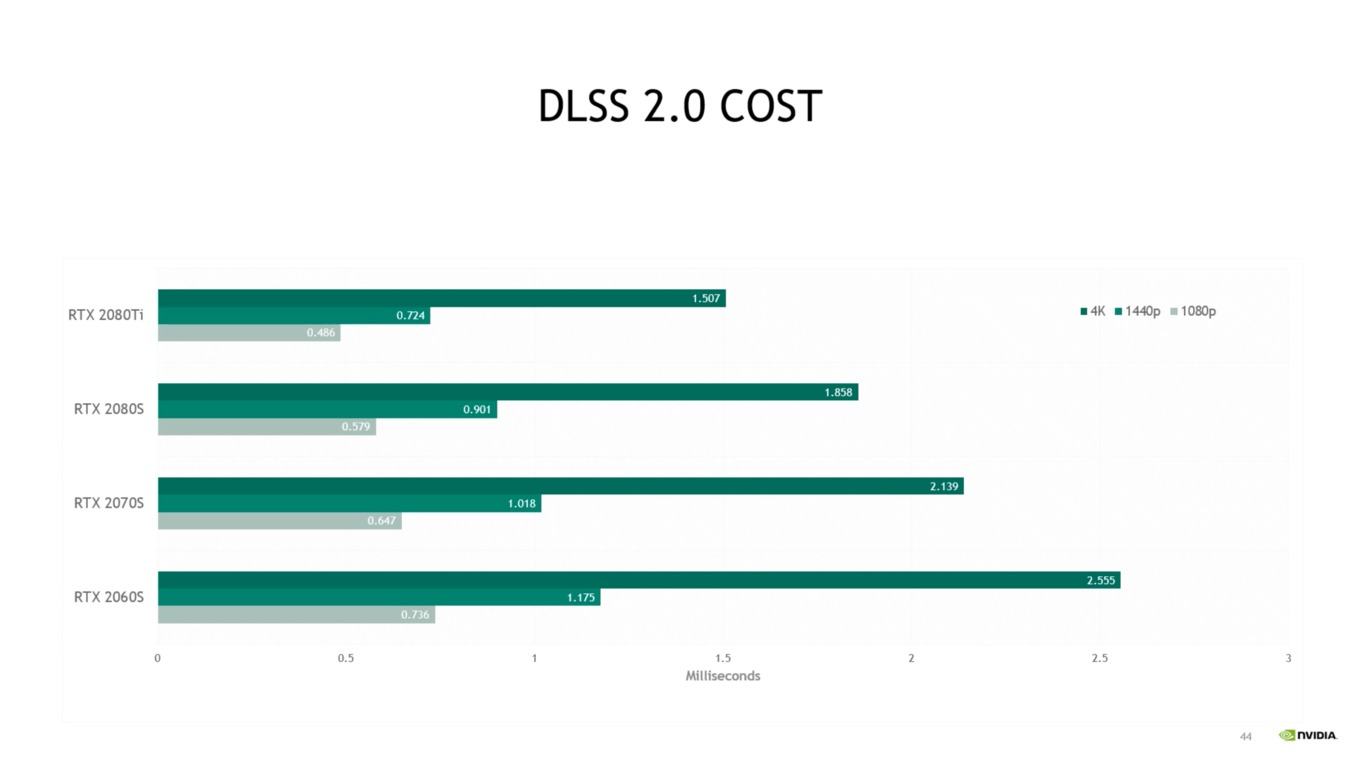

So, using 4K DSR will just force the game to render internally at 1080p, reconstruct that to 2160p with DLSS, and then downsample back to 1080p. While this could result in better image quality, it will not magically give you better performance, because the internal render is still at 1080p. In fact, there should be a slight cost, because the machine learning reconstruction has to run on the tensor cores of your GPU first.
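If it helps to see the numbers, here is a rough sketch of the resolution chain in Python. The function name and the per-axis scale values are my own assumptions for illustration (2/3 for the Quality mode and 1/2 for the Performance mode are the commonly cited ratios, but games can differ), so treat it as back-of-the-envelope math, not anything the driver actually exposes.

```python
# Per-axis render scale for each DLSS mode (assumed, commonly cited values).
DLSS_SCALE = {"quality": 2 / 3, "performance": 1 / 2}


def dsr_dlss_pipeline(display, dsr_factor, dlss_mode):
    """Return (internal, dsr_target, display) resolutions as (width, height).

    display    -- native monitor resolution, e.g. (1920, 1080)
    dsr_factor -- DSR multiplier on total pixel count, e.g. 4.0 for "4K on 1080p"
    dlss_mode  -- key into DLSS_SCALE (hypothetical helper table above)
    """
    # DSR factors multiply the pixel count, so each axis scales by the square root.
    axis_scale = dsr_factor ** 0.5
    dsr_target = (round(display[0] * axis_scale), round(display[1] * axis_scale))

    # DLSS renders below the target resolution and reconstructs up to it.
    scale = DLSS_SCALE[dlss_mode]
    internal = (round(dsr_target[0] * scale), round(dsr_target[1] * scale))

    # The final image is downsampled back to what the monitor can show.
    return internal, dsr_target, display


for mode in DLSS_SCALE:
    internal, target, shown = dsr_dlss_pipeline((1920, 1080), 4.0, mode)
    print(f"{mode}: render {internal} -> reconstruct {target} -> display {shown}")
```

With a 1080p monitor and 4x DSR, the Performance mode lands right back at a 1080p internal render, which is why you should not expect a performance gain over plain native 1080p.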

That's the whole magic behind DLSS. It allows you to gain performance by rendering at a lower resolution, while still producing a sharp and detailed image without much noise. It's not perfect, but it is impressive.