
Assassin's Creed Valhalla PC performance thread

- Thread starter GrrImAFridge

- Start date


Assassin's Creed Valhalla in the technical test

In the technical test, Assassin's Creed Valhalla shows good graphics quality combined with high hardware requirements.

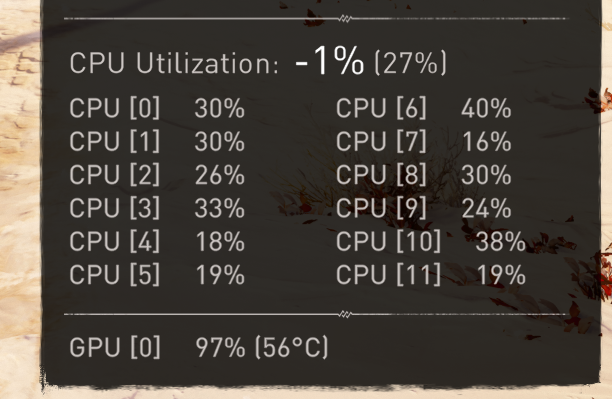

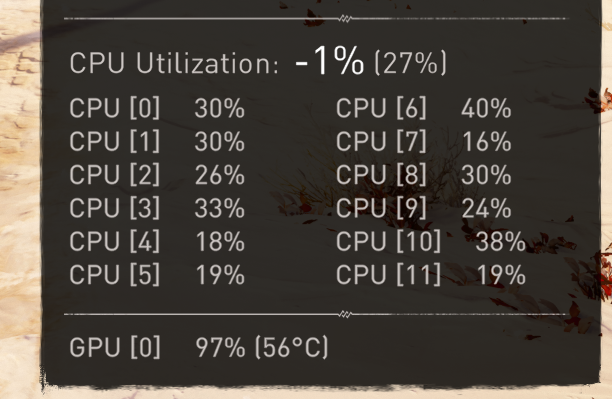

is this normal?

You get the same fps as me, but I have an 8700K and a 2070S lol.

Ah, the classic case of game GIVING you CPU.

What's going on here? I only play at 1080p on mostly medium settings, and I still can't get above 50fps with these readings. I'm no expert, but shouldn't they be doing more? I have a Ryzen 5 2600 and a 1060. It's getting on a bit, but even my gf, with a 960 and an ancient i5, is getting better performance.

It's a very demanding game. The GPU usage in Task Manager is incorrect.


Also, I doubt your gf is actually getting better performance at the same settings with a 960.

Some people were asking about loading times on PC.

I did my best to measure it.

I'm using a free video editor and lack any kind of editing skills, but I did my best to make this as accurate as I can with my setup. I may sometimes be a frame or two off. I'm also recording on the same machine I'm playing on, which will influence the results as well.

I've seen a peak data transfer rate of 1100 MB/s.

Average loading time is 12s for me.

3900X, PCIe Gen 4.0 NVMe drive running through the X570 chipset.
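Timing loads from a screen recording comes down to counting frames. A minimal sketch of that arithmetic, assuming a 60 FPS capture; the frame numbers are hypothetical (picked to land near the ~12 s average above), not taken from the video. It also puts "a frame or two off" in perspective: at 60 FPS that is roughly 17 to 33 ms, small next to a ~12 s load.

```python
from statistics import mean


def load_time_seconds(start_frame: int, end_frame: int, fps: float) -> float:
    """Elapsed time between two frames of a recording captured at `fps`."""
    return (end_frame - start_frame) / fps


def frame_error_seconds(frames_off: int, fps: float) -> float:
    """Timing error from misjudging the start or end by `frames_off` frames."""
    return frames_off / fps


# Hypothetical (first loading frame, first gameplay frame) pairs from a 60 FPS capture.
loads = [(120, 840), (300, 960), (60, 840)]
times = [load_time_seconds(start, end, fps=60) for start, end in loads]
print(f"average load: {mean(times):.2f} s")
print(f"2 frames off at 60 FPS: +/- {frame_error_seconds(2, fps=60) * 1000:.1f} ms")
```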


How does it run? Assassin's Creed Valhalla Loading 3900x / 2080Ti (OC)

Recorded with GeForce Experience at 1440p, Ultra settings.

I don't understand how I still get screen tearing with vsync on and a 30fps cap.

Are you also capping with RTSS? RTSS will break half refresh rate vsync and induce tearing. The best way to lock to 30 fps is to use half refresh rate vsync in the NV driver and absolutely nothing else on top of it. If you use NVIDIA Profile Inspector, you can even turn on non-adaptive half rate so that it never tears. Otherwise, the default half refresh rate option in the normal NVCP is adaptive, so it will tear below 30.


Yeah I'm already using NV half refresh rate with nothing else. I'm getting locked 30fps in gameplay with good frame times but the cutscenes still have tearing somehow.

Are you doing a settings guide for this?


ATM I am working on other things. Maybe I will eventually. I recommend you watch my Odyssey settings guide, as those principles are probably exactly the same in this game.

Why is AA so demanding?

The AA setting in this game renders at native resolution at its highest setting, and at various sub-native resolutions on lower settings. In the last game, the highest setting was native resolution, the one below that was something like 0.9, I think, and the lowest AA setting was 0.83 (so 900p at 1080p).
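Taking the factors recalled for Odyssey at face value (native, roughly 0.9, and roughly 0.83, i.e. 900/1080), here is a small sketch of the per-axis internal resolution for a 1920x1080 output. The tier labels `highest`, `middle` and `lowest` are placeholders, and the factors are assumptions from the post, not confirmed values for Valhalla.

```python
from fractions import Fraction

# Approximate per-axis scale factors recalled for Odyssey's AA tiers.
# Assumed values taken from the post; tier names are placeholders.
AA_SCALE = {
    "highest": Fraction(1),
    "middle": Fraction(9, 10),
    "lowest": Fraction(900, 1080),  # "so 900p at 1080p"
}


def internal_resolution(width: int, height: int, scale: Fraction) -> tuple[int, int]:
    """Scale both axes and round to the nearest whole pixel."""
    return round(width * scale), round(height * scale)


for tier, scale in AA_SCALE.items():
    w, h = internal_resolution(1920, 1080, scale)
    print(f"{tier:>7}: {w}x{h} ({float(scale * scale):.0%} of native pixels)")
```

Because the factor applies to both axes, the lowest tier shades only about 69% of the native pixel count, which is why the setting moves performance so much.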

Make sure the in-game FPS limiter is off too. Then try NVIDIA Profile Inspector to set half refresh rate v-sync without the adaptive tearing. Tell me what happens.

Thanks, this was really well done. Pretty much the settings I already had but it is good to know I made the right choices!

Basically, in this game the AA setting is the killer (unlike in Odyssey, where lowering the volumetric clouds setting got you the big wins). Set it to Low for little impact on image quality but a large fps increase.

OK, here is what I'm running, which works very well with a VRR display:

Specs:

5900X

3090

32GB @ 3600MHz

In-game

3840x2160 @ 120Hz

Preset Ultra, then turn on Adaptive Quality 60FPS

Frame Rate Limiter 90FPS

Nvidia Control Panel

Low Latency Mode: On

Max Frame rate: Off

Vsync: Use the 3D Application Setting


Just talked to the bank; they say the money will be back in my account by tomorrow.

So screw Ubisoft+ lol, not gonna try that again!

Have you confirmed that the AA setting in this game impacts the internal rendering resolution, just like it did in Odyssey? So if you set the resolution to 4K, you're not really rendering at 4K unless you have AA set to High?


I don't think that's true for Valhalla. I did some screencaps yesterday of high and low, and there seems to be no difference in resolution. I can upload them later.

If you are looking at still images, you won't see the difference. Look at camera cuts in cutscenes or fast movement.

Is 4K/60 doable on an RTX 3080 with a Ryzen 5 3600?

Yes, but expect some dips.

Watch Dogs is pretty bad; is this any better?


Are they doing something else other than an upscaled slightly lower res then? Because the difference between 2160p and 1800p should be immediately obvious in a zoomed in screenshot comparison.

This is impossible; you should obviously have much more performance.


It is most likely temporally reconstructing exactly like Odyssey. They have done it in the last two AC games, and I see no reason why they would change it now. It being named anti-aliasing probably makes more people use it, as they are less averse to turning it off due to prejudice.
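A toy 1D illustration of why temporal reconstruction can look identical to native resolution in a still frame yet differ in motion. Each frame shades only half the pixels, alternating between even and odd positions (a stand-in for the sub-pixel jitter real TAA uses), and the reconstruction fills the gaps from the previous frame. This is a simplified sketch under those assumptions, not Ubisoft's actual algorithm.

```python
def render_half(scene: list[int], frame: int) -> dict[int, int]:
    """Shade every other pixel, alternating the starting offset each frame (jitter)."""
    offset = frame % 2
    return {x: scene[x] for x in range(offset, len(scene), 2)}


def reconstruct(history: dict[int, int], current: dict[int, int], width: int) -> list[int]:
    """Take each pixel from the current frame, else reuse last frame's sample.

    Real TAA reprojects history with motion vectors and rejects mismatches;
    this toy skips that, so its errors in motion are exaggerated.
    """
    return [current.get(x, history.get(x, 0)) for x in range(width)]


scene = [0, 1, 2, 3, 4, 5, 6, 7]

# Static camera: two half-resolution frames rebuild the full-resolution image.
static = reconstruct(render_half(scene, 0), render_half(scene, 1), len(scene))
print(static == scene)  # True

# Camera pans one pixel between frames: stale history leaks into the result.
moved = scene[1:] + scene[:1]
panned = reconstruct(render_half(scene, 0), render_half(moved, 1), len(scene))
print(panned, moved)  # [0, 2, 2, 4, 4, 6, 6, 0] [1, 2, 3, 4, 5, 6, 7, 0]
```

This matches the advice above to judge the setting on camera cuts and fast movement rather than on screenshots.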

For some frustrating reason, the half-rate v-sync option in the NVIDIA Control Panel is adaptive.

If you use NVIDIA Profile Inspector it's possible to enable half, third, or quarter-rate v-sync without it being adaptive.

Adaptive or not, frame rate limiters should never be combined with v-sync if the goal is smoothness, rather than low latency.

Frame rate limiters should really only be combined with VRR, except in rare cases.

I really have to disagree.

This is true of traditional framerate limiters.

Special K's framerate limiter uses something known as a Latency Waitable SwapChain. I actually have the OS scheduler waking the limiter up at precisely the correct time to stage the next frame without any back-pressure from V-Sync. Input latency is god tier when you build your limiter this way, you basically submit a finished image, poll input, queue the commands for the next frame and then delay submission until scan-out. Done correctly, this nets 0.0 - 0.1 ms to-display latency, and it also automagically trims pre-rendered frames out of the render queue so you're not backlogged w/ 3 or 4 frames of latency.
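The loop described above (poll input, queue the frame's work, then hold submission until just before scan-out) can be sketched as a schedule built from absolute refresh deadlines. This is a hypothetical, simplified model, not Special K's code: a real implementation would wait on the DXGI frame-latency waitable object rather than compute times, and `work_us` (assumed known in advance) and `margin_us` are illustrative parameters.

```python
from dataclasses import dataclass


@dataclass
class FramePlan:
    poll_input_us: int  # when input is sampled for this frame
    submit_us: int      # when the finished frame is handed to the swap chain
    vblank_us: int      # the refresh at which it is scanned out


def just_in_time_plan(first_vblank_us: int, interval_us: int, work_us: int,
                      margin_us: int, frames: int) -> list[FramePlan]:
    """Schedule each frame so its work ends `margin_us` before its vblank.

    Deadlines are absolute (first_vblank + i * interval), so a late wake-up on
    one frame does not push every later frame back.
    """
    plans = []
    for i in range(frames):
        vblank = first_vblank_us + i * interval_us
        submit = vblank - margin_us
        plans.append(FramePlan(poll_input_us=submit - work_us,
                               submit_us=submit, vblank_us=vblank))
    return plans


def input_latency_us(plan: FramePlan) -> int:
    """Time from sampling input to that frame reaching the display."""
    return plan.vblank_us - plan.poll_input_us


INTERVAL_US = 16_667  # ~60 Hz refresh
jit = just_in_time_plan(first_vblank_us=INTERVAL_US, interval_us=INTERVAL_US,
                        work_us=4_000, margin_us=500, frames=3)

# Naive comparison in this toy model: poll input right after the previous
# vblank, render, then wait for the next one (about one full refresh).
naive_latency_us = INTERVAL_US

print([input_latency_us(p) for p in jit], naive_latency_us)
```

The catch, raised later in the thread, is that `work_us` is only a prediction: if a frame takes longer than planned, it misses its vblank.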

I cannot draw my OSD in D3D12, but I have plenty of examples of my latency monitor running combined with the limiter in D3D11. Render latency drops from 5 frames to 0-1. It's something I'm really proud of, and I'm eager to validate it and start publishing a blog series on it when my LDAT hardware arrives from NVIDIA.

----

Limiter works in D3D12, I just cannot draw any of its statistics. But, you can figure those out yourself by using the in-game benchmark or CapFrameX :P

Typically SK's limiter is the one with the perfectly flat line, ~0.0 standard deviation :P Doesn't get any smoother than that, and it's always tested w/ V-SYNC.
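Tools such as CapFrameX derive figures like these from a list of per-frame times. A minimal sketch of the usual calculations, assuming frame times in milliseconds. Note that "1% low" has several definitions in the wild (this sketch averages the slowest 1% of frames; others use a percentile), so numbers will not match every tool exactly.

```python
from statistics import mean, pstdev


def frame_stats(frame_times_ms: list[float]) -> dict[str, float]:
    """Average FPS, frame-time standard deviation, and 1% / 0.1% low FPS."""
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low_fps(fraction: float) -> float:
        # Average the slowest `fraction` of frames (at least one) and convert to FPS.
        n = max(1, int(len(slowest_first) * fraction))
        return 1000.0 / mean(slowest_first[:n])

    return {
        "avg_fps": 1000.0 / mean(frame_times_ms),
        "stdev_ms": pstdev(frame_times_ms),
        "low_1pct_fps": low_fps(0.01),
        "low_0_1pct_fps": low_fps(0.001),
    }


# A perfectly paced 60 FPS run versus one with a single 50 ms hitch.
flat = [1000 / 60] * 1000
hitchy = [1000 / 60] * 999 + [50.0]

for name, run in (("flat", flat), ("hitchy", hitchy)):
    print(name, {k: round(v, 1) for k, v in frame_stats(run).items()})
```

A single hitch barely moves the average but shows up clearly in the standard deviation and the lows, which is why a flat frame-time line is the thing to look for.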


Just in the starting area/village, I've been getting some pretty solid performance so far.

My specs:

i7-9700k

RTX 2080 Super

16GB RAM

With Vsync turned on and the frame rate limit set to 60, I can hit 60fps consistently with everything set to what the game offers as its highest settings, on a 1080p display. In the starting village, though, frame rates do drop, hovering between 55 and 58. GPU usage is consistently at 94-100%, if the software I'm using is accurate, and VRAM usage typically sits around 64-75%. So far, while it's not the best-looking game, it does look pretty good and crisp, and there's no screen tearing to report in the actual game, although there was a ton in the benchmark with Vsync turned off. I will say that there is some pretty bad pop-in when you see it, even on an SSD, and I'm not sure if there's any way to fix that in the settings.

Other than that, so far so good! I do still want to get this on Series X to have that 4K60 experience.


ReShade, video capture software, various overlays, they all have the potential to do this when a game uses DXGI for HDR rather than NvAPI / AGS. Most third-party software has no reason to go around hooking the driver-level APIs and to be fair, I think that's the only good thing I can say about NvAPI or AGS -- DXGI-based HDR is more polished (when overlays aren't breaking it).

----

Nonetheless, HDR is screwed up something mighty in this game :-\ Because it has no D3D11 renderer, I cannot analyze what's going on. 16-bit scRGB games usually represent the pinnacle of HDR (FFXV, Far Cry 5, ...); this is something completely different. It almost seems like they have cut the resolution to 1/4 to make up for the 2x increase in memory that scRGB requires.
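The 2x memory figure follows from the pixel formats involved: scRGB uses 16-bit floats per channel (DXGI_FORMAT_R16G16B16A16_FLOAT, 8 bytes per pixel), while HDR10 swap chains typically use DXGI_FORMAT_R10G10B10A2_UNORM (4 bytes per pixel). A quick worked example for a single render target at 4K. The quarter-resolution case (half of each axis) only illustrates the speculation above; it is not a measured value.

```python
BYTES_PER_PIXEL = {
    "HDR10 (R10G10B10A2_UNORM)": 4,   # 10+10+10+2 bits
    "scRGB (R16G16B16A16_FLOAT)": 8,  # 4 channels x 16-bit float
}


def buffer_mib(width: int, height: int, bytes_per_pixel: int) -> float:
    """Size of one render target in MiB."""
    return width * height * bytes_per_pixel / 2**20


for fmt, bpp in BYTES_PER_PIXEL.items():
    full = buffer_mib(3840, 2160, bpp)
    quarter = buffer_mib(1920, 1080, bpp)  # 1/4 of the pixels
    print(f"{fmt}: {full:.1f} MiB at 4K, {quarter:.1f} MiB at 1/4 resolution")
```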


All credit to Hardware Unboxed, but I find it handy to have the optimized settings in an image in a thread like this for reference:


Quick question: is it better to have the AA setting on Low, or to put the game's resolution scale down to 80%, assuming it works like previous games? Is it basically the same but with a 0.3 differential?


Hmm, fair enough. I guess since it's a temporal reconstruction from a resolution so close to the target, that's why it's so imperceptible. I haven't noticed anything different in motion, with the possible exception of how SSR reflections look in the sea. They're possibly slightly more unstable with AA on Low.

Updated 8700K/2080 Ti impressions: I'm now getting a very smooth 60 fps at ~1550p rendering resolution, reconstructed up to ~1720p, by setting AA to Medium, Output Resolution to 3840x2160, and Render Scale to 80%. This is with all graphics options set to High except World Details, which is Very High, and with the Special K version posted by Kaldaien earlier in the thread, which dramatically improves my 0.1% and 1% lows.
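The numbers above are consistent with the two scale factors multiplying per axis. A worked check, assuming Medium AA renders at roughly 0.9 of the pre-AA resolution per axis (the factor recalled for Odyssey earlier in the thread, not a confirmed Valhalla value):

```python
OUTPUT_HEIGHT = 2160    # Output Resolution 3840x2160
RENDER_SCALE = 0.80     # in-game Render Scale
MEDIUM_AA_SCALE = 0.90  # assumption: per-axis factor for Medium AA (recalled from Odyssey)

reconstructed = OUTPUT_HEIGHT * RENDER_SCALE  # height TAA reconstructs to
shaded = reconstructed * MEDIUM_AA_SCALE      # height actually rendered each frame
pixel_fraction = (RENDER_SCALE * MEDIUM_AA_SCALE) ** 2

print(f"reconstructed ~{round(reconstructed)}p, shaded ~{round(shaded)}p, "
      f"{pixel_fraction:.0%} of native 4K pixels")
```

That gives roughly 1728p reconstructed and 1555p shaded, in line with the ~1720p and ~1550p quoted, at about half the pixel cost of native 4K.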

Adaptive Quality settings make me really nervous. I have yet to see a single game implement that in a sane way; often the only thing you get out of turning it on is stutter. When it's not causing stutter, it's usually chronically under-evaluating your hardware and leaving you high and dry at the bottom of the quality scaling range after about 30 minutes.

Even DOOM Eternal, a very impressive piece of engineering, has crap for dynamic scaling.


I am using it and I think it works very well. Seems to scale the animation of things like flames and such, looks a little jarring, but I do see a noticeable increase of performance.

______________________________________________________________________________________________________________________________________________________________

So I also think the best way to gain performance is to use super sampling; it seems to take a massive load off my CPU. I'm on medium settings with adaptive vsync and, I guess, dynamic resolution (nothing so far looks jarring; I think what it really does is scale the FPS of certain animations so the GPU doesn't have to work as hard), with an i7 6700K, an RTX 3070 and a 120% resolution scale, and I am getting a solid 60FPS at 3440x1440. It looks great (thanks to the super sampling, probably even better than using Ultra High) and plays very well even with my older CPU. I finally found the sweet spot.

This is the first time those words have been written in that order in recorded history :)

But, yeah... I get the gist of what you were trying to say. If your CPU cannot feed the GPU more frames to draw, you can always have the GPU draw them at a higher resolution. Either way puts more load on the GPU and lackluster CPU performance stops being such a bummer.

I enabled Adaptive AA and performance went to shit. I returned to a mix of Medium and High settings, as the first settlement dropped frames significantly.

My experience has been that Special K's limiter works amazingly well when combined with a variable refresh rate display, but not with a fixed-refresh display using v-sync.


Keeping the buffers short/empty is great for latency, but it means it's very easy to miss the next sync and stutter, especially in a demanding game that places a highly variable load on the GPU.

This is generally true of any limiter.
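A toy model of that trade-off, assuming a limiter that starts each frame a fixed `lead_ms` before its refresh deadline (a hypothetical parameter, not any specific tool's setting). Input latency is then roughly `lead_ms`, and any frame whose render time exceeds the lead misses its refresh and is shown one refresh late. The render times are made up for illustration.

```python
def missed_refreshes(render_times_ms: list[float], lead_ms: float) -> int:
    """Count frames that are still rendering when their refresh arrives.

    Toy model: each frame starts `lead_ms` before its refresh deadline, so
    input latency is roughly `lead_ms`, and any frame that takes longer than
    that misses the deadline and is shown one refresh late (a stutter).
    """
    return sum(1 for t in render_times_ms if t > lead_ms)


# Hypothetical GPU times for a demanding scene at 60 Hz (16.7 ms per refresh).
render_times = [9.0, 10.5, 12.0, 9.5, 14.0, 11.0, 9.0, 15.5]

for lead in (10.0, 13.0, 16.0):
    print(f"lead {lead:4.1f} ms (~latency): "
          f"{missed_refreshes(render_times, lead)} missed refreshes")
```

Shrinking the lead cuts latency, but with a variable GPU load more frames overrun it.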

All of the charts I've seen, like the above, have been produced either with V-Sync disabled, or using G-Sync - where V-Sync is technically enabled, but the limiter is set well inside the VRR window of the display.

r/allbenchmarks - SpecialK's Frame Rate Limiter Review (DX11 based): Comparing Frame Time Consistency And Approximate Latency.


When you're trying to achieve smoothness on a fixed-refresh display, the majority of games have worse results if you enable any limiter but v-sync itself.

The smoothness of the frame-time graph does not matter as much as how the frames are being presented to the display.

I'm not sure how well it comes across, as it turns out that most browser media players - on PC at least - struggle to achieve perfectly smooth playback.

But this video tries to show the difference, where RTSS at 60 FPS produces a perfect frame-time graph, but stutters on the display.

Meanwhile V-Sync has an imperfect frame-time graph, with no stutters on the display.
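One mechanism that can produce this, sketched under simplifying assumptions: the limiter paces frames at exactly 60.000 FPS from its own clock, the display actually refreshes at 60000/1001 Hz (about 59.94 Hz), and each refresh shows the most recently completed frame. The frame-time graph is perfectly flat, yet the two clocks drift apart, so every so often two frames finish within one refresh and one of them is never shown. Times are in integer units of 1/60000 s to keep the arithmetic exact, and the phase offset is arbitrary.

```python
FRAME_PERIOD = 1000    # limiter: 60.000 FPS, in units of 1/60000 s
REFRESH_PERIOD = 1001  # display: 60000/1001 Hz (~59.94 Hz), same units
PHASE = 500            # arbitrary offset between the two clocks


def frame_shown_at(refresh: int) -> int:
    """Index of the newest frame completed by this refresh."""
    return (refresh * REFRESH_PERIOD - PHASE) // FRAME_PERIOD


def skipped_refreshes(n_refreshes: int) -> list[int]:
    """Refreshes after which the displayed frame jumps by more than one."""
    return [
        r for r in range(n_refreshes - 1)
        if frame_shown_at(r + 1) - frame_shown_at(r) > 1
    ]


# ~16.7 seconds of output: one frame is dropped despite flat frame times.
print(skipped_refreshes(1000))  # [499]
```

When v-sync provides the pacing, frames are released on the display's own clock, so this particular drift cannot occur, which is consistent with the video.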

GPU-limited (but not VRAM-limited) scenarios typically do not result in bad stuttering.

CPU-limited scenarios often do, so if you force a GPU-limited scenario by turning up the settings or super-sampling, it can eliminate the stuttering, because the lower frame rate places fewer demands on the CPU.
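A deliberately simplified model of that effect, assuming the CPU and GPU work in parallel so the slower of the two sets each frame's time, and that GPU cost scales with pixel count (the square of the per-axis resolution scale). All timings are hypothetical.

```python
from statistics import mean


def frame_times(cpu_ms: list[float], gpu_native_ms: float, scale: float) -> list[float]:
    """Per-frame time when CPU and GPU overlap and the slower one sets the pace.

    `scale` is a per-axis resolution scale, so GPU cost grows with its square.
    """
    gpu_ms = gpu_native_ms * scale ** 2
    return [max(cpu, gpu_ms) for cpu in cpu_ms]


# Hypothetical CPU frame times with an occasional spike.
cpu = [12.0, 14.0, 20.0, 13.0]

for scale in (1.0, 1.2, 1.4):
    times = frame_times(cpu, gpu_native_ms=11.0, scale=scale)
    spread = max(times) - min(times)
    print(f"scale {scale:.1f}: avg {mean(times):.1f} ms, spread {spread:.1f} ms")
```

At native scale the CPU spikes come straight through as uneven frame times. Once the GPU cost exceeds the worst CPU frame, every frame takes the same time, which is smoother but slower on average.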

I've seen people do the same thing in the past when GPU utilization was low because they paired a fast GPU with an old CPU. People used to complain about that all the time on the official GeForce forums, as though it was a driver bug causing the issue.

Not sure if this is the place to post this, but has anyone else been having this cloud bug?

Yep, I've had that exact bug on my 3070. Someone else has had it too ITT

Thank you for posting the Hardware Unboxed optimized settings.

Interesting. I hope a fix is found or they patch it. It's a real bummer looking out over a beautiful landscape and then seeing the clouds like this lol


I have had pretty good performance. Here is 1440p with almost everything at Ultra High (I just lowered clouds one notch), and then the same settings at 4K. I am pretty sure I can hit 4K60 if I turn off AA and mess around a bit. The question, honestly, is whether it's worth it for AC:V when the XSX version runs so dang well; I would be very curious to see what settings the XSX version runs at compared to PC. It's not like WD:Legion, where I can hit 60 while the console is locked to 30, so the choice of where to play is definitely harder for AC:V. Anyways, here are my first two benches:

https://imgur.com/a/niNjCVM


Wait, so if you turn AA down to Low at 1080p, the game will effectively render at 900p? What's the freaking point of calling it AA then, if it lowers the render resolution?

It is Temporal AA. It just reconstructs the image from lower resolution samples. It's not like lowering the resolution scale setting.

Is there any news regarding a patch that will improve performance? So far it's been a solid experience for me until I get to the settlement. I was hitting 60fps, but as soon as I get to the settlement I get 54-57fps. I turned clouds and shadows to High and it feels a wee bit better.

Playing at 1080p

Specs: i9 9900k

RTX 2080 Super

32GB of RAM


Check that your 32GB of RAM is running at full speed, e.g. 3200 rather than 2333. This was my issue, and others' too.

I did check, and yeah, it's running at full speed. When I built my PC, that's the first thing I switched on haha