Why do you need 6 GHz? The human eye can't see more than 60 Hz!

Have we officially updated the joke from 30Hz? Was there a memo?? 😋

Joking aside...


Are we ever getting 6GHz CPUs?

- Thread starter Yogi


Eventually, yes. But frequency isn't the only factor in gaming performance; core count and IPC (instructions per clock) matter too.
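As a rough illustration of the IPC point, here is a minimal sketch under a deliberately simplified model where single-thread throughput is IPC x clock. The two IPC values are made-up numbers chosen for illustration, not measurements of any real CPU.

```python
# Simplified model: instructions retired per second = IPC x clock.
# Both CPUs below are hypothetical; real IPC varies a lot per workload.

def instructions_per_second(ipc: float, clock_ghz: float) -> float:
    """Idealised single-core instruction throughput."""
    return ipc * clock_ghz * 1e9

fast_clock = instructions_per_second(ipc=2.0, clock_ghz=5.0)  # higher clock, lower IPC
high_ipc = instructions_per_second(ipc=2.6, clock_ghz=4.2)    # lower clock, higher IPC

print(f"5.0 GHz @ IPC 2.0: {fast_clock / 1e9:.2f} G instr/s")
print(f"4.2 GHz @ IPC 2.6: {high_ipc / 1e9:.2f} G instr/s")
```

In this toy model the 4.2 GHz core comes out ahead, which is the sense in which clock speed alone doesn't settle the question.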

Next-gen console CPUs are significant improvements, which, while good for gaming in general, is worrisome if you like very high-fps gaming on PC.

We'll need 8-12 core, 16-24 thread beasts running at 5-6GHz+ to have even a slight chance of keeping those 144+ framerates in next-gen games, as game makers start to make use of the new CPUs while targeting 30-60fps.
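The worry can be put as frame-time arithmetic. A minimal sketch, assuming a game that is fully CPU-bound at the console frame rate and that scales perfectly with CPU throughput (real games rarely do either):

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame at a given frame rate."""
    return 1000.0 / fps

def required_speedup(console_fps: float, target_fps: float) -> float:
    """How much faster the CPU work must finish to reach target_fps,
    assuming the game is entirely CPU-bound at console_fps (an assumption)."""
    return frame_budget_ms(console_fps) / frame_budget_ms(target_fps)

for console_fps in (30, 60):
    print(f"{console_fps} fps -> {frame_budget_ms(console_fps):.1f} ms per frame; "
          f"144 fps needs {required_speedup(console_fps, 144):.1f}x the CPU throughput")
```

Under those assumptions, a game CPU-bound at 30 fps on console would need 4.8x the console's CPU throughput to reach 144 fps, and one CPU-bound at 60 fps would need 2.4x.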

Is there a heat/power issue with the 10nm, 7nm and smaller nodes that means we are saying goodbye to high clock speeds? Intel has struggled with 10nm, which Intel themselves have said won't be a major node for them. It draws a lot of power and puts out a lot of heat at high clock speeds. AMD's 7nm runs hot and doesn't hit that high a clock speed either. 14nm was doing it pretty easily, but the smaller nodes seem to be an issue. Is this the end of super high clocks?

I want to keep playing at 144+ framerates next gen. Going back to 90 will be so difficult in first-person games, and there's also RPCS3, the PS3 emulator that can unlock framerates, which needs high clock speeds. I need my 144+ fps Wipeout HD.

Is this the end of high framerates?

Ohh man, this gen is really coming to crush all our hopes and dreams.

We've had:

An end to PC gaming.

An end to lower-spec GPUs.

An end to 8GB RAM.

An end to SATA SSDs.

And now we have an end to high-framerate gaming.

Woe is me.


What games are you playing at 144Hz? If the engines are coded well, like Battlefield and Doom, you can all but guarantee they will still be coded well next gen to hit those speeds.

And pretty much all competitive shooters have really, really low... err, low settings to make it easier to hit 240Hz and above.

So since you're worried about FPS games, expect them to keep offering those very low settings that enable such high framerates.

If you're talking about AAA titles, you aren't hitting 144Hz right now anyway, so I wouldn't be too worried.

Also, as many have said, frequency isn't the be-all and end-all. Ryzen proved that: there were times when overclocking the chip actually degraded performance.

I've actually got to be honest: seeing a ~40fps delta in average framerates between a 7600K and a 9600K, and a ~50fps average delta from top to bottom with an otherwise identical setup, is making me realise that CPUs really are much more of a bottleneck than I expected. I'm a bit shocked, honestly.

If you think we're ever going to get CPUs with all cores boosting to 6GHz in our lifetimes, I think you've got the wrong idea 😋

We never thought about this, but it was always inevitable once the GHz race came to an end. Sure, we got some mileage from fancier prediction algorithms combined with more L1/L2/L3 cache, but the single-core improvement curve is flatlining with no disruptive technology in sight to save us.

This is what graphene-based processors are for, though.

Carbon nanotube CPUs are expected to hit 6GHz easily, and although it's taken a while, things are moving quicker: we went from a 200-transistor CPU in 2013 to a 15,000-transistor CPU in 2019, and the 2019 one was made with a far more manufacturing-friendly method than before.

The First Carbon Nanotube Computer

A carbon nanotube computer processor is comparable to a chip from the early 1970s, and may be the first step beyond silicon electronics.

Why This New 16-Bit Carbon Nanotube Processor Is Such a Big Deal

Scientists just created a carbon nanotube chip, but what are carbon nanotubes exactly?

These are obviously extremely primitive compared to what's in even a toaster today, and I don't expect this to be useful for at least another decade, but we'll probably have hybrid silicon/graphene CPUs before then. I'd expect the hybrids could potentially reach 6GHz too.

Lol, where is this napkin math coming from? Did you just pick 6GHz out of thin air to hit your theoretical frame cap? High-end PC CPUs are well positioned to deliver whatever performance is needed if you pair them with the correct GPU.

The issue with Ryzen is that manual overclocking is unlikely to reach the same all-core frequency that a single core can reach when left on auto; e.g. maybe you can hit 4.2GHz all-core, but leaving it on auto can push one core to 4.6GHz while the others sit at 3.9GHz.

It's a perfect example of how frequency still matters more than cores when games are reliant on a single main thread.
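To see why auto boost can beat a manual all-core overclock in a game bound by its main thread, here is a toy model. It assumes a hypothetical main thread that needs a fixed 70 million cycles per frame, that the scheduler places it on the fastest-boosting core, and that nothing else (GPU, memory latency) limits the frame rate.

```python
# Hypothetical workload: the main thread needs 70 million cycles per frame,
# and nothing else limits the frame rate (GPU and memory latency are ignored).
MAIN_THREAD_CYCLES_PER_FRAME = 70e6

def main_thread_fps(core_clock_ghz: float) -> float:
    """Frame rate when the busiest thread alone sets the pace."""
    return core_clock_ghz * 1e9 / MAIN_THREAD_CYCLES_PER_FRAME

manual_all_core = main_thread_fps(4.2)  # every core locked at 4.2 GHz
auto_boost = main_thread_fps(4.6)       # main thread assumed to land on the 4.6 GHz core

print(f"manual 4.2 GHz all-core: {manual_all_core:.1f} fps")
print(f"auto boost 4.6 GHz:      {auto_boost:.1f} fps")
```

Under these assumptions the boosted core delivers roughly 9.5% more frames; in practice memory latency does not scale with core clock, so real gains would likely be smaller.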

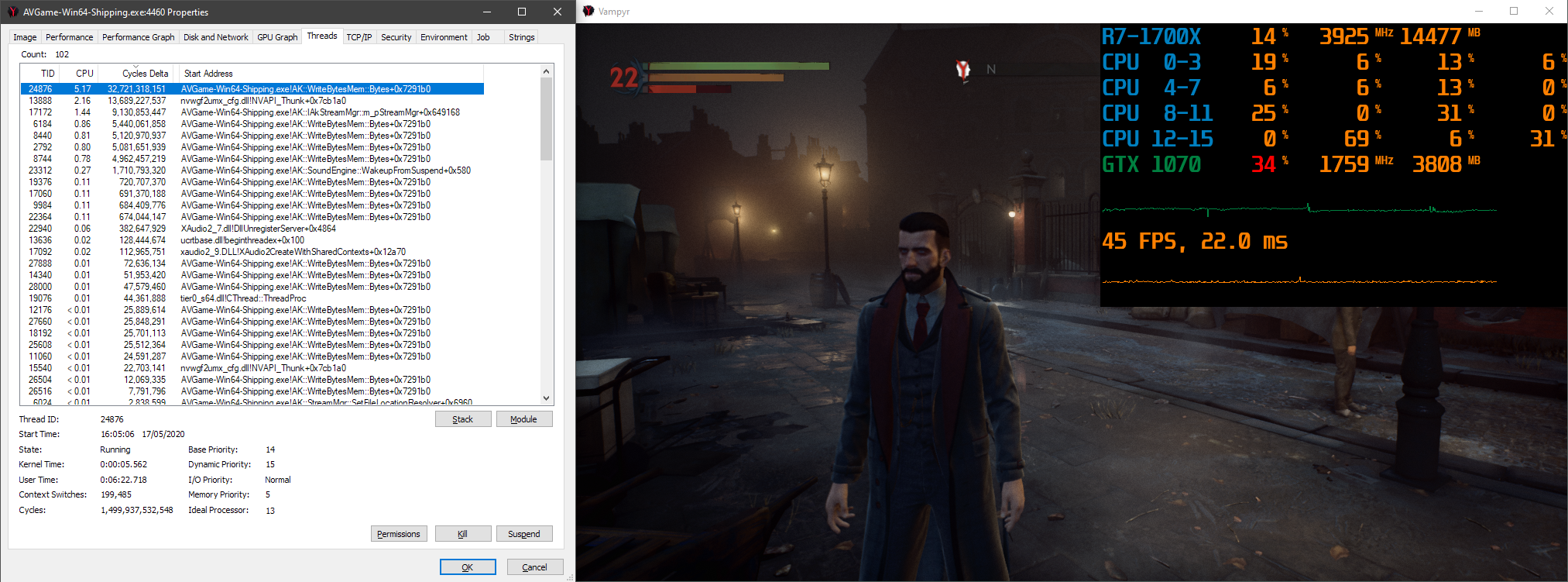

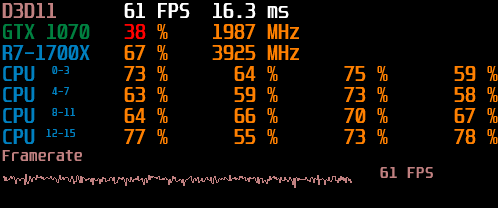

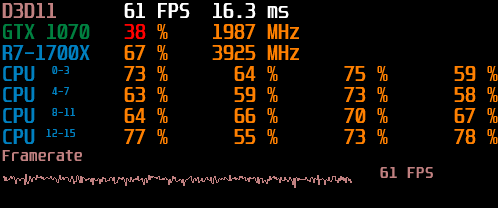

This is obviously just one game, and it's a couple of years old now, but Vampyr is an example of an Unreal Engine 4 game which clearly shows CPU bottlenecks and reliance on a single main thread if you inspect it with Process Explorer:

I had several issues with this game, but its CPU-limited performance really hurt my enjoyment of it.

Note: with an 8c16t CPU, a single core/thread running at 100% is reported as "6.25" in Process Explorer - so "5.17" is really a single thread/core working at 83% (it's set to average over 10s).

Total CPU usage for the game process itself was about 12.5% - equivalent to only two cores.
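The Process Explorer arithmetic in the note above can be written out as a small helper. This is a sketch assuming, as the note describes, that per-thread CPU figures are percentages of the whole 16-thread CPU; the function names are illustrative only.

```python
def per_thread_utilisation(cpu_percent: float, logical_threads: int) -> float:
    """Convert a whole-CPU percentage into the busy fraction of one logical thread."""
    one_thread_full = 100.0 / logical_threads  # 6.25 on an 8c16t CPU
    return cpu_percent / one_thread_full

def threads_worth(cpu_percent: float, logical_threads: int) -> float:
    """How many fully busy logical threads a whole-CPU percentage amounts to."""
    return cpu_percent / 100.0 * logical_threads

print(f"{per_thread_utilisation(5.17, 16):.0%}")  # the busiest thread
print(threads_worth(12.5, 16))                    # the whole game process
```

On a 16-thread CPU, 5.17 works out to about 83% of one thread, and 12.5% total is two logical threads' worth of work.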

Hopefully the next-gen techniques that Unreal Engine 5 is using will scale far better across cores, but if you look at most games today you'll see that performance is still reliant on a single main thread. The RE Engine is one of only a very few I can think of that don't show a large disparity between a single main thread and the work the other cores are doing.

We reached 5 GHz in 2003 with liquid nitrogen, but I don't know if we ever broke the 6 GHz barrier.

Over 8 GHz, if you look at AMD FX processors, and probably higher if you look at world records; but those CPUs are a great example of why frequency is not the only thing that matters. Here's a video of der8auer recently revisiting his old FX 8350:

High-end PC CPUs are not well positioned to deliver whatever performance is needed if you're pushing for high frame rates rather than running games at high resolutions with ultra settings.

Forget the 6Ghz figure in OP for a second.

High-framerate gaming next gen will undoubtedly be a lot harder than it is now with current-gen consoles and their anemic CPUs.

If you think you'll be able to do that in next-gen CPU-intensive games with something like a 3700X, think again.

Don't play on Ultra. While 144fps might be difficult, there is no reason to drop down to 30fps on PC if you value framerate over graphics. The settings menu is your best friend when devs are turning their backs on you.

I had Gears 5 running at 70-80fps on three screens on a 7-year-old build plus a 1080 Ti; the CPU wasn't even close to hitting its ceiling when I did a benchmark.

That's because Gears 5 runs at 60fps on a Jaguar CPU

Despite clock speed falling off, I bet there will be a consumer silicon CPU that can hit 6GHz stock turbo (at least single-core) at some point, as opposed to never ever ever.

5.3GHz is supposedly doable on the 10th-gen i9, and that's without even a shrink.

Once Intel shrinks, they will likely have Thermal Velocity Boost hitting 5.5GHz. With a ballsy OC, maybe 6GHz will be possible on air.

Hitting 60 is the easy part; it's getting double or triple that where problems really start to show up.

It's why AMD's 3300X is such a frikken steal: hitting 60 will be easy work for it, and most people game at 60.

I don't even have a 144Hz screen anymore. With the bells and whistles on I could never get that high, so I "downgraded" to a bigger 1440p screen so I could squeeze in more particles and still hit just above 60Hz.

Yeah I'm just thinking stock turbo boost, and I'm thinking it's going to be a near thing as we get to the smallest viable nodes on silicon. It might be the kind of thing we finally reach as silicon processors are going away.

Probably when we change from silicon to direct-bandgap semiconductors like GaAs. The charge mobility is many times higher, and they should produce far less heat, as the transitions are much more direct and produce almost no vibrations (and thus heat) in the crystal lattice.

These wafers are like 5 to 10 times more expensive, though.

The problem is that as process nodes shrink, it gets harder to reach higher frequencies, partly because frequency is correlated with the gate voltages you can scale to. Then there is the greater power density: as transistors are packed closer together, you need to dissipate all that power somewhere.

Intel is reaching all those 5GHz clocks because they're forever stuck on 14nm.

That's a lot of assumptions in the OP. These CPUs are good enough to no longer be bottlenecks. That doesn't mean that every game is going to be pushing them to 100% utilization. Very few games will do that and you weren't getting 144 fps in those games on current gen anyway (looking at you AC). If a game is running at 30 FPS on next gen consoles it's because it's GPU bound, not CPU bound.


Hitting 60fps on a 144Hz screen is still better than hitting 60fps on a 60Hz screen, assuming you have some form of VRR, of course.

1440p was better than 1080p knowing I was never going to hit 144.

And I can use LFC (Low Framerate Compensation) on my monitor, so I'm all set until a reasonably priced 4K 32" IPS or OLED monitor comes along.
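LFC is the FreeSync feature for frame rates that drop below a monitor's VRR floor: each frame is shown more than once so the panel's refresh rate stays inside its variable range. The sketch below is a simplified model of that idea, not AMD's actual driver logic, and the 48-144 Hz window is a hypothetical example.

```python
import math
from typing import Optional, Tuple

def lfc_refresh(fps: float, vrr_min: float, vrr_max: float) -> Optional[Tuple[int, float]]:
    """Return (repeat_count, refresh_hz) so each frame is shown repeat_count times
    at a refresh rate inside the VRR window, or None if no whole multiple fits."""
    if fps >= vrr_min:
        # Inside (or above) the window: no repeats; the panel tops out at vrr_max.
        return 1, min(fps, vrr_max)
    k = math.ceil(vrr_min / fps)  # fewest repeats that lift the refresh above the floor
    if fps * k > vrr_max:
        return None               # window too narrow for any whole multiple
    return k, fps * k

print(lfc_refresh(40, 48, 144))  # repeat each frame twice
print(lfc_refresh(20, 48, 144))  # repeat each frame three times
print(lfc_refresh(40, 48, 60))   # narrow window: no valid multiple
```

In this model, a narrow VRR window can leave no valid multiple at all, which is why the function can return None.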


I wonder, if we're stuck on 7nm for a while (I assume even longer) and we start using chiplets (more than AMD does now) and worry a bit less about inter-chip latency for specific functions, leading to somewhat larger chips, whether there will be a point where we do get a bit more out of frequencies.

lol okay not the best example perhaps :p But it was an old build and on one screen I could still see 100+ fps on some games. I eventually swapped out most internals but that was because of music production, probably just would've swapped out the GPU if I had kept it for games.

I watch a ton of videos every week comparing and benchmarking CPUs, and even in stuff like AC Odyssey the most limiting factor is the GPU.

From what I've seen, it's impossible to reach something like a stable 144 fps in that game (or similar single-player games) right now, all due to a CPU limit (with an RTX 2080 Ti at 1080p and low settings).

As an example: https://www.pcgamer.com/why-actually-hitting-144fps-is-so-hard-in-most-games/

There's nothing inherent about high-FPS gaming that requires clock speed over more cores. It's entirely up to the games/apps and how they were built, and many of them were not designed to scale across that many cores. We can partially thank Intel for that: stagnating for years and releasing the same 4-core/8-thread parts with slight improvements didn't give devs much reason to target anything else. I would think parallelization becomes a bigger focus going forward as the industry moves to a model of more cores.

Agreed, it has nothing to do with clock speeds per se. But even with better multithreading support, CPU demands are going to rise.

The OP's premise is that next-gen console games will be designed to be CPU-bound at 30-60 fps. The implied argument is that the only reason current games are not CPU-bound at low FPS on PC is because game developers are designing around current-gen console CPUs, which have much weaker CPU performance. The OP's argument assumes that when consoles have faster CPUs the new games will still be CPU-bound (and specifically frequency-bound) at low fps on these faster CPUs.

There are many reasons why this may turn out to be a false premise:

- Games will likely want to continue supporting lower-end hardware for years after the new consoles release. This includes both current-gen consoles as well as lower-spec PCs, like laptops.

- Designers may want to offer 120 fps on consoles now that they have HDMI 2.1, so they may not want to design around being CPU bound at low framerates.

- Developers simply might not come up with enough work for the CPU to do for it to be the bottleneck at low fps.

- Game engines may be good enough at parallelism that frame rates are not frequency-bound in low ranges. In this case it may be good enough to just have more cores, and you can already buy CPUs like the 3900X that have 50% more cores than the consoles.

Of course you are right that not all games will target next-gen consoles exclusively (and some may target high frame rates), and thus CPU demands will not be very high for those games. This thread is more about those games that will actually use the power though.

The same goes for the "not enough CPU work" argument; it's valid for some games, but there will be games that push the hardware, including the CPU, and not necessarily because it's actually always needed. As a software engineer, I can only say that it is terribly easy to bring even the strongest hardware to its knees, and optimization comes at the cost of development time. Game code will be optimized to the point where the game runs at the target frame rate, but often not much further.

Framerates aren't bad; your programs of choice just aren't multithreaded efficiently. That's a them issue, not a core speed issue.

Most bet on this. In 2008's QuakeCon keynote, Carmack was openly questioning multithreading.

Anyway, not any time soon; it's a waste for them to try. Crytek misbet on this future, and it's why Crysis 1 still doesn't run notably better on newer CPUs.

As cores go up and multithreading increases, I'm not sure you *need* a 6GHz core from their perspective?

My PC monitor is 2560x1080 at 144Hz, so even with next-gen games, once I get a 3080 Ti it should do 144fps in most games with no problem, or at least come fairly close.

I have my OLED for 4K gaming, but ultrawide for me is better than 4K.

Your CPU will have to be able to feed those 144 frames to your 3080 Ti in next gen games though


My 9900K is at 5GHz on all cores; I'm confident it will do fine.

We're not going to get 6GHz cores with silicon because we've gone past the sweet spot of frequency vs. process node. You need voltage to reach a given frequency, to overcome the capacitance of the wires that make up the CPU. You need smaller process nodes both for Dennard scaling and to bring the threshold voltage down, but at the same time you need to avoid shrinking the node to keep current leakage, and the power increases that accompany it, down. You can't just throw voltage at the problem, because not only will you get more heat, you'll also get electromigration, where the copper interconnects are literally pulled apart by the higher current that comes with the extra voltage.
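The voltage/frequency trade-off above follows from the textbook first-order model of CMOS switching power, P = α·C·V²·f. A minimal sketch, assuming hypothetical voltages and placeholder activity/capacitance values; leakage power, which the post also mentions, is not modelled.

```python
def dynamic_power_w(activity: float, capacitance_f: float, voltage_v: float, freq_hz: float) -> float:
    """First-order CMOS switching power: P = alpha * C * V^2 * f, in watts."""
    return activity * capacitance_f * voltage_v ** 2 * freq_hz

# Placeholder activity factor and switched capacitance for a hypothetical chip.
ALPHA, CAP_F = 0.2, 1e-9

stock = dynamic_power_w(ALPHA, CAP_F, 1.30, 5.0e9)   # hypothetical 5.0 GHz @ 1.30 V
pushed = dynamic_power_w(ALPHA, CAP_F, 1.50, 6.0e9)  # hypothetical 6.0 GHz @ 1.50 V
print(f"{pushed / stock:.2f}x the switching power for 1.2x the clock")
```

Because voltage enters squared, the roughly 15% voltage bump in this example costs about 33% more power on its own; combined with the 20% clock increase, switching power rises by about 60%.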

We're seeing a plateau in frequency because Dennard scaling is broken. That's what gave us clock speed increase after clock speed increase for so long. Now, because we're getting into such small features, quantum effects are starting to take over, and we're seeing electrons quantum-tunneling through the gate dielectric. Without some new materials that let us switch at ridiculous frequencies at low voltages, we're kind of stuck with MOAR CORES as the strategy for performance increases.
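Why broken Dennard scaling stalls clocks can be shown with the idealised scaling rules. A minimal sketch, assuming the textbook constant-field model and ignoring leakage: when supply voltage shrinks with each node, power density stays flat; once voltage stops shrinking, the same shrink at a higher clock raises power density.

```python
def power_density_change(k: float, voltage_scales: bool) -> float:
    """Relative power density after one idealised node shrink by linear factor k.

    Textbook constant-field (Dennard) rules: capacitance ~ 1/k, frequency ~ k,
    transistor area ~ 1/k^2, and supply voltage ~ 1/k only if voltage_scales.
    Leakage is ignored.
    """
    capacitance = 1 / k
    frequency = k
    voltage = 1 / k if voltage_scales else 1.0
    power_per_transistor = capacitance * voltage ** 2 * frequency  # P = C V^2 f
    area_per_transistor = 1 / k ** 2
    return power_per_transistor / area_per_transistor

k = 2 ** 0.5  # ~0.7x linear dimensions, a classic "full node" shrink
print(f"voltage scales with the node: {power_density_change(k, True):.2f}x power density")
print(f"voltage stuck:                {power_density_change(k, False):.2f}x power density")
```

Under these idealised rules, the same shrink keeps power density flat when voltage scales and doubles it when voltage is stuck. That extra heat per square millimetre is why higher clocks stopped coming for free.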

This isn't a simple thing because as a species we've invested trillions of dollars into making silicon fabrication better. To switch to a new base material like GaAs or GaN is going to cost trillions more. It's going to be a slow going transition and we might not ever get the capital required to make the transition at all.


In 2008 Carmack was making RAGE and id Tech 5. We know how that turned out.

Stable 6GHz at room temperature is not possible at this size.

The problem I feel, and what the OP is trying to talk about, is this: if games are efficiently multithreaded in the console version while targeting 30-60FPS, so much so that they are effectively topping the CPUs out (that's a big IF), then how much room for improvement is left on the PC side to hit high FPS like 120+? Additionally, if they are not efficiently multithreaded on consoles, then PCs don't have enough of an advantage over consoles in that area to simply brute-force that high FPS either. But I'm sure we'll start seeing more and more 12-16 core CPUs and up, which will provide enough raw power to push beyond the console target FPS. I just hope Intel ups its CPU game; it's been seriously lacking recently.

6GHz is not physically possible on silicon processors at room temperature due to a whole list of physics issues that occur at the atomic level.

You have to go sub-zero to achieve that.

However, it's not as if we lack CPU performance in the first place; the major reason for a lot of poorly performing software is simply inefficient code.

Most developers can tell you that they're not hired to do things in an optimised and smart manner; instead it's "get it out the door as fast as possible so we can make a profit".

This right here

Getting 6GHz is meaningless when we have IPC improvements. People pushed certain processors past these clocks and still saw little gain against better, more efficient processors.

I'd say focus on parallelism and then IPC over sheer clock rates.


Because they got complacent, they're two years out from having decent products, unless they figure out something amazing in one year, which is unlikely. Two years is more realistic, but AMD's multicore tech is scaling way better and more effectively. In three years we might see Intel be as fierce as in the old days. The 10k series isn't inspiring much confidence in me. Shame; I normally go Intel, but performance beats staying with one side.

Adding more cores doesn't help unless the software can use them.

A 64-core Threadripper system is not going to be any faster than an 8-core 3700X in the majority of games - in fact it will be slower in most of them.

Look at the Vampyr example I posted above.

It has spawned a lot of threads, but there are fewer than eight which are actually doing any real work, with half of the game's CPU workload placed on a single thread.

The total amount of work is only 12.5% of what that CPU is capable of, but because so much of it is placed on a single thread, the game can't even reach 60 FPS, at 720p no less. It's worse in ultrawide.
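Amdahl's law is one standard way to see why more cores don't rescue a game like this. A minimal sketch, assuming (from the Vampyr profile above) that about half of the CPU work is stuck on one thread and that the other half splits perfectly across cores; real workloads are messier than either assumption.

```python
def amdahl_speedup(serial_fraction: float, cores: int) -> float:
    """Amdahl's law: best-case speedup when serial_fraction of the work
    runs on one thread and the rest splits perfectly across `cores`."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / cores)

# Assumption: roughly half the work sits on the main thread.
for cores in (8, 16, 64):
    print(f"{cores:>2} cores: {amdahl_speedup(0.5, cores):.2f}x")
```

With half the work serialised, even unlimited cores cap out at 2x in this model, and 64 cores gain only about 11% over 8 (1.97x vs 1.78x). That is before accounting for the lower per-core clocks high-core-count chips typically run, which is how a Threadripper can end up slower.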

Now I certainly hope that games built for next-gen consoles scale better, but the PS4/XB1 had weak 8-core CPUs where multi-threading was important to get the best performance, and yet few games built for those systems actually scaled to anything like that number of cores.

That's why we have many games which are considered to be "bad ports" that can barely scale to 60 FPS despite the huge difference in performance between the consoles' netbook CPU and high-end desktop CPUs.

Think how much worse it will be if that doesn't change and games are built to run at 30 FPS without good multithreading on the next-gen systems' Zen 2 CPUs. With the XSX running at 3.8 GHz, most desktop Ryzen systems are not even going to be 500 MHz faster.
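To put that clock gap in frame-rate terms, here is a best-case sketch. It assumes the same Zen 2 IPC on console and desktop (ignoring cache and memory differences), a desktop chip exactly 500 MHz faster, and a game bound by one thread whose frame rate scales linearly with clock.

```python
CONSOLE_CLOCK_GHZ = 3.8  # XSX CPU clock quoted in the post

def clock_scaled_fps(base_fps: float, base_ghz: float, new_ghz: float) -> float:
    """Frame rate if a single-thread-bound game scaled linearly with clock
    on the same core architecture (best case; memory latency ignored)."""
    return base_fps * new_ghz / base_ghz

desktop_ghz = CONSOLE_CLOCK_GHZ + 0.5  # assumed 500 MHz advantage
print(f"{desktop_ghz / CONSOLE_CLOCK_GHZ - 1:.0%} higher clock")
print(f"30 fps on console -> {clock_scaled_fps(30, CONSOLE_CLOCK_GHZ, desktop_ghz):.1f} fps")
```

Even in this best case, a 13% clock advantage lifts a 30 fps console target only to about 34 fps.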

id Tech 5 is actually an engine that scales very well to high core-count CPUs due to its virtual texturing system. One of the reasons the games didn't perform well on some systems is that this also meant the baseline CPU requirements were higher than most.

It's one of the engines where I saw the largest difference in performance on my R7-1700X compared to an i5-2500K, and is what makes me wonder if Unreal Engine 5 will scale much better with CPU cores than UE4 did.

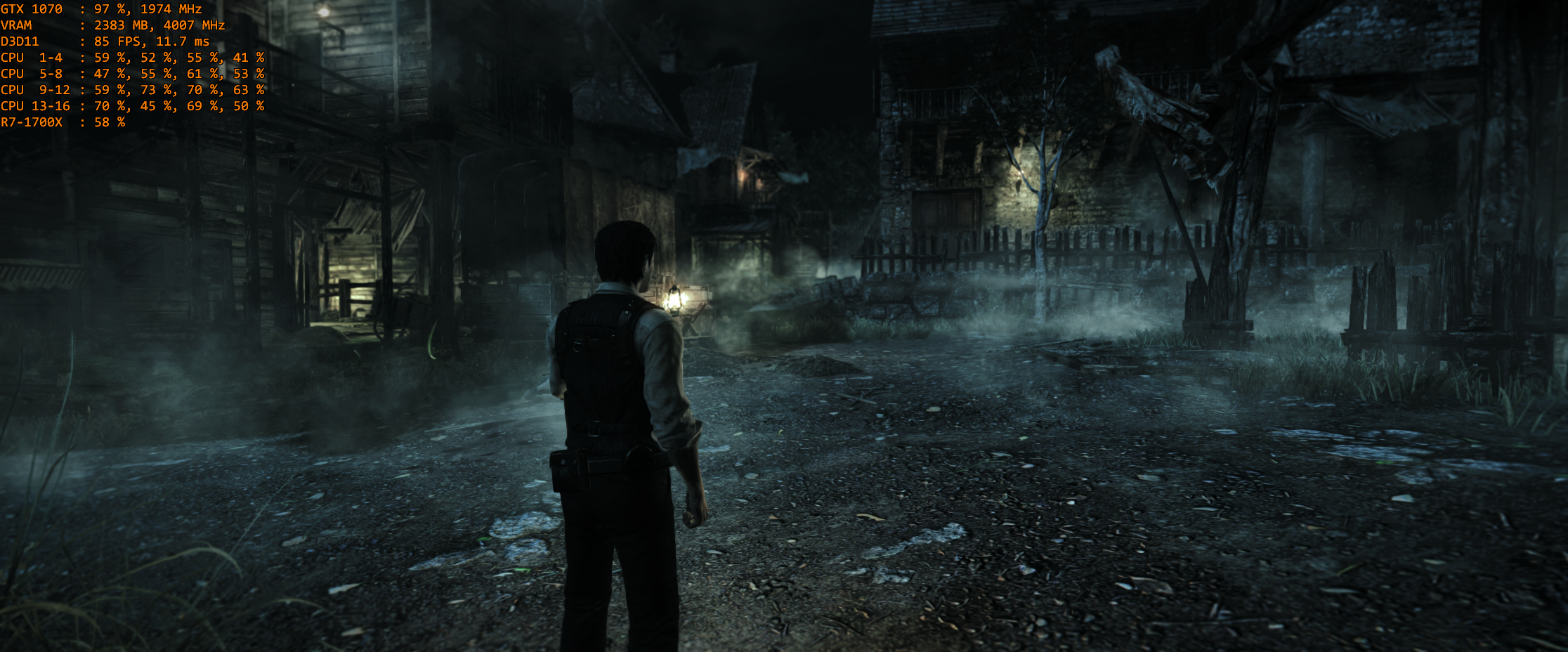

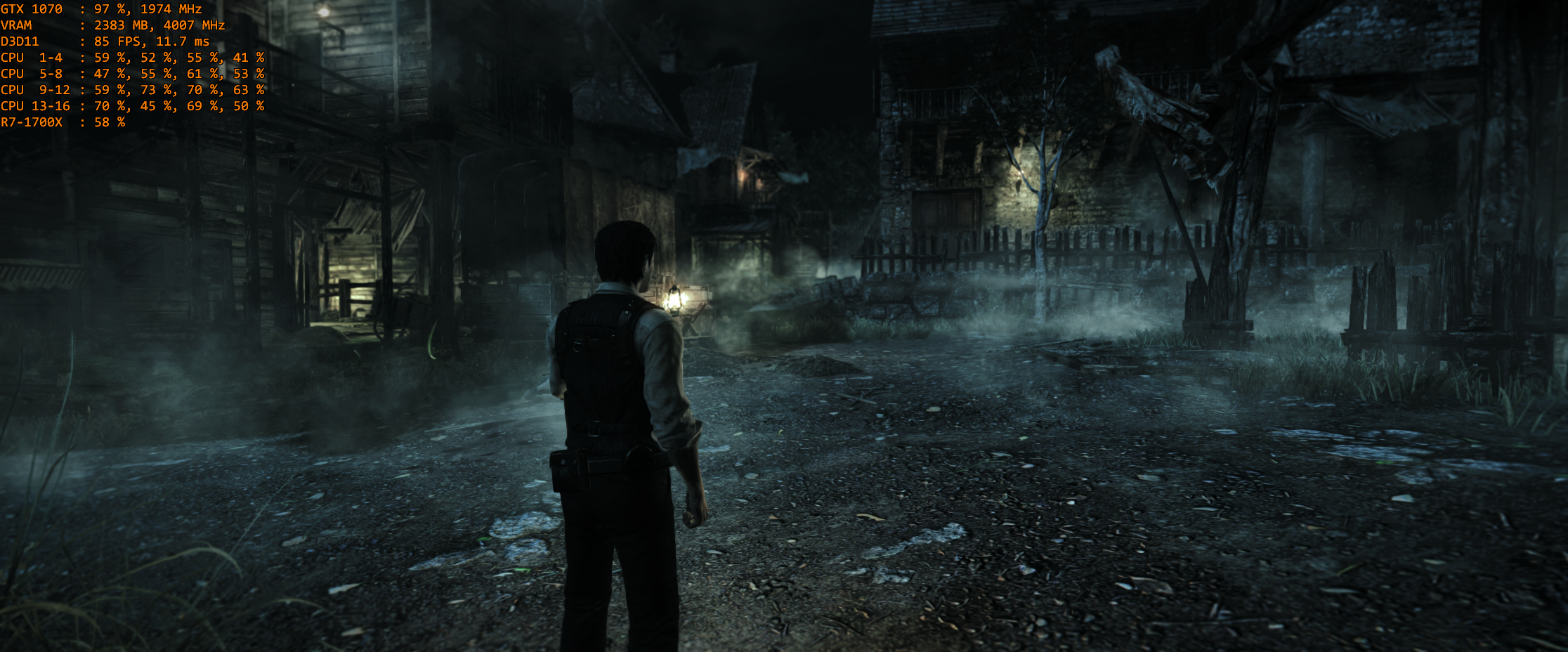

Here you can see that The Evil Within is loading up all cores fairly evenly, with the average being 58% and highest only being 73%:

That's a game which struggled to run at 30 FPS on current-gen consoles, and I'm being held back by my GPU at ~3x the frame rate. It could probably run at 120 FPS or higher if I had a faster GPU or turned down the resolution/quality settings.
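The "held back by my GPU" situation fits a simple bottleneck model where the slower of the CPU and GPU stages sets the frame rate. A minimal sketch, ignoring frame pacing and render-queue depth; the 90 fps GPU limit loosely follows "~3x" a 30 fps console target, and the 130 fps CPU ceiling is a hypothetical number, not a measurement.

```python
def delivered_fps(cpu_fps_limit: float, gpu_fps_limit: float) -> float:
    """The slower of the CPU and GPU stages sets the frame rate (simplified model)."""
    return min(cpu_fps_limit, gpu_fps_limit)

def limiting_stage(cpu_fps_limit: float, gpu_fps_limit: float) -> str:
    return "CPU" if cpu_fps_limit < gpu_fps_limit else "GPU"

cpu_limit = 130.0  # hypothetical CPU ceiling
gpu_limit = 90.0   # roughly 3x a 30 fps console target
print(delivered_fps(cpu_limit, gpu_limit), limiting_stage(cpu_limit, gpu_limit))

# Lowering resolution/quality mostly raises the GPU limit; the CPU limit barely moves.
gpu_limit_low_res = 160.0
print(delivered_fps(cpu_limit, gpu_limit_low_res), limiting_stage(cpu_limit, gpu_limit_low_res))
```

Under these assumptions, a faster GPU or lower settings would move the limit from the GPU to the CPU, at which point core count and clock speed start to matter.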

That part of my post was assuming that the cores were being utilised properly even on consoles and topping out at 30 or 60FPS, which doesn't leave PC much room for high framerates unless it has more cores (which are also utilised).

Even if they are fully utilizing all eight Zen 2 cores on the next-gen consoles, it doesn't mean that the games will scale beyond 8 cores. Maybe they scale to 8 and no more.

As I understand it, a lot of engines are moving to "job systems" where multi-threading is handled automatically and should theoretically scale without developer intervention.

But some games this generation which appear to scale to higher core counts actually started performing worse when they have access to too many cores/threads. There's a point where you may not be able to go any more parallel, and splitting up tasks into smaller jobs starts to hurt more than it helps.
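The "splitting into smaller jobs starts to hurt" effect can be illustrated with a toy cost model. It assumes, purely for illustration, 8 ms of evenly divisible CPU work per frame, 8 worker threads, and a fixed 0.05 ms scheduling/synchronisation cost per job that is paid serially. None of these numbers come from a real engine, and real job systems overlap much of that overhead.

```python
import math

def frame_cpu_time_ms(work_ms: float, jobs: int, threads: int, overhead_ms: float) -> float:
    """Toy job-system cost model (all parameters hypothetical).

    The frame's CPU work is split evenly into `jobs` pieces that run in waves of
    `threads` at a time, and each job adds a fixed scheduling/sync cost that is
    assumed to be paid serially (e.g. contention on one shared queue).
    """
    per_job_ms = work_ms / jobs
    waves = math.ceil(jobs / threads)
    return waves * per_job_ms + jobs * overhead_ms

for jobs in (1, 4, 8, 16, 64):
    t = frame_cpu_time_ms(work_ms=8.0, jobs=jobs, threads=8, overhead_ms=0.05)
    print(f"{jobs:>2} jobs: {t:.2f} ms")
```

With these made-up numbers the frame time drops from 8.05 ms with one job to 1.40 ms with 8 jobs, then climbs back to 4.20 ms with 64 jobs. Past a certain point, finer splitting costs more than it saves.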

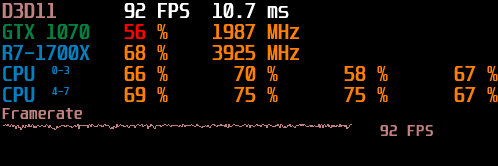

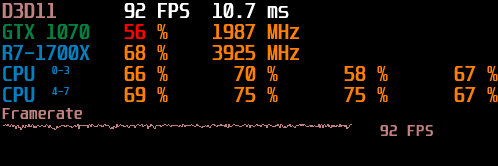

Deus Ex: Mankind Divided performs considerably worse with access to 8c16t than when it's limited to 8c8t on my R7-1700X.

The average CPU usage is about the same - so it is splitting up the work across more threads - but it actually hurts performance to do so.

8c16t: 61 FPS

8c8t: 92 FPS

This was tested in the exact same area of the game, and nothing else changed except for disabling SMT on the CPU. I checked the results multiple times.
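The two measurements are easier to compare as frame times. This sketch only converts the poster's numbers; it doesn't explain why SMT hurts in this game.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps

smt_on, smt_off = 61.0, 92.0  # the poster's measurements, same scene
print(f"SMT on:  {frame_time_ms(smt_on):.1f} ms/frame")
print(f"SMT off: {frame_time_ms(smt_off):.1f} ms/frame")
print(f"Disabling SMT: {smt_off / smt_on - 1:.0%} more fps, "
      f"{1 - frame_time_ms(smt_off) / frame_time_ms(smt_on):.0%} less time per frame")
```

Going from 61 to 92 FPS means about 51% more frames, or each frame finishing in roughly a third less time (16.4 ms down to 10.9 ms).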

You're talking about the same thing that I am.

I'm saying that if the cores are utilised on consoles then the only way to push high framerate on PC would be if that PC has extra cores that are also utilised....which is going to be rare. Hence playing at high framerate may be more difficult next generation.

It's easier for hardware. It's far more difficult for software. That's why most games are still bottlenecked by a single main thread.

It's more difficult, sure, but speaking as someone who likes writing multithreaded code, it's not that much more difficult. It's just a different mindset you have to think in and a different architectural pattern you have to design for.

The bigger problem is that for the longest time Intel basically fucked the computing market by stagnating concurrent development with limited core and thread counts on their chips, artificially restricting higher counts to their top-of-the-line CPUs that cost a small fortune. Because of that, people just don't have the cores on their machines for developers to really take advantage of concurrency. And no, 4-core CPUs don't count, because after the OS dedicates a core or two and background programs steal another, you are down to 1-2 cores and 4 threads to work with.

Without that, it's a much harder argument to make to the higher-ups that they should take longer to develop the framework for something that will only affect a small percentage of their consumer base. So it's only now, after Intel has absolutely got its ass kicked and 4-8+ cores and massive thread counts are becoming mainstream, that the argument is actually worth making for developers.


Even though it is true that GaAs and carbon are considered good candidates for new materials, a change in material isn't a magical salve that will single-handedly enable another long period of exponential growth. For one, there will be no equivalent of Moore's Law for those materials; if/when the price/performance economics of those materials surpasses silicon, the transistors will be small enough that a traditional Moore's-style node shrink will not be possible. The bond length and crystal structures are fixed, period, so every new node, like now with silicon, will require a lot of very expensive R&D.

To be honest, I am inclined to believe that the future will be better described by specialized, application-specific architecture, like GPUs or the Google TPU, working in parallel both in a single system and via the cloud.

Yeah, it's a valid concern if you prefer high frame rates. I can't go any lower than 90, and even that is a bummer. 120+ really is the sweet spot; at that point I find it very difficult to detect fluctuations.

This isn't true at all if you are chasing 120+ frame rates. Most of us are still on 1080p displays for just that purpose. At 90+ I am almost always CPU-bottlenecked.

I was referring to the consoles. My point was that the consoles are very rarely going to be pushing anywhere close to 100% utilization, so you're not going to need a "12 core 24 thread 6ghz CPU" for high-framerate gaming on PC.