We actually know more about RDNA2's architecture than about Ampere's, following Microsoft's Hot Chips presentation. There was nothing in there about improved IPC, and other than the addition of RT hardware in the TMUs, the architecture was an exact replica of RDNA1.

If Series X was faster than a 2080Ti we'd know about it already. So I'm not buying those rumours.

I do believe the 80 CU part will be faster than a 3070 in rasterization, but I don't believe it will reach 3080 levels. Pricing is obviously going to be key as well. Due to lower RT performance, poor brand prestige and, crucially, a significant feature deficit, AMD need to price equivalent rasterization performance lower than Nvidia. If it's slower than a 3080, then that caps the maximum price at $500 in order for it to be competitive.

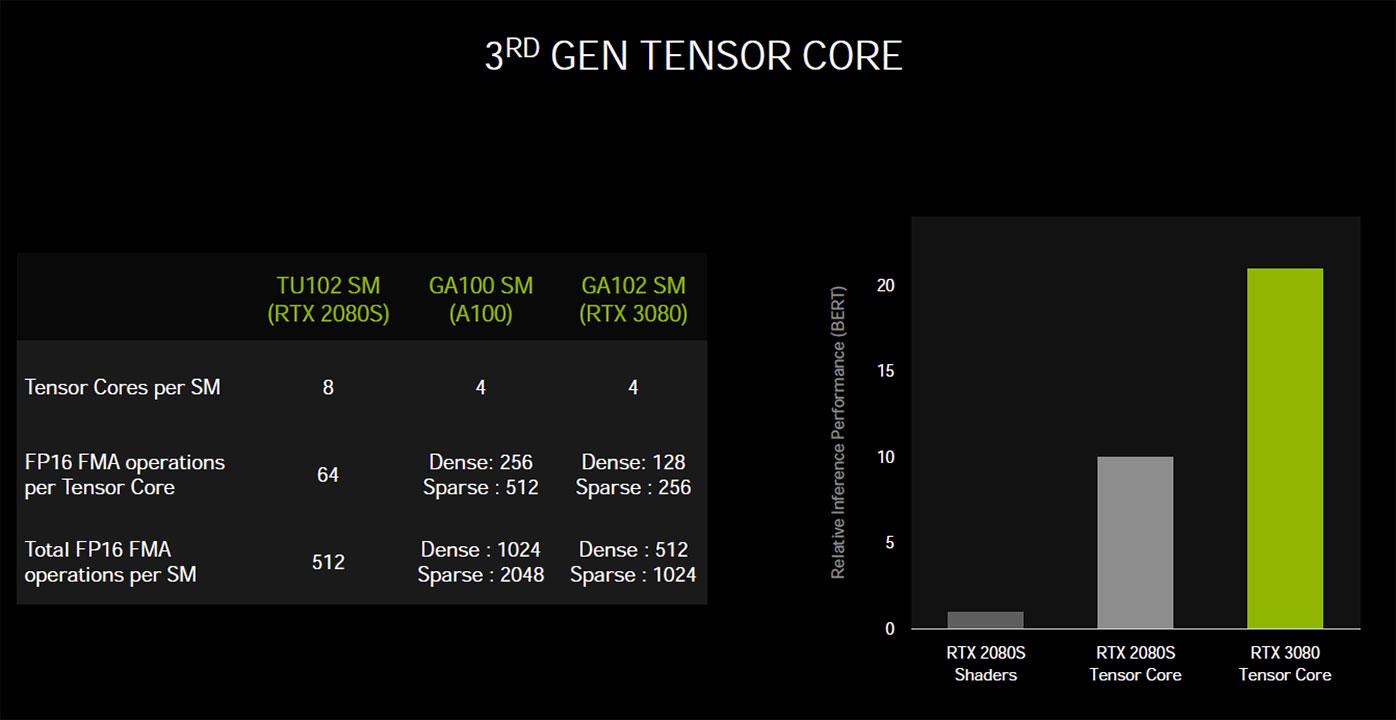

I still wouldn't personally buy a GPU with lower RT performance and no Tensor cores, mind you, but I understand why others would. If you can get ~25% more rasterization performance for the same price, then there'll be a market for it.
