A process node being "scheduled" for a certain date says little about when a large-die PC GPU built on it will actually go into production.

Also note that they aren't even committing to N5 for RDNA3 at this point, which suggests it's likely more than a year away.

I don't need a new GPU now nor have I bought one since 2019.

8 and 10 GB won't be enough for the whole generation, but that hardly matters when we're talking about 2022. The generation will last until 2026-27.

Not going to happen due to the 384-bit bus. It's either 11 or 12 GB for the 3080Ti, which isn't all that different from the 3080's 10 GB and won't be enough for the generation either.
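For anyone wondering where the 11 or 12 GB figure comes from, here's the rough arithmetic as a Python sketch. It assumes each GDDR6X chip has a 32-bit interface and 1 GB (8 Gb) of capacity, with one chip per 32-bit slice of the bus (no clamshell mode). The 352-bit cut-down configuration and the `vram_gb` helper are illustrative assumptions, not confirmed specs.

```python
# Hypothetical helper illustrating bus width -> VRAM capacity.
# Assumptions: 32-bit interface per memory chip, 1 GB per chip,
# one chip per 32-bit slice of the bus (no clamshell configuration).
CHIP_BUS_BITS = 32
CHIP_CAPACITY_GB = 1


def vram_gb(bus_width_bits: int, chip_capacity_gb: int = CHIP_CAPACITY_GB) -> int:
    """Return total VRAM in GB for a given bus width under the assumptions above."""
    if bus_width_bits % CHIP_BUS_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHIP_BUS_BITS  # one chip per 32-bit slice
    return chips * chip_capacity_gb


if __name__ == "__main__":
    # 320-bit is the 3080's bus; 352-bit is an assumed cut-down 384-bit bus.
    for bus in (320, 352, 384):
        print(f"{bus}-bit bus -> {bus // CHIP_BUS_BITS} chips -> {vram_gb(bus)} GB")
```

With 1 GB chips, a full 384-bit bus lands at 12 GB and a trimmed one at 11 GB; getting meaningfully more would need denser chips or a clamshell layout, which this sketch doesn't model.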

Note that the RX6800 won't be enough for the generation either, but for different reasons, so it's kind of a pointless comparison.

To me this is entirely dependent on your target resolution and how much you need to max out RT.

Consoles are not going to come close to matching PC RT settings.

If you plan on sticking with a 1440p monitor and don't need to max out RT, a 6800xt or 3080 will absolutely last you 5+ years.

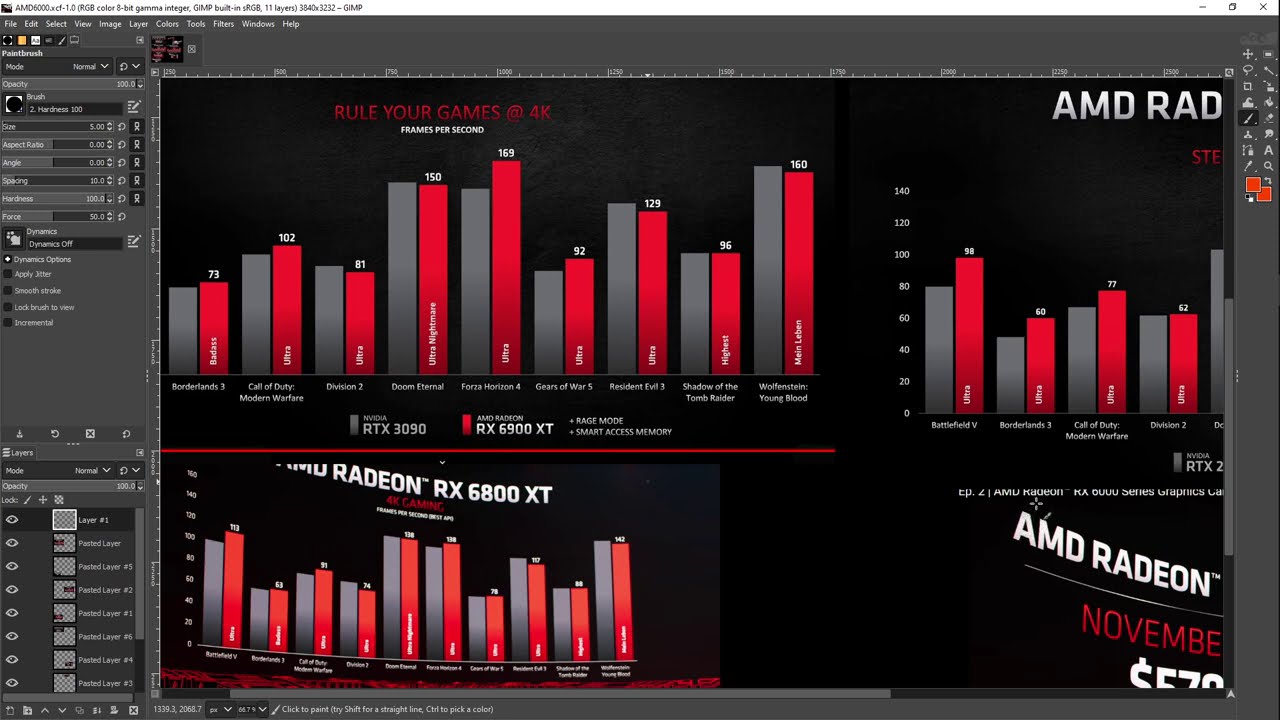

If you are targeting no-compromise, maxed-out 4K 60 gaming, meaning you never want to turn down any settings at all (which is a silly, silly standard to have), then sure, a 3080/6800xt will likely need to be upgraded around the time of the mid-gen console refreshes, 3-4 years from now. But even then, I don't see those refreshes meaningfully surpassing a 3080/6800xt.