If the rumors are right, they are one gen behind, on par with Turing, and their "DLSS" solution is faster but not as good. I personally don't care that much about raytracing. If it's as good as on consoles, that's good enough.

Well, they didn't show any RT benchmarks, unlike Nvidia in their presentation, so I don't think it's too much of a stretch to assume that they lag behind in that aspect.

- Status

- Not open for further replies.

AFAIK 16GB of RAM is completely useless at 1440p, so I don't know why AMD didn't go with 8 or 10GB for the 6800 and make it cheaper.

If the 6800 had 8GB and went for $480 it would be a lot more attractive, at least to me.

True, but then you have to wager: is $500 extra worth it for the limited number of games that use it, and will AMD get their own solution up and running soon?

You have no valid issue. I've explained this. Drawing logical conclusions is fair game on a discussion board.

The 3090 is now completely out of pocket. Nvidia can't even drop the price this soon as they are actively fulfilling orders.

BUT, the RT performance comparison between the 6900 XT and RTX 3090 could tell an entirely different story. What if the 3090 just bulldozes the 6900 by some ridiculous percentage?

I found this presentation rather weak, they hardly touched on the games so now we have to wait for real world performance differences.

The thing is that these cards are likely to have better RT performance than the consoles, for which developers will optimize RT. The games will look great and optimal, you might just not get that "ray tracing just blew my mind"-moment.

No hardware-supported DLSS actually has me worried. The UE5 demo ran at 1440p/30fps on a PS5, meaning that some sort of upscaling will be necessary for high-end early UE5 games running at 60fps+. Now, we know that Microsoft is working on their own software AI super-resolution upscaler, but will that be on PC? Maybe... probably, even, I'd say. What about Sony, though?

I really don't like that AMD doesn't seem to have an in-house AI upscaling solution implemented in RDNA2.

They should've dropped the VRAM down to 12GB for the 6800 and gotten the price down to $479 or $499. Having a SKU just $70 lower than 6800XT makes no sense.

12GB would mean a narrower memory bus; it would need to be 8GB or 16GB to keep the memory bus the way it is...
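The bus-width point is easier to see with the arithmetic spelled out. A minimal sketch, assuming the usual GDDR6 arrangement of one 32-bit interface per memory chip, chip densities of 1GB or 2GB, no clamshell mode, and 16 Gbps as an example data rate; the function names are hypothetical.

```python
# Sketch: how GDDR6 bus width constrains VRAM capacity and bandwidth.
# Assumptions (not from the thread): each chip has a 32-bit interface,
# chips come in 1 GB or 2 GB densities, and clamshell mode (two chips
# sharing one 32-bit channel) is ignored.

CHIP_BUS_WIDTH_BITS = 32
CHIP_DENSITIES_GB = (1, 2)


def possible_capacities(bus_width_bits: int) -> list[int]:
    """Return the VRAM sizes (GB) reachable on a bus of the given width."""
    if bus_width_bits % CHIP_BUS_WIDTH_BITS:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHIP_BUS_WIDTH_BITS
    return [chips * density for density in CHIP_DENSITIES_GB]


def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bus width x per-pin data rate / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8


for width in (192, 256):
    print(f"{width}-bit bus -> {possible_capacities(width)} GB, "
          f"{peak_bandwidth_gb_s(width, 16.0):.0f} GB/s at 16 Gbps")
```

Under these assumptions a 256-bit bus gives 8GB or 16GB (512 GB/s), while 12GB means dropping to a 192-bit bus of six 2GB chips, with 25% less bandwidth (384 GB/s) at the same data rate.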

I was dead set on a 3080; now I want a 6900 XT. If stock isn't an issue and the 6900 XT price really is $999, it's hard to pass that up. Heck, most AIB 3080s are the OC models that are $800+ anyways. I've never owned an AMD card before; I've been an NVIDIA / EVGA owner since the 5 series cards lol. Now my PC might be full team red, with a 3700X in there now.

In regard to the 3070 vs. the 6800, I think having less RT performance is going to hit performance harder and faster than having less VRAM, at least at 1440p. In 1-2 years I expect pretty much every single AAA game to be using RT, given that consoles support it.

This also applies to the 6800 XT, where the rumours have it at 2080 Ti performance for raytracing, so if that is true then these cards won't age well at all.

You have no valid issue. I've explained this. Drawing logical conclusions is fair game on a discussion board.

My overall issue with the post is valid, though I did jump the gun on calling it trolling after engaging further with the poster.

I'd be worried if they weren't... but they really needed that tech to be ready for reveal today. DLSS is becoming increasingly common in the biggest triple-A releases. I'm mainly buying a new GPU right now for Cyberpunk, and Nvidia DLSS will likely give me +25% frame rate (or more) with no discernible downside.

If AMD can match or exceed that in the future (and I'm optimistic after seeing what they did with FreeSync) then we can talk, but for now... these cards just aren't compelling to me. DLSS is absolutely critical to those trying to meet or exceed 120fps in the latest games.

I'm more surprised they didn't announce a direct 3070 competitor. Seems like the 6800 performance will be halfway between the 3070 and 3080.

I actively avoid it. I want as close to 144fps as possible with no dips below 60 ever. Raytracing is too intensive for that.

I have a 2080, and when Fortnite added DLSS and raytracing I turned both of them on. DLSS helps a lot. Raytracing frames are ok so long as nothing much is going on. As soon as a big battle along with building starts happening, the framerate tanks no matter which RTX quality is set.

I never said RT wasn't a valid topic. I'm not sure why you think I said it wasn't something worth discussing. I said the fact he proclaimed it was going to be poor with no evidence was my issue.

It was poor enough that AMD decided not to talk about it during their hardware unveiling even though it was the elephant in the room.

For those that missed it:

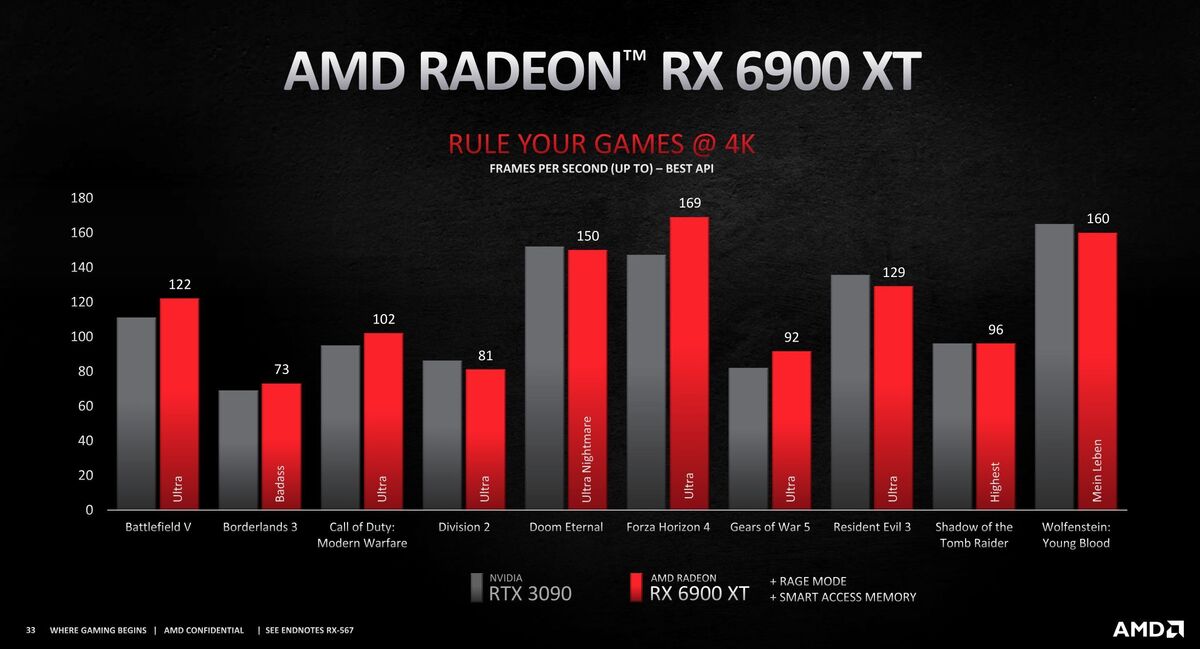

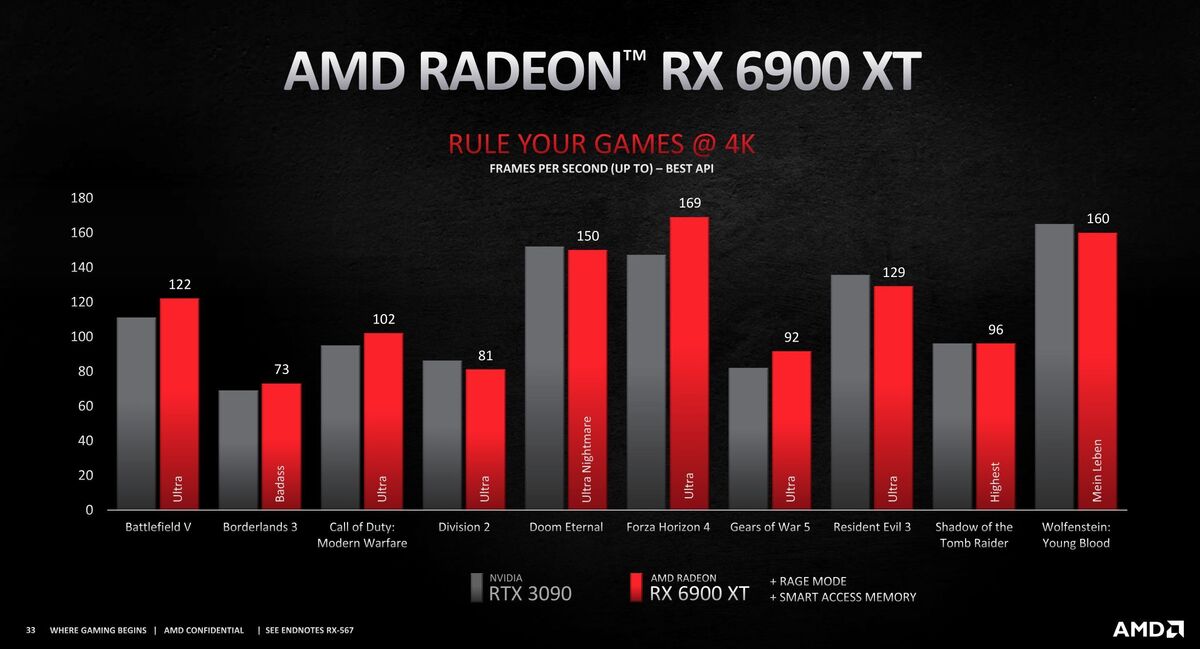

I predict with Rage Mode and SAM off, the 6900XT will be ~5% slower than the 3090. But the rumours are that the 6900XT overclocks really well. The folk that leaked most of the performance values and info for the 6000-series have been on the money so far, and the rumour that at least the 60-72 CU cards can clock up to 2400-2500MHz is real imo.

You can be sure as hell that it's coming.

AFAIK 16GB of RAM is completely useless at 1440p, so I don't know why AMD didn't go with 8 or 10GB for the 6800 and make it cheaper.

$580 price on 6800-16 hints that there will inevitably be 6800-8 at some $530 down the line.

They haven't announced it today because it would perform exactly the same as 6800-16 and this would put a big question mark over the need for 16GB RAM buffers for all of these cards. No point in destroying your competitive advantage with your own hand.

AIB models with >350W power limits and 3-slot coolers, sure.

and the 60-72 CU cards at least being able to clock up to 2400-2500MHz is real imo

Let's put it this way: "Don't jump the gun" and "Stop concern trolling" are two different conversations.

My overall issue with the post is valid, though I did jump the gun on calling it trolling after engaging further with the poster.

The latter is an accusation of breaking the site's TOS.

Why does every YouTuber use the same format for thumbnails?

They didn't show raytracing graphs, so probably that performance is less impressive. Didn't hear anything about a DLSS alternative, but I may have missed it.

Otherwise the XT appears to be at least on the same level as the 3080, while drawing a little bit less power and being somewhat cheaper. We'll see if independent testing confirms.

BFV does particularly well on RDNA2 for some reason. Looks like the XT is about +15% over the 3080.

I'll probably keep my 3080 order, because DLSS seems pretty good, and it's been over five weeks since I made the order and I am not keen on potentially hitting reset on that waiting period, especially when it should end this week. But RDNA2 does seem competitive, so that's good. Especially with Nvidia having such stock issues. Hopefully AMD will do better on that front.

Interesting.

Can't really base a purchase on "we're working on it", but yeah by bringing consoles onboard it seems to only be a matter of time before AMD catches up.

Certainly at least in support. Like, most console games will eventually be using it.

Because it works?

12GB would have a lower memory bus, it would need to be 8GB or 16GB to keep the memory bus the way it is...

Different layout with lower bandwidth for fewer CUs would be fine. Nvidia is getting away with offering 8 and 10GB for the products they're competing with.

I have an ASRock AB350 motherboard. Do I need to upgrade that in order to get one of these cards? PC stuff is still so confusing sometimes. Thanks in advance to anyone offering help.

$h!tty Thumbnails on Linus Tech Tips - Honest Answers Ep. 5

(tldr, it increases performance of the videos by a reasonable amount)

are you asking me or telling me?

Actually, it's pretty relevant that you would ask this question while post-quoting an image of Linus. He was a pioneer in the "schlocky YT thumbnail" trend.

Because they're annoying.

they get more clicks.

He's telling you. Many YouTubers have spoken on this. They all do it because the videos get more views. It is what it is.

It gets clicks for some reason.

The word "common" is doing some legwork.

I'd be worried if they weren't... but they really needed that tech to be ready for reveal today. DLSS is becoming increasingly common with the biggest triple A releases. I'm mainly buying a new GPU right now for Cyberpunk and Nvidia DLSS will likely give me +25% frame rate (or more) with no discernible downside.

It was poor enough that AMD decided not to talk about it during their hardware unveiling even though it was the elephant in the room.

They did talk about it for a significant section of the show. The issue is we weren't given performance numbers.

I'm expecting a lot of enthusiasm from Sony and MS on this subject since they'll definitely want that DLSS on their own consoles.

If they manage to make that feature universal and relatively easy to implement, it might just blow the NV DLSS away.

That'll be for a 48 or 50CU 12GB variant next year at $450 or $500.

AFAIK 16GB of RAM is completely useless at 1440p, so I don't know why AMD didn't go with 8 or 10GB for the 6800 and make it cheaper.

You're right, but I also put 50% of the blame on the consumer for this decision as well. The 5700XT came out at a good price, eventually solved its driver issues and now trades blows with the 2070 Super, but few people bought it. If average-income PC gamers aren't biting, AMD will inevitably shift to more premium tiers for the more discerning power users who'll pay through the nose for those frames per second. It's great for those guys and gals, but not so much for the lower-tier PC gamers living that covid struggle life lol. We gotta show AMD we actually want these products next time.

It certainly does that. As a consumer with money literally waiting to be spent on the best sub-£500 graphics card on offer, I'd far rather they released a product that was competitive instead. There's much bigger volume in the $500-and-below price bracket, but they've left it completely uncontested.

Did AMD say anything about HDMI 2.1 today? I didn't catch anything, but I might have missed it?

LOL it's so cringey isn't it?

Thumbnails are very much a science, and they spend a lot of time figuring out what drives clicks. Those kinds of designs do.

Linus just spices it up with some braindead clickbait titles for his videos.

So they aren't using compute for RT but have dedicated RT hardware called RT accelerators?

That's news to me then. I wonder how it performs vs. RT cores, but seeing as they didn't talk about it at all, I'm betting it doesn't perform as well, maybe on the same level as Turing or a bit worse.

No RTX benchmarks kinda says it all really.

These cards look great at pure raster, but no DLSS and no RT performance benchmarks means that Nvidia will be king there. For something like Cyberpunk that's going to matter.

The VRAM is a big deal though. 16GB vs 10 is substantial.

This right here is the reason - the only reason they are going for this much memory is to keep the bus fatttt

12GB would have a lower memory bus, it would need to be 8GB or 16GB to keep the memory bus the way it is...

It increases the power budget, according to Gamers Nexus.

I missed the start of the presentation, what is RAGE mode exactly?

Wait for AIBs.

Perennial PC gaming giants Fortnite and Minecraft both support DLSS now. The 2 biggest AAA releases this holiday (Cyberpunk 2077 and CoD Cold War) will both support it. Watch Dogs Legion will support it.

Yes, DLSS support is becoming increasingly common. Demand for it will only increase as more people experience it too.

No way anyone is releasing another 8GB card for more than $500 ever again. I expect AMD's 12GB 5700XT to be $500 max. I'm glad we're finally moving past the 8GB era. Feels a little like the end of the 4-core era.

You can be sure as hell that it's coming.

$580 price on 6800-16 hints that there will inevitably be 6800-8 at some $530 down the line.

They haven't announced it today because it would perform exactly the same as 6800-16 and this would put a big question mark over the need for 16GB RAM buffers for all of these cards. No point in destroying your competitive advantage with your own hand.

I missed the start of the presentation, what is RAGE mode exactly?

It's a stupid name imo, but it's basically like PBO for CPUs: one-click overclocking that automatically gets the most out of your particular GPU.

No way those would be base clocks; most of us who got pumped about those rumors took them as boost clocks.

This right here is the reason - the only reason they are going for this much memory is to keep the bus fatttt

You shouldn't need the same bandwidth if there are fewer CUs and you target a lower resolution. Costing $80 more than the competition and just $70 less than your other card basically makes the 6800 pointless.