The 3080/3090 cards aren't powerful enough for 4K at 60fps? O_o

They are, especially if you can use DLSS without compromising the IQ or sacrificing too much in the video settings.

IMO the 3080 is more a really good, high/ultra settings 1440p card.

The VRAM amounts are enticing, but those memory bus sizes do seem a tad on the small side. Hopefully AMD really puts the pedal to the metal on RDNA2. They really need to be competing with the RTX 3080 and not just the RTX 3070.

They need to be competing with the 3080 and the 3090. While the latter is more of a workstation card than a gaming GPU, especially if those recent leaks are a best-case scenario vs. the 3080, them not having a Pro Vega II equivalent with Big Navi for OEMs to adopt will result in them ceding a large chunk of the market to NVIDIA.

That's not true at all. At the level of a multiprocessor, Ampere's improvement in RT over Turing is considerably higher than the rest of Ampere's gains.

See my post earlier.

Sigh.

Makes no sense for AMD to compete against Microsoft for a directML model for upscaling.

A model (software) which is running on DML would "compete against MS" in the same way a game running on DX12 would compete with DX12.

I'm talking about actual performance differences in games benchmarked

This has zero meaning for RT h/w as these games aren't limited by that.

That video has all of its conclusions wrong.

The point is, in games tested, the difference in RT performance/difference in performance impact from RT between Turing and Ampere is very small.

I do not think that is the case at all. Ampere handles RT better than Turing.

The point is, in games tested, the difference in RT performance/difference in performance impact from RT between Turing and Ampere is very small.

Performance impact comes from additional shading which is required to incorporate any RT calculations.

Welp, I do say it's possible AMD has a model... but why? The purpose of using DX12U from a developer's perspective is to call the instructions and be hardware agnostic. You think they would like to have to train an AI on an AMD model and then a model on Nvidia and then Intel...? Makes much more sense that Microsoft gives a model.

It doesn't matter how you train the model for inferencing. You only need compatible and capable (in performance) h/w to run the latter; its vendor is irrelevant.

It would never be used. The game developers also would want the DirectML to be hardware agnostic

DML is h/w agnostic.

I've heard that AIB partners can only launch with Navi 22 products this year, while AMD will exclusively launch the Navi 21 products.

I do not think that is the case at all. Ampere handles RT better than Turing.

You merely have to look at the performance differential between the 2080 and 3080 in a fully rasterised game vs. a path traced one to see that.

Exactly, the only way to make this claim is to check a game that only uses raytracing.

https://www.eurogamer.net/articles/digitalfoundry-2020-nvidia-geforce-rtx-3080-review?page=6

We conclude our ray tracing performance analysis with one of the only fully path-traced games on the market: Quake 2 RTX. Path tracing is RT in its purest form, so it's no surprise to learn that it's more computationally expensive than supplementing traditional rasterised rendering with effects like ray traced shadows, reflections or global illumination. So while Quake 2 was released back in 1997, the path-traced version of the game is one of the hardest games on the market to run, even with dedicated RT hardware.

So how does the new card do? Well, the RTX 3080 manages just 65fps at 1440p, but the RTX 2080 Ti and 2080 fare even worse - with average frame-rates of 34 and 44fps, respectively. That means we're looking at a performance advantage of 47 per cent for the 3080 over the 2080 Ti, which shoots up to 94 per cent when we compare the 3080 against the 2080.
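As a rough check of the percentages in the quoted review, the uplift can be recomputed from the rounded frame-rates. This is only a sketch: `uplift_percent` is a hypothetical helper, and it assumes the 44fps figure belongs to the 2080 Ti and the 34fps figure to the 2080 (the faster card getting the higher number). With rounded inputs the 2080 comparison lands nearer 91 per cent than the quoted 94, presumably because the article worked from unrounded averages.

```python
def uplift_percent(new_fps: float, old_fps: float) -> float:
    """Return how much faster new_fps is than old_fps, in per cent."""
    return (new_fps / old_fps - 1.0) * 100.0


# Rounded Quake 2 RTX averages at 1440p, as quoted from the review.
RTX_3080_FPS = 65.0
RTX_2080_TI_FPS = 44.0  # assumption: the higher figure is the 2080 Ti
RTX_2080_FPS = 34.0     # assumption: the lower figure is the 2080

if __name__ == "__main__":
    print(f"3080 vs 2080 Ti: {uplift_percent(RTX_3080_FPS, RTX_2080_TI_FPS):.1f}%")
    print(f"3080 vs 2080:    {uplift_percent(RTX_3080_FPS, RTX_2080_FPS):.1f}%")
```

The gap between those two numbers and the uplift in a purely rasterised title is the comparison being argued about in this thread.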

Say you spend 80 seconds of a GPU's rendering time on a frame for the rasterization part & 20 seconds for the RT part. What happens when you double RT performance? Well, you spend 10 seconds instead of 20 seconds on it.

RT (BVH testing) runs in parallel to shading even on Turing so it's even less than that.
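The 80/20 example is an Amdahl's-law style argument, and it can be sketched in a few lines. The function names here are hypothetical, and the model assumes the raster and RT portions run strictly one after the other; as the reply points out, BVH testing overlaps with shading, so the real gain would be smaller still.

```python
def frame_time(raster_time: float, rt_time: float, rt_speedup: float) -> float:
    """Frame time when only the RT portion gets faster (serial model)."""
    return raster_time + rt_time / rt_speedup


def overall_speedup(raster_time: float, rt_time: float, rt_speedup: float) -> float:
    """Whole-frame speedup that results from speeding up only the RT portion."""
    before = raster_time + rt_time
    after = frame_time(raster_time, rt_time, rt_speedup)
    return before / after


if __name__ == "__main__":
    # 80 units of raster work, 20 units of RT work, RT hardware twice as fast.
    after = frame_time(80.0, 20.0, 2.0)
    gain = overall_speedup(80.0, 20.0, 2.0)
    print(f"frame time: 100.0 -> {after:.1f}")
    print(f"overall speedup: {gain:.3f}x")
```

Under these assumptions, doubling RT throughput shortens the frame from 100 to 90 units, roughly an 11 per cent overall gain, which is why a large per-SM RT improvement can look small in hybrid games.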

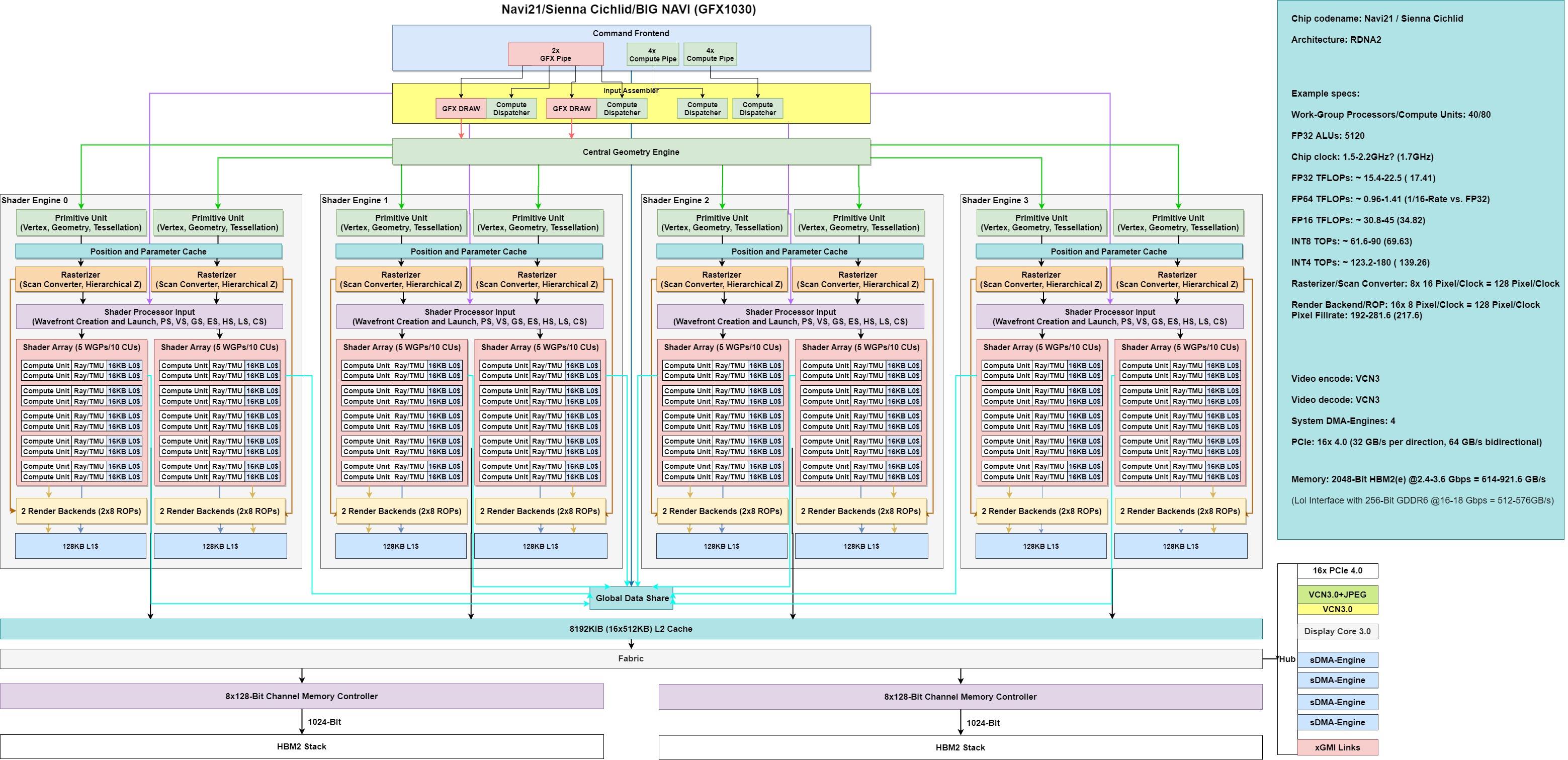

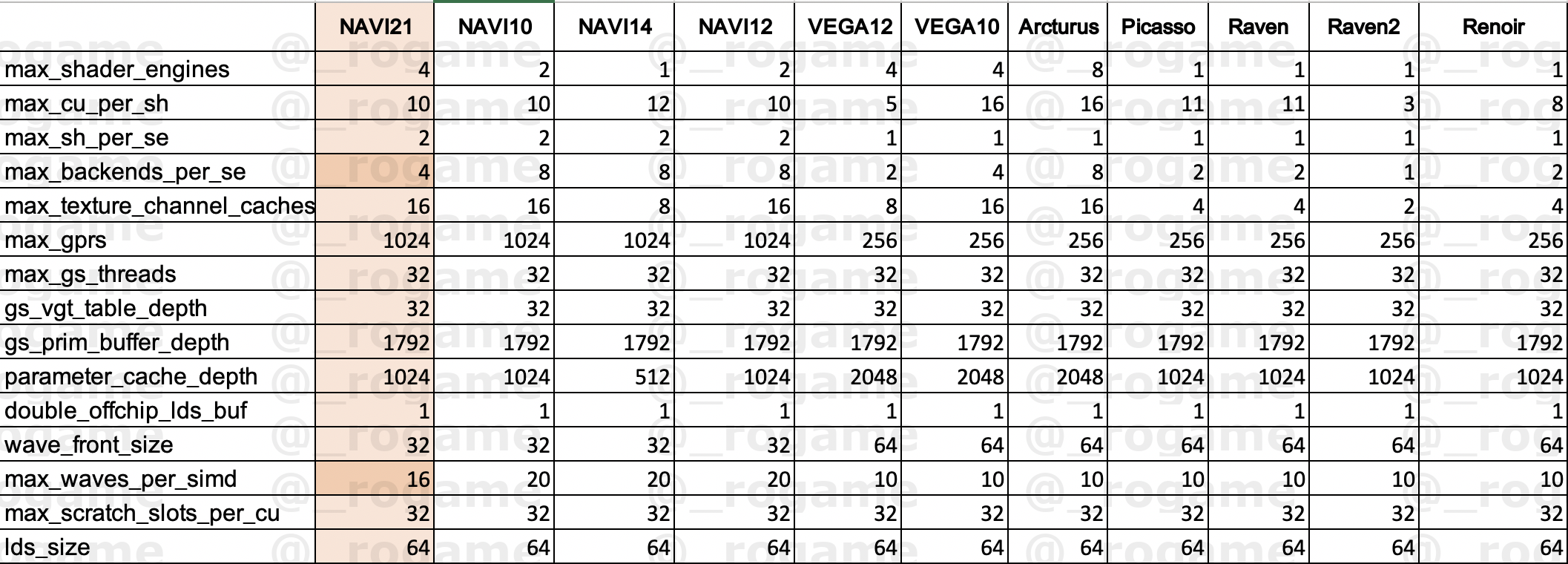

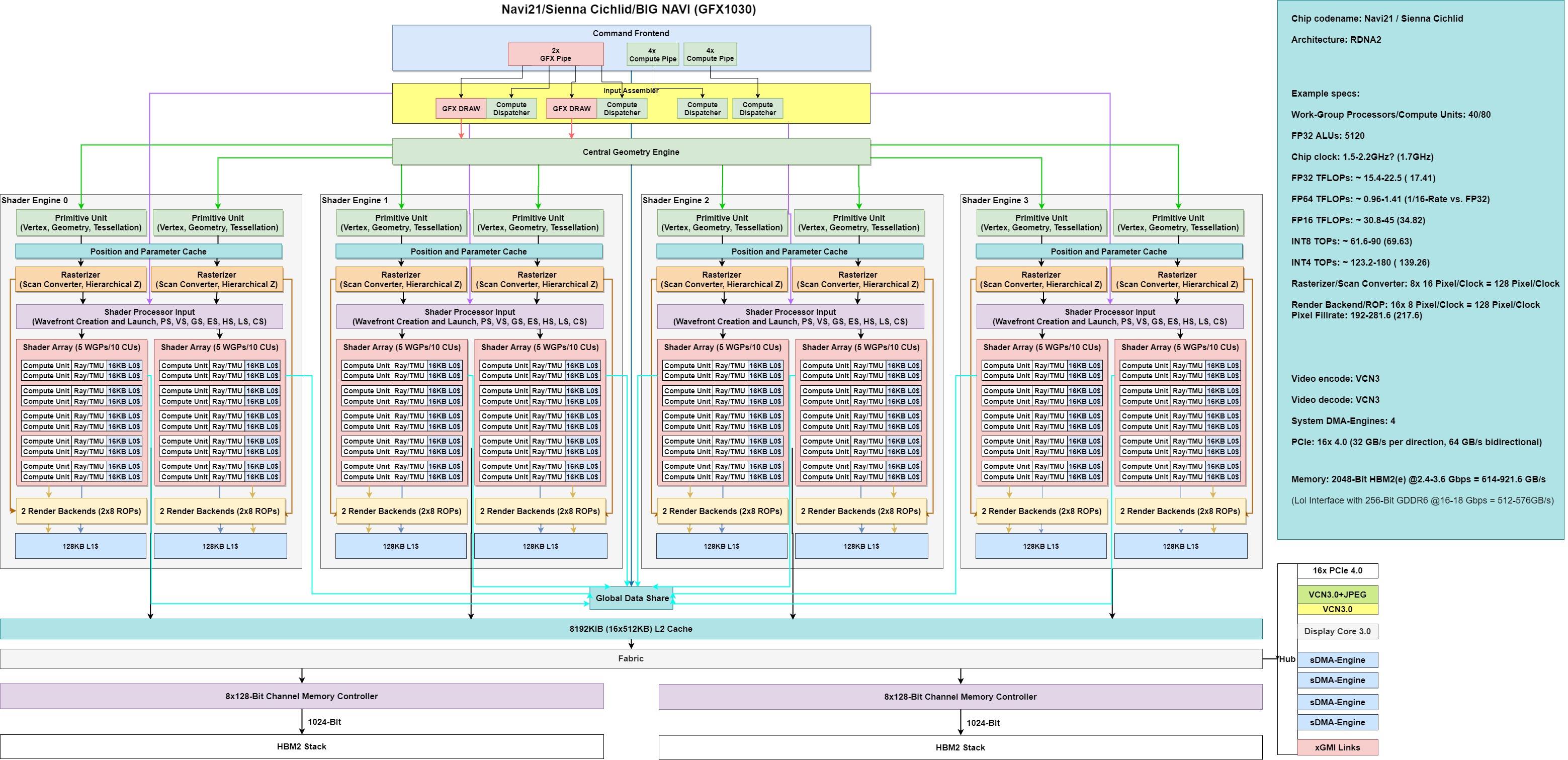

In terms of specifications, Navi 21 is Big Navi, which integrates up to 80 CUs, which is 2560 stream processors, paired with a 256-bit memory bus, and is expected to be named the RX 6900 series.

Isn't the 5700xt at 2070 Super performance?

I would like to see some more tests then. 10% difference in HU tests of actual relevant games is small.

Go look at the work Richard did for the RTX 3080 Review and compare its percentage uptick in a pure path traced game vs. a purely rasterised one. SM for SM, Ampere is quite a bit faster at doing RT workloads.

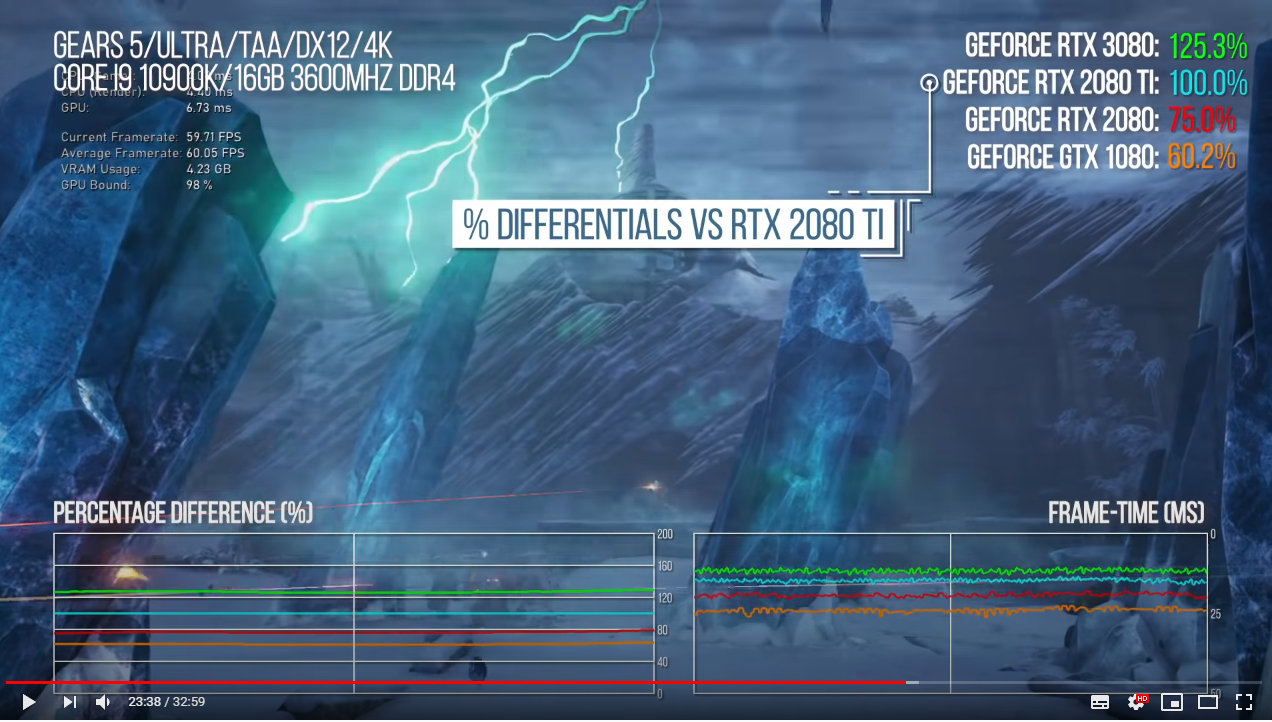

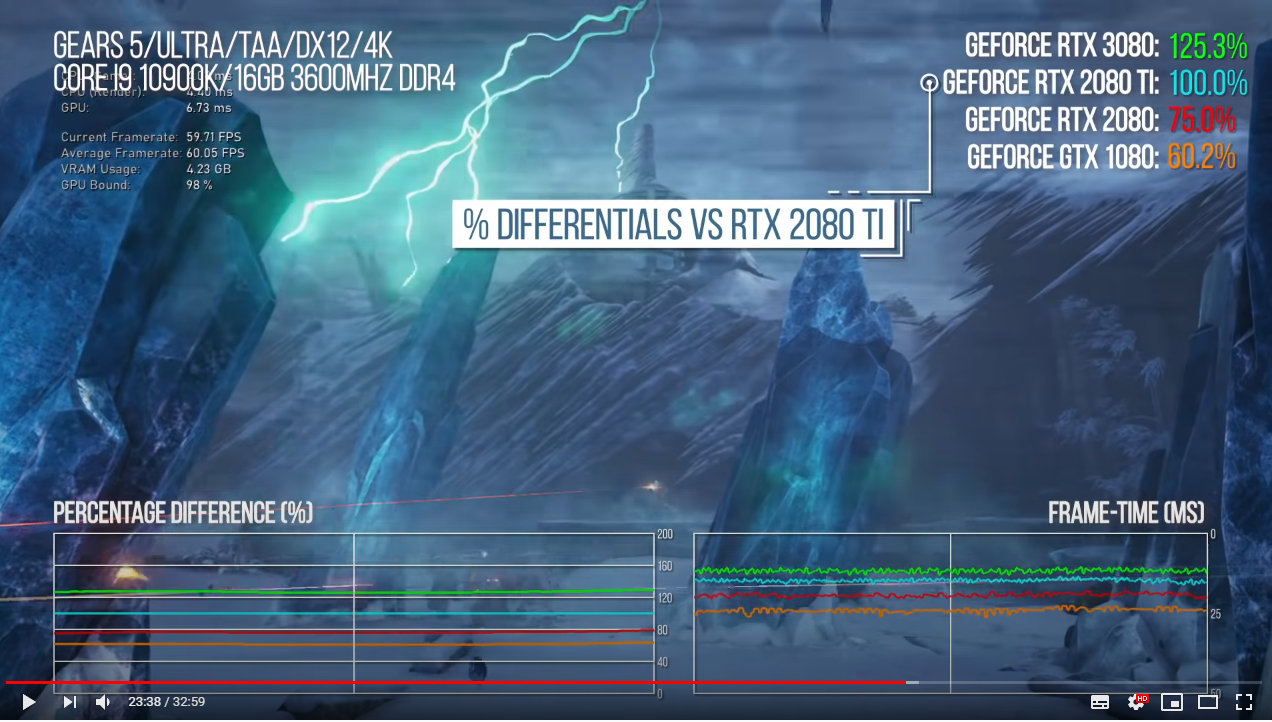

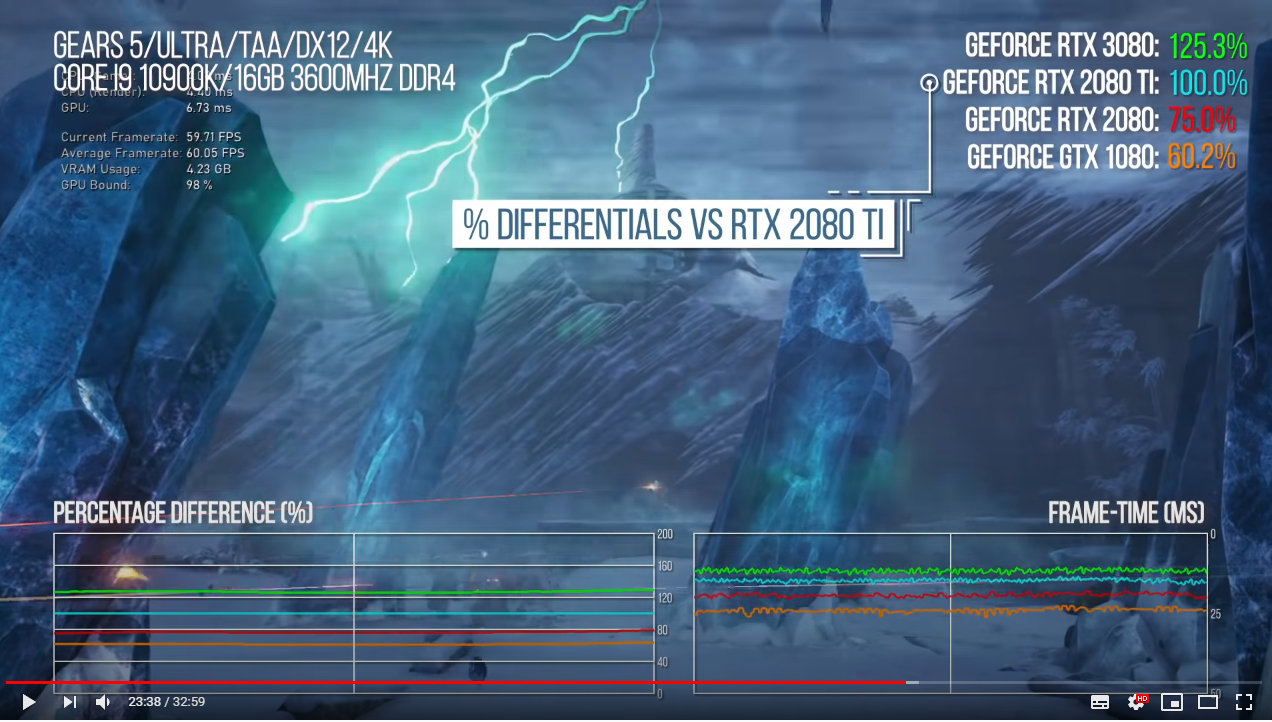

Fully rasterised/compute game:

Fully path traced/ray traced game:

I am still so freaking confused when it comes to Navi 21 specs.

Is it 80 CUs and 96 ROPs?

Or 80 CUs and 64 ROPs?

Or not even 80 CUs?

It's possible that the difference in ROPs comes down to the different Navi 21 models, i.e. the RX 6900 and 6900 XT.

Thank you!

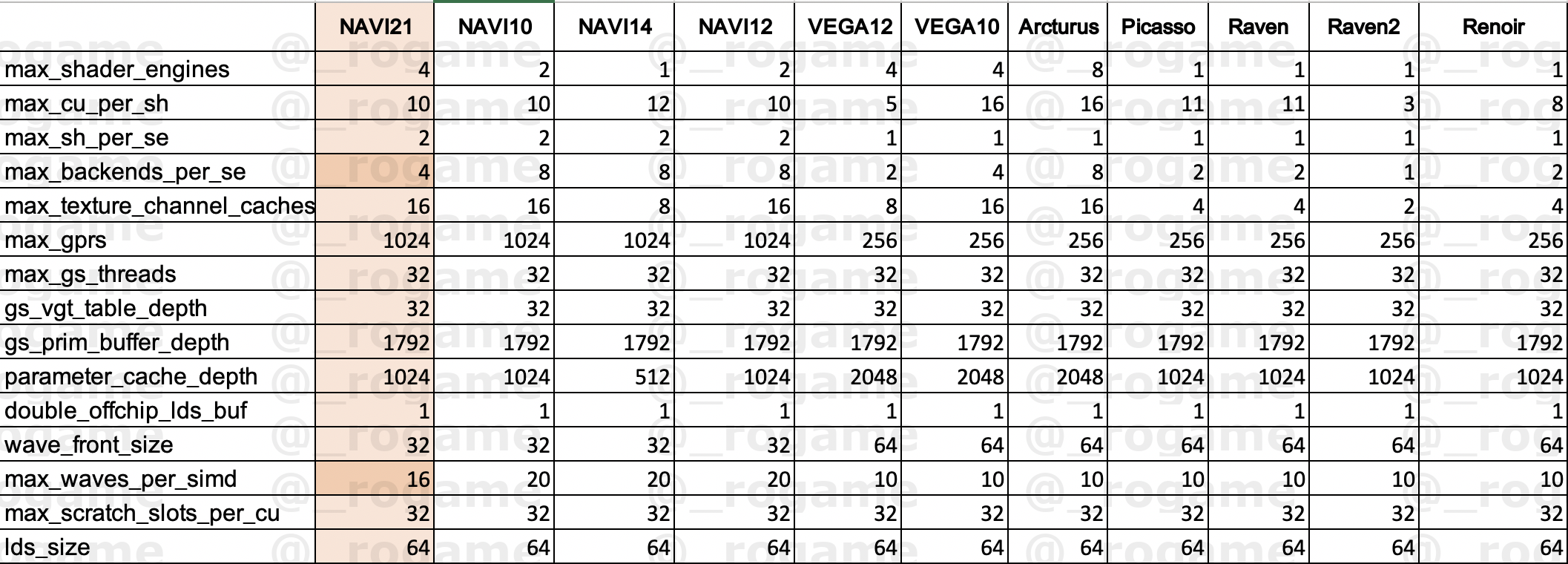

According to the firmware data from AMD, N21 has 80 CUs which would be 5120 "shader cores".

https://twitter.com/_rogame/status/1289239501647171584

The amount of ROPs is even, 4 Render Backends per Shader-Engine, so either 64 or 128 ROPs.

It's likely that AMD changed the amount of ROPs per RB and is going with 128 ROPs.
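The CU and ROP arithmetic in this post can be written out as a short sketch. Several inputs are assumptions rather than anything confirmed in the thread: 64 shader cores per CU (the RDNA compute unit width), 4 shader engines (implied by 64 ROPs at 4 render backends per engine, but not stated), and either 4 or 8 ROPs per render backend. The function names are hypothetical.

```python
SHADER_CORES_PER_CU = 64  # assumption: RDNA-style compute unit width


def shader_cores(compute_units: int) -> int:
    """Total shader cores for a given CU count."""
    return compute_units * SHADER_CORES_PER_CU


def rop_count(shader_engines: int, rbs_per_engine: int, rops_per_rb: int) -> int:
    """Total ROPs from the render backend layout."""
    return shader_engines * rbs_per_engine * rops_per_rb


if __name__ == "__main__":
    print("80 CUs ->", shader_cores(80), "shader cores")
    # Assumption: 4 shader engines with 4 render backends each.
    for rops_per_rb in (4, 8):
        total = rop_count(shader_engines=4, rbs_per_engine=4, rops_per_rb=rops_per_rb)
        print(f"{rops_per_rb} ROPs per RB -> {total} ROPs")
```

With those assumptions an even layout gives 64 or 128 ROPs, and a 96 ROP figure would not fit unless the render backends were split unevenly.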

I drew N21 with 2048-Bit HBM2(e), which fits to the amount of L2$ tiles (16 texture channel caches for 16 memory channels).

(On a side note, Arcturus doesn't make a lot of sense with 16)

Otherwise it would be a 256-Bit GDDR6-Interface, where rumours from RedGamingTech have it that "128MB Infinity Cache" would balance it out, which I'm not inclined to believe.

The good thing for those of us that will stay in the midrange is that we at least know that AMD puts out good competition there. I'm quite confident that the waiting game will benefit me since competition may bring down the 3060 price, or put something better for the same price-range so I'll just wait. Excited anyway though

If the Navi 22 still has 40 CUs, how much of an improvement will it really have over the 5700xt?

9th November - Media Reviews

11th November - Card Launch

Dates can change of course

What are we expecting in terms of performance for Navi 21? Between the 3070 and 80?

I doubt that AMD will do any better with launch really.

Yeah, the 5700xt was a midrange part, and that had really rough availability

Didn't Nvidia say they started mass producing the 3080/3090 in August? If AMD already started making the cards and it comes out mid-ish November, they will probably have a much larger stock. Not only that, with AMD being on a different chip process, it should not be fighting for any resources with Nvidia.

Yeah, it will fight for resources with Apple, other AMD products (CPUs) and the rest of the industry instead. NV is pretty much alone as a big customer of Samsung's 8nm process.

There are indications that Navi 21 will be in very scarce supply this year, one of them being an apparent lack of AIB cards until 2021.

Also, if AMD had started production already, they would've definitely launched it against Ampere, in any quantities. Having even a dozen cards on the market is better if it's able to compete - people would at least know this and hold off on purchasing 3080s.

Not sure, they do seem confident when it does eventually release:

I don't think you should take the word of someone inside AMD lol

Also, while Nvidia's launch was a mess, it wasn't a paper launch. Just too much demand.

I do not think AMD's launch will be a paper one, but the same thing will happen.